Cache Organization | Set 1 (Introduction)

Last Updated: 14 May, 2023

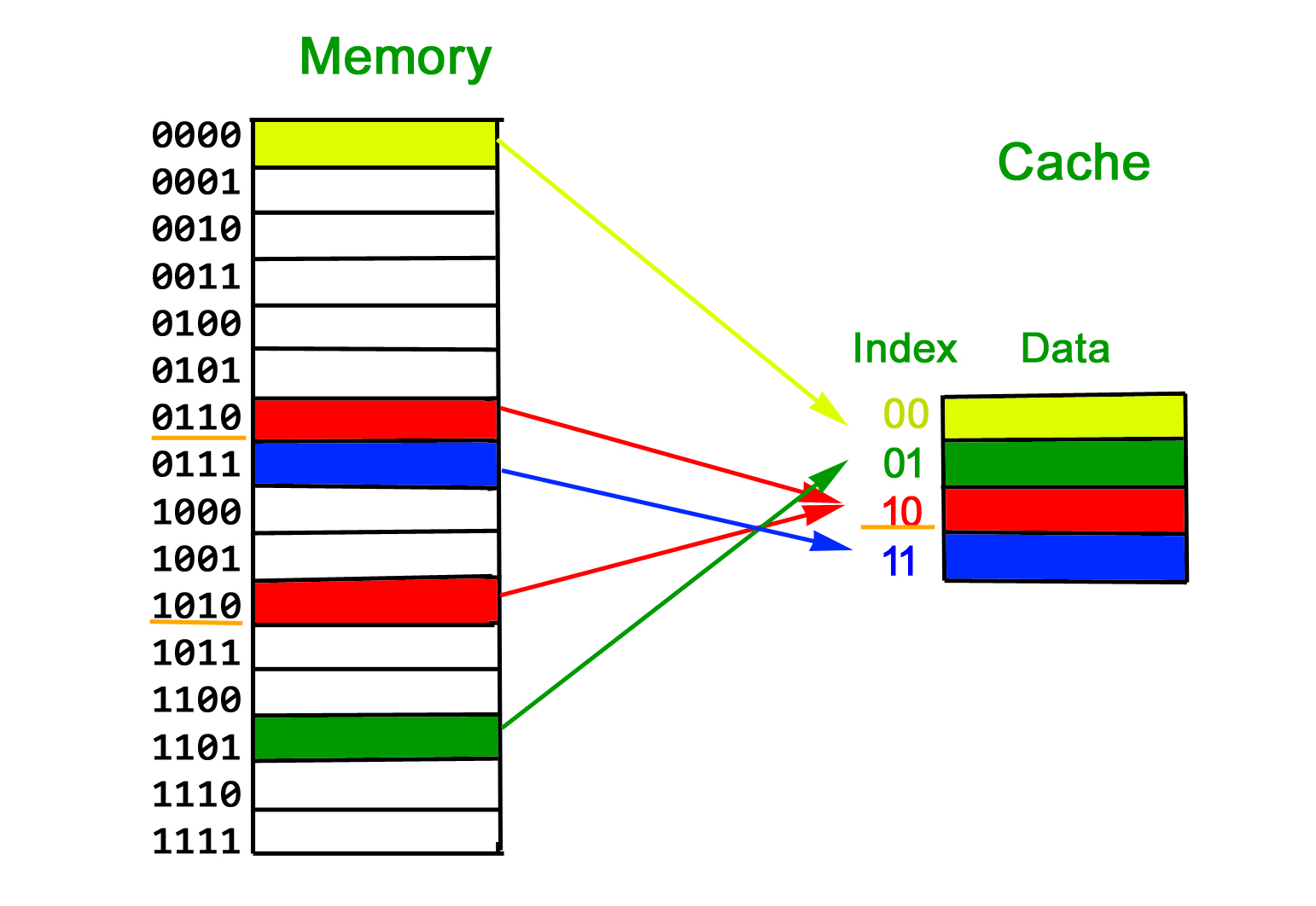

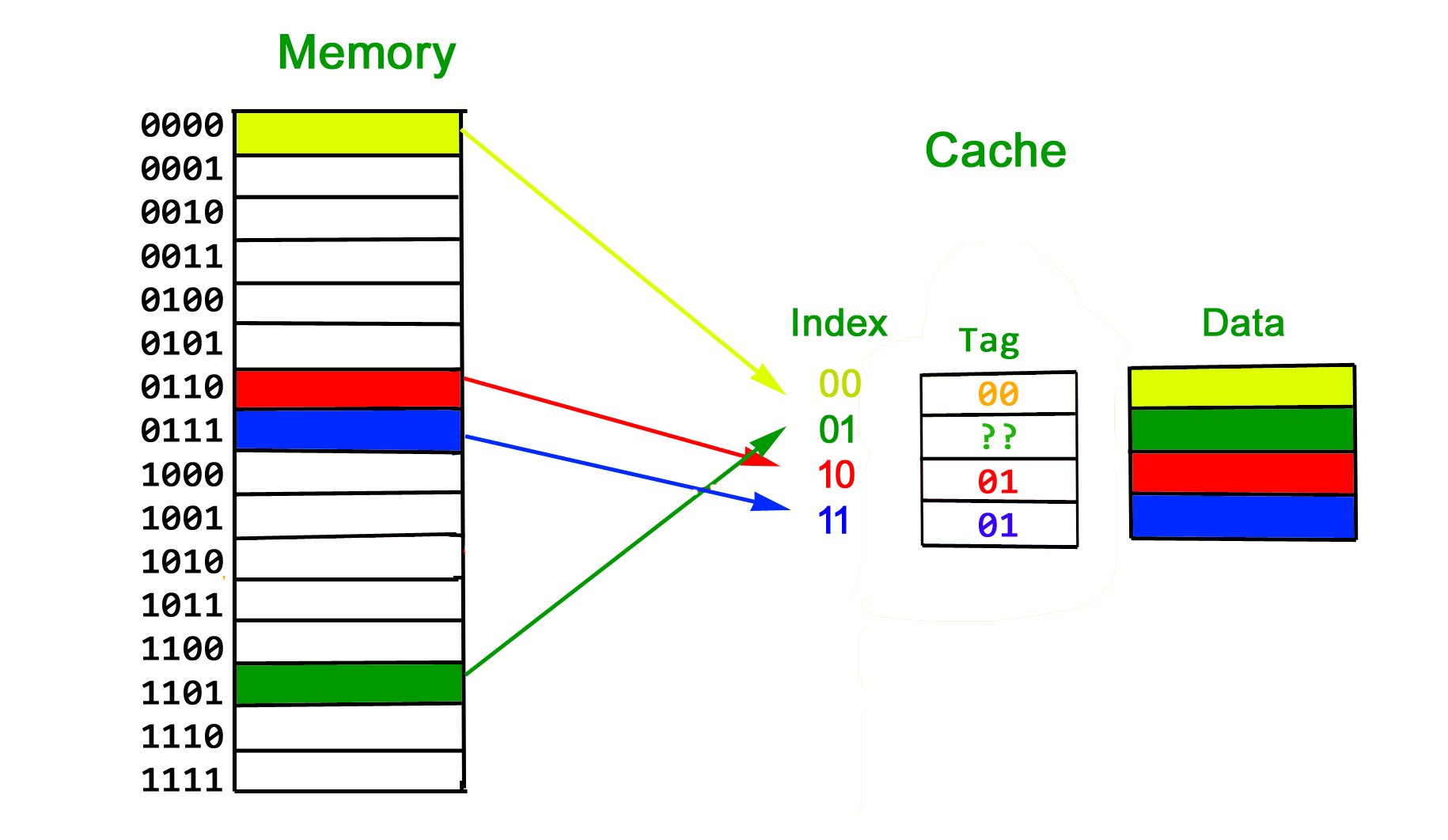

A cache sits close to the CPU and is faster than main memory, but it is also much smaller. Cache organization is about mapping data in memory to a location in the cache.

A Simple Solution:

One way to do this mapping is to use the last few bits of the long memory address as the small cache address, and to place the data at that cache address.

Problem With the Simple Solution:

This approach discards the high-order bits. Many memory addresses share the same low-order bits, so there is no way to tell which memory address the data at a given cache location came from.

Solution is Tag:

To handle this problem, the cache stores extra information alongside the data to identify which block of memory it currently holds. This extra information is called the tag, and it consists of the high-order address bits that were not used to pick the cache location.
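The index and tag idea can be sketched in a few lines of Python. This is only an illustration under assumed parameters: a 4-entry cache (2 index bits), and the hypothetical helper names `split_address`, `store` and `lookup`, none of which come from the article.

```python
INDEX_BITS = 2  # assumption: a tiny cache with 2**2 = 4 locations

def split_address(address, index_bits=INDEX_BITS):
    """Split an address into (tag, index); the low-order bits are the index."""
    index = address & ((1 << index_bits) - 1)  # low-order bits pick the cache location
    tag = address >> index_bits                # high-order bits say which address it is
    return tag, index

# Each cache location holds a (tag, data) pair; None means the location is empty.
cache = [None] * (1 << INDEX_BITS)

def store(address, data):
    tag, index = split_address(address)
    cache[index] = (tag, data)

def lookup(address):
    tag, index = split_address(address)
    entry = cache[index]
    if entry is not None and entry[0] == tag:
        return entry[1]  # tag matches: this really is the requested address
    return None          # empty location or different tag: a miss

store(0b0110, "value at 6")
print(split_address(0b0110))  # (1, 2)
print(lookup(0b0110))         # value at 6
print(lookup(0b1110))         # None (same index 2, but tag 3 instead of 1)
```

Without the stored tag, the lookup for address 14 (binary 1110) would wrongly return the data belonging to address 6, since both end in the bits 10.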

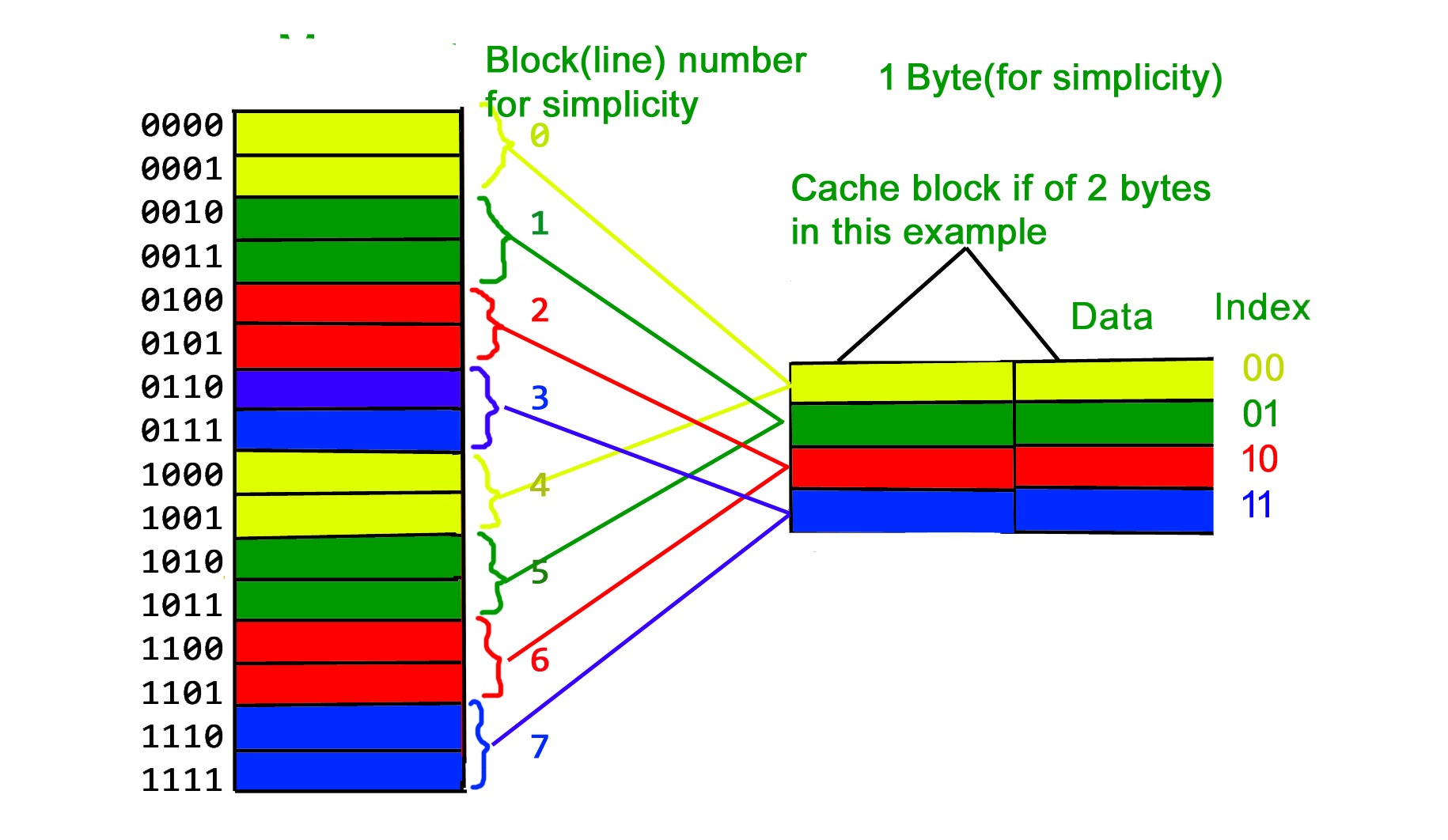

What is a Cache Block?

Programs exhibit spatial locality: once a location is accessed, nearby locations are very likely to be accessed in the near future. A cache is therefore organized in blocks, so that a miss brings in a whole block of neighbouring bytes rather than a single one. Typical cache block sizes are 32 bytes or 64 bytes.
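With blocks, an address is usually viewed as three fields: a byte offset inside the block, an index that picks the cache location, and a tag. The sketch below assumes 64-byte blocks (one of the typical sizes above) and a hypothetical cache of 256 blocks; the name `decompose` is made up for this illustration.

```python
BLOCK_SIZE = 64   # bytes per block
NUM_BLOCKS = 256  # assumption: number of blocks in this hypothetical cache

OFFSET_BITS = BLOCK_SIZE.bit_length() - 1  # log2(64) = 6
INDEX_BITS = NUM_BLOCKS.bit_length() - 1   # log2(256) = 8

def decompose(address):
    """Return (tag, index, offset) for a byte address."""
    offset = address & (BLOCK_SIZE - 1)                  # byte position inside the block
    index = (address >> OFFSET_BITS) & (NUM_BLOCKS - 1)  # which cache location
    tag = address >> (OFFSET_BITS + INDEX_BITS)          # remaining high-order bits
    return tag, index, offset

print(decompose(0x12345))  # (4, 141, 5)
print(decompose(0x12346))  # (4, 141, 6)
```

The two neighbouring addresses differ only in the offset, so they live in the same block. Once one of them has been fetched, an access to the other finds the block already in the cache, which is how blocks exploit spatial locality.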

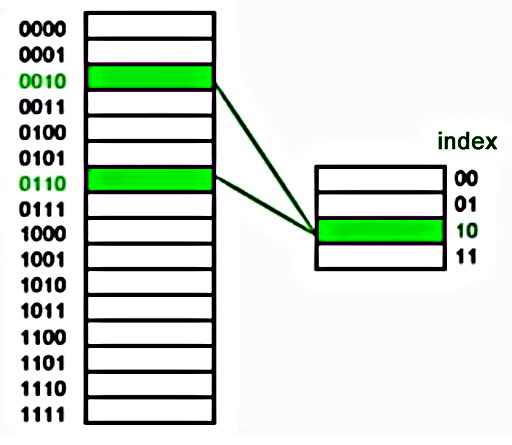

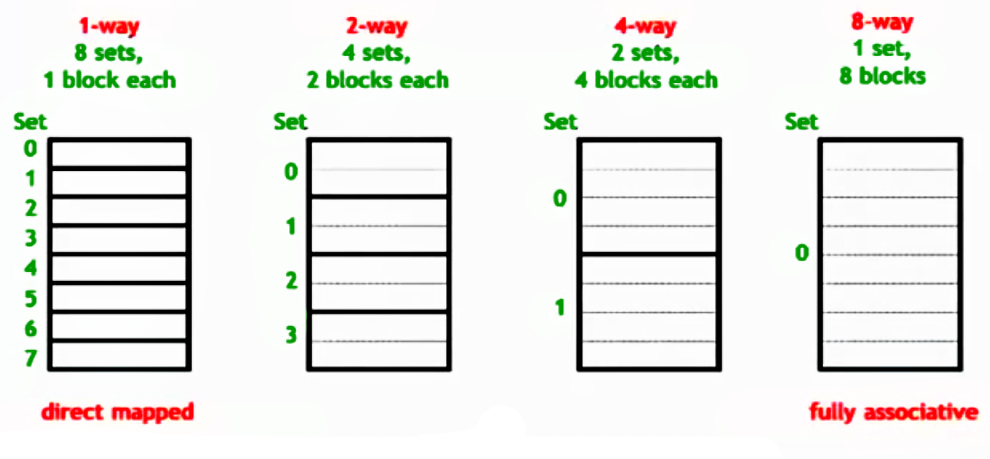

The above arrangement is Direct Mapped Cache and it has the following problem:

As discussed above, the last few bits of the memory address select the cache location, and the remaining bits are stored as the tag. Now imagine a very small cache with 2-bit addresses, where the last two bits of the main memory address decide the cache location (as shown in the diagram). Addresses 2 (binary 010) and 6 (binary 110) both end in 10, so they have to be stored in the same cache location. If a program accesses 2, 6, 2, 6, 2, …, every access causes a miss, because each access evicts the entry that the next access needs.
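A small simulation makes the conflict concrete. This is a minimal sketch that assumes one address per cache location (no multi-byte blocks) and a 4-entry direct mapped cache indexed by the last two address bits; `simulate_direct_mapped` is a hypothetical name.

```python
def simulate_direct_mapped(accesses, index_bits=2):
    """Return 'hit' or 'miss' for each access to a direct mapped cache."""
    num_lines = 1 << index_bits
    tags = [None] * num_lines  # tag stored at each cache location (None = empty)
    results = []
    for address in accesses:
        index = address & (num_lines - 1)  # last index_bits bits of the address
        tag = address >> index_bits        # remaining high-order bits
        if tags[index] == tag:
            results.append("hit")
        else:
            results.append("miss")
            tags[index] = tag              # replace whatever was stored there
    return results

print(simulate_direct_mapped([2, 6, 2, 6, 2]))
# ['miss', 'miss', 'miss', 'miss', 'miss']
print(simulate_direct_mapped([2, 7, 2, 7, 2]))
# ['miss', 'miss', 'hit', 'hit', 'hit']
```

Addresses 2 and 6 keep evicting each other even though three of the four cache locations stay empty. Addresses 2 and 7 map to different locations, so only their first accesses miss.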

Solution to the above problem – Associativity:

What if data could be stored at any place in the cache? The problem above would disappear, but every lookup would then have to search the whole cache, which would slow it down. So we do something in between: each memory block may be placed in any one of a small, fixed number of cache locations.
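The "in between" option can be sketched as a set-associative cache: the low-order bits pick a set, and the block may sit in any of the ways of that set. The code below is an illustration only. It assumes one address per block, the same total capacity of 4 entries arranged as 2 sets of 2 ways, and least-recently-used (LRU) replacement, which is one common policy and is not specified in the article. The name `simulate_set_associative` is hypothetical.

```python
def simulate_set_associative(accesses, num_sets=2, ways=2):
    """Return 'hit' or 'miss' per access, using LRU replacement within each set."""
    # Each set lists its stored tags, ordered from least to most recently used.
    sets = [[] for _ in range(num_sets)]
    results = []
    for address in accesses:
        index = address % num_sets  # low-order part of the address selects the set
        tag = address // num_sets   # the rest of the address is the tag
        lines = sets[index]
        if tag in lines:
            results.append("hit")
            lines.remove(tag)       # re-appended below as most recently used
        else:
            results.append("miss")
            if len(lines) == ways:
                lines.pop(0)        # set is full: evict the least recently used tag
        lines.append(tag)
    return results

print(simulate_set_associative([2, 6, 2, 6, 2]))
# ['miss', 'miss', 'hit', 'hit', 'hit']
print(simulate_set_associative([2, 6, 2, 6, 2], num_sets=4, ways=1))
# ['miss', 'miss', 'miss', 'miss', 'miss']
```

With two ways per set, addresses 2 and 6 can live side by side, so only the first two accesses miss. Setting `ways=1` turns the same code into a direct mapped cache and brings the conflict back.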

Source: https://www.youtube.com/watch?v=sg4CmZ-p8rU

We will soon be discussing more details of cache organization. This article is contributed by Ankur Gupta.

Advantages and disadvantages of different cache organization techniques:

Direct-Mapped Cache:

Advantages:

- Simple and easy to implement

- Low hardware overhead

- Fast hit time

Disadvantages:

- Higher miss rate, because each memory block has only one possible cache location

- More conflict misses, because blocks that map to the same location evict each other

- Limited flexibility in terms of block placement

Set-Associative Cache:

Advantages:

- Higher hit rate than a direct-mapped cache, because each set can hold more than one block

- More flexible block placement than a direct-mapped cache

- Fewer conflict misses than a direct-mapped cache

Disadvantages:

- Higher hardware overhead than direct-mapped cache

- Longer hit time than a direct-mapped cache, because multiple blocks in a set must be searched

- Limited scalability due to the fixed number of ways per set

Fully-Associative Cache:

Advantages:

- Typically the highest hit rate among the cache organizations for a given cache size

- Most flexible block placement

- No conflict misses, because a block can be placed anywhere in the cache

Disadvantages:

- Highest hardware overhead among the cache organizations

- Longest hit time, because the tags of all blocks in the cache must be searched

- Limited scalability because of limited physical area and large tag storage requirements
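The hardware overhead in the lists above can be made roughly quantitative by counting tag bits and tag comparisons. The sketch below assumes 32-bit addresses, 64-byte blocks, and power-of-two sizes, and it ignores valid bits and replacement state. The function name `organization_cost` and the 512-block example are hypothetical.

```python
def organization_cost(num_blocks, ways, address_bits=32, block_size=64):
    """Estimate tag storage and comparisons per lookup (power-of-two sizes assumed)."""
    offset_bits = block_size.bit_length() - 1  # log2(block_size)
    num_sets = num_blocks // ways
    index_bits = num_sets.bit_length() - 1     # log2(num_sets); 0 when there is one set
    tag_bits = address_bits - index_bits - offset_bits
    return {
        "sets": num_sets,
        "tag_bits_per_block": tag_bits,
        "total_tag_bits": tag_bits * num_blocks,
        "tags_compared_per_lookup": ways,      # every way in the selected set is checked
    }

# A 512-block (32 KiB) cache organized three different ways.
for name, ways in [("direct-mapped", 1),
                   ("4-way set-associative", 4),
                   ("fully-associative", 512)]:
    print(name, organization_cost(num_blocks=512, ways=ways))
```

Under these assumptions the tag grows from 17 bits per block (direct-mapped) to 19 bits (4-way) to 26 bits (fully-associative), and the number of tags compared on each lookup grows from 1 to 4 to 512. That is the trade-off behind the overhead and hit-time entries above.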
