Multilevel Cache Organisation

Last Updated: 12 May, 2023

Cache is a random access memory used by the CPU to reduce the average time taken to access memory.

Multilevel caching is one of the techniques used to improve cache performance by reducing the “miss penalty”. The miss penalty is the extra time required to bring data into the cache from main memory whenever there is a “miss” in the cache.

To understand this clearly, consider an example in which the CPU needs 10 memory references to access the desired information, and look at this scenario in the following 3 cases of system design:

Case 1: System Design without Cache Memory

Here the CPU directly communicates with the main memory and no caches are involved.

In this case, the CPU needs to access the main memory 10 times to access the desired information.

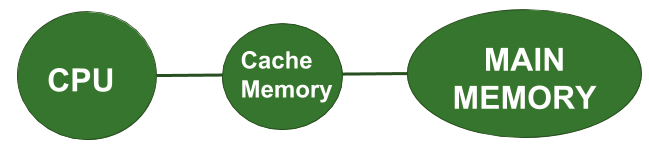

Case 2: System Design with Cache Memory

Here the CPU first checks whether the desired data is present in the cache memory, i.e. whether there is a “hit” or a “miss” in the cache. Suppose there are 3 misses in the cache; then main memory is accessed only 3 times. The time spent waiting for main memory is reduced because main memory is accessed fewer times than in the previous case.

Case 3: System Design with Multilevel Cache Memory

Here cache performance is improved further by introducing multiple levels of cache. As shown in the figure above, we consider a 2-level cache design. Suppose there are 3 misses in the L1 cache and, of these 3 misses, 2 also miss in the L2 cache; then main memory is accessed only 2 times. Because one of the L1 misses is now served by the faster L2 cache instead of main memory, the miss penalty is reduced considerably compared with the previous case, which improves the performance of the cache memory.
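The three cases can be reproduced with a small simulation. This is a minimal sketch under simplifying assumptions, not a model of any real processor: each cache is fully associative with LRU replacement, capacity is counted in blocks, every reference is a read, and a block fetched from a lower level is copied into every faster level. The class `LRUCache`, the function `simulate` and the 10-reference trace are hypothetical, with the trace chosen so that the counts match the example (3 misses in L1, 2 of which also miss in L2).

```python
from collections import OrderedDict


class LRUCache:
    """Hypothetical fully associative cache of block numbers with LRU replacement."""

    def __init__(self, capacity):
        self.capacity = capacity  # number of blocks the cache can hold
        self.blocks = OrderedDict()  # oldest (least recently used) block first

    def lookup(self, block):
        """Return True on a hit and mark the block as most recently used."""
        if block in self.blocks:
            self.blocks.move_to_end(block)
            return True
        return False

    def fill(self, block):
        """Bring a block in, evicting the least recently used block if full."""
        self.blocks[block] = True
        self.blocks.move_to_end(block)
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)


def simulate(trace, levels):
    """Search L1, then L2, ..., then main memory for every reference.

    Returns (misses per cache level, number of main memory accesses).
    """
    misses = [0] * len(levels)
    memory_accesses = 0
    for block in trace:
        for i, cache in enumerate(levels):
            if cache.lookup(block):
                # Hit at level i: copy the block into the faster levels that missed.
                for upper in levels[:i]:
                    upper.fill(block)
                break
            misses[i] += 1
        else:
            # Missed in every level (or there is no cache at all): go to main memory.
            memory_accesses += 1
            for cache in levels:
                cache.fill(block)
    return misses, memory_accesses


# Hypothetical trace: 10 memory references to two blocks.
trace = [0, 0, 0, 0, 1, 1, 1, 1, 0, 0]

print(simulate(trace, []))                          # Case 1: ([], 10)
print(simulate(trace, [LRUCache(1)]))               # Case 2: ([3], 3)
print(simulate(trace, [LRUCache(1), LRUCache(2)]))  # Case 3: ([3, 2], 2)
```

In Case 3 the one-block L1 behaves exactly like the single cache of Case 2, so it still misses 3 times. The difference is that the third miss (block 0, evicted from L1 but still held in the two-block L2) is served by L2, leaving only 2 main memory accesses.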

NOTE:

We can observe from the above 3 cases that we are trying to decrease the number of main memory references, and thus the miss penalty, in order to improve overall system performance. It is also important to note that in a multilevel cache design, the L1 cache is attached to the CPU and is small but fast. The L2 cache, in turn, is attached to the primary (L1) cache; it is larger and slower than L1 but still faster than main memory.

Effective Access Time = Hit rate * Cache access time + Miss rate * Lower level access time
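As a rough illustration, the formula can be applied to Cases 1 and 2 above. This sketch assumes hypothetical timings of 1 ns for the cache and 100 ns for main memory (these numbers are not from the article) and uses the Case 2 hit rate of 7 out of 10 references; the function name `effective_access_time` is also hypothetical. Like the formula, it charges a miss only the lower-level access time.

```python
def effective_access_time(hit_rate, cache_time, lower_time):
    """Hit rate * Cache access time + Miss rate * Lower level access time."""
    miss_rate = 1 - hit_rate
    return hit_rate * cache_time + miss_rate * lower_time


# Hypothetical timings, not taken from the article.
CACHE_NS = 1.0
MEMORY_NS = 100.0

# Case 1: no cache, so every reference pays the main memory time.
no_cache = MEMORY_NS
# Case 2: 3 misses out of 10 references, so the hit rate is 7/10.
with_cache = effective_access_time(7 / 10, CACHE_NS, MEMORY_NS)

print(f"No cache:   {no_cache:.1f} ns")
print(f"With cache: {with_cache:.1f} ns")
```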

Average access time for a multilevel cache (Tavg):

Tavg = H1 * C1 + (1 - H1) * (H2 * C2 + (1 - H2) * M)

where

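H1 is the hit rate of the L1 cache.

H2 is the hit rate of the L2 cache, i.e. the fraction of L1 misses that are found in L2.

C1 is the time to access information in the L1 cache.

C2 is the miss penalty to transfer information from the L2 cache to the L1 cache, i.e. the time taken to service an L1 miss that hits in L2.

M is the miss penalty to transfer information from main memory to the caches, i.e. the time taken to service an access that misses in both L1 and L2.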
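The same idea extends level by level: the "lower level access time" seen by L1 is itself the average access time of L2. The sketch below computes Tavg that way for any number of levels; with two levels it expands to exactly the formula above. It assumes each hit rate is a local hit rate (for L2, the fraction of L1 misses that hit in L2). The timings C1 = 1 ns, C2 = 10 ns and M = 100 ns are hypothetical, as is the function name `average_access_time`. The hit rates come from the three-case example: 7 of 10 references hit in L1, and 1 of the 3 L1 misses hits in L2.

```python
def average_access_time(levels, memory_time):
    """Tavg for a cache hierarchy, in the same form as the multilevel formula.

    levels: list of (hit_rate, access_time) pairs ordered from L1 downwards,
            where each hit_rate is local (fraction of accesses reaching that
            level that hit there).
    memory_time: M, the time to get the data from main memory.
    """
    t = memory_time
    # Work upwards from main memory: each level's average time is
    # H * C + (1 - H) * (average time of the level below).
    for hit_rate, access_time in reversed(levels):
        t = hit_rate * access_time + (1 - hit_rate) * t
    return t


# Hypothetical timings in ns: C1 = 1, C2 = 10, M = 100.
one_level = average_access_time([(7 / 10, 1.0)], 100.0)
two_level = average_access_time([(7 / 10, 1.0), (1 / 3, 10.0)], 100.0)

print(f"L1 only: {one_level:.1f} ns")
print(f"L1 + L2: {two_level:.1f} ns")
```

With these made-up timings, adding L2 lowers Tavg from about 30.7 ns to about 21.7 ns, because one of the three L1 misses now costs 10 ns instead of 100 ns.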

Example:

Find the average memory access time for a processor with a 2 ns clock cycle time, a miss rate of 0.04 misses per instruction, a miss penalty of 25 clock cycles, and a cache access time (including hit detection) of 1 clock cycle. Also, assume that the read and write miss penalties are the same and ignore other write stalls.

Solution:

Average memory access time (AMAT) = Hit time + Miss rate * Miss penalty

Hit time = 1 clock cycle (the cache access time, including hit detection, is given directly in the question)

Miss rate = 0.04

Miss penalty = 25 clock cycles (the extra time needed to bring the data from the next lower level of memory after a miss)

So, AMAT = 1 + 0.04 * 25

AMAT = 2 clock cycles

According to the question, 1 clock cycle = 2 ns, so

AMAT = 2 * 2 ns = 4 ns
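The same arithmetic as a short script, using only the values given in the question; the function name `amat_cycles` is hypothetical.

```python
def amat_cycles(hit_time, miss_rate, miss_penalty):
    """AMAT = Hit time + Miss rate * Miss penalty, with times in clock cycles."""
    return hit_time + miss_rate * miss_penalty


CLOCK_CYCLE_NS = 2.0  # 1 clock cycle = 2 ns, from the question

cycles = amat_cycles(hit_time=1, miss_rate=0.04, miss_penalty=25)
amat_ns = cycles * CLOCK_CYCLE_NS

print(f"AMAT = {cycles:g} clock cycles = {amat_ns:g} ns")  # AMAT = 2 clock cycles = 4 ns
```

This AMAT form charges the hit time on every access and adds the miss penalty only on a miss, whereas the Effective Access Time formula earlier charges a miss only the lower-level access time. The two give the same result when the lower-level access time is taken to be the hit time plus the miss penalty.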

Advantages of Multilevel Cache Organization:

Reduced access time: Frequently accessed data tends to stay in the smallest, fastest cache level, so most accesses are served quickly. Data is first searched for in the smallest, fastest level and, only if it is not found there, in the next larger, slower level, so the slow main memory is reached only when every cache level misses.

Improved system performance: With faster access times, system performance is improved as the CPU spends less time waiting for data to be fetched from memory.

Lower cost: Only a small amount of the fastest cache is needed, because the faster, smaller cache levels are more expensive per unit of memory than the larger, slower levels, which supply most of the capacity. This keeps the amount of expensive fast memory small while still providing a large total cache capacity.

Energy efficiency: Since the smallest cache level is searched first and most accesses are found there, fewer accesses reach the larger cache levels and main memory, which consume more energy per access. This reduces the power consumption of the system.

Disadvantages of Multilevel Cache Organization:

Complexity: The addition of multiple levels of cache increases the complexity of the cache hierarchy and the overall system. This complexity can make it more difficult to design, debug and maintain the system.

Higher latency for cache misses: If the data is not found in any of the cache levels, it must be fetched from main memory, which has a higher latency, and the time spent checking each cache level first is added to that latency. This delay can be especially noticeable in systems with deep cache hierarchies.

Higher cost: While having multiple levels of cache can reduce the overall amount of cache required, it can also increase the cost of the system due to the additional cache hardware required.

Cache coherence issues: As the number of cache levels increases, it becomes more difficult to maintain cache coherence between the various levels. This can result in inconsistent data being read from the cache, which can cause problems with the overall system operation.
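The "higher latency for cache misses" point can be made concrete with the AMAT form used in the example above (Hit time + Miss rate * Miss penalty), applied level by level. This sketch assumes that the levels are searched one after another, so a reference that misses everywhere pays every cache's hit time before reaching main memory. The function names and the timings (1 ns, 10 ns and 100 ns, with local hit rates 0.9 and 0.8) are hypothetical.

```python
def amat_sequential(levels, memory_time):
    """AMAT when the cache levels are searched one after another.

    levels: list of (local_hit_rate, hit_time) pairs ordered from L1 downwards.
    Every access that reaches a level pays its hit time; a miss additionally
    pays the average time of the level below (its miss penalty).
    """
    t = memory_time
    for hit_rate, hit_time in reversed(levels):
        t = hit_time + (1 - hit_rate) * t
    return t


def full_miss_latency(levels, memory_time):
    """Worst case: the reference misses in every level before reaching memory."""
    return sum(hit_time for _, hit_time in levels) + memory_time


MEMORY_NS = 100
single = [(0.9, 1)]                # L1 only
two_level = [(0.9, 1), (0.8, 10)]  # L1 + L2

for name, levels in [("L1 only", single), ("L1 + L2", two_level)]:
    print(f"{name}: AMAT = {amat_sequential(levels, MEMORY_NS):.1f} ns, "
          f"full miss = {full_miss_latency(levels, MEMORY_NS)} ns")
```

With these numbers a reference that misses everywhere takes 111 ns instead of 101 ns, but the average drops from about 11 ns to about 4 ns, which is the trade-off behind the advantages and disadvantages listed above.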
