What’s the difference between CPU Cache and TLB?

Last Updated: 30 Oct, 2023

Both the CPU Cache and the TLB are hardware structures used in microprocessors. So what’s the difference between them, especially when the TLB is often described as a type of cache?

First things first. A CPU Cache is a small, fast memory that reduces the latency of fetching information from main memory (RAM) into the CPU registers. It sits between main memory and the CPU and temporarily holds copies of recently used information, so that the next access to the same (or nearby) information is faster. A CPU cache that stores executable instructions is called an Instruction Cache (I-Cache), and one that stores data is called a Data Cache (D-Cache). The I-Cache and D-Cache speed up the fetching of instructions and data, respectively, and a modern processor contains both. For completeness, let us also discuss the D-Cache hierarchy. The D-Cache is typically organized in levels, i.e. a Level 1 (L1) data cache, a Level 2 (L2) cache, and so on. The L1 D-Cache is faster, smaller, and costlier per byte than the L2 cache. But the basic idea of a ‘CPU cache’ is to speed up the time it takes to fetch instructions and data from main memory to the CPU.

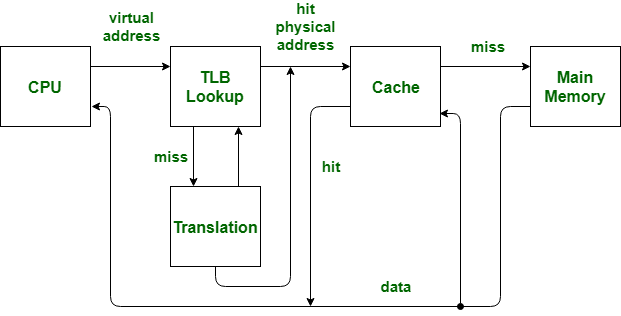
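To make the caching idea concrete, here is a minimal Python sketch of a direct-mapped cache. It is a toy model under stated assumptions: the class name `DirectMappedCache`, the geometry (4 lines of 16 bytes each) and the address trace are all hypothetical, and the model tracks only tags, not the cached data itself. Real caches are usually set-associative and much larger.

```python
class DirectMappedCache:
    """Toy direct-mapped cache (hypothetical parameters, not any real CPU).

    Only tags are tracked, which is enough to decide hit or miss.
    """

    def __init__(self, num_lines: int, line_size: int) -> None:
        self.num_lines = num_lines        # number of cache lines
        self.line_size = line_size        # bytes per line
        self.tags = [None] * num_lines    # stored tag per line; None means empty
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit; on a miss, fill the line and return False."""
        block = address // self.line_size  # memory block containing this byte
        index = block % self.num_lines     # the only line this block may occupy
        tag = block // self.num_lines      # tells apart blocks sharing that line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        # Miss: a real cache would now fetch the line from L2 or RAM.
        self.tags[index] = tag
        self.misses += 1
        return False


cache = DirectMappedCache(num_lines=4, line_size=16)
for addr in [0x00, 0x04, 0x10, 0x00, 0x40, 0x00]:
    print(hex(addr), "hit" if cache.access(addr) else "miss")
print("hits:", cache.hits, "misses:", cache.misses)
```

In this trace, 0x04 hits because it lies in the same 16-byte line as 0x00, while the final access to 0x00 misses because 0x40 maps to the same line index and evicted it (a conflict miss).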

A Translation Lookaside Buffer (TLB) is required only if the processor uses virtual memory. In short, the TLB speeds up the translation of virtual addresses to physical addresses by keeping recently used page-table entries in a small, fast memory. The TLB also sits between the CPU and main memory, on the address-translation path. More precisely, the TLB is used by the MMU (Memory Management Unit) whenever a virtual address needs to be translated to a physical address. Because recently used virtual-to-physical mappings are kept in fast memory, most translations avoid a slow access to the page table. Note that the page table, which itself is stored in RAM, keeps track of where virtual pages are stored in physical memory. In that sense, the TLB can be considered a cache of the page table.
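The TLB’s role as a cache of the page table can be sketched the same way. This is a minimal Python model, assuming 4 KiB pages, a single-level page table represented as a plain dictionary from virtual page number (VPN) to physical frame number (PFN), and a small fully associative TLB with LRU replacement. The names `TLB`, `translate` and `PAGE_SIZE` are hypothetical. A real MMU walks a (usually multi-level) page table in memory on a miss, which this sketch reduces to a dictionary lookup.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed 4 KiB pages


class TLB:
    """Toy fully associative TLB with LRU replacement (illustrative only)."""

    def __init__(self, capacity: int, page_table: dict) -> None:
        self.capacity = capacity
        self.page_table = page_table   # VPN -> PFN; stands in for the page table in RAM
        self.entries = OrderedDict()   # cached VPN -> PFN translations, oldest first
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr: int) -> int:
        """Translate a virtual address to a physical address."""
        vpn, offset = divmod(vaddr, PAGE_SIZE)  # page number and offset within the page
        if vpn in self.entries:
            self.hits += 1
            self.entries.move_to_end(vpn)       # mark as most recently used
            pfn = self.entries[vpn]
        else:
            self.misses += 1
            pfn = self.page_table[vpn]          # "page walk"; a KeyError plays the role of a page fault
            self.entries[vpn] = pfn
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)  # evict the least recently used entry
        return pfn * PAGE_SIZE + offset         # the offset is unchanged by translation


page_table = {0: 7, 1: 3, 2: 9}  # hypothetical VPN -> PFN mappings
tlb = TLB(capacity=2, page_table=page_table)
for va in [0x0010, 0x1004, 0x0020, 0x2000, 0x1008]:
    print(hex(va), "->", hex(tlb.translate(va)))
print("TLB hits:", tlb.hits, "misses:", tlb.misses)
```

With room for only two entries, the third access hits because VPN 0 is still cached, but the last access misses again because VPN 1 was evicted to make room for VPN 2.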

But the scope of operation of the TLB and the CPU Cache is different. The TLB is about ‘speeding up address translation for virtual memory’, so that the page table need not be accessed for every address. The CPU Cache is about ‘reducing main memory access latency’, so that the CPU does not have to go to RAM for every access. TLB lookups happen when the MMU translates an address, while CPU cache lookups happen when the CPU accesses memory. A typical modern general-purpose processor includes all of these: an I-Cache, L1 and L2 D-Caches, and one or more TLBs.
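The difference in scope shows up clearly when both structures sit on the same load path. The Python sketch below assumes a physically indexed, physically tagged cache, so translation finishes before the cache lookup starts; many real L1 caches are instead virtually indexed and physically tagged so that the two lookups overlap. The page-table contents, the 64-byte line size and the function `load` are hypothetical, and for brevity neither structure ever evicts anything.

```python
PAGE_SIZE = 4096   # assumed 4 KiB pages
LINE_SIZE = 64     # assumed 64-byte cache lines

page_table = {0: 5, 1: 8}   # hypothetical VPN -> PFN mappings
tlb = {}                    # cached translations (unbounded, for brevity)
cache_lines = set()         # physical line numbers currently cached (no eviction)
stats = {"tlb_hit": 0, "tlb_miss": 0, "cache_hit": 0, "cache_miss": 0}


def load(vaddr: int) -> int:
    """Translate vaddr, then look up the resulting physical address in the cache."""
    # Step 1 (MMU): address translation, where the TLB is consulted.
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    if vpn in tlb:
        stats["tlb_hit"] += 1
    else:
        stats["tlb_miss"] += 1
        tlb[vpn] = page_table[vpn]      # page-table walk on a TLB miss
    paddr = tlb[vpn] * PAGE_SIZE + offset

    # Step 2: memory access, where the data cache is consulted with the physical address.
    line = paddr // LINE_SIZE
    if line in cache_lines:
        stats["cache_hit"] += 1
    else:
        stats["cache_miss"] += 1
        cache_lines.add(line)           # fill the line from RAM
    return paddr


for va in [0x000, 0x008, 0x100, 0x1000]:
    load(va)
print(stats)
```

Here the access to 0x100 is a TLB hit (same page as 0x000) but a cache miss (a different 64-byte line), which shows that the two structures answer different questions and have independent hit rates.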

Let us summarize the differences in tabular form:

| Feature | CPU Cache | TLB |
|---|---|---|
| Full form | CPU cache stands for Central Processing Unit cache. | TLB stands for Translation Lookaside Buffer. |
| Definition | A hardware cache that holds copies of instructions and data. | A memory cache that stores recent translations of virtual memory addresses to physical memory addresses. |
| Purpose | Reduces the average time to access data from main memory. | Reduces the time taken to translate a virtual address, and therefore the time to access a memory location. |
| Organization | Stores copies of data from frequently used main memory locations and is located closer to the processor core than RAM. | Most computers include more than one TLB in the MMU (Memory Management Unit). |
| Examples | L1 cache, L2 cache, L3 cache | L1 instruction TLB, L1 data TLB, L2 (second-level) TLB |
| Speed | Much faster than accessing main memory | Much faster than walking the page table in main memory |
| Types | Data cache, instruction cache, unified cache | Instruction TLB, data TLB, unified TLB |
| Function | Stores recently accessed data in faster memory, reducing the need to access slower memory | Keeps recently used mappings of virtual addresses to physical addresses, allowing faster address translation |
| Size | Typically ranges from a few kilobytes (L1) to tens of megabytes (L3) | Usually much smaller than a cache; ranges from a few entries to a few thousand entries |
| Location | Located on the processor chip itself or in a separate module | Located on the processor chip itself or in a separate module |
| Miss penalty | High; requires accessing slower memory (a lower cache level or RAM) to retrieve the data | High; requires a page-table walk in memory to retrieve the mapping information |
| Implementation | Hardware | Lookups are done in hardware; the OS maintains the page tables and invalidates stale entries, and on some architectures (e.g. MIPS) software also refills the TLB on a miss |
| Hit rate | Typically high (above 95%) | Typically high (above 95%) |
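The hit-rate and miss-penalty rows combine in the standard average access time formula, average time = hit time + miss rate × miss penalty, which applies to a cache and, for address translation, to a TLB. The Python sketch below evaluates it. The function name `amat` and all timings are hypothetical values chosen only for illustration, and the model assumes a single cache level and a fixed page-walk cost.

```python
def amat(hit_time: float, hit_rate: float, miss_penalty: float) -> float:
    """Average access time = hit time + miss rate * miss penalty."""
    return hit_time + (1.0 - hit_rate) * miss_penalty


# Hypothetical timings in nanoseconds, for illustration only.
cache_time = amat(hit_time=1.0, hit_rate=0.95, miss_penalty=100.0)  # cache lookup, RAM on a miss
tlb_time = amat(hit_time=0.5, hit_rate=0.99, miss_penalty=50.0)     # TLB lookup, page walk on a miss
print(f"average cache access: {cache_time:.2f} ns")
print(f"average translation:  {tlb_time:.2f} ns")
```

With these numbers the cache averages 6 ns per access and translation averages 1 ns. Raising the cache hit rate from 95% to 99% in the same model lowers the average to 2 ns, which is why even a few percentage points of hit rate matter.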
