Unsupervised learning is an intriguing area of machine learning that uncovers hidden structures and patterns in data without requiring labeled samples. Because it investigates the underlying relationships in data, it is an effective tool for tasks such as clustering, dimensionality reduction, and anomaly detection. It is used in domains such as computer vision, natural language processing, and data analysis. By interpreting data without human-provided labels, it yields insights that support decision-making and help explain complex data patterns.

There are many types of unsupervised learning; this article focuses on unsupervised neural network models.

Unsupervised Neural Network

An unsupervised neural network is a type of artificial neural network (ANN) used for unsupervised learning tasks. Supervised neural networks are trained on labeled data made of explicit input-output pairs; unsupervised neural networks, by contrast, are trained on unlabeled data that contains only the inputs. Because no labels guide the network, it must discover patterns in the data on its own, for example by grouping similar samples into well-separated clusters or by learning a compact representation of the input. The goal is to find patterns, structures, or representations within the data without specific guidance.

Unsupervised neural network models are typically built from several components:

- Encoder-Decoder: As the name suggests, this pair encodes and decodes the data. The encoder transforms the input data into a lower-dimensional representation, and the decoder takes that encoded representation and reconstructs the input data from it. Their parameters are learned during the training of the network.

- Latent Space: The intermediate representation produced by the encoder. It holds abstract features that capture important information about the structure of the data, and is also called the latent representation or code.

- Training algorithm: Unsupervised neural network models use optimization algorithms to learn their parameters. Common choices include stochastic gradient descent (SGD) and Adam; the choice depends on the type of model and its loss function.

- Loss Function: A component shared by virtually all machine learning models. It measures the difference between the model's output and the target output; in many unsupervised models, such as autoencoders, the target is the input itself. The loss quantifies how well the model captures the data, and training minimizes it.

Now let's discuss some types of unsupervised neural networks.

Autoencoder

An autoencoder is a neural network that learns to compress and decompress data. It is trained to reconstruct its input from a compressed representation that the network itself learns during training. Autoencoders can be used for tasks such as image compression, dimensionality reduction, and denoising. They are feedforward neural networks made of an encoder and a decoder: they map the input data to a lower-dimensional representation and then reconstruct the data from it.
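A quick way to see the compress-and-reconstruct idea in action is to train a small multilayer perceptron to predict its own input. The sketch below is an illustration under stated assumptions, not a dedicated autoencoder implementation: it uses scikit-learn's `MLPRegressor` with the input `X` as both features and target, the bundled 8x8 digits dataset, and a 16-unit hidden layer chosen arbitrarily as the bottleneck.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.neural_network import MLPRegressor

# 8x8 digit images flattened to 64 features, scaled from [0, 16] to [0, 1]
X = load_digits().data / 16.0

# Assumption: one hidden layer of 16 units acts as the bottleneck (latent space).
# Using X as both input and target trains the network to reconstruct X.
autoencoder = MLPRegressor(hidden_layer_sizes=(16,), activation="relu",
                           max_iter=500, random_state=0)
autoencoder.fit(X, X)

# Decoder output: reconstruction of every image from its 16-dimensional code
X_rec = autoencoder.predict(X)
recon_mse = np.mean((X - X_rec) ** 2)

# Baseline that ignores the input entirely: predict each pixel's mean value
baseline_mse = np.mean((X - X.mean(axis=0)) ** 2)

# Encoder half: reapply the first layer's weights, biases and ReLU by hand
codes = np.maximum(0, X @ autoencoder.coefs_[0] + autoencoder.intercepts_[0])

print(f"reconstruction MSE: {recon_mse:.4f} (mean-only baseline: {baseline_mse:.4f})")
print("latent codes shape:", codes.shape)
```

Comparing against the mean-only baseline gives a rough sense of how much structure the 16-dimensional code captures. For real workloads, a deep learning framework gives more control over the encoder and decoder architectures.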

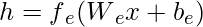

The encoder is the first part of the network: it compresses the input data into a lower-dimensional representation called the latent space, extracting the aspects of the data that the decoder needs for an effective reconstruction. Common applications of autoencoders include dimensionality reduction, feature learning, and denoising. The encoder maps the input data $x$ to an encoding, or hidden representation, $h$ using a set of weights $W_e$ and biases $b_e$:

$$h = f_e(W_e x + b_e)$$

where $f_e$ is the activation function of the encoder.

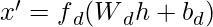

The decoder is the part of the network that reverses the encoding process: from the encoder's compact, lower-dimensional representation (the latent space), it reconstructs the original input data. Because the decoder is trained to replicate the input as closely as possible, autoencoders can learn to denoise data or to generate new data from the learned characteristics. The decoder maps the encoding $h$ back to a reconstruction $x'$ of the original input $x$ using a separate set of weights $W_d$ and biases $b_d$:

$$x' = f_d(W_d h + b_d)$$

where $f_d$ is the activation function of the decoder.

An autoencoder's loss function measures how much the reconstruction differs from the input data. Training minimizes this loss, which pushes the model to learn useful feature representations in the latent space and to produce accurate reconstructions. For inputs with values in [0, 1], the loss between the input $x$ and the reconstructed output $x'$ can be the binary cross-entropy:

$$L(x, x') = -\sum_i \left[ x_i \log(x'_i) + (1 - x_i) \log(1 - x'_i) \right]$$
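The three autoencoder equations above can be traced numerically. The following NumPy sketch runs one forward pass through a tiny, untrained autoencoder and evaluates the cross-entropy loss. The layer sizes, the random initialization, and the choice of the sigmoid for both $f_e$ and $f_d$ are illustrative assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


rng = np.random.default_rng(0)
n_in, n_hidden = 8, 3  # assumption: 8 inputs compressed to a 3-d code

# Small random (untrained) parameters
W_e = rng.normal(scale=0.1, size=(n_hidden, n_in))
b_e = np.zeros(n_hidden)
W_d = rng.normal(scale=0.1, size=(n_in, n_hidden))
b_d = np.zeros(n_in)

x = rng.integers(0, 2, size=n_in).astype(float)  # a binary input vector

# Encoder: h = f_e(W_e x + b_e), with f_e = sigmoid
h = sigmoid(W_e @ x + b_e)
# Decoder: x' = f_d(W_d h + b_d), with f_d = sigmoid
x_rec = sigmoid(W_d @ h + b_d)

# Binary cross-entropy L(x, x'); eps guards against log(0)
eps = 1e-12
loss = -np.sum(x * np.log(x_rec + eps) + (1 - x) * np.log(1 - x_rec + eps))

print("code h:", h.round(3))
print("loss:", round(float(loss), 4))
```

Because the weights are small and untrained, every reconstructed value stays close to 0.5, so the loss sits near 8 · ln 2 ≈ 5.55; training would lower it by adjusting $W_e$, $b_e$, $W_d$, and $b_d$.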

Restricted Boltzmann Machine

Restricted Boltzmann Machines (RBMs) are unsupervised nonlinear feature learners based on a probabilistic model. Features extracted by an RBM, or by a hierarchy of RBMs, often give strong results when fed to a linear classifier such as a linear SVM or a perceptron. The model makes assumptions about how the inputs are distributed. scikit-learn currently provides only BernoulliRBM, which assumes that the inputs are either binary or values between 0 and 1, each encoding the probability that a given feature is turned on. The RBM tries to maximize the likelihood of the data using a particular graphical model. The parameter learning algorithm it uses (Stochastic Maximum Likelihood) keeps the representations from straying far from the input data, which lets them capture interesting regularities; however, this also makes the model less useful for small datasets and usually not useful for density estimation.

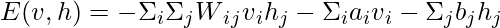

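Each RBM has an energy function that measures the compatibility between the states of the visible and hidden units. The energy of an RBM is defined as follows:

$$E(v, h) = -\sum_i a_i v_i - \sum_j b_j h_j - \sum_i \sum_j v_i W_{ij} h_j$$

where $E(v, h)$ is the energy of the joint state $(v, h)$, $v_i$ and $h_j$ are the states of visible unit $i$ and hidden unit $j$, $W_{ij}$ is the weight connecting them, and $a_i$ and $b_j$ are the visible and hidden biases.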

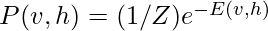

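The joint probability of a visible unit configuration ($v$) and a hidden unit configuration ($h$) is calculated from the energy function:

$$P(v, h) = \frac{1}{Z} e^{-E(v, h)}, \qquad Z = \sum_{v, h} e^{-E(v, h)}$$

where $Z$ is the partition function that normalizes the distribution. The marginal probabilities of the visible and hidden units are obtained by summing over the other layer's variables:

$$P(v) = \frac{1}{Z} \sum_h e^{-E(v, h)}, \qquad P(h) = \frac{1}{Z} \sum_v e^{-E(v, h)}$$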
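For a very small RBM, the partition function $Z$ can be computed exactly by enumerating every visible and hidden configuration, which makes the RBM probability formulas concrete. The sketch below assumes 3 visible and 2 hidden binary units with randomly drawn parameters. Real RBMs are far too large for this brute-force enumeration, which is why training relies on approximations such as Stochastic Maximum Likelihood.

```python
import itertools

import numpy as np

rng = np.random.default_rng(0)
n_v, n_h = 3, 2  # assumption: tiny RBM so all 2**3 * 2**2 states can be listed

W = rng.normal(scale=0.5, size=(n_v, n_h))  # W[i, j] couples v_i and h_j
a = rng.normal(scale=0.5, size=n_v)          # visible biases a_i
b = rng.normal(scale=0.5, size=n_h)          # hidden biases b_j


def energy(v, h):
    # E(v, h) = -sum_i a_i v_i - sum_j b_j h_j - sum_ij v_i W_ij h_j
    return -(a @ v) - (b @ h) - (v @ W @ h)


vs = [np.array(s, dtype=float) for s in itertools.product([0, 1], repeat=n_v)]
hs = [np.array(s, dtype=float) for s in itertools.product([0, 1], repeat=n_h)]

# Unnormalized weights exp(-E); rows index v configurations, columns index h
weights = np.array([[np.exp(-energy(v, h)) for h in hs] for v in vs])
Z = weights.sum()          # partition function
joint = weights / Z        # P(v, h)
p_v = joint.sum(axis=1)    # P(v): sum over h
p_h = joint.sum(axis=0)    # P(h): sum over v

print("Z =", round(float(Z), 4))
print("P(v) sums to", p_v.sum(), "and P(h) sums to", p_h.sum())
```

The same table also shows why RBMs are convenient in practice: dividing a row of `joint` by the matching entry of `p_v` gives $P(h \mid v)$, and for each hidden unit the probability $P(h_j = 1 \mid v)$ equals the logistic sigmoid of $b_j + \sum_i v_i W_{ij}$, so hidden units can be sampled independently given the visible layer.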

Self-Organizing maps (SOM)

Self-organizing maps (SOMs), also called Kohonen maps, are an intriguing class of unsupervised neural network models that work especially well for understanding and interpreting complex, high-dimensional data. A distinguishing feature of SOMs is their ability to reduce dimensionality while preserving the topological relationships in the input data. A SOM starts with a grid of neurons, each of which represents a particular region of the data space. During training, the neurons adapt to the distribution of the input data through competitive learning: for each input, the neuron whose weights are closest to it wins, and the winner and its neighbors on the grid move their weights toward that input, becoming more sensitive to similar patterns. This self-organizing behavior produces a map in which similar data points land close to one another, making patterns and clusters in the data easy to see.
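scikit-learn does not include a SOM, so the following is a from-scratch NumPy sketch of the competitive learning loop just described. The toy data (three 2-D blobs), the 6x6 grid, the linearly decaying learning rate and neighborhood radius, and the Gaussian neighborhood function are all illustrative assumptions rather than a canonical recipe.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (assumption): three 2-D Gaussian blobs of 100 points each
centers = np.array([[0.0, 0.0], [3.0, 3.0], [0.0, 3.0]])
X = np.vstack([c + 0.3 * rng.standard_normal((100, 2)) for c in centers])

# 6x6 map: each neuron has a fixed grid position and a weight vector in data space
rows, cols = 6, 6
grid = np.array([[r, c] for r in range(rows) for c in range(cols)], dtype=float)
weights = rng.uniform(X.min(), X.max(), size=(rows * cols, X.shape[1]))


def quantization_error(X, weights):
    """Mean distance from each sample to its best-matching unit (BMU)."""
    d = np.linalg.norm(X[:, None, :] - weights[None, :, :], axis=2)
    return d.min(axis=1).mean()


qe_before = quantization_error(X, weights)

n_steps, lr0, sigma0 = 3000, 0.5, 3.0
for t in range(n_steps):
    x = X[rng.integers(len(X))]
    # Competitive step: the neuron whose weights are closest to x wins
    bmu = np.argmin(np.linalg.norm(weights - x, axis=1))
    # Learning rate and neighborhood radius shrink linearly over time
    frac = t / n_steps
    lr = lr0 * (1.0 - frac)
    sigma = sigma0 * (1.0 - frac) + 0.5
    # Gaussian neighborhood measured on the grid, not in data space
    dist2 = np.sum((grid - grid[bmu]) ** 2, axis=1)
    influence = np.exp(-dist2 / (2.0 * sigma ** 2))
    # The winner and its grid neighbors move toward x
    weights += lr * influence[:, None] * (x - weights)

qe_after = quantization_error(X, weights)
print(f"quantization error: {qe_before:.3f} -> {qe_after:.3f}")
```

The quantization error (the mean distance from each sample to its best-matching unit) typically drops sharply as the prototypes move onto the data. The key design choice is that the neighborhood is measured on the grid rather than in data space, which is what keeps nearby neurons responsible for similar inputs.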

SOMs are applied in many areas, such as anomaly detection, feature extraction, and data clustering. They play an important role in data mining and exploratory analysis because they reveal hidden structures in complex datasets without the need for labeled data. Their ability to condense information while preserving topological relationships makes them a valuable tool for unsupervised learning and data visualization.

Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are a novel paradigm in the field of unsupervised neural networks. A GAN consists of two neural networks, a generator and a discriminator, that are trained in competition with each other. The discriminator tries to tell real data from generated data, while the generator tries to produce samples that are indistinguishable from real data. The generator takes random noise as input and, over the course of training, learns to turn that noise into increasingly realistic data. This adversarial training process pushes the generator to produce data that is often very convincing, which benefits image generation, style transfer, and data augmentation. GANs are also being used in fields such as drug discovery and natural language processing.
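The competition between the generator and the discriminator is usually written as a minimax game. In the formulation of the original GAN paper (Goodfellow et al., 2014), the discriminator $D$ maximizes, and the generator $G$ minimizes, the value function below, where $p_{\text{data}}$ is the distribution of real data and $p_z$ is the noise distribution fed to the generator:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]$$

The first term rewards $D$ for assigning high probability to real samples; the second rewards it for assigning low probability to generated samples $G(z)$, which is exactly what $G$ tries to prevent.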

GANs are an essential tool for unsupervised learning because they can capture complex data distributions without labeled input. However, their training can be difficult and unstable, and they are vulnerable to mode collapse, in which the generator concentrates on a small number of data patterns. Despite these difficulties, GANs have had a major influence on machine learning and on many artistic and scientific domains.

Implementation of Restricted Boltzmann Machine

Let's now implement one of these models, the Restricted Boltzmann Machine, using the BernoulliRBM class provided by scikit-learn.

RBMs are nonlinear feature learners that extract features from the input, and they are often combined with linear classifiers such as linear SVMs or perceptrons.

The model makes assumptions about the distribution of the inputs. scikit-learn currently provides BernoulliRBM, which assumes binary inputs; it also accepts values between 0 and 1, interpreted as the probability that the corresponding visible unit is turned on. It tries to maximize the likelihood of the data using a graphical model. This makes it useful for tasks such as character recognition, where what matters is which pixels are active and which aren't.
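To make this probabilistic interpretation concrete, the sketch below fits a small BernoulliRBM on scikit-learn's bundled 8x8 digits dataset (an assumption: used instead of MNIST so it runs without a download; the hyperparameters are arbitrary). `transform` returns the hidden-unit activation probabilities P(h = 1 | v), and `gibbs` performs one Gibbs sampling step and returns a binary sample of the visible layer.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.neural_network import BernoulliRBM

# Pixel values rescaled to [0, 1]: each is read as the probability a pixel is "on"
X = load_digits().data / 16.0

# Illustrative hyperparameters, not tuned
rbm = BernoulliRBM(n_components=32, learning_rate=0.05, n_iter=5, random_state=0)
rbm.fit(X)

# Real-valued hidden activation probabilities P(h_j = 1 | v), one row per image
H = rbm.transform(X[:5])

# One Gibbs step v -> h -> v': a binary (boolean) sample of the visible units
v_sample = rbm.gibbs(X[:5])

print(H.shape, H.min() >= 0, H.max() <= 1)
print(v_sample.shape, np.unique(v_sample))
```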

Importing Libraries

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.neural_network import BernoulliRBM
from sklearn.datasets import fetch_openml
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report, accuracy_score
```

These are the libraries and modules needed to train a Bernoulli Restricted Boltzmann Machine (RBM) on the MNIST dataset. The steps below use them to load and preprocess the data, extract features with the RBM, and classify the digits with Logistic Regression, and finally to measure the model's accuracy and print a classification report.

Loading Dataset

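```python
# Download MNIST (70,000 images of 28x28 = 784 pixels) as NumPy arrays
mnist = fetch_openml("mnist_784", version=1, as_frame=False)

# Scale pixel intensities from [0, 255] to [0, 1], the range BernoulliRBM expects
X = mnist.data / 255.0

# Labels are stored as strings ("0".."9"); convert them to integers
y = mnist.target.astype(int)
```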

This code downloads the MNIST dataset with scikit-learn's fetch_openml function, which returns the pixel data (X) and the digit labels (y). Dividing the pixel values by 255.0 scales them to the range [0, 1], which is the input range BernoulliRBM expects. This kind of normalization is a typical preprocessing step for image data.

Splitting dataset into train and test sets

```python
# Hold out 20% of the samples for testing; a fixed seed makes the split reproducible
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)
```

This code uses scikit-learn's train_test_split function to divide the MNIST dataset into training and test sets: 80% of the data goes to the training set (X_train and y_train) and 20% to the test set (X_test and y_test). Setting random_state fixes the shuffling, so the same split is produced on every run.
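As a quick check of the split arithmetic, the mnist_784 dataset has 70,000 samples, so a 20% test split leaves 56,000 training samples and 14,000 test samples, matching the support total of 14,000 in the classification report. The sketch below uses an index array as a stand-in for the real data (an assumption, to avoid the download) and also confirms that repeating the call with the same random_state reproduces the same split.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Stand-in for the 70,000 MNIST samples: just their row indices
idx = np.arange(70_000)

train_idx, test_idx = train_test_split(idx, test_size=0.2, random_state=42)
print(len(train_idx), len(test_idx))  # 56000 14000

# Same random_state -> identical split
train_again, test_again = train_test_split(idx, test_size=0.2, random_state=42)
print(np.array_equal(test_idx, test_again))  # True
```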

Model Development

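```python
# 64 hidden units, learning rate 0.1, 10 passes over the training data
rbm = BernoulliRBM(n_components=64, learning_rate=0.1,
                   n_iter=10, random_state=0, verbose=True)

# Unsupervised training: only X_train is used, the labels are ignored
rbm.fit(X_train)
```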

Output:

```text
[BernoulliRBM] Iteration 1, pseudo-likelihood = -106.26, time = 4.14s
[BernoulliRBM] Iteration 2, pseudo-likelihood = -105.04, time = 4.90s
[BernoulliRBM] Iteration 3, pseudo-likelihood = -104.94, time = 4.60s
[BernoulliRBM] Iteration 4, pseudo-likelihood = -98.99, time = 5.14s
[BernoulliRBM] Iteration 5, pseudo-likelihood = -103.12, time = 5.00s
[BernoulliRBM] Iteration 6, pseudo-likelihood = -100.73, time = 5.00s
[BernoulliRBM] Iteration 7, pseudo-likelihood = -98.94, time = 5.42s
[BernoulliRBM] Iteration 8, pseudo-likelihood = -99.63, time = 4.95s
[BernoulliRBM] Iteration 9, pseudo-likelihood = -96.17, time = 4.95s
[BernoulliRBM] Iteration 10, pseudo-likelihood = -97.82, time = 4.94s
```

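This code builds a Bernoulli Restricted Boltzmann Machine (RBM) with 64 hidden components, a learning rate of 0.1, and 10 iterations (passes over the training data). Calling fit trains the RBM on the training data (X_train) alone, without labels, so that it learns to extract features from the input. Setting verbose=True prints the pseudo-likelihood and the elapsed time after each iteration. The pseudo-likelihood is a stochastic estimate, which is why it fluctuates between iterations (for example, -98.99 at iteration 4 and -103.12 at iteration 5) while improving overall, from -106.26 to -97.82.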
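The learned weights are stored in `rbm.components_`, one row per hidden unit, and plotting each row as an image is a common way to inspect what the hidden units respond to. The sketch below does this on the 8x8 digits dataset so it is self-contained; for the MNIST model trained above, the same loop would reshape each row with `comp.reshape(28, 28)`. The hyperparameters are illustrative assumptions.

```python
import matplotlib.pyplot as plt
from sklearn.datasets import load_digits
from sklearn.neural_network import BernoulliRBM

X = load_digits().data / 16.0  # 8x8 images scaled to [0, 1]

rbm = BernoulliRBM(n_components=64, learning_rate=0.05, n_iter=10, random_state=0)
rbm.fit(X)

# components_ has shape (n_components, n_features): one weight vector per hidden unit
fig, axes = plt.subplots(8, 8, figsize=(6, 6))
for ax, comp in zip(axes.ravel(), rbm.components_):
    ax.imshow(comp.reshape(8, 8), cmap="gray")
    ax.axis("off")
fig.suptitle("Weights of the 64 hidden units")
plt.show()
```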

Feature Transformation

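```python
# Map each image to its 64 hidden-unit activation probabilities P(h=1|v)
X_train_encoded = rbm.transform(X_train)
X_test_encoded = rbm.transform(X_test)

# Train a linear classifier on the RBM features
classifier = LogisticRegression(max_iter=100)
classifier.fit(X_train_encoded, y_train)
```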

This code uses the trained RBM to transform the training and test data into hidden representations: for each sample, rbm.transform returns the activation probabilities of the 64 hidden units. The encoded data for the two sets is stored in X_train_encoded and X_test_encoded. A Logistic Regression classifier is then trained on these encoded representations, so it predicts digits from the features the RBM learned rather than from raw pixels.
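The transform-then-fit steps can also be chained with scikit-learn's `Pipeline`, so that a single `fit` call trains the RBM and then the classifier on the RBM's output. The sketch below assumes the 8x8 digits dataset and illustrative hyperparameters (`n_components=100`, `learning_rate=0.06`) rather than the MNIST settings used above.

```python
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neural_network import BernoulliRBM
from sklearn.pipeline import Pipeline

X, y = load_digits(return_X_y=True)
X = X / 16.0  # BernoulliRBM expects values in [0, 1]
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)

model = Pipeline([
    ("rbm", BernoulliRBM(n_components=100, learning_rate=0.06,
                         n_iter=10, random_state=0)),
    ("logistic", LogisticRegression(max_iter=1000)),
])

# fit() trains the RBM on X_train, transforms X_train, then fits the classifier
model.fit(X_train, y_train)
print("test accuracy:", model.score(X_test, y_test))
```

Keeping both stages in one `Pipeline` also makes it straightforward to tune the RBM and classifier hyperparameters together, for example with `GridSearchCV` and parameter names such as `rbm__n_components`.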

Model Evaluation and Prediction

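```python
# Predict digit labels for the encoded test images
y_pred = classifier.predict(X_test_encoded)

# Fraction of test images whose predicted label matches the true label
accuracy = accuracy_score(y_test, y_pred)
print("Classifier Accuracy:", accuracy)
```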

Output:

```text
Classifier Accuracy: 0.9064285714285715
```

This code uses the trained Logistic Regression classifier to predict labels (y_pred) from the hidden representations of the test data (X_test_encoded). It then computes and prints the accuracy of these predictions, which indicates how well the model performs on unseen data: here, about 90.6% of the test images are classified correctly.
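Accuracy is simply the fraction of predictions that match the true labels. For the result above, 0.9064285714285715 × 14,000 test images = 12,690 correct predictions. The toy labels below are made up to show that `accuracy_score` and a direct element-wise comparison agree:

```python
import numpy as np
from sklearn.metrics import accuracy_score

y_true = np.array([0, 1, 2, 2])  # hypothetical true labels
y_pred = np.array([0, 1, 1, 2])  # hypothetical predictions: one mistake

print(accuracy_score(y_true, y_pred))  # 0.75
print(np.mean(y_true == y_pred))       # 0.75
```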

Classification Report

```python
# Per-class precision, recall, F1-score and support
classification_rep = classification_report(y_test, y_pred)
print("Classification Report:")
print(classification_rep)
```

Output:

```text
Classification Report:
              precision    recall  f1-score   support

           0       0.95      0.95      0.95      1343
           1       0.96      0.98      0.97      1600
           2       0.91      0.91      0.91      1380
           3       0.88      0.88      0.88      1433
           4       0.87      0.85      0.86      1295
           5       0.91      0.87      0.89      1273
           6       0.94      0.96      0.95      1396
           7       0.93      0.90      0.92      1503
           8       0.88      0.89      0.88      1357
           9       0.82      0.86      0.84      1420

    accuracy                           0.91     14000
   macro avg       0.91      0.90      0.91     14000
weighted avg       0.91      0.91      0.91     14000
```

This code uses scikit-learn's classification_report function to compare the true labels (y_test) with the predicted labels (y_pred). The printed report gives a thorough evaluation of the model on the test data: precision, recall, F1-score, and support (the number of test samples) for each digit class, along with the overall accuracy and the macro and weighted averages.
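Each per-class row of the report is built from three counts: true positives (TP), false positives (FP), and false negatives (FN). Precision is TP / (TP + FP), recall is TP / (TP + FN), and the F1-score is their harmonic mean. The made-up example below computes these by hand for class 1 and compares them with scikit-learn's `precision_recall_fscore_support`:

```python
import numpy as np
from sklearn.metrics import precision_recall_fscore_support

y_true = np.array([0, 0, 1, 1, 1])  # hypothetical labels
y_pred = np.array([0, 1, 1, 1, 0])  # hypothetical predictions

# Counts for class 1
tp = np.sum((y_pred == 1) & (y_true == 1))  # 2
fp = np.sum((y_pred == 1) & (y_true != 1))  # 1
fn = np.sum((y_pred != 1) & (y_true == 1))  # 1

precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)

# average=None (the default) returns one value per class, labels sorted: [0, 1]
p, r, f, support = precision_recall_fscore_support(y_true, y_pred)
print(precision, recall, f1)  # all equal to 2/3 (up to floating point)
print(p[1], r[1], f[1], support)
```

The macro avg row is the unweighted mean of the per-class scores, while weighted avg weights each class by its support.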

Advantages of Unsupervised Neural network models

- Feature Learning: The model learns to extract relevant features automatically, without hand-crafted feature engineering.

- Dimensionality Reduction: Models such as autoencoders and self-organizing maps compress high-dimensional data into a lower-dimensional representation while preserving much of the important information.

- Generative Models: Models such as GANs can generate new data samples, which is valuable in tasks like data augmentation and data synthesis.

- Data Preprocessing: It can serve as a preprocessing step for supervised learning tasks. Extracted features or reduced-dimension representations can improve the performance of subsequent supervised models.

Disadvantages of Unsupervised Neural network models

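- Lack of labels: Without labels, there is no direct signal telling the model what to predict, so its results can be less precise than those of a supervised model trained for a specific task.

- Complexity: These models can be complex and computationally expensive, and they often need careful hyperparameter tuning.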

- Difficulty in Evaluation: Evaluating the performance of unsupervised models is more challenging than in supervised learning as there is no ground truth to compare against, making it harder to measure success.

- Limited Applicability: They are not suitable for every type of data or task, since their effectiveness depends on the quality and nature of the data.
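As a partial answer to the evaluation difficulty, some unsupervised models expose a label-free score. For BernoulliRBM, `score_samples` returns each sample's (stochastic) log pseudo-likelihood, the same quantity printed during training with `verbose=True`. The sketch below, on the 8x8 digits dataset with illustrative hyperparameters, compares the average on the training data with the average on held-out data; a large gap would suggest overfitting.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.neural_network import BernoulliRBM

X = load_digits().data / 16.0
X_train, X_val = train_test_split(X, test_size=0.25, random_state=0)

rbm = BernoulliRBM(n_components=64, learning_rate=0.05, n_iter=10, random_state=0)
rbm.fit(X_train)  # no labels involved

# Log pseudo-likelihood per sample (always <= 0; higher is better)
train_pl = rbm.score_samples(X_train).mean()
val_pl = rbm.score_samples(X_val).mean()
print(f"mean pseudo-likelihood  train: {train_pl:.2f}  validation: {val_pl:.2f}")
```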
