Perceptron class in Sklearn

Last Updated: 13 Oct, 2023

Machine learning has grown rapidly in recent years, and the perceptron is one of its foundational building blocks. In this article, we will learn what a perceptron is, look at its history, and see how to use it with Scikit-Learn, arguably one of the most popular machine learning libraries in Python.

Frank Rosenblatt led the development of the perceptron in the late 1950s. It is one of the earliest supervised learning algorithms, and it was designed to classify data into two categories. A perceptron is the simplest type of artificial neural network: a single artificial neuron, loosely modeled on a biological neuron, that acts as a binary classifier.

Understanding Perceptron

A perceptron is a kind of artificial neuron or node that is utilized in neural networks and machine learning. It is an essential component of more intricate models.

- It is one of the earliest supervised learning algorithms and a common starting point for studying them.

- It lays the foundation for more complex neural networks.

- It is well suited to binary classification.

- It is inspired by the biological neuron and can be seen as a simplified model of one.

- A perceptron takes the input features, computes a weighted sum of them, and makes a binary decision based on the result, which is why it is used for binary classification problems.

- Binary Classification: Binary classification is a supervised learning task in which each data point is assigned to one of two classes. The perceptron is a binary classifier: it assigns each input to one of the two classes.

- Weights and Bias: The perceptron assigns a weight to every input feature, multiplies each feature by its weight, and adds the results together with a bias term. The weights and bias are adjusted during training to reduce classification errors.

- Activation Function: The activation function decides whether the neuron fires. The perceptron uses a simple step function: if the weighted sum of the inputs plus the bias is greater than or equal to zero, it predicts one class; otherwise it predicts the other.

- Learning Rate: The learning rate scales the size of each weight update, so it controls how quickly the perceptron adjusts its weights in response to misclassified examples.
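The terms above can be tied together in a few lines of plain Python. The sketch below is illustrative only: the function name `perceptron_output` and the hand-picked weights and bias (chosen so that the unit behaves like a logical AND gate) are assumptions made for this example, not values from a trained model.

```python
def perceptron_output(inputs, weights, bias):
    """Return 1 if the weighted sum plus bias is >= 0, otherwise 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if weighted_sum >= 0 else 0


# Hand-picked (not learned) parameters that make the unit act like an AND gate.
weights = [1.0, 1.0]
bias = -1.5

and_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_outputs = [perceptron_output(x, weights, bias) for x in and_inputs]
print(and_outputs)  # [0, 0, 0, 1]
```

Only the input (1, 1) produces a weighted sum large enough to overcome the negative bias, so it is the only input mapped to class 1.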

Mathematical Foundation

A perceptron’s architecture is made up of the following parts:

- Input Values (x1, x2, …, xn): The values x1, x2, …, xn are the inputs to the perceptron. They can represent features or signals. Each input is assigned a weight.

- Weights (w1, w2, …, wn): Every input has a corresponding weight (w1, w2, …, wn) that represents the strength or significance of that input. These weights are learned during training.

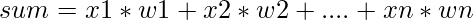

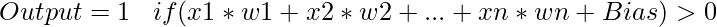

- Summation Function: The perceptron multiplies each input by its weight and adds the products together. This is the dot product of the input and weight vectors:

Sum = w1·x1 + w2·x2 + … + wn·xn

- Activation Function: An activation function is applied to the summation result. Usually a step function is used, which returns 1 if the sum exceeds a threshold and 0 otherwise:

Output = 1 if Sum > Threshold

Output = 0 if Sum ≤ Threshold

- Threshold (Bias): The threshold is usually folded into the model as a bias term b = −Threshold, so the rule becomes "Output = 1 if Sum + b > 0". The bias shifts the decision boundary and, like the weights, is learned during training.

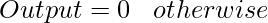

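Mathematically, the perceptron’s output can be represented as:

Output = step(w1·x1 + w2·x2 + … + wn·xn + b), where b is the bias and step(z) is 1 if z > 0 and 0 otherwise.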

By assigning incoming data to one of two groups (such as 1 or 0), the perceptron makes binary decisions. It is a linear classifier that learns weights and a bias to separate data points into distinct classes. When perceptrons are combined in multilayer networks, they can handle more difficult tasks such as pattern recognition, but a single perceptron can only solve linearly separable problems.
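To see the linear-separability limit in practice, the following sketch trains a single perceptron with the classic perceptron learning rule, in which each weight moves by learning_rate × (target − prediction) × input. The helper names (`predict`, `train_perceptron`, `accuracy`), the learning rate, and the number of epochs are choices made for this illustration. The AND function is linearly separable; XOR is not.

```python
def predict(x, weights, bias):
    """Step activation: 1 if the weighted sum plus bias is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, targets, learning_rate=1.0, epochs=20):
    """Perceptron learning rule, starting from zero weights and zero bias."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, targets):
            error = target - predict(x, weights, bias)  # -1, 0, or +1
            # The parameters change only when the prediction is wrong.
            weights = [w + learning_rate * error * xi
                       for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


def accuracy(samples, targets, weights, bias):
    """Fraction of samples classified correctly."""
    correct = sum(predict(x, weights, bias) == t
                  for x, t in zip(samples, targets))
    return correct / len(targets)


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_targets = [0, 0, 0, 1]  # linearly separable
xor_targets = [0, 1, 1, 0]  # not linearly separable

and_weights, and_bias = train_perceptron(inputs, and_targets)
xor_weights, xor_bias = train_perceptron(inputs, xor_targets)

and_accuracy = accuracy(inputs, and_targets, and_weights, and_bias)
xor_accuracy = accuracy(inputs, xor_targets, xor_weights, xor_bias)
print("AND accuracy:", and_accuracy)
print("XOR accuracy:", xor_accuracy)
```

On AND the rule settles on a separating line within a few epochs, so the accuracy reaches 1.0. On XOR no straight line separates the two classes, so at least one of the four points is always misclassified, no matter how long training runs.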

Parameters

The Perceptron class in Scikit-Learn accepts several parameters. The most commonly used ones are:

- max_iter (int, default=1000): The maximum number of passes over the training data, also known as epochs. A larger value lets the algorithm train longer, which can improve performance if the model has not yet converged.

- tol (float or None, default=1e-3): The tolerance for the stopping criterion. Training stops early when the training loss fails to improve by at least tol for a number of consecutive epochs. Setting it to None disables this check.

- eta0 (float, default=1.0): The learning rate. It is the constant by which each weight update is multiplied.

- fit_intercept (bool, default=True): When True, the model estimates a bias (intercept) term and includes it in the decision function. When False, the data is assumed to be already centered.

- shuffle (bool, default=True): When True, the training data is shuffled after each epoch.

- random_state (int, RandomState instance or None, default=0): The seed used to shuffle the training data when shuffle is True. Passing a fixed integer makes the results reproducible.

- verbose (int, default=0): The verbosity level for progress messages during training. A value of 0 means that no progress messages are printed.

- warm_start (bool, default=False): When True, a call to fit reuses the solution of the previous call as its starting point instead of starting from scratch.
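Default values can change between Scikit-Learn releases, so it can be worth checking them for the installed version rather than relying on a written list. A minimal sketch, assuming Scikit-Learn is installed; `get_params` is the standard estimator method, while the variable names and the override values are illustrative:

```python
from sklearn.linear_model import Perceptron

# Parameters discussed in this section.
names = ["max_iter", "tol", "eta0", "fit_intercept",
         "shuffle", "random_state", "verbose", "warm_start"]

# An estimator built with no arguments reports its default settings.
defaults = Perceptron().get_params()
for name in names:
    print(f"{name} = {defaults[name]!r}")

# Any of them can be overridden by keyword when creating the model.
custom = Perceptron(max_iter=500, tol=1e-4, eta0=0.5, random_state=42)
custom_params = custom.get_params()
print(custom_params["max_iter"], custom_params["eta0"])
```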

Variants of the Perceptron Algorithm

There are several variants of the perceptron algorithm. A few important ones are:

1) Multi-layer Perceptron (MLP):

A Multilayer Perceptron (MLP) is a feedforward neural network with one or more hidden layers between the input and output layers. This architecture lets MLPs learn complex, nonlinear patterns and relationships in data, which a single perceptron cannot. The hidden layers are made up of interconnected units that capture hierarchical and nonlinear information. MLPs are widely used in applications such as image recognition, natural language processing, and predictive modeling.

2) Voted Perceptron:

The Voted Perceptron stores every weight vector produced during training, together with a count of how many consecutive examples it classified correctly before the next mistake. At prediction time, each stored weight vector casts a vote weighted by its count, and the class with the larger total wins. Combining several decision boundaries in this way usually makes the classifier more robust than relying on the final weight vector alone.

3) Averaged Perceptron:

The Averaged Perceptron is similar to the Voted Perceptron in that both keep track of the weight vectors produced during training. The difference lies in how those vectors are used. Instead of letting each weight vector vote, the Averaged Perceptron computes their average and predicts with that single averaged vector. This keeps most of the robustness of voting while making prediction as cheap as for a standard perceptron.
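A minimal sketch of the averaging idea, assuming labels encoded as -1 and +1 and a tiny hand-made dataset. The function names are illustrative, and this is one common formulation rather than the only one: the weight vector in effect after every training step is accumulated, and the final model uses the mean.

```python
def train_averaged_perceptron(samples, labels, epochs=10):
    """Averaged perceptron; labels must be -1 or +1."""
    n_features = len(samples[0])
    weights, bias = [0.0] * n_features, 0.0
    weight_sums, bias_sum = [0.0] * n_features, 0.0
    steps = 0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            score = sum(w * xi for w, xi in zip(weights, x)) + bias
            if y * score <= 0:  # misclassified or on the boundary: update
                weights = [w + y * xi for w, xi in zip(weights, x)]
                bias += y
            # Accumulate the current parameters after every step.
            weight_sums = [s + w for s, w in zip(weight_sums, weights)]
            bias_sum += bias
            steps += 1
    avg_weights = [s / steps for s in weight_sums]
    return avg_weights, bias_sum / steps


def predict_sign(x, weights, bias):
    """Return +1 for a positive score and -1 otherwise."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else -1


samples = [(2, 0), (3, 1), (-2, 0), (-3, -1)]
labels = [1, 1, -1, -1]

avg_weights, avg_bias = train_averaged_perceptron(samples, labels)
predictions = [predict_sign(x, avg_weights, avg_bias) for x in samples]
print(predictions)  # [1, 1, -1, -1]
```

Because only one averaged vector is kept at the end, prediction costs the same as for a standard perceptron.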

4) Kernelized Perceptron:

The Kernelized Perceptron uses kernel functions to handle data that is not linearly separable. A kernel implicitly maps the input data into a higher-dimensional feature space, where a linear decision boundary can separate classes that are not linearly separable in the original space. Popular kernels such as the polynomial and radial basis function (RBF) kernels help capture complex relationships in the data, which makes the Kernelized Perceptron effective for patterns that simple linear models cannot classify.
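The sketch below illustrates the kernel idea on XOR, which a plain perceptron cannot separate. It assumes labels encoded as -1 and +1 and an RBF kernel with gamma = 1.0; the function names are illustrative. Instead of a weight vector, the model stores a mistake count (alpha) per training point, and predictions are kernel-weighted votes of those points.

```python
import math


def rbf_kernel(a, b, gamma=1.0):
    """RBF kernel: exp(-gamma * squared Euclidean distance)."""
    squared_distance = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-gamma * squared_distance)


def kernel_score(x, samples, labels, alphas):
    """Sum of alpha_i * y_i * K(x_i, x) over the training points."""
    return sum(a * y * rbf_kernel(s, x)
               for a, y, s in zip(alphas, labels, samples))


def train_kernel_perceptron(samples, labels, epochs=10):
    """Kernel perceptron; alphas[i] counts the mistakes made on sample i."""
    alphas = [0] * len(samples)
    for _ in range(epochs):
        for i, (x, y) in enumerate(zip(samples, labels)):
            if y * kernel_score(x, samples, labels, alphas) <= 0:
                alphas[i] += 1
    return alphas


xor_samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
xor_labels = [-1, 1, 1, -1]

alphas = train_kernel_perceptron(xor_samples, xor_labels)
xor_predictions = [
    1 if kernel_score(x, xor_samples, xor_labels, alphas) > 0 else -1
    for x in xor_samples
]
print(xor_predictions)  # [-1, 1, 1, -1]
```

With this kernel each point is more similar to itself than to its neighbors, so after one mistake per point the votes already classify all four XOR inputs correctly.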

Implementation

To implement a perceptron for binary classification, we will use the Iris flower dataset. Our goal is to classify Iris flowers into two categories: Setosa and Versicolor. We will use Python as our programming language and Scikit-Learn to build and train the perceptron.

Import necessary libraries

```python
# Dataset loader, train/test splitting, the model, and evaluation metrics
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.linear_model import Perceptron
from sklearn.metrics import accuracy_score, classification_report
```

- load_iris loads the built-in Iris dataset.

- train_test_split splits the dataset into training and testing sets.

- Perceptron is the classifier we will train.

- accuracy_score calculates the accuracy of our classifier.

- classification_report summarizes precision, recall, and F1-score for each class.

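Load the Iris dataset and split it into training and testing sets

```python
data = load_iris()  # load the built-in Iris dataset
# The first 100 rows belong to classes 0 (Setosa) and 1 (Versicolor)
X, y = data.data[:100, :], data.target[:100]
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
```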

This code keeps the first 100 rows of the Iris data (data.data) and their labels (data.target). Because the dataset is ordered by class with 50 samples per class, these rows contain only Setosa (label 0) and Versicolor (label 1). Using train_test_split from Scikit-Learn with a fixed random seed (random_state) for reproducibility, it then divides the samples and labels into training (80%) and testing (20%) sets.

Create a perceptron classifier

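```python
# Configure the model: at most 100 epochs, learning rate 0.1, fixed seed
perceptron = Perceptron(max_iter=100, eta0=0.1, random_state=42)
# Learn the weights and bias from the training data
perceptron.fit(X_train, y_train)
```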

This code creates a Perceptron classifier with a maximum of 100 iterations, a fixed random seed (random_state) for reproducibility, and a learning rate (eta0) of 0.1. It then calls the fit method to train the classifier on the training data (X_train, y_train).
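After fitting, the learned weights and bias are available as attributes of the model. The sketch below repeats the setup of this article in a self-contained form and checks that the decision score is the weighted sum of the features plus the bias. `coef_`, `intercept_`, `n_iter_`, and `decision_function` are standard Scikit-Learn attributes and methods; the other variable names are illustrative.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import Perceptron
from sklearn.model_selection import train_test_split

data = load_iris()
X, y = data.data[:100, :], data.target[:100]  # Setosa (0) and Versicolor (1)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)

perceptron = Perceptron(max_iter=100, eta0=0.1, random_state=42)
perceptron.fit(X_train, y_train)

print("Weights:", perceptron.coef_)       # one weight per feature
print("Bias:", perceptron.intercept_)
print("Epochs run:", perceptron.n_iter_)

# Recompute the decision scores by hand: weighted sum of features plus bias.
manual_scores = X_test @ perceptron.coef_.ravel() + perceptron.intercept_[0]
library_scores = perceptron.decision_function(X_test)

# A positive score maps to label 1, any other score to label 0.
manual_predictions = (manual_scores > 0).astype(int)
print(np.allclose(manual_scores, library_scores))
```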

Make predictions on the test data and calculate the accuracy

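```python
# Predict a class label for every test sample
y_pred = perceptron.predict(X_test)
# Fraction of test samples whose prediction matches the true label
accuracy = accuracy_score(y_test, y_pred)
print(f'Accuracy: {accuracy}')
```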

Output:

Accuracy: 1.0

This code predicts labels for the test data X_test using the trained Perceptron classifier (perceptron) and stores those predictions in the variable y_pred. It then computes the accuracy by comparing the predictions with the actual labels in y_test and prints the score.
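Accuracy is simply the fraction of test samples whose predicted label matches the true label. As a small self-contained check, assuming the same data and settings as above (the names `manual_accuracy` and `library_accuracy` are illustrative), the value can be computed by hand and compared with accuracy_score:

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import Perceptron
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

data = load_iris()
X, y = data.data[:100, :], data.target[:100]
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)

perceptron = Perceptron(max_iter=100, eta0=0.1, random_state=42)
perceptron.fit(X_train, y_train)
y_pred = perceptron.predict(X_test)

# Share of positions where prediction and true label agree.
manual_accuracy = float(np.mean(y_pred == y_test))
library_accuracy = accuracy_score(y_test, y_pred)
print(manual_accuracy, library_accuracy)
```

Keep in mind that the test set here holds only 20 samples, so each sample accounts for 5 percentage points of accuracy.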

Classification Report

```python
# Per-class precision, recall, and F1-score on the test set
class_report = classification_report(y_test, y_pred)
print("Classification Report:\n", class_report)
```

Output:

```
Classification Report:
               precision    recall  f1-score   support

           0       1.00      1.00      1.00        12
           1       1.00      1.00      1.00         8

    accuracy                           1.00        20
   macro avg       1.00      1.00      1.00        20
weighted avg       1.00      1.00      1.00        20
```

This code compares the actual labels (y_test) with the predicted labels (y_pred) and produces a classification report with precision, recall, and F1-score for each class. The report gives a more detailed assessment of the model’s performance on the test set than accuracy alone.

Advantages

- Simplicity: The perceptron is a simple algorithm that is easy to implement and use.

- Efficiency: Perceptrons are computationally efficient to train and to use for prediction.

- Linear Separability: When the data is linearly separable, the perceptron is guaranteed to find a separating boundary and performs very well.

Disadvantages

- No Probabilistic Outputs: The perceptron produces only hard class labels (0 or 1), which is a drawback when probability estimates are needed.

- Sensitivity to Initial Conditions: The learned decision boundary can depend on the initial weights and bias and on the order in which the training samples are presented.
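The first drawback is visible in the Scikit-Learn API. To the best of our knowledge, the Perceptron class does not expose a predict_proba method; the closest alternative is decision_function, which returns signed scores rather than probabilities. A minimal sketch on the two-class Iris subset used in this article, with illustrative variable names:

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import Perceptron

data = load_iris()
X, y = data.data[:100, :], data.target[:100]  # Setosa (0) and Versicolor (1)

model = Perceptron(random_state=42).fit(X, y)

# Check whether probability estimates are available on this estimator.
has_proba = hasattr(model, "predict_proba")
print("Has predict_proba:", has_proba)

# Hard labels, and the signed scores they are derived from.
labels = model.predict(X[:3])
scores = model.decision_function(X[:3])
print(labels, scores)
```

The sign of a score determines the predicted class and its magnitude grows with the distance from the decision boundary, but it is not a calibrated probability.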

Conclusion

In conclusion, the perceptron is a simple yet essential technique in binary classification and machine learning. It works by learning a linear decision boundary that divides the data into two classes. It can only separate data that is linearly separable, but despite this limitation it forms the foundation for more complex neural network architectures. In this article, we trained a Perceptron classifier on the Iris dataset, made predictions, and assessed its accuracy. Understanding the fundamentals of the perceptron is essential for understanding more complex machine learning algorithms and neural networks.
