Implementation of Perceptron Algorithm for NAND Logic Gate with 2-bit Binary Input

Last Updated: 08 Jul, 2020

In machine learning, the Perceptron is a supervised learning algorithm for binary classifiers. The Perceptron Model implements the following function:

$$
\begin{aligned}
\hat{y} &= \Theta\left(w_{1} x_{1} + w_{2} x_{2} + \ldots + w_{n} x_{n} + b\right) \\
&= \Theta(\mathbf{w} \cdot \mathbf{x} + b), \\
\text{where } \Theta(v) &= \begin{cases} 1 & \text{if } v \geq 0 \\ 0 & \text{otherwise} \end{cases}
\end{aligned}
$$

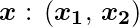

For a particular choice of the weight vector $\mathbf{w}$ and bias parameter $b$, the model predicts the output $\hat{y}$ for the corresponding input vector $\mathbf{x}$.
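The formula can be evaluated for several input vectors at once. The following minimal sketch assumes NumPy and uses a hypothetical helper `perceptron_batch` with arbitrary illustrative parameters (not values from this article). It stacks four 2-bit inputs as rows and applies $\Theta(\mathbf{w} \cdot \mathbf{x} + b)$ to each row. An input that lands exactly on $v = 0$ is classified as 1, following the $v \geq 0$ convention of $\Theta$.

```python
import numpy as np

def perceptron_batch(X, w, b):
    """Evaluate y_hat = Theta(w . x + b) for every row x of X.

    X: array of shape (m, n), one input vector per row (hypothetical helper).
    w: weight vector of shape (n,); b: scalar bias.
    """
    v = X @ w + b                   # pre-activation for each row
    return np.where(v >= 0, 1, 0)   # Theta(v): 1 if v >= 0, else 0

# Illustrative parameters only, not the ones used later in the article.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
w = np.array([0.5, 0.5])
b = -0.5
print(perceptron_batch(X, w, b))  # prints [0 1 1 1]
```

With these parameters the pre-activations are $-0.5, 0, 0, 0.5$, so the two middle inputs sit exactly on the decision boundary and are still mapped to 1.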

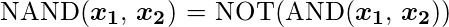

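NAND logical function truth table for 2-bit binary variables, i.e., the input vector $\mathbf{x} : (x_1, x_2)$ and the corresponding output $y$:

| $x_1$ | $x_2$ | $y$ |
|---|---|---|
| 0 | 0 | 1 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |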

We can observe that $\text{NAND}(x_1, x_2) = \text{NOT}(\text{AND}(x_1, x_2))$.
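The decomposition $\text{NAND}(x_1, x_2) = \text{NOT}(\text{AND}(x_1, x_2))$ can be checked directly against the NAND truth table for all four inputs. This is a minimal sketch using plain Python integers; the helper names `AND`, `NOT` and `NAND_TABLE` are illustrative and not part of the article's code.

```python
from itertools import product

def AND(x1, x2):
    # 1 only when both inputs are 1
    return int(x1 == 1 and x2 == 1)

def NOT(a):
    # flips 0 <-> 1
    return 1 - a

# Reference NAND truth table for 2-bit binary input
NAND_TABLE = {(0, 0): 1, (0, 1): 1, (1, 0): 1, (1, 1): 0}

for x1, x2 in product([0, 1], repeat=2):
    composed = NOT(AND(x1, x2))
    assert composed == NAND_TABLE[(x1, x2)]
    print(f"NAND({x1}, {x2}) = NOT(AND({x1}, {x2})) = {composed}")
```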

Now, for the corresponding weight vector $\mathbf{w} : (w_1, w_2)$ of the input vector $\mathbf{x} : (x_1, x_2)$ to the AND node, the associated Perceptron Function can be defined as:

$$
\hat{y}' = \Theta\left(w_{1} x_{1} + w_{2} x_{2} + b_{AND}\right)
$$
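As a worked check, using the values chosen for the implementation later in this article ($w_1 = w_2 = 1$ and $b_{AND} = -1.5$), the pre-activation $v = w_1 x_1 + w_2 x_2 + b_{AND}$ and the resulting AND-node output for each input are:

$$
\begin{array}{cc|c|c}
x_1 & x_2 & v = x_1 + x_2 - 1.5 & \hat{y}' = \Theta(v) \\
\hline
0 & 0 & -1.5 & 0 \\
0 & 1 & -0.5 & 0 \\
1 & 0 & -0.5 & 0 \\
1 & 1 & 0.5 & 1
\end{array}
$$

Only the input $(1, 1)$ gives $v \geq 0$, so $\hat{y}'$ matches the AND truth table.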

Later on, the output of the AND node, $\hat{y}'$, is the input to the NOT node with weight $w_{NOT}$. The corresponding output $\hat{y}$ is then the final output of the NAND logic function, and the associated Perceptron Function can be defined as:

$$
\hat{y} = \Theta\left(w_{NOT}\, \hat{y}' + b_{NOT}\right)
$$
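With the implementation's values $w_{NOT} = -1$ and $b_{NOT} = 0.5$, the NOT node maps the two possible AND outputs as follows:

$$
\begin{aligned}
\hat{y}' = 0: &\quad \hat{y} = \Theta(-1 \cdot 0 + 0.5) = \Theta(0.5) = 1 \\
\hat{y}' = 1: &\quad \hat{y} = \Theta(-1 \cdot 1 + 0.5) = \Theta(-0.5) = 0
\end{aligned}
$$

Since the AND node outputs 1 only for $(1, 1)$, the chained output $\hat{y}$ is 0 only for that input, which is the NAND behaviour.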

For the implementation, the weight parameters are $w_1 = 1$, $w_2 = 1$, $w_{NOT} = -1$, and the bias parameters are $b_{AND} = -1.5$, $b_{NOT} = 0.5$.
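These values are one valid choice rather than the only one. A minimal sketch, assuming the weights $w_1 = w_2 = 1$ and $w_{NOT} = -1$ are held fixed, sweeps candidate biases on a coarse grid of quarters and keeps those that reproduce the AND and NOT truth tables. The helper names (`step`, `and_node_ok`, `not_node_ok`) are illustrative, not part of the article's code.

```python
from itertools import product

def step(v):
    # Theta(v): 1 if v >= 0, else 0
    return 1 if v >= 0 else 0

INPUTS = list(product([0, 1], repeat=2))

def and_node_ok(b_and, w1=1, w2=1):
    # True if Theta(w1*x1 + w2*x2 + b_and) reproduces AND on all four inputs
    return all(step(w1 * x1 + w2 * x2 + b_and) == (x1 & x2) for x1, x2 in INPUTS)

def not_node_ok(b_not, w_not=-1):
    # True if Theta(w_not*a + b_not) reproduces NOT for a in {0, 1}
    return all(step(w_not * a + b_not) == 1 - a for a in (0, 1))

# Candidate biases from -3.0 to 1.75 in steps of 0.25 (exact in binary floating point)
grid = [k / 4 for k in range(-12, 8)]
valid_and = [b for b in grid if and_node_ok(b)]
valid_not = [b for b in grid if not_node_ok(b)]
print("valid b_AND:", valid_and)  # [-2.0, -1.75, -1.5, -1.25]
print("valid b_NOT:", valid_not)  # [0.0, 0.25, 0.5, 0.75]
```

With these weights the sweep is consistent with the inequalities $-2 \leq b_{AND} < -1$ and $0 \leq b_{NOT} < 1$. The chosen $b_{AND} = -1.5$ and $b_{NOT} = 0.5$ sit at the midpoints of these intervals, which keeps every pre-activation at least 0.5 away from the threshold.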

Python Implementation:

```python
import numpy as np

# Unit step activation: Theta(v) = 1 if v >= 0, else 0
def unitStep(v):
    if v >= 0:
        return 1
    else:
        return 0

# Perceptron model: y_hat = Theta(w . x + b)
def perceptronModel(x, w, b):
    v = np.dot(w, x) + b
    y = unitStep(v)
    return y

# NOT logic function with wNOT = -1, bNOT = 0.5
def NOT_logicFunction(x):
    wNOT = -1
    bNOT = 0.5
    return perceptronModel(x, wNOT, bNOT)

# AND logic function with w1 = 1, w2 = 1, bAND = -1.5
def AND_logicFunction(x):
    w = np.array([1, 1])
    bAND = -1.5
    return perceptronModel(x, w, bAND)

# NAND logic function: the AND output is fed into the NOT node
def NAND_logicFunction(x):
    output_AND = AND_logicFunction(x)
    output_NOT = NOT_logicFunction(output_AND)
    return output_NOT

# Testing the perceptron model on all four 2-bit inputs
test1 = np.array([0, 1])
test2 = np.array([1, 1])
test3 = np.array([0, 0])
test4 = np.array([1, 0])

print("NAND({}, {}) = {}".format(0, 1, NAND_logicFunction(test1)))
print("NAND({}, {}) = {}".format(1, 1, NAND_logicFunction(test2)))
print("NAND({}, {}) = {}".format(0, 0, NAND_logicFunction(test3)))
print("NAND({}, {}) = {}".format(1, 0, NAND_logicFunction(test4)))
```


Output:

```
NAND(0, 1) = 1
NAND(1, 1) = 0
NAND(0, 0) = 1
NAND(1, 0) = 1
```

Here, the output predicted by the model ($\hat{y}$) for each test input exactly matches the conventional NAND output ($y$) from the truth table for 2-bit binary input.

Hence, the perceptron implementation of the NAND logic gate is verified to be correct for all four 2-bit inputs.
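The four hard-coded tests can also be written as a loop over every input combination, compared against the NAND truth table, so that a wrong weight or bias would show up as a mismatch. This minimal sketch restates the article's functions in compact form (same weights and biases); the `expected` dictionary and the loop are additions for illustration.

```python
from itertools import product
import numpy as np

def unitStep(v):
    return 1 if v >= 0 else 0

def perceptronModel(x, w, b):
    return unitStep(np.dot(w, x) + b)

def NAND_logicFunction(x):
    # AND node (w = [1, 1], bAND = -1.5), then NOT node (wNOT = -1, bNOT = 0.5)
    output_AND = perceptronModel(x, np.array([1, 1]), -1.5)
    return perceptronModel(output_AND, -1, 0.5)

# NAND truth table used as the reference
expected = {(0, 0): 1, (0, 1): 1, (1, 0): 1, (1, 1): 0}
mismatches = [
    (x1, x2)
    for x1, x2 in product([0, 1], repeat=2)
    if NAND_logicFunction(np.array([x1, x2])) != expected[(x1, x2)]
]
print("mismatches:", mismatches)  # prints "mismatches: []"
```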
