How To Deploy An Azure Databricks Workspace?

Last Updated: 11 Mar, 2024

Azure Databricks is a unified, open analytics platform in the cloud that helps organizations process and analyze large amounts of data, build artificial intelligence (AI) models, and share their work. It is designed to handle complex data tasks at a large scale. Databricks connects to your cloud storage and works with your security settings, so your data remains secure.

It also sets up and manages the necessary cloud infrastructure automatically, and it helps multiple teams collaborate with little setup. Using Azure Databricks, companies can gain deeper insight into their data and build powerful applications without having to manage the underlying infrastructure themselves.

What Is Azure Databricks Workspace?

The Azure Databricks workspace is a centralized environment where users can collaborate as a team on data projects. It acts as a central hub where we can manage and organize our data, code, and computing resources. In a workspace, we can create notebooks, which are interactive coding environments that let us write, run, and share code. Workspace notebooks support several programming languages (Python, SQL, Scala, and R), making it easy for data scientists to analyze data and for engineers to work alongside them.

The workspace offers features such as version control and access control, and it integrates with other Azure services. With its user-friendly interface and powerful functionality, the Azure Databricks workspace streamlines the entire data analysis and development process, so teams can focus on extracting valuable insights from their data. A workspace file is any file in the Azure Databricks workspace that is not a Databricks notebook. Workspace files can be of any file type. Common examples include:

- .py files used in custom modules

- .md files, such as README.md

- .csv or other small data files

- .txt files

- .whl libraries

- Log files

Workspace files include files formerly referred to as “Files in Repos”.

Understanding The Primary Terminology

- Workspace: The Workspace is a central platform where data professionals collaborate, develop, and manage their projects.

- Clusters: Clusters are the virtual computing resources that we allocate to run data processing tasks. Their specifications, such as node type and number of workers, can be customized to the needs of the workload.

- Workspace Notebooks: Notebooks are documents that combine code, visualizations, and explanatory text. They are used for data exploration and analysis.

- Workspace Jobs: Jobs are automated tasks that run notebooks or code, for example at scheduled intervals, enabling the productionization of data workflows.

- Libraries: Libraries let users bring in external packages, such as Python libraries or JARs, which can be installed on Databricks clusters to extend their functionality.

- Delta: Delta Lake is a storage layer that adds data versioning and ACID transactions to data lakes, ensuring data integrity and reliability.

- Runtime: The Runtime is the execution environment for Databricks workloads, optimized for performance and efficiency.

- Command Line Interface: The command-line interface (CLI) offers a straightforward way to manage Databricks resources like clusters, jobs, and notebooks, allowing you to control these components efficiently.
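The cluster and job terms above map onto JSON payloads accepted by the Databricks REST API. The following minimal sketch builds both payloads with the Python standard library, assuming the field names of the Clusters API (`cluster_name`, `spark_version`, `node_type_id`, `autoscale`) and the Jobs API 2.1 (`tasks`, `notebook_task`, `new_cluster`, `schedule`). The function names, runtime version, node type, notebook path, and cron expression are hypothetical placeholders; check the values available in your own workspace before submitting anything.

```python
import json


def cluster_spec(name: str, min_workers: int, max_workers: int,
                 spark_version: str = "13.3.x-scala2.12",
                 node_type_id: str = "Standard_DS3_v2") -> dict:
    """Build a Clusters API payload with autoscaling.

    The default spark_version and node_type_id are placeholder values;
    list the ones available in your workspace before submitting.
    """
    if not 0 <= min_workers <= max_workers:
        raise ValueError("require 0 <= min_workers <= max_workers")
    return {
        "cluster_name": name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        # Autoscaling lets the cluster grow or shrink with the workload.
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
    }


def scheduled_notebook_job(job_name: str, notebook_path: str,
                           cluster: dict, cron: str,
                           timezone: str = "UTC") -> dict:
    """Build a Jobs API 2.1 payload that runs one notebook on a schedule."""
    return {
        "name": job_name,
        "tasks": [{
            "task_key": "run_notebook",
            "notebook_task": {"notebook_path": notebook_path},
            # A new job cluster is created for each run of this task.
            "new_cluster": cluster,
        }],
        # Databricks schedules use Quartz cron syntax (seconds field first).
        "schedule": {"quartz_cron_expression": cron, "timezone_id": timezone},
    }


if __name__ == "__main__":
    job = scheduled_notebook_job(
        "nightly-etl",                       # hypothetical job name
        "/Workspace/Users/me/etl_notebook",  # hypothetical notebook path
        cluster_spec("etl-cluster", 1, 4),
        "0 0 2 * * ?",                       # every day at 02:00
    )
    print(json.dumps(job, indent=2))
```

Keeping the payloads as plain dictionaries makes them easy to inspect, version, and reuse, whether you later send them with the Databricks CLI, an SDK, or a raw HTTP request.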

Overview Of Azure Databricks Workspace

The Azure Databricks workspace is a powerful platform that brings all your data sources together in one place. It provides tools that help us connect our data sources to one platform, where we can process, store, share, analyze, model, and monetize datasets with solutions ranging from BI to generative AI.

The workspace provides a unified interface and tools for most data tasks, including:

- Data processing scheduling and management, in particular ETL

- Generating dashboards and visualizations

- Managing security, governance, high availability, and disaster recovery

- Data discovery, annotation, and exploration

- Machine learning (ML) modeling, tracking, and model serving

- Generative AI solutions

Databricks has a strong commitment to the open source community and manages updates of open source integrations in its Databricks Runtime releases. The following technologies are open source projects originally created by Databricks employees:

- Delta Lake and Delta Sharing

- MLflow

- Apache Spark and Structured Streaming

- Redash

Azure Databricks Workspace Architecture

The Azure Databricks workspace is designed so that multiple teams can work together securely while Azure Databricks manages the backend services, which lets you focus on your data tasks. The architecture can vary with custom setups (for example, when you deploy Databricks into your own virtual network), but most deployments follow the common structure and data flow described below.

Azure Databricks has two main parts:

1. The control plane

2. The compute plane

- The control plane is where Azure Databricks manages the backend services in your Databricks account. Things like notebook commands and workspace settings are stored and encrypted here.

- The compute plane is where your data gets processed. For most Databricks computations, the compute resources are in your Azure subscription; this is called the classic compute plane and consists of the network and resources in your Azure subscription. Azure Databricks uses the classic compute plane for your notebooks, jobs, and certain types of Databricks SQL warehouses.

Create An Azure Databricks Workspace

Databricks recommends you deploy your first Azure Databricks workspace using the Azure portal. You can also deploy Azure Databricks with one of the following options:

- Azure CLI

- PowerShell

- ARM template

- Bicep
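Of these options, an ARM template is a JSON document that declares the workspace as a `Microsoft.Databricks/workspaces` resource. The following minimal sketch generates such a template with Python's `json` module, assuming the `2023-02-01` API version and the `managedResourceGroupId` property used in Microsoft's quickstart templates; verify both against the current ARM reference before deploying. The function name and parameter names are hypothetical.

```python
import json


def databricks_arm_template(api_version: str = "2023-02-01") -> dict:
    """Return an ARM template that deploys one Azure Databricks workspace.

    api_version is an assumption; check the Microsoft.Databricks/workspaces
    ARM reference for the versions currently supported.
    """
    return {
        "$schema": "https://schema.management.azure.com/schemas/"
                   "2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "workspaceName": {"type": "string"},
            "pricingTier": {
                "type": "string",
                "defaultValue": "premium",
                "allowedValues": ["standard", "premium", "trial"],
            },
            "location": {
                "type": "string",
                "defaultValue": "[resourceGroup().location]",
            },
        },
        "variables": {
            # Azure Databricks places the workspace's compute resources in a
            # separate, managed resource group; this names it uniquely.
            "managedResourceGroupName":
                "[format('databricks-rg-{0}-{1}', parameters('workspaceName'), "
                "uniqueString(parameters('workspaceName'), resourceGroup().id))]",
        },
        "resources": [{
            "type": "Microsoft.Databricks/workspaces",
            "apiVersion": api_version,
            "name": "[parameters('workspaceName')]",
            "location": "[parameters('location')]",
            "sku": {"name": "[parameters('pricingTier')]"},
            "properties": {
                "managedResourceGroupId":
                    "[subscriptionResourceId('Microsoft.Resources/resourceGroups', "
                    "variables('managedResourceGroupName'))]",
            },
        }],
    }


if __name__ == "__main__":
    print(json.dumps(databricks_arm_template(), indent=2))
```

Assuming the output is saved as `databricks-workspace.json`, it could be deployed with `az deployment group create --resource-group <resource-group> --template-file databricks-workspace.json --parameters workspaceName=<name>`.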

You must be an Azure Contributor or Owner, or the Microsoft.ManagedIdentity resource provider must be registered in your subscription. To register the Microsoft.ManagedIdentity resource provider, you must have a custom role with permissions to do the /register/action operation. For more information, see Azure resource providers.
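The provider-registration prerequisite can also be checked from a script. The following minimal sketch shells out to the Azure CLI (`az provider show` and `az provider register`), assuming `az` is installed and already signed in with `az login`; the helper names are hypothetical. The command runner is passed in as a parameter so the logic can be tried without calling Azure.

```python
import subprocess
from typing import Callable, List

PROVIDER = "Microsoft.ManagedIdentity"

# A runner takes Azure CLI arguments (without "az") and returns stdout.
Runner = Callable[[List[str]], str]


def run_az(args: List[str]) -> str:
    """Run an Azure CLI command and return its trimmed stdout."""
    result = subprocess.run(["az", *args], check=True,
                            capture_output=True, text=True)
    return result.stdout.strip()


def ensure_provider_registered(run: Runner = run_az,
                               namespace: str = PROVIDER) -> str:
    """Register the resource provider if needed; return its prior state."""
    state = run(["provider", "show", "--namespace", namespace,
                 "--query", "registrationState", "--output", "tsv"])
    if state != "Registered":
        # Needs permission for the /register/action operation.
        run(["provider", "register", "--namespace", namespace])
    return state


# Example (calls the real Azure CLI):
# print(ensure_provider_registered())
```

Registration runs asynchronously, so the provider may report `Registering` for a few minutes before it becomes `Registered`.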

Deploying An Azure Databricks Workspace: A Step-By-Step Guide

Step 1: In the Azure portal, select Create a resource > Analytics > Azure Databricks.

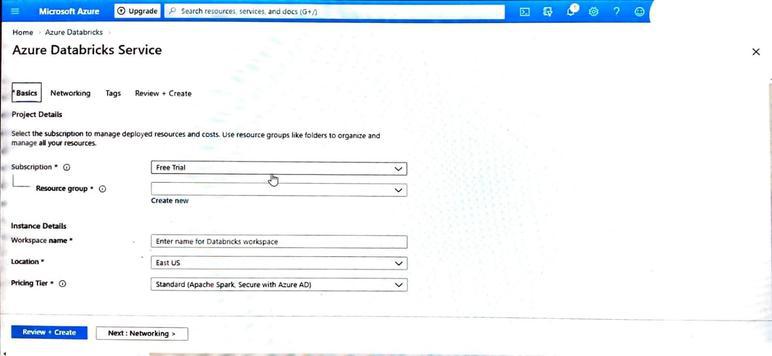

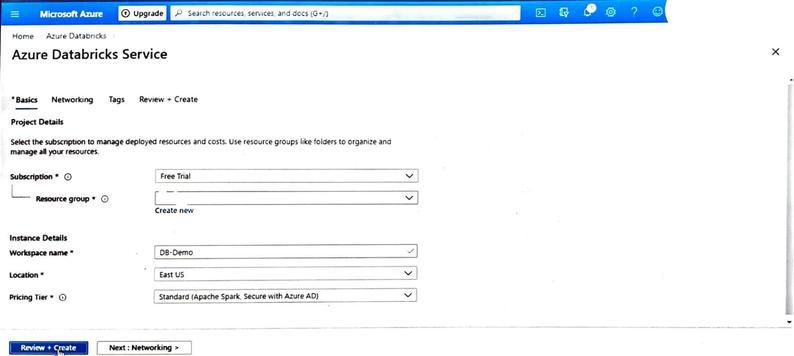

Step 2: Under Azure Databricks Service, provide the values to create a Databricks workspace.

| Property | Description |
| --- | --- |
| Workspace name | Provide a name for your Databricks workspace. |
| Subscription | From the drop-down, select your Azure subscription. |
| Resource group | Specify whether you want to create a new resource group or use an existing one. A resource group is a container that holds related resources for an Azure solution. For more information, see Azure Resource Group overview. |
| Location | Select East US 2. For other available regions, see Azure services available by region. |
| Pricing Tier | Choose between Standard, Premium, or Trial. For more information on these tiers, see the Databricks pricing page. |
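The same form values can also be passed to the Azure CLI. The following minimal sketch turns them into an `az databricks workspace create` command line, assuming the Azure CLI `databricks` extension is installed (`az extension add --name databricks`); the `WorkspaceSettings` class and its field names are hypothetical. It only builds and validates the argument list; it does not contact Azure.

```python
import shlex
from dataclasses import dataclass

PRICING_TIERS = {"standard", "premium", "trial"}


@dataclass
class WorkspaceSettings:
    """Mirror of the portal form fields (hypothetical helper class)."""
    workspace_name: str
    subscription: str
    resource_group: str
    location: str = "eastus2"      # East US 2, as in the table above
    pricing_tier: str = "premium"

    def to_az_args(self) -> list:
        """Return the az command as an argument list, validating the tier."""
        tier = self.pricing_tier.lower()
        if tier not in PRICING_TIERS:
            raise ValueError(f"unknown pricing tier: {self.pricing_tier}")
        return ["az", "databricks", "workspace", "create",
                "--name", self.workspace_name,
                "--resource-group", self.resource_group,
                "--location", self.location,
                "--sku", tier,
                # --subscription is a global Azure CLI argument.
                "--subscription", self.subscription]


settings = WorkspaceSettings("my-workspace", "my-subscription-id", "my-rg")
print(shlex.join(settings.to_az_args()))
```

The resource group must already exist (`az group create --name my-rg --location eastus2` creates one). Building the command as a list rather than a single string avoids shell-quoting problems if it is later passed to `subprocess.run`.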

Step 3: Select Review + Create, and then Create. The workspace creation takes a few minutes. During workspace creation, you can view the deployment status in Notifications.

- Once this process is finished, your user account is automatically added as an admin user in the workspace.

Step 4: If a workspace deployment fails, the workspace is still created in a failed state. Delete the failed workspace, resolve the deployment errors, and create a new workspace.

- When you delete the failed workspace, the managed resource group and any successfully deployed resources are also deleted.
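Steps 3 and 4 amount to waiting for the deployment to reach a terminal state and cleaning up on failure. The following minimal sketch polls a caller-supplied function for the workspace's ARM provisioning state (for example, a value read from `az databricks workspace show`) and stops at `Succeeded`, `Failed`, or `Canceled`. The function names, timeout, and poll interval are hypothetical choices, not values from the Azure documentation.

```python
import time
from typing import Callable

# Terminal ARM provisioning states; anything else means "still working".
TERMINAL_STATES = {"Succeeded", "Failed", "Canceled"}


def wait_for_workspace(get_state: Callable[[], str],
                       timeout_s: float = 1800.0,
                       poll_s: float = 30.0,
                       sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll get_state() until the deployment reaches a terminal state."""
    deadline = time.monotonic() + timeout_s
    while True:
        state = get_state()
        if state in TERMINAL_STATES:
            return state
        if time.monotonic() >= deadline:
            raise TimeoutError(f"still {state!r} after {timeout_s} s")
        sleep(poll_s)


def handle_result(state: str, delete_workspace: Callable[[], None]) -> bool:
    """Return True on success; otherwise delete the failed workspace.

    A failed deployment still leaves a workspace in a failed state, so it
    is deleted (together with its managed resource group) before retrying.
    """
    if state == "Succeeded":
        return True
    delete_workspace()
    return False
```

Injecting `get_state`, `sleep`, and `delete_workspace` keeps the waiting logic independent of how you talk to Azure (CLI, SDK, or REST) and makes it easy to test.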

Your next steps depend on whether you want to continue setting up your account organization and security or want to start building out data pipelines:

- Connect your Databricks workspace to external data sources. See Connect to data sources.

- Ingest your data into the workspace. See Ingest data into a Databricks lakehouse.

- To onboard data to your workspace in Databricks SQL, see Load data using streaming tables in Databricks SQL.

- To continue building out your account organization and security, follow the steps in Get started with Azure Databricks administration.

Advantages Of Azure Databricks

The following are the advantages of Azure Databricks:

- Databricks is a unified platform that brings data engineers, data scientists, and ML experts together for seamless collaboration.

- Its resources are scalable: they can be scaled up or down based on demand, optimizing performance and costs.

- Its Azure integration allows Databricks to work smoothly with other Azure services such as Blob Storage and Azure Synapse Analytics (formerly SQL Data Warehouse).

- Its distributed computing capabilities deliver high performance and enable fast processing of large data volumes for quicker insights.

- Collaborative workflow features such as version control and reproducibility foster team collaboration and ensure workflow integrity.

- Built-in support for popular libraries such as TensorFlow and PyTorch enables advanced analytics and machine learning.

- Robust security features, including role-based access control, data encryption, and compliance certifications, ensure data privacy and regulatory compliance.

- Costs can be optimized: pay-as-you-go and reserved instance pricing options, along with resource monitoring tools, help match spending to usage patterns.

Disadvantages Of Azure Databricks

The following are the disadvantages of Azure Databricks:

- It can be costly, particularly for smaller businesses or projects, because charges under the pay-as-you-go pricing model grow with usage.

- It can be complex to manage because it requires expertise in both Azure services and Databricks functionality.

- It is tightly integrated with Azure services, so users are tied to the Azure ecosystem, which limits flexibility and portability.

Conclusion

Azure Databricks provides tools that help us connect our data sources to one platform to process, store, share, analyze, model, and monetize datasets with solutions ranging from BI to generative AI. But there are some things to consider. Costs grow with the size of our workload, which matters especially for small setups or projects. Managing it also requires expertise in both Azure services and Databricks functionality: we need knowledge of the technical setup, scaling, monitoring, and troubleshooting.

However, Databricks offers a unified platform that brings data engineers, data scientists, and ML experts together for seamless collaboration, which can outweigh these potential drawbacks. It integrates seamlessly with other Azure services, and because the underlying infrastructure is managed for us, we don't have to worry about many maintenance tasks. Its distributed computing capabilities deliver high performance and fast processing of large data volumes for quicker insights. So we can conclude that it saves both time and effort for its users.

Azure Databricks Workspace FAQs

Can I Use Azure Key Vault To Store Keys/Secrets To Be Used In Azure Databricks?

Yes. You can use Azure Key Vault to store keys/secrets for use with Azure Databricks. For more information, see Azure Key Vault-backed scopes.
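A Key Vault-backed secret scope links a Databricks secret scope to an existing key vault. The following minimal sketch builds the request body for the Databricks Secrets API `POST /api/2.0/secrets/scopes/create` endpoint, assuming the `scope_backend_type` and `backend_azure_keyvault` fields described in the Secrets API reference; the function name and the vault, scope, and resource group values are hypothetical. It only builds the JSON body; sending the request, and the authentication it requires, are not shown.

```python
import json


def keyvault_scope_request(scope: str, subscription_id: str,
                           resource_group: str, vault_name: str) -> dict:
    """Request body for creating an Azure Key Vault-backed secret scope."""
    resource_id = (f"/subscriptions/{subscription_id}"
                   f"/resourceGroups/{resource_group}"
                   f"/providers/Microsoft.KeyVault/vaults/{vault_name}")
    return {
        "scope": scope,
        "scope_backend_type": "AZURE_KEYVAULT",
        "backend_azure_keyvault": {
            # Full ARM resource ID of the key vault.
            "resource_id": resource_id,
            # Vault URI, as shown on the key vault's Properties page.
            "dns_name": f"https://{vault_name}.vault.azure.net/",
        },
    }


body = keyvault_scope_request("kv-scope",
                              "00000000-0000-0000-0000-000000000000",
                              "my-rg", "my-vault")
print(json.dumps(body, indent=2))
```

Once the scope exists, notebook code reads a secret with `dbutils.secrets.get(scope="kv-scope", key="<secret-name>")`, and Databricks redacts secret values that appear in notebook output.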

Can I Use Azure Virtual Networks With Databricks?

Yes. You can use an Azure Virtual Network (VNET) with Azure Databricks. For more information, see Deploying Azure Databricks in your Azure Virtual Network.

How Do I Access Azure Data Lake Storage From A Notebook?

Follow these steps:

- In Microsoft Entra ID (formerly Azure Active Directory), provision a service principal, and record its key.

- Assign the necessary permissions to the service principal in Data Lake Storage.

- To access a file in Data Lake Storage, use the service principal credentials in your notebook.
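These steps correspond to a set of Spark configuration keys for OAuth access to Azure Data Lake Storage Gen2 through the ABFS driver. The following minimal sketch builds those keys as a plain dictionary, assuming a service principal that authenticates with a client secret; the function names, storage account, container, and IDs are hypothetical placeholders. In a notebook, you would read the secret from a secret scope and apply each pair with `spark.conf.set`, as the commented lines show.

```python
def adls_oauth_conf(storage_account: str, client_id: str,
                    client_secret: str, tenant_id: str) -> dict:
    """Spark settings for service-principal (OAuth) access to ADLS Gen2."""
    suffix = f"{storage_account}.dfs.core.windows.net"
    return {
        f"fs.azure.account.auth.type.{suffix}": "OAuth",
        f"fs.azure.account.oauth.provider.type.{suffix}":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        # Application (client) ID of the service principal.
        f"fs.azure.account.oauth2.client.id.{suffix}": client_id,
        f"fs.azure.account.oauth2.client.secret.{suffix}": client_secret,
        # Token endpoint of the Microsoft Entra ID tenant (directory).
        f"fs.azure.account.oauth2.client.endpoint.{suffix}":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


def abfss_path(container: str, storage_account: str, path: str = "") -> str:
    """Build an abfss:// URI for a file or folder in ADLS Gen2."""
    return (f"abfss://{container}@{storage_account}.dfs.core.windows.net/"
            f"{path.lstrip('/')}")


# In a Databricks notebook (dbutils and spark are provided there):
# secret = dbutils.secrets.get(scope="kv-scope", key="sp-secret")
# for key, value in adls_oauth_conf("mystorage", "<application-id>",
#                                   secret, "<directory-id>").items():
#     spark.conf.set(key, value)
# df = spark.read.csv(abfss_path("raw", "mystorage", "sales/2024.csv"),
#                     header=True)
```

Reading the secret from a secret scope, rather than pasting it into the notebook, keeps the credential out of source code and notebook revisions.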

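How Many Options Are There For Deploying Azure Databricks?

Databricks recommends deploying your first workspace with the Azure portal. In addition, Azure Databricks can be deployed by using the following options: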

- Azure CLI

- PowerShell

- ARM template

- Bicep

What Are The Prerequisites For Deploying An Azure Databricks Workspace?

You must be an Azure Contributor or Owner, or the Microsoft.ManagedIdentity resource provider must be registered in your subscription.
