An outlier is a data point that differs significantly from other data points. This difference can arise from many circumstances, such as an experimental error, a mistake, or a difference in measurements. In this article, we will review one type of outlier: global outliers.

In data analysis, it is essential to understand and recognize global outliers. Visual inspection plays a key role both in understanding the overall distribution of the data and in spotting outliers. Visualization also sheds light on how outliers may affect the relationship between the features and the target variable.

What is an outlier?

Data points in a dataset that substantially differ from the rest of the data are called outliers. Any kind of data, including time series, categorical, and numerical data, may contain them. It is crucial to understand and manage outliers properly, since they can significantly affect statistical analysis and machine learning models.

Reasons for Outliers

Outliers in a dataset can occur for several reasons.

- They can be caused by flaws in data collection, measurement errors, or inherent variability in the data.

- Events such as a sudden surge in website traffic or a sharp drop in a stock price can also produce outliers.

- Outliers can also result from changes in the underlying process being measured.

- Data entry errors, where wrong numbers are accidentally entered into the dataset, can also result in outliers.

Global Outlier

A global outlier (also referred to as a point anomaly) is a single data point or observation that is very different from the usual pattern of the dataset. For example, consider a scenario where 98 out of 100 scores lie between 200 and 350, but the remaining 2 points have values of 600 and 720. In this case, both points, and especially the one with a value of 720, stand out as potential global outliers.

Such data points usually stand out from the rest of the data: they sit at the extremes of the distribution, far away from the bulk of the observations.

Like any other outlier, a global outlier can skew the data distribution and degrade the performance of a machine learning model trained on the data. However, global outliers must be handled with care, because sometimes they are crucial for identifying a trend.

Global Outliers Detection Methods

There are several methods for detecting outliers. Some of them are as follows:

1. Distance-Based Outlier Detection

Distance-based outlier detection methods identify outliers in a dataset based on the distances between data points. These methods rely on the assumption that outliers are far away from the majority of the data points. Two distance measures commonly used for this are:

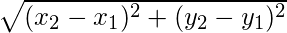

- Euclidean Distance

In two dimensions, the Euclidean distance between two points (x1, y1) and (x2, y2) is:

$$d = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2}$$

- Mahalanobis Distance

Another measure of distance is the Mahalanobis distance, which accounts for the correlation structure of the data. Given the mean vector $\mu$ and the covariance matrix $\Sigma$, the Mahalanobis distance between a data point $x$ and the mean vector $\mu$ is:

$$D_M(x) = \sqrt{(x - \mu)^\top \Sigma^{-1} (x - \mu)}$$
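To make the difference between the two measures concrete, here is a minimal NumPy sketch (not part of the original article) that measures each point's distance from the mean of the data. The helper names `euclidean_to_mean` and `mahalanobis_to_mean` and the synthetic, strongly correlated data are hypothetical, and the sketch assumes the covariance matrix is invertible.

```python
import numpy as np


def euclidean_to_mean(X):
    """Euclidean distance from every row of X to the column-wise mean."""
    mu = X.mean(axis=0)
    return np.sqrt(((X - mu) ** 2).sum(axis=1))


def mahalanobis_to_mean(X):
    """Mahalanobis distance sqrt((x - mu)^T Sigma^-1 (x - mu)) for every row of X."""
    mu = X.mean(axis=0)
    sigma = np.cov(X, rowvar=False)      # sample covariance matrix
    sigma_inv = np.linalg.inv(sigma)     # assumes the covariance is invertible
    diff = X - mu
    # Row-wise quadratic form: diff_i^T @ sigma_inv @ diff_i
    return np.sqrt(np.einsum("ij,jk,ik->i", diff, sigma_inv, diff))


# Hypothetical 2-D data in which y closely follows x (strong correlation)
x = np.linspace(-3, 3, 200)
y = x + 0.1 * np.sin(7 * x)
X = np.column_stack([x, y])
# One extra point near the centre that breaks the correlation pattern
X = np.vstack([X, [1.5, -1.5]])

d_euc = euclidean_to_mean(X)
d_mah = mahalanobis_to_mean(X)
print("Farthest point (Euclidean):  ", X[d_euc.argmax()])
print("Farthest point (Mahalanobis):", X[d_mah.argmax()])
```

In this data, the extra point at (1.5, -1.5) is not the farthest point in Euclidean terms (the ends of the diagonal are), but it has by far the largest Mahalanobis distance because it contradicts the correlation between the two features.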

2. Z-Scores

Global outliers can also be found with a variety of statistical techniques. One method uses z-scores, which measure how many standard deviations a data point lies from the dataset mean. The z-score of a data point x is:

$$z = \frac{x - \mu}{\sigma}$$

where $\mu$ is the dataset mean, $\sigma$ is the standard deviation, and $x$ is the data point. Data points whose z-score exceeds a chosen threshold in absolute value (commonly 3, i.e., z > 3 or z < -3) are treated as outliers.
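A minimal sketch of the z-score rule in NumPy, applied to the hypothetical score scenario from the global-outlier example (98 scores spread evenly between 200 and 350, plus 600 and 720). The helper name `zscore_outliers` is illustrative; it uses the population standard deviation (ddof=0), which is also the default in `scipy.stats.zscore`.

```python
import numpy as np


def zscore_outliers(values, threshold=3.0):
    """Return the values whose absolute z-score exceeds `threshold`."""
    values = np.asarray(values, dtype=float)
    z = (values - values.mean()) / values.std()  # population std (ddof=0)
    return values[np.abs(z) > threshold]


# Hypothetical scores: 98 values spread evenly between 200 and 350,
# plus the two extreme scores from the example.
scores = np.concatenate([np.linspace(200, 350, 98), [600, 720]])
print(zscore_outliers(scores))
```

With these numbers, 600 and 720 are the only values whose absolute z-score is above 3. Keep in mind that outliers inflate the mean and standard deviation they are measured against, so in small samples the z-score rule can miss them.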

3. Interquartile Range (IQR)

Another method uses the interquartile range (IQR), which measures the spread of the middle 50% of the data.

- The IQR is the difference between the data's 75th percentile (Q3) and 25th percentile (Q1): IQR = Q3 - Q1.

- Data points that fall below Q1 - 1.5 × IQR or above Q3 + 1.5 × IQR are commonly treated as outliers.
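The same rule can be sketched in NumPy on the same hypothetical scores. The helper name `iqr_outliers` is illustrative; `np.percentile` uses linear interpolation by default, so Q1 and Q3 may differ slightly from other quartile conventions.

```python
import numpy as np


def iqr_outliers(values, k=1.5):
    """Return the values lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    values = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(values, [25, 75])
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return values[(values < lower) | (values > upper)]


# Hypothetical scores: 98 values between 200 and 350 plus two extremes
scores = np.concatenate([np.linspace(200, 350, 98), [600, 720]])
print(iqr_outliers(scores))
```

Here Q1 is about 238 and Q3 about 315, so the upper bound is roughly 430, and again only 600 and 720 are flagged.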

Managing Global Outliers

When global outliers are found, there are various methods for managing them. One strategy is to remove the outliers from the dataset, but care must be taken not to eliminate legitimate data points. Another strategy is to transform the data, for example by winsorizing, which replaces extreme values with less extreme ones, or by applying a log transformation, which compresses large values. Both can lessen the effect of outliers on statistical analysis and machine learning models.
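A minimal sketch of both transformations in NumPy, again on the hypothetical scores. Clipping at the 5th and 95th percentiles is one simple form of winsorizing; the percentile limits and the helper name `winsorize_percentiles` are assumptions, and SciPy also provides `scipy.stats.mstats.winsorize` for the same purpose. `np.log1p` computes log(1 + x), which is only defined for values greater than -1.

```python
import numpy as np


def winsorize_percentiles(values, lower_pct=5, upper_pct=95):
    """Clip values to the given lower/upper percentiles (simple winsorizing)."""
    values = np.asarray(values, dtype=float)
    lo, hi = np.percentile(values, [lower_pct, upper_pct])
    return np.clip(values, lo, hi)


# Hypothetical scores: 98 values between 200 and 350 plus two extremes
scores = np.concatenate([np.linspace(200, 350, 98), [600, 720]])

winsorized = winsorize_percentiles(scores)   # 600 and 720 pulled down to the 95th percentile
log_scores = np.log1p(scores)                # log(1 + x) compresses large values

print("max before/after winsorizing:", scores.max(), winsorized.max())
print("max/min ratio before/after log:",
      scores.max() / scores.min(), log_scores.max() / log_scores.min())
```

Winsorizing keeps every row but caps its value, while the log transform keeps the ordering of the values and shrinks the gap between the largest and smallest ones.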

Importance of Detecting Outliers

Machine learning models and statistical analysis are susceptible to major disruptions from outliers. In statistical analysis, for instance, anomalies have the potential to distort the mean and standard deviation, resulting in imprecise estimations of central tendency and variability. Outliers in machine learning models can skew the findings by exerting an excessive amount of influence on the model’s predictions.
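A tiny worked example with made-up numbers shows this effect on central tendency and spread: adding one extreme value moves the mean and standard deviation a lot, while the median stays the same.

```python
import numpy as np

clean = np.array([10, 12, 11, 13, 12], dtype=float)
with_outlier = np.append(clean, 250.0)  # one hypothetical global outlier

for name, data in [("clean", clean), ("with outlier", with_outlier)]:
    print(f"{name:>12}: mean={data.mean():.2f}  "
          f"median={np.median(data):.2f}  std={data.std():.2f}")
```

Here the median stays at 12, while the mean jumps from 11.6 to about 51.3 and the (population) standard deviation from about 1.0 to about 88.9.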

Outlier detection is an important step in identifying the patterns and the "story" a dataset holds. Some of the reasons it matters are as follows:

- Assuring data quality: Outliers in a dataset can indicate measurement errors or rare events. Identifying and addressing them helps maintain data integrity and quality.

- Model performance: Global outliers can have a significant impact on model performance. They can reduce the effectiveness of predictive models and lead to poor generalization. Identifying them helps produce more accurate results.

- Inference and hypothesis testing: Detecting outliers helps avoid incorrect hypotheses about the data and supports the validity of our inferences.

- Domain-specific insights: In many industries, outliers represent rare events that can improve pattern recognition and trend analysis. For example, outliers play an important role in risk assessment for trading and financial modeling.

Implementation of Global Outlier Detection

Let's illustrate this with an example.

We will do a demonstration on the California Housing dataset. First, we load the necessary libraries.

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.datasets import fetch_california_housing
```

The code above imports numpy, pandas, and matplotlib for data manipulation and visualization. It also imports the fetch_california_housing function from the sklearn.datasets module, which loads the California housing dataset for further analysis and modeling.

Loading Dataset

After loading the libraries, we load the California housing dataset and call describe() on it. describe() is a quick way to spot anomalies, since it reports measures of central tendency (mean, median) and spread (standard deviation, quartiles, minimum, and maximum) for every column.

```python
# Features as a DataFrame X, target (median house value) as a Series y
X, y = fetch_california_housing(return_X_y=True, as_frame=True)
X.describe()
```

Output:

```
             MedInc      HouseAge      AveRooms     AveBedrms    Population  \
count  20640.000000  20640.000000  20640.000000  20640.000000  20640.000000
mean       3.870671     28.639486      5.429000      1.096675   1425.476744
std        1.899822     12.585558      2.474173      0.473911   1132.462122
min        0.499900      1.000000      0.846154      0.333333      3.000000
25%        2.563400     18.000000      4.440716      1.006079    787.000000
50%        3.534800     29.000000      5.229129      1.048780   1166.000000
75%        4.743250     37.000000      6.052381      1.099526   1725.000000
max       15.000100     52.000000    141.909091     34.066667  35682.000000

           AveOccup      Latitude     Longitude
count  20640.000000  20640.000000  20640.000000
mean       3.070655     35.631861   -119.569704
std       10.386050      2.135952      2.003532
min        0.692308     32.540000   -124.350000
25%        2.429741     33.930000   -121.800000
50%        2.818116     34.260000   -118.490000
75%        3.282261     37.710000   -118.010000
max     1243.333333     41.950000   -114.310000
```

- The code loads the California housing dataset with the fetch_california_housing function from the sklearn.datasets module. With return_X_y=True the function returns a (features, target) tuple, and with as_frame=True the features are returned as a pandas DataFrame and the target as a pandas Series.

- The features are stored in the DataFrame X and the target variable in the Series y.

- Calling X.describe() then summarizes each feature with its count, mean, standard deviation, minimum, quartiles, and maximum.

This summary gives a preliminary picture of the distribution of each feature, which helps identify possible outliers and evaluate the general characteristics of the data.

The describe() output reveals a few notable facts about the dataset:

- The "AveRooms" column has a maximum of 141.909091, whereas its 75th percentile and mean are only about 6.05 and 5.43, respectively.

- The same pattern appears for "AveBedrms" and "AveOccup", whose maximums (34.07 and 1243.33) are far above both their 75th percentiles (1.10 and 3.28) and their means (1.10 and 3.07).

It is therefore reasonable to suspect that these columns contain global outliers.

Using Plots and Visualizations to detect global outliers

Summary statistics from describe() are not strong evidence on their own, so we will also plot each column against the target. We use all columns except "Latitude" and "Longitude", since the geographic coordinates are not relevant for this comparison.

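```python
# All feature columns except the last two (Latitude, Longitude)
cols = X.columns.tolist()[:-2]

fig, ax = plt.subplots(2, 3, figsize=(9, 7))
ax = ax.ravel()  # flatten the 2x3 grid of axes into a 1-D array

for i, k in enumerate(cols):
    ax[i].scatter(y, X[k])
    ax[i].set_title(f"{k} vs Price")
    # The target is expressed in units of $100,000
    ax[i].set_xlabel("Median house value ($100,000s)")
    ax[i].set_ylabel(k)

plt.tight_layout()
plt.show()
```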

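Output:

[Figure: scatter plots of MedInc, HouseAge, AveRooms, AveBedrms, Population, and AveOccup against the median house value]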

- A scatter plot is made for each selected column against the target variable y, which represents the median house value for California districts.

- Subplots organize the plots in a 2×3 grid, and a for loop draws one scatter plot per column. Each plot is titled "<column> vs Price", and its x- and y-axis labels are set accordingly.

- Finally, tight_layout() adjusts the spacing between the subplots and plt.show() displays the figure. Together, the plots show visually how each feature relates to the target variable in the California housing dataset.

In the plots above, we can clearly see that some data points lie far from the rest. This agrees with the describe() analysis, where AveBedrms, AveOccup, and AveRooms showed a large gap between their means and upper quartiles on the one hand and their maximums on the other.

This does not necessarily mean that they are observational mistakes. Because these features are per-household averages for a district, an extreme value could come from a district with very few households but many rooms or bedrooms (for example, mansions, or vacation resorts with many empty homes), or with many occupants per household (for example, a hostel, or servants living in the same place). Such cases would need further investigation. Until then, we can label these points as global outliers.

Outlier Detection Using Boxplot

```python
import seaborn as sns

# Every feature except the geographic coordinates
selected_columns = [col for col in X.columns if col not in ['Latitude', 'Longitude']]

plt.figure(figsize=(16, 10))
for i, col in enumerate(selected_columns, start=1):
    plt.subplot(3, 3, i)
    sns.boxplot(y=X[col], width=0.4, color='skyblue')
    plt.xlabel(col)
    plt.title(f'Boxplot for {col}')

plt.tight_layout()
plt.show()
```

Output:

[Figure: Box Plot, one box plot per selected feature]

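Here, we visualize the outliers using box plots. Points beyond the whiskers, which by default reach at most 1.5 × IQR beyond the quartiles, are drawn individually as potential outliers.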

Outlier detection using Z-Score

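```python
from scipy import stats

z_scores_all_columns = np.abs(stats.zscore(X))        # |z| of every value, column by column
outlier_indices = np.where(z_scores_all_columns > 3)  # (row, column) positions with |z| > 3
```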

- This snippet applies z-score-based outlier detection to the California housing dataset.

- Using the stats.zscore function from SciPy, the z-score is calculated for every value of each feature in the input dataset (X).

- A value is treated as an outlier when its absolute z-score is greater than 3.

```python
# Keep only the rows whose |z| is at most 3 in every column
mask = (z_scores_all_columns <= 3).all(axis=1)
X_no_outliers = X[mask]
y_no_outliers = y[mask]

cols_no_outliers = X_no_outliers.columns.tolist()
num_cols = len(cols_no_outliers)
num_rows = (num_cols - 1) // 3 + 1  # three plots per row

fig, ax = plt.subplots(num_rows, 3, figsize=(12, 3 * num_rows))
ax = ax.ravel()

for i, k in enumerate(cols_no_outliers):
    ax[i].scatter(X_no_outliers[k], y_no_outliers)
    ax[i].set_title(f"{k} vs Price (No Outliers)")
    ax[i].set_xlabel(k)
    ax[i].set_ylabel("Median house value ($100,000s)")

# Remove the unused axes in the last row
for j in range(num_cols, len(ax)):
    fig.delaxes(ax[j])

plt.tight_layout()
plt.show()
```

Output:

[Figure: scatter plots of each feature against the median house value after removing z-score outliers]

- After the rows containing z-score outliers are removed, each feature is plotted against the target variable (y) in a scatter plot to show the distribution of the remaining data.

- Removing extreme values reduces their influence on the overall patterns in the data. Note that a row is dropped if any of its columns has an absolute z-score above 3, so a single extreme feature is enough to discard the whole row.
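To see exactly what the filter `(z_scores_all_columns <= 3).all(axis=1)` does, here is a small pandas sketch on a hypothetical DataFrame rather than the housing data. It computes z-scores with pandas instead of SciPy, using the population standard deviation (ddof=0) to match the default of `scipy.stats.zscore`.

```python
import pandas as pd

# Hypothetical data: column "a" has one extreme value in its last row
df = pd.DataFrame({
    "a": list(range(20)) + [1000],
    "b": list(range(20)) + [5],
})

# Same z-score as scipy.stats.zscore's default (population std, ddof=0)
z = (df - df.mean()) / df.std(ddof=0)

# Keep a row only if every column has |z| <= 3
mask = (z.abs() <= 3).all(axis=1)
df_clean = df[mask]
print(f"kept {mask.sum()} of {len(df)} rows")
```

Only the last row is dropped: its value of 1000 in column `a` has a z-score of about 4.5, even though its value in column `b` is ordinary.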

Outlier detection using IQR (Interquartile Range)

```python
outliers_by_column = {}
selected_columns = [col for col in X.columns if col not in ['Latitude', 'Longitude']]

for col in selected_columns:
    # Quartiles; `method` replaces the deprecated `interpolation` argument (NumPy >= 1.22)
    Q3 = np.percentile(X[col], 75, method='midpoint')
    Q1 = np.percentile(X[col], 25, method='midpoint')
    IQR = Q3 - Q1

    upper_bound = Q3 + 1.5 * IQR
    lower_bound = Q1 - 1.5 * IQR

    # Values above the upper bound or below the lower bound
    outliers_upper = X[X[col] > upper_bound]
    outliers_lower = X[X[col] < lower_bound]
    outliers_by_column[col] = {'upper': outliers_upper[col].tolist(),
                               'lower': outliers_lower[col].tolist()}

    print(f"\nResults for '{col}' column:")
    print("IQR:", IQR)
    print("Upper Bound:", upper_bound)
    print("Lower Bound:", lower_bound)
```

Output:

```
Results for 'MedInc' column:
IQR: 2.1802999999999995
Upper Bound: 8.013849999999998
Lower Bound: -0.7073499999999995

Results for 'HouseAge' column:
IQR: 19.0
Upper Bound: 65.5
Lower Bound: -10.5

Results for 'AveRooms' column:
IQR: 1.6116969213354757
Upper Bound: 8.469926334384166
Lower Bound: 2.023138649042263

Results for 'AveBedrms' column:
IQR: 0.0934531669715235
Upper Bound: 1.2397058168079962
Lower Bound: 0.8658931489219022

Results for 'Population' column:
IQR: 938.0
Upper Bound: 3132.0
Lower Bound: -620.0

Results for 'AveOccup' column:
IQR: 0.8525687229665166
Upper Bound: 4.561116868480889
Lower Bound: 1.1508419766148226
```

- This code uses the interquartile range (IQR) method to detect outliers in the California housing dataset.

- For every selected feature in the dataset (X), the first quartile (Q1), the third quartile (Q3), and the IQR are calculated. A value is an outlier if it lies more than 1.5 × IQR below Q1 or more than 1.5 × IQR above Q3.

- The outlying values for each column are stored in outliers_by_column, split into those above the upper bound and those below the lower bound.
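The loop above only records the outlying values per column. If the goal is to drop those rows, as was done for the z-score method, one option is a row mask built from per-column bounds. This is a sketch on a hypothetical DataFrame; note that `DataFrame.quantile` uses linear interpolation by default rather than the midpoint rule used above.

```python
import pandas as pd

# Hypothetical data: column "a" has one extreme value
df = pd.DataFrame({
    "a": [1, 2, 3, 4, 5, 6, 7, 8, 9, 100],
    "b": [10, 11, 12, 13, 14, 15, 16, 17, 18, 19],
})

q1 = df.quantile(0.25)   # per-column first quartile (linear interpolation)
q3 = df.quantile(0.75)   # per-column third quartile
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr

# Comparing a DataFrame with a Series aligns on column names
mask = ((df >= lower) & (df <= upper)).all(axis=1)
df_clean = df[mask]
print(df_clean)
```

Only the row with a = 100 falls outside its column's bounds (-3.5 to 14.5), so nine rows remain.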

Conclusion

Global outliers can have a big effect on how a dataset is interpreted and modeled, so they must be recognized and managed for statistical studies and machine learning models to be accurate and reliable. In this article, scatter plots of each feature against the target variable (the median house value) in the California housing dataset gave a clear visual picture of how individual features relate to house prices, while box plots, z-scores, and the IQR offered complementary ways to flag extreme values.

This kind of visual examination is key to finding possible outliers and understanding the overall distribution of the data. It also sheds light on how outliers might affect the relationship between the features and the target variable, which helps in making defensible choices about how to handle global outliers.
