Padding is a technique used in convolutional neural networks (CNNs) to preserve the spatial dimensions of the input data and prevent the loss of information at the edges of the image. It involves adding additional rows and columns of pixels around the edges of the input data. There are several different ways to apply padding in Python, depending on the type of padding being used.

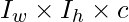

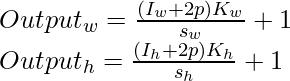

Suppose an image of dimension $n_h \times n_w$ is filtered with an $f_h \times f_w$ kernel with stride $(s_h, s_w)$. Then the output shape will be:

$$\left(\left\lfloor \frac{n_h - f_h}{s_h} \right\rfloor + 1\right) \times \left(\left\lfloor \frac{n_w - f_w}{s_w} \right\rfloor + 1\right) \times C$$

Where, $C$ = number of filters, which is also the number of output channels.
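As a quick check of this rule, here is a minimal pure-Python sketch. The helper name conv_output_size is hypothetical (it is not a TensorFlow function), and it assumes that no padding is applied.

```python
def conv_output_size(n, f, s):
    """Output length along one axis for input length n, kernel length f and
    stride s, with no padding (hypothetical helper, not a library call)."""
    if f > n:
        raise ValueError("kernel is larger than the input")
    return (n - f) // s + 1


# 28x28 input, 3x3 kernel, stride 2 in both directions
height = conv_output_size(28, 3, 2)
width = conv_output_size(28, 3, 2)
print(height, width)  # 13 13
```

The same helper is applied once per spatial dimension, so a non-square input or kernel only needs two calls with different arguments.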

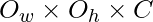

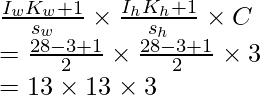

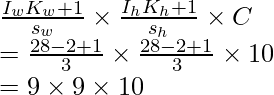

For example, when an image is filtered with a kernel using a stride length of 2, each spatial dimension of the output is roughly half that of the input.

So, the image shrinks when the convolution is performed.

The corner and edge pixels also do not participate equally: a corner pixel is covered by only one kernel position, while a pixel near the center is covered by many, as we can see from the figure.
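To make the unequal participation concrete, the following sketch counts how many kernel positions touch each pixel. The function name coverage_counts is made up for this illustration, and a square image with no padding is assumed.

```python
def coverage_counts(n, f, s=1):
    """Count how many kernel positions cover each pixel of an n x n image
    when an f x f kernel slides with stride s and no padding."""
    counts = [[0] * n for _ in range(n)]
    for top in range(0, n - f + 1, s):
        for left in range(0, n - f + 1, s):
            for r in range(top, top + f):
                for c in range(left, left + f):
                    counts[r][c] += 1
    return counts


for row in coverage_counts(5, 3):
    print(row)
# [1, 2, 3, 2, 1]
# [2, 4, 6, 4, 2]
# [3, 6, 9, 6, 3]
# [2, 4, 6, 4, 2]
# [1, 2, 3, 2, 1]
```

In this 5×5 case the center pixel contributes to nine outputs while each corner contributes to only one.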

Padding

To resolve this, we add extra layers of zeros around the border of the image. This is known as same padding.
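A minimal sketch of zero padding on a plain 2D list is shown here. The helper zero_pad is hypothetical and only illustrates the idea; frameworks apply the padding internally.

```python
def zero_pad(image, p):
    """Return a copy of a 2D list with p layers of zeros on every side."""
    width = len(image[0]) + 2 * p
    padded = [[0] * width for _ in range(p)]          # top border
    for row in image:
        padded.append([0] * p + list(row) + [0] * p)  # left and right borders
    padded.extend([0] * width for _ in range(p))      # bottom border
    return padded


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
for row in zero_pad(image, 1):
    print(row)
# [0, 0, 0, 0, 0]
# [0, 1, 2, 3, 0]
# [0, 4, 5, 6, 0]
# [0, 7, 8, 9, 0]
# [0, 0, 0, 0, 0]
```

A 3×3 kernel moved with stride 1 over this padded 5×5 grid fits in 3×3 positions, which is the size of the original image.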

Pooling

Pooling is a technique used in convolutional neural networks (CNNs) to reduce the dimensionality of the input while retaining important features. There are several types of pooling operations, such as max pooling, average pooling, and sum pooling. Max pooling is the most commonly used type of pooling in CNNs.

In a CNN, the pooling layer is typically inserted between the convolutional layer and the fully connected layer. The purpose of the pooling layer is to reduce the spatial size of the input, which helps to reduce the number of parameters and computational complexity of the model. It also helps to reduce overfitting by reducing the sensitivity of the model to small translations in the input.

Max pooling

Max pooling works by sliding a window over the input and taking the maximum value from each window position. When the stride equals the window size the windows do not overlap; with a smaller stride they do. For example, if the input has a size of [5, 5], the pooling window has a size of [3, 3], and the stride is 2 with no padding, the max pooling operation takes the maximum value from each 3×3 window of the input, resulting in an output with a size of [2, 2].
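The [5, 5] example can be reproduced without any framework. The sketch below assumes a 3×3 window, a stride of 2 and no padding; max_pool_valid is a hypothetical helper written for this illustration.

```python
def max_pool_valid(matrix, k, s):
    """Max pooling over a 2D list with a k x k window, stride s, no padding."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    out = []
    for top in range(0, n_rows - k + 1, s):
        out_row = []
        for left in range(0, n_cols - k + 1, s):
            # Collect every value inside the current window
            window = [matrix[r][c]
                      for r in range(top, top + k)
                      for c in range(left, left + k)]
            out_row.append(max(window))
        out.append(out_row)
    return out


# 5x5 matrix holding 1..25, row by row
grid = [[5 * r + c + 1 for c in range(5)] for r in range(5)]
print(max_pool_valid(grid, k=3, s=2))  # [[13, 15], [23, 25]]
```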

Types of padding:

- Valid Padding

- Same Padding

Valid Padding:

Valid padding is a technique used in convolutional neural networks (CNNs) to process the input data without adding any additional rows or columns of pixels around the edges of the data. This means that the size of the output feature map is smaller than the size of the input data. Valid padding is used when it is desired to reduce the size of the output feature map in order to reduce the number of parameters in the model and improve its computational efficiency.

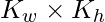

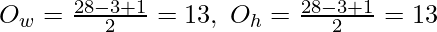

Formula to find the output shape of valid padding:

$$\left(\left\lfloor \frac{n_h - f_h}{s_h} \right\rfloor + 1\right) \times \left(\left\lfloor \frac{n_w - f_w}{s_w} \right\rfloor + 1\right)$$

Where,

$n_h, n_w$ = Number of rows and columns of input data,

$f_h, f_w$ = Filter kernel dimensions,

$s_h, s_w$ = Stride

Here is an example of how to create a convolutional layer with valid padding and a stride of 2 in the vertical and horizontal dimensions:

Python3

    import tensorflow as tf
    from tensorflow.keras.layers import Conv2D

    # One 28x28 RGB image in (batch, height, width, channels) layout
    input_shape = (1, 28, 28, 3)
    x = tf.random.normal(input_shape)

    # The layer infers its input shape when it is called on x
    y = Conv2D(filters=3,
               kernel_size=(3, 3),
               strides=(2, 2),
               padding='valid',
               data_format='channels_last')(x)

    print('Output shape :', y.shape[1:])

Output:

    Output shape : (13, 13, 3)

In the output, channels will be equal to the filters i.e c = 3

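Let’s calculate the output shape with the formula:

$$\left\lfloor \frac{28 - 3}{2} \right\rfloor + 1 = \lfloor 12.5 \rfloor + 1 = 13$$

The same value applies to both height and width, so the output is 13 × 13 × 3.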

You can also change the kernel size and the stride. The strides tuple holds the vertical and horizontal stride, so the two values may differ. This example uses a 2 × 2 kernel with a stride of 3 in both dimensions:

Python3

    import tensorflow as tf
    from tensorflow.keras.layers import Conv2D

    input_shape = (1, 28, 28, 3)
    x = tf.random.normal(input_shape)

    # 10 filters, 2x2 kernel, stride 3 in both directions
    y = Conv2D(filters=10,
               kernel_size=(2, 2),
               strides=(3, 3),
               padding='valid',
               data_format='channels_last')(x)

    print('Output shape :', y.shape[1:])

Output:

    Output shape : (9, 9, 10)

In the output, channels will be equal to the filters i.e c = 10

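Let’s calculate the output shape with the formula:

$$\left\lfloor \frac{28 - 2}{3} \right\rfloor + 1 = \lfloor 8.67 \rfloor + 1 = 9$$

The same value applies to both height and width, so the output is 9 × 9 × 10.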

When valid padding is used, the output feature map is smaller than the input data because the filters do not extend beyond the edges of the input data. This can result in the loss of information at the edges of the image, which can affect the performance of the CNN. Valid padding is also known as no padding because no padding is applied to the input data before the convolution operation.

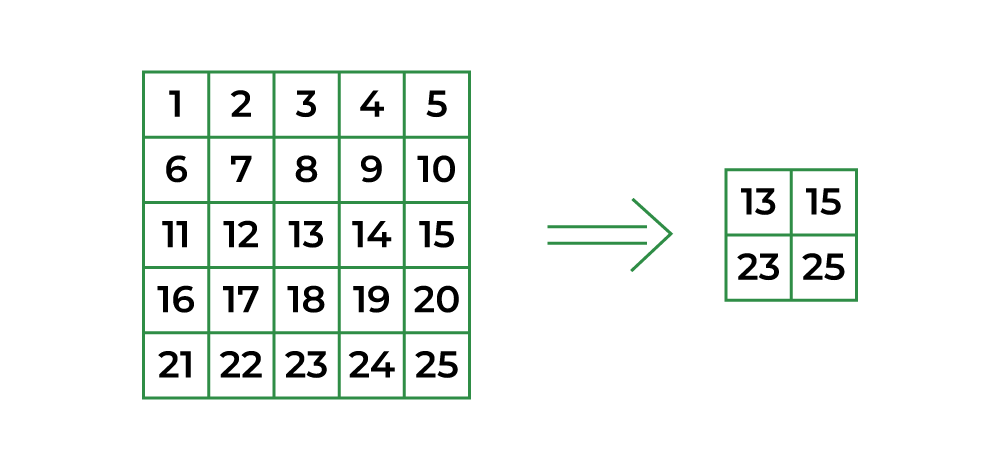

Let’s try with tf.nn.max_pool in TensorFlow to perform max pooling with valid padding and stride 2

Valid Padding

Python3

    import tensorflow as tf

    # 5x5 matrix holding the integers 1 to 25
    input_tensor = tf.constant([
        [1, 2, 3, 4, 5],
        [6, 7, 8, 9, 10],
        [11, 12, 13, 14, 15],
        [16, 17, 18, 19, 20],
        [21, 22, 23, 24, 25]
    ])

    # Add batch and channel axes: (5, 5) -> (1, 5, 5, 1)
    input_tensor = tf.expand_dims(input_tensor, axis=0)
    input_tensor = tf.expand_dims(input_tensor, axis=3)
    print('Input Tensor shape:', input_tensor.shape)

    output_tensor = tf.nn.max_pool(input=input_tensor,
                                   ksize=[1, 3, 3, 1],
                                   strides=2,
                                   padding='VALID')
    print('\noutput_tensor :\n', output_tensor)

Output:

    Input Tensor shape: (1, 5, 5, 1)

    output_tensor :
    tf.Tensor(
    [[[[13]
       [15]]

      [[23]
       [25]]]], shape=(1, 2, 2, 1), dtype=int32)

Code Explanation

In this code, a 5×5 input tensor is created using the tf.constant function. This input tensor represents a 2D array with 5 rows and 5 columns, and its values are set to the integers from 1 to 25.

Next, the input tensor is reshaped to [batch_size, height, width, channels] using the tf.expand_dims function. The axis parameter specifies the position at which a new dimension of size 1 is inserted. In this case, the input tensor is expanded at axis 0 (batch) and axis 3 (channels), resulting in an input tensor with shape [1, 5, 5, 1].

Then, the tf.nn.max_pool function is used to perform max pooling on the input tensor. The function takes in the input tensor, the size of the pooling window (ksize), the stride of the sliding window (strides), and the type of padding to use (padding). In this example, the pooling window has a size of [1, 3, 3, 1], which means that the window will be 3×3. The stride is set to 2 or [1, 2, 2, 1], which means that the window will move 2 units in both the height and width directions. The padding is set to ‘VALID’, which means that no padding will be used.

Finally, the output tensor is printed using the print function. The output tensor will have a shape of [1, 2, 2, 1], and it will contain the maximum values from each 3×3 window of the input tensor.
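To see why a 5×5 input with a 3×3 window and stride 2 yields only two positions per axis, the window start indices can be listed directly. This is a small sketch; window_starts is a hypothetical helper and assumes no padding.

```python
def window_starts(n, k, s):
    """Start indices of every k-wide window that fits in n cells with
    stride s when no padding is used."""
    return list(range(0, n - k + 1, s))


print(window_starts(5, 3, 2))  # [0, 2]
print(window_starts(5, 3, 1))  # [0, 1, 2]
```

With stride 2 the windows start at rows (and columns) 0 and 2, so their bottom-right corners hold 13, 15, 23 and 25, which are the maxima in the output above.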

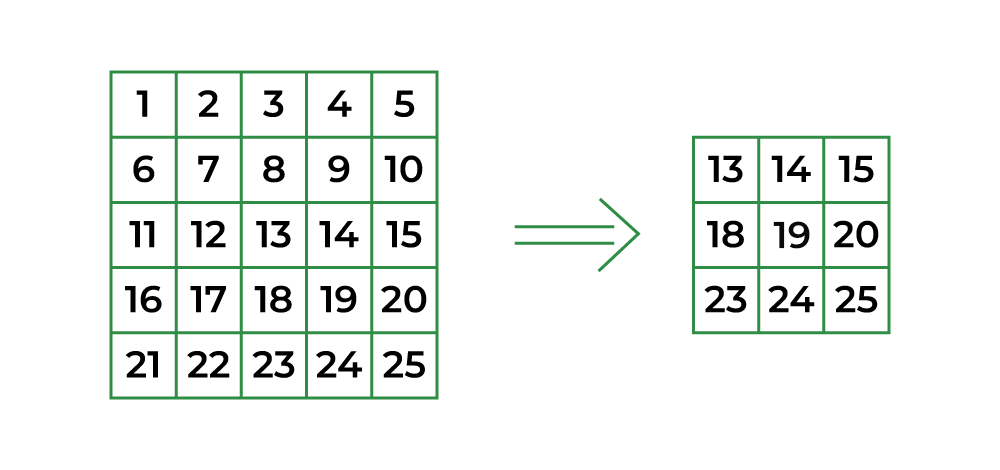

Here is an example of using tf.nn.max_pool in TensorFlow to perform max pooling with valid padding and stride 1

Valid Padding Stride = 1

Python3

    import tensorflow as tf

    input_tensor = tf.constant([
        [1, 2, 3, 4, 5],
        [6, 7, 8, 9, 10],
        [11, 12, 13, 14, 15],
        [16, 17, 18, 19, 20],
        [21, 22, 23, 24, 25]
    ])

    # Add batch and channel axes: (5, 5) -> (1, 5, 5, 1)
    input_tensor = tf.expand_dims(input_tensor, axis=0)
    input_tensor = tf.expand_dims(input_tensor, axis=3)
    print('Input Tensor shape:', input_tensor.shape)

    output_tensor = tf.nn.max_pool(input=input_tensor,
                                   ksize=[1, 3, 3, 1],
                                   strides=[1, 1, 1, 1],
                                   padding='VALID')
    print('\noutput_tensor :\n', output_tensor)

Output:

    Input Tensor shape: (1, 5, 5, 1)

    output_tensor :
    tf.Tensor(
    [[[[13]
       [14]
       [15]]

      [[18]
       [19]
       [20]]

      [[23]
       [24]
       [25]]]], shape=(1, 3, 3, 1), dtype=int32)

With a kernel size of (3,3) and a stride of 1, the pooling window will move 1 unit in both the height and width directions. This will result in an output tensor with a shape of [1, 3, 3, 1], and it will contain the maximum values from each 3×3 window of the input tensor.

Same Padding:

Same padding is another technique used in convolutional neural networks (CNNs) to process the input data. Unlike valid padding, same padding adds additional rows and columns of pixels around the edges of the input data so that, with a stride of 1, the size of the output feature map is the same as the size of the input data. This is achieved by adding rows and columns of pixels with a value of zero around the edges of the input data before the convolution operation.

Same padding is implemented in CNNs by specifying the ‘same’ option when creating a convolutional layer, for example with the Conv2D layer of the Keras library in Python.

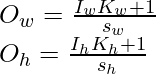

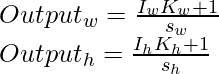

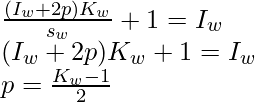

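The formula for calculating the output shape for the same padding:

$$\left(\left\lfloor \frac{n_h + 2p - f_h}{s_h} \right\rfloor + 1\right) \times \left(\left\lfloor \frac{n_w + 2p - f_w}{s_w} \right\rfloor + 1\right)$$

Where,

$n_h, n_w$ = Number of rows and columns of input image.

$f_h, f_w$ = Filter kernel dimensions.

$s_h, s_w$ = Strides

$p$ = Number of layers of zeros added on each side.

For same output shape: stride = 1 and $p = \frac{f - 1}{2}$, where $f = f_h = f_w$ is the side of a square kernel with odd size.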
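The condition for an unchanged output size can be checked numerically. The sketch below uses two hypothetical helpers and assumes a square kernel with an odd side length and the same number of zero layers on every side.

```python
def padded_output_size(n, f, s, p):
    """Output length along one axis with p zeros added on each side."""
    return (n + 2 * p - f) // s + 1


def same_padding_layers(f):
    """Zero layers per side that keep the size unchanged at stride 1.
    Assumes an odd kernel length f."""
    if f % 2 == 0:
        raise ValueError("this sketch only handles odd kernel sizes")
    return (f - 1) // 2


for f in (3, 5, 7):
    p = same_padding_layers(f)
    print(f, p, padded_output_size(28, f, 1, p))
# 3 1 28
# 5 2 28
# 7 3 28
```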

Python3

    import tensorflow as tf
    from tensorflow.keras.layers import Conv2D

    input_shape = (1, 28, 28, 3)
    x = tf.random.normal(input_shape)

    # 'same' padding with stride 1 keeps the 28x28 spatial size
    y = Conv2D(filters=3,
               kernel_size=(3, 3),
               strides=1,
               padding='same',
               data_format='channels_last')(x)

    print('Output shape :', y.shape[1:])

Output:

    Output shape : (28, 28, 3)

In the output, channels will be equal to the filters i.e c = 3

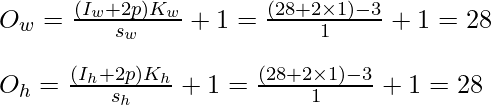

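Let’s calculate the output shape with the formula. With a 3 × 3 kernel and a stride of 1, one layer of zeros (p = 1) is added on each side:

$$\left\lfloor \frac{28 + 2 \cdot 1 - 3}{1} \right\rfloor + 1 = 27 + 1 = 28$$

The same value applies to both height and width, so the output is 28 × 28 × 3.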

You can specify different stride values for the vertical and horizontal dimensions by providing a tuple with different values. For example:

Python3

    import tensorflow as tf
    from tensorflow.keras.layers import Conv2D

    input_shape = (1, 28, 28, 3)
    x = tf.random.normal(input_shape)

    # Stride 1 vertically and stride 3 horizontally
    y = Conv2D(filters=10,
               kernel_size=(3, 3),
               strides=(1, 3),
               padding='same',
               data_format='channels_last')(x)

    print('Output shape :', y.shape[1:])

Output:

    Output shape : (28, 10, 10)

In the output, channels will be equal to the filters i.e c = 10

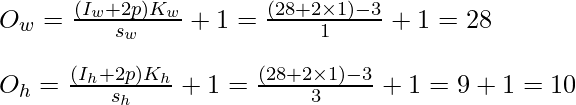

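Let’s calculate the output shape with the formula. Here one layer of zeros (p = 1) is added on each side, the vertical stride is 1 and the horizontal stride is 3:

$$\text{height} = \left\lfloor \frac{28 + 2 \cdot 1 - 3}{1} \right\rfloor + 1 = 28, \qquad \text{width} = \left\lfloor \frac{28 + 2 \cdot 1 - 3}{3} \right\rfloor + 1 = 9 + 1 = 10$$

So the output is 28 × 10 × 10.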

This would create a convolutional layer with a stride of 1 in the vertical dimension and a stride of 3 in the horizontal dimension.
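For strides larger than 1, TensorFlow documents the output length of 'same' padding as ceil(n / s) and pads just enough cells to reach it, putting any odd extra cell on the trailing side. The following is a minimal sketch of that rule; the function name same_padding_1d is hypothetical.

```python
import math


def same_padding_1d(n, f, s):
    """Return (output length, pad before, pad after) along one axis for
    'same'-style padding with input length n, kernel length f, stride s."""
    out = math.ceil(n / s)
    pad_total = max((out - 1) * s + f - n, 0)
    pad_before = pad_total // 2
    pad_after = pad_total - pad_before
    return out, pad_before, pad_after


print(same_padding_1d(28, 3, 1))  # (28, 1, 1)  vertical axis
print(same_padding_1d(28, 3, 3))  # (10, 1, 1)  horizontal axis
```

Both axes end up with one padded cell on each side, which gives the 28 × 10 spatial size printed above.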

When same padding is used with a stride of 1, the output feature map has the same size as the input data because the filters are applied to the input data with additional rows and columns of zero padding around the edges. With a larger stride the output is smaller, but the filters still reach every edge of the input. This can help to preserve the information at the edges of the image and improve the performance of the CNN.

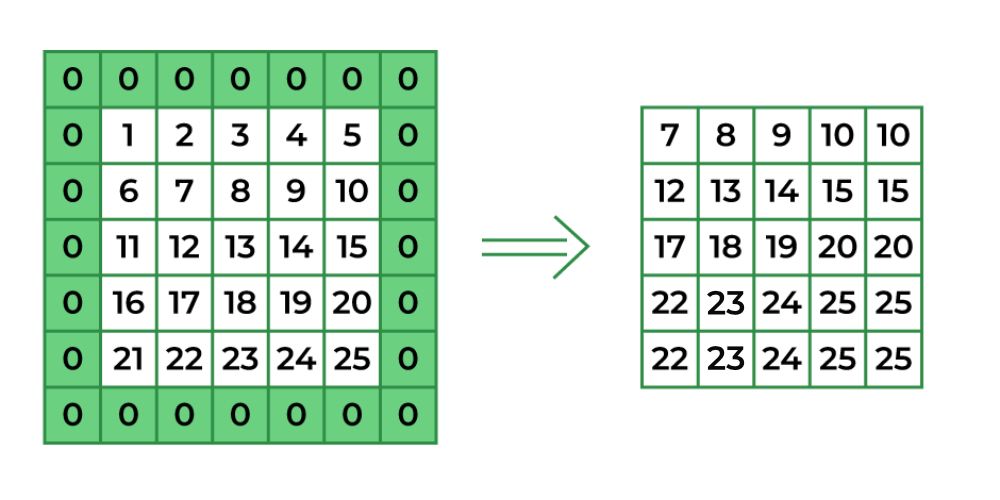

Max pool with same padding and stride 1

Same Padding

Python3

    import tensorflow as tf

    input_tensor = tf.constant([
        [1, 2, 3, 4, 5],
        [6, 7, 8, 9, 10],
        [11, 12, 13, 14, 15],
        [16, 17, 18, 19, 20],
        [21, 22, 23, 24, 25]
    ])

    # Add batch and channel axes: (5, 5) -> (1, 5, 5, 1)
    input_tensor = tf.expand_dims(input_tensor, axis=0)
    input_tensor = tf.expand_dims(input_tensor, axis=3)
    print('Input Tensor shape:', input_tensor.shape)

    output_tensor = tf.nn.max_pool(input=input_tensor,
                                   ksize=[1, 3, 3, 1],
                                   strides=[1, 1, 1, 1],
                                   padding='SAME')
    print('\noutput_tensor :\n', output_tensor)

Output:

    Input Tensor shape: (1, 5, 5, 1)

    output_tensor :
    tf.Tensor(
    [[[[ 7]
       [ 8]
       [ 9]
       [10]
       [10]]

      [[12]
       [13]
       [14]
       [15]
       [15]]

      [[17]
       [18]
       [19]
       [20]
       [20]]

      [[22]
       [23]
       [24]
       [25]
       [25]]

      [[22]
       [23]
       [24]
       [25]
       [25]]]], shape=(1, 5, 5, 1), dtype=int32)

Code Explanation

In this code, a 5×5 input tensor is created using the tf.constant function. This input tensor represents a 2D array with 5 rows and 5 columns, and its values are set to the integers from 1 to 25.

Next, the input tensor is reshaped to [batch_size, height, width, channels] using the tf.expand_dims function. The axis parameter specifies the dimension along which to expand the shape of the tensor. In this case, the input tensor is expanded along the 0th and 3rd dimensions, resulting in a tensor with shape [1, 5, 5, 1].

Then, the tf.nn.max_pool function is used to perform max pooling on the input tensor. The function takes in the input tensor, the size of the pooling window (ksize), the stride of the sliding window (strides), and the type of padding to use (padding). In this example, the pooling window has a size of [1, 3, 3, 1], which means that the window will be 3×3. The stride is set to [1, 1, 1, 1], which means that the window will move 1 unit in both the height and width directions. The padding is set to ‘SAME’, which means that padding will be added to the input tensor so that the output has the same size as the input.

Finally, the output tensor is printed using the print function. The output tensor has the same shape as the input tensor, [1, 5, 5, 1], and it contains the maximum value from each 3×3 window of the padded input tensor, taken with stride 1.

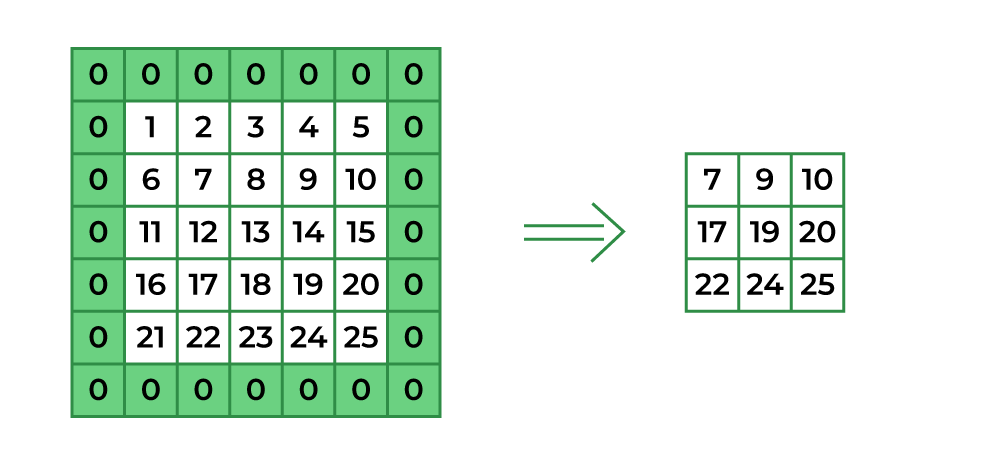

Max pool with same padding and stride 2

Same Padding

Python3

    import tensorflow as tf

    input_tensor = tf.constant([
        [1, 2, 3, 4, 5],
        [6, 7, 8, 9, 10],
        [11, 12, 13, 14, 15],
        [16, 17, 18, 19, 20],
        [21, 22, 23, 24, 25]
    ])

    # Add batch and channel axes: (5, 5) -> (1, 5, 5, 1)
    input_tensor = tf.expand_dims(input_tensor, axis=0)
    input_tensor = tf.expand_dims(input_tensor, axis=3)
    print('Input Tensor shape:', input_tensor.shape)

    output_tensor = tf.nn.max_pool(input=input_tensor,
                                   ksize=[1, 3, 3, 1],
                                   strides=2,
                                   padding='SAME')
    print('\noutput_tensor :\n', output_tensor)

Output:

    Input Tensor shape: (1, 5, 5, 1)

    output_tensor :
    tf.Tensor(
    [[[[ 7]
       [ 9]
       [10]]

      [[17]
       [19]
       [20]]

      [[22]
       [24]
       [25]]]], shape=(1, 3, 3, 1), dtype=int32)

Explanation

The output tensor has a shape of [1, 3, 3, 1] and contains the maximum value from each 3×3 window of the padded input tensor. The maximum value from the first 3×3 window is 7, the maximum value from the second 3×3 window is 9, and so on.

With “same” padding and a stride of 2, each spatial dimension of the output is ceil(5 / 2) = 3, so the output tensor has a size of (1, 3, 3, 1).
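The same-padded pooling results can also be reproduced without TensorFlow. This sketch makes one assumption explicit: cells outside the input are simply skipped, which behaves like padding with negative infinity so that padded cells never win the maximum. The helper max_pool_same is hypothetical.

```python
import math


def max_pool_same(matrix, k, s):
    """Max pooling over a 2D list with 'SAME'-style padding.
    Cells outside the input are skipped, which is equivalent to padding
    with negative infinity (an assumption made for this sketch)."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    out_rows, out_cols = math.ceil(n_rows / s), math.ceil(n_cols / s)
    # Padding placed before the first row / column
    pad_top = max((out_rows - 1) * s + k - n_rows, 0) // 2
    pad_left = max((out_cols - 1) * s + k - n_cols, 0) // 2
    out = []
    for i in range(out_rows):
        out_row = []
        for j in range(out_cols):
            top, left = i * s - pad_top, j * s - pad_left
            window = [matrix[r][c]
                      for r in range(max(top, 0), min(top + k, n_rows))
                      for c in range(max(left, 0), min(left + k, n_cols))]
            out_row.append(max(window))
        out.append(out_row)
    return out


grid = [[5 * r + c + 1 for c in range(5)] for r in range(5)]
print(max_pool_same(grid, k=3, s=2))
# [[7, 9, 10], [17, 19, 20], [22, 24, 25]]
```

Calling the same helper with s=1 returns a 5×5 result whose first row is [7, 8, 9, 10, 10], matching the stride 1 output shown earlier.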
