Project Idea | myVision

Last Updated: 26 Jun, 2018

Project Title: myVision

Objective: The idea behind this project is to create an application that would assist people with visual impairment in analyzing their surroundings.

Description: To analyze their surroundings, all a user has to do is take a picture with their mobile phone. The application automatically identifies the objects in the picture and provides voice assistance about the types of objects nearby.

One catch is how visually impaired people will operate the application. They can open it with the help of any virtual assistant on their mobile device, and then take the picture with either of the volume keys or the camera button.

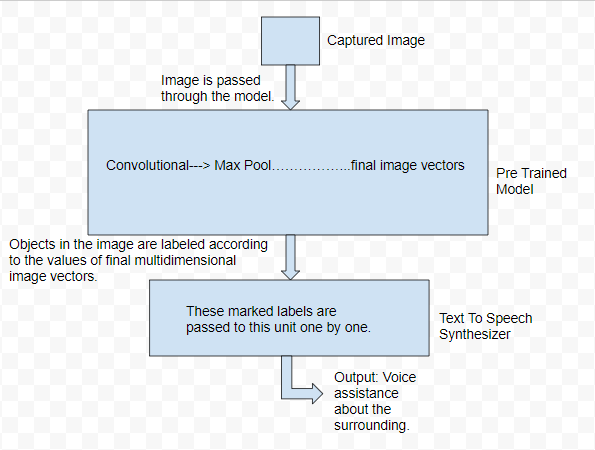

The captured picture is then fed to a pre-trained Convolutional Neural Network (CNN), which labels all the objects it detects in the picture. Those labels are passed to a Text To Speech engine, which analyzes and processes the text using Natural Language Processing (NLP) and converts it into speech.
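The step between detection and speech can be sketched as below. This is only an illustrative sketch: the function name `describe_detections`, the `(label, confidence)` input format, and the `min_confidence` cutoff are assumptions made for the example, not part of the project. The returned sentence is what would be handed to the Text To Speech engine.

```python
from collections import Counter


def describe_detections(detections, min_confidence=0.5):
    """Turn (label, confidence) pairs into one sentence for a TTS engine.

    Hypothetical helper: the input format and threshold are assumptions.
    """
    # Keep only the detections the model is reasonably sure about.
    labels = [label for label, conf in detections if conf >= min_confidence]
    if not labels:
        return "No objects detected."

    # Count repeated labels so the user hears "2 chairs", not "chair, chair".
    counts = Counter(labels)
    parts = []
    for label, n in counts.items():
        # Naive pluralisation (just appends "s"); enough for a sketch.
        parts.append(f"{n} {label}s" if n > 1 else f"1 {label}")

    if len(parts) == 1:
        return f"Detected {parts[0]}."
    return "Detected " + ", ".join(parts[:-1]) + " and " + parts[-1] + "."


if __name__ == "__main__":
    sample = [("chair", 0.9), ("person", 0.8), ("chair", 0.7), ("dog", 0.3)]
    print(describe_detections(sample))
```

Grouping labels before speaking keeps the audio short, which matters when the user has to listen to every result.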

One plus point of this idea is that it is not limited to a mobile application. At a more advanced level, the same idea can be used to build a gadget that works in a similar manner but captures live images of the surroundings (like in self-driven cars) instead of requiring pictures to be taken manually. Using different types of sensors, such a gadget could tell visually impaired people not only the types of objects nearby but also the approximate distance to those objects.
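As one possible illustration of the distance idea, the sketch below assumes an ultrasonic range sensor, which the article does not name; it is just one sensor type that could be used. Such a sensor reports the round-trip time of a sound pulse, and the distance is half the path the pulse travelled. The function names and the fixed speed of sound are assumptions for the example.

```python
# Approximate speed of sound in dry air at about 20 degrees C (assumed constant).
SPEED_OF_SOUND_M_PER_S = 343.0


def distance_from_echo(echo_seconds):
    """Distance in metres from a round-trip ultrasonic echo time."""
    if echo_seconds < 0:
        raise ValueError("echo time cannot be negative")
    # The pulse travels to the object and back, so halve the total path.
    return SPEED_OF_SOUND_M_PER_S * echo_seconds / 2


def spoken_distance(label, echo_seconds):
    """Build a short phrase that a text-to-speech engine could read out."""
    metres = distance_from_echo(echo_seconds)
    return f"{label} about {metres:.1f} metres ahead"


if __name__ == "__main__":
    # A 20 ms round trip corresponds to roughly 3.4 metres.
    print(spoken_distance("chair", 0.02))
```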

Flow Diagram:

Tools Used:

- From a hardware point of view:

  - Mobile device with a good quality camera.

  - Good processing power to learn the weights of the model.

- From a software point of view:

  - Google Firebase.

  - Google Cloud services.

  - Android Studio / Visual Studio.

  - Python, Java, JavaScript.

Note: The YOLO algorithm is used for object detection, and Google Cloud services are used for text-to-speech conversion.
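Detectors such as YOLO usually produce several overlapping boxes for the same object, and a common post-processing step is non-maximum suppression (NMS), which keeps only the most confident box among heavily overlapping ones. The sketch below is a plain-Python illustration of that step, not the project's actual code; the `(label, confidence, box)` tuple format and the `iou_threshold` default are assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Width and height of the overlap are clamped at zero for disjoint boxes.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """Per-class NMS over a list of (label, confidence, box) tuples."""
    kept = []
    # Visit detections from most to least confident.
    for det in sorted(detections, key=lambda d: d[1], reverse=True):
        label, _, box = det
        # Keep the box unless it overlaps a kept box of the same label.
        if all(k[0] != label or iou(box, k[2]) < iou_threshold for k in kept):
            kept.append(det)
    return kept


if __name__ == "__main__":
    raw = [
        ("chair", 0.9, (0, 0, 10, 10)),
        ("chair", 0.6, (1, 1, 10, 10)),   # near-duplicate of the first chair
        ("person", 0.8, (0, 0, 10, 10)),  # different label, so it is kept
    ]
    for label, conf, box in non_max_suppression(raw):
        print(label, conf, box)
```

Without this step the voice output could announce the same object several times.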

Features and Application:

- Provide voice assistance to visually impaired people.

- Convenient to use, as pictures can be taken by just pressing the volume keys.

- The idea is not restricted to a simple mobile application: the system can be integrated with various other devices that work on live image capture (like in self-driven cars) rather than taking pictures manually again and again.

- With the use of different types of sensors, the functionality and quality of the system can be greatly increased, as the system can be implemented to output the approximate distance to objects, along with other similar features.

Important links:

Yolo website: https://pjreddie.com/darknet/yolo/

Training your own model from scratch: https://timebutt.github.io/static/how-to-train-yolov2-to-detect-custom-objects/

Text To Speech Engine: https://cloud.google.com/text-to-speech/docs/

Thank You!

Note: This project idea is contributed for ProGeek Cup 2.0- A project competition by GeeksforGeeks.
