RNN for Sequence Labeling

Last Updated: 31 Jul, 2023

Recurrent Neural Networks (RNNs) have proven to be highly effective in Natural Language Processing (NLP) tasks, particularly in sequence labeling. Sequence labeling involves assigning labels to each element in a sequence, such as part-of-speech tagging, named entity recognition, sentiment analysis, and more. In this article, we will explore the concepts behind RNNs and their application in sequence labeling tasks in NLP, along with step-by-step explanations and mathematical formulations.

Recurrent Neural Networks

RNNs are well suited to sequence labeling because they maintain a hidden state that summarizes information from previous time steps, so the label predicted for each element can depend on the elements that came before it.

- Recurrent Neural Networks (RNNs): RNNs are a class of neural networks that can handle sequential data by maintaining hidden states that capture contextual information. Unlike traditional feed-forward neural networks, RNNs have feedback connections, allowing them to process inputs in a sequential manner. This makes them well-suited for sequence labeling tasks in NLP.

- Understanding RNN Architecture: The basic building block of an RNN is the recurrent cell, which receives an input at each time step and produces an output and hidden state. The hidden state at each time step serves as the memory of the network and is updated based on the current input and the previous hidden state. The output at each time step can be used for various purposes, such as classification or further processing.

- Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs): Traditional RNNs suffer from the vanishing gradient problem, where gradients diminish exponentially as they propagate through time steps. LSTM and GRU units are variations of RNNs that alleviate this issue by introducing gating mechanisms. These mechanisms control the flow of information within the cell and allow the network to selectively retain or forget information over long sequences.

- Sequence Labeling Task: In sequence labeling, each element in a sequence is assigned a label based on its context. For example, in part-of-speech tagging, each word in a sentence is labeled with its corresponding part of speech. In named entity recognition, named entities such as person names or locations are identified and labeled.

- RNNs for Sequence Labeling: To apply RNNs to sequence labeling tasks, we can use a many-to-many architecture, where the input sequence and output sequence have the same length. Each input element is passed through the RNN, and the output at each time step is used to predict the label for that element.

- Mathematical Formulation: Let’s consider a sequence labeling problem with an input sequence X = [x₁, x₂, …, xₙ] and a corresponding output sequence Y = [y₁, y₂, …, yₙ]. We can represent the RNN computation as follows:

- Hidden State Update: hₜ = f(hₜ₋₁, xₜ)

- Output Computation: oₜ = g(hₜ)

- Loss Function: $L = \sum_{t=1}^{n} \mathrm{loss}(y_t, o_t)$

Here, f is the recurrent cell function, g is the output function, and loss represents the chosen loss function (e.g., cross-entropy) to measure the discrepancy between predicted and true labels.
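The formulation above can be sketched in NumPy to show how f, g and the loss fit together over a sequence. This is a minimal illustration under stated assumptions, not the article's implementation: f is assumed to be a plain tanh recurrence, g a softmax, and loss the cross-entropy, with randomly initialized weights whose names (W_x, W_h, W_o) are hypothetical.

```python
import numpy as np

def run_sequence_labeler(f, g, loss, h0, xs, ys):
    """Compute h_t = f(h_{t-1}, x_t) and o_t = g(h_t) at every step and
    return the per-step outputs together with L = sum_t loss(y_t, o_t)."""
    h = h0
    outputs, total_loss = [], 0.0
    for x_t, y_t in zip(xs, ys):
        h = f(h, x_t)                 # hidden state update
        o_t = g(h)                    # output for this time step
        outputs.append(o_t)
        total_loss += loss(y_t, o_t)  # per-step loss, summed over the sequence
    return outputs, total_loss

# Assumed concrete choices for f, g and loss (illustrative only).
rng = np.random.default_rng(0)
d_in, d_h, n_labels = 3, 4, 2
W_x = rng.normal(scale=0.5, size=(d_h, d_in))
W_h = rng.normal(scale=0.5, size=(d_h, d_h))
W_o = rng.normal(scale=0.5, size=(n_labels, d_h))

def f(h_prev, x_t):
    return np.tanh(W_h @ h_prev + W_x @ x_t)

def g(h_t):
    z = W_o @ h_t
    e = np.exp(z - z.max())           # numerically stable softmax
    return e / e.sum()

def cross_entropy(y_t, o_t):
    return -np.log(o_t[y_t])          # y_t is an integer label id

xs = rng.normal(size=(5, d_in))       # n = 5 input vectors
ys = [0, 1, 1, 0, 1]                  # one label per input
outputs, L = run_sequence_labeler(f, g, cross_entropy, np.zeros(d_h), xs, ys)
```

Because the same f and g are applied at every step, the output sequence has exactly one prediction per input element, which is the many-to-many setup used for sequence labeling.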

Steps for Sequence Labeling Using RNNs

Let’s dive into the steps involved in using an RNN for sequence labeling, with mathematical explanations and relevant examples.

- Data Preparation: To start, we need a labeled dataset for training and evaluation. The dataset should consist of input sequences and their corresponding output labels. For example, consider a named entity recognition task where we want to label named entities in sentences. The dataset would contain sentences and their associated entity labels.

- Input and Output Encoding: Next, we need to encode the input and output data in a suitable format for the RNN. In NLP, inputs are typically represented as word embeddings or one-hot vectors, while outputs are often represented as numerical labels or one-hot vectors.

- RNN Architecture: Choose the appropriate RNN architecture for the sequence labeling task. The most commonly used architectures are Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs) due to their ability to capture long-term dependencies. Let’s consider LSTM for our explanation.

- Forward Pass: The forward pass of the RNN involves processing the input sequence and generating the corresponding output sequence.

Let’s denote the input sequence as X = [x₁, x₂, …, xₙ], where each xᵢ represents the input at time step i. Similarly, let Y = [y₁, y₂, …, yₙ] represent the output sequence.

At each time step t, the LSTM computes the hidden state hₜ and the output oₜ using the following equations:- Forget Gate: ft = σ(Wf · [ht-1, xt] + bf)

- Input Gate: it = σ(Wi· [ht-1, xt] + bi)

- Candidate State:

![Rendered by QuickLaTeX.com \hat{C}_ₜ = \tanh(W_C · [h_{ₜ₋₁}, x_ₜ] + b_c)](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-9a0657d4b1a8babb49efde0ea2f65b3a_l3.png)

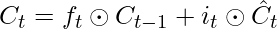

- Updated Cell State:

- Output Gate:

![Rendered by QuickLaTeX.com O_t = σ(W_o · [h_{t-1}, x_t] + b_o)](https://www.geeksforgeeks.org/wp-content/ql-cache/quicklatex.com-6a22cda6af0d3d4fa5d6b18a19b13859_l3.png)

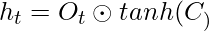

- Hidden State:

- Output: Ot = g(ht)

Here, σ is the sigmoid activation function, ⊙ denotes element-wise multiplication, $[h_{t-1}, x_t]$ is the concatenation of the previous hidden state and the current input, and the W and b terms are the weight matrices and bias vectors of the LSTM.
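A direct NumPy transcription of these gate equations makes the shapes explicit. It is a sketch under stated assumptions: the parameter dictionary keys (W_f, b_f, and so on), the hypothetical init_params helper, the small random initialization, and the lowercase o_t for the output gate are choices made here for illustration, not part of any library API.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, C_prev, p):
    """One LSTM time step; every W in p has shape (hidden, hidden + input)."""
    z = np.concatenate([h_prev, x_t])          # [h_{t-1}, x_t]
    f_t = sigmoid(p["W_f"] @ z + p["b_f"])     # forget gate
    i_t = sigmoid(p["W_i"] @ z + p["b_i"])     # input gate
    C_hat = np.tanh(p["W_C"] @ z + p["b_C"])   # candidate state
    C_t = f_t * C_prev + i_t * C_hat           # updated cell state (element-wise)
    o_t = sigmoid(p["W_o"] @ z + p["b_o"])     # output gate
    h_t = o_t * np.tanh(C_t)                   # hidden state
    return h_t, C_t

def init_params(input_dim, hidden_dim, seed=0):
    """Hypothetical helper: small random weights and zero biases per gate."""
    rng = np.random.default_rng(seed)
    p = {}
    for gate in ("f", "i", "C", "o"):
        p["W_" + gate] = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim + input_dim))
        p["b_" + gate] = np.zeros(hidden_dim)
    return p

# Run the cell over a toy sequence of 5 input vectors.
input_dim, hidden_dim = 3, 4
p = init_params(input_dim, hidden_dim)
h, C = np.zeros(hidden_dim), np.zeros(hidden_dim)
hidden_states = []
for x_t in np.random.default_rng(1).normal(size=(5, input_dim)):
    h, C = lstm_step(x_t, h, C, p)
    hidden_states.append(h)
```

Since $o_t$ lies in (0, 1) and tanh is bounded by 1, every component of $h_t$ stays strictly between -1 and 1, while the cell state $C_t$ is unbounded and carries the long-term memory.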

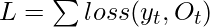

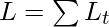

- Loss Calculation: To train the RNN, we need a loss function that measures the discrepancy between the predicted output $O_t$ and the true label $y_t$. The standard choice for sequence labeling is cross-entropy loss (categorical cross-entropy with one-hot labels, or its sparse variant with integer labels). The loss at each time step t is:

$L_t = \mathrm{loss}(y_t, O_t)$

The overall loss L is the sum of the per-step losses across all time steps:

$L = \sum_{t=1}^{n} L_t$

- Backpropagation Through Time (BPTT): To update the model parameters and minimize the loss, we use backpropagation through time (BPTT). BPTT unrolls the network over the sequence, propagates gradients backward from the last time step to the first, and sums the gradient contributions of each shared parameter across all time steps; the summed gradients are then used in a gradient-descent update.

- Training and Evaluation: Train the RNN iteratively on the labeled dataset. Monitor the loss on a separate validation set and use early stopping to prevent overfitting. Once trained, evaluate the RNN on a test set using metrics such as accuracy, precision, recall, and F1 score.
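To make BPTT concrete, here is a minimal NumPy sketch for a plain tanh RNN with a softmax output and summed cross-entropy; an LSTM follows the same backward pattern with more terms. The helpers loss_and_grads and sgd_step are hypothetical names introduced for illustration, and the analytic gradient is compared against a finite-difference estimate rather than any library routine.

```python
import numpy as np

def loss_and_grads(params, xs, ys):
    """Summed cross-entropy of a tanh RNN and its gradients via BPTT.
    params = [W_x, W_h, b, W_y, c]; xs: (n, d_in) inputs; ys: (n,) label ids."""
    W_x, W_h, b, W_y, c = params
    n, H = xs.shape[0], W_h.shape[0]
    hs = np.zeros((n + 1, H))               # hs[0] is the initial state h_0 = 0
    probs = np.zeros((n, W_y.shape[0]))
    loss = 0.0
    # Forward pass: unroll the network over the whole sequence
    for t in range(n):
        hs[t + 1] = np.tanh(W_x @ xs[t] + W_h @ hs[t] + b)
        z = W_y @ hs[t + 1] + c
        e = np.exp(z - z.max())
        probs[t] = e / e.sum()
        loss -= np.log(probs[t, ys[t]])
    # Backward pass: from the last step to the first, accumulating the
    # gradients of the shared parameters across time steps
    grads = [np.zeros_like(p) for p in params]
    dW_x, dW_h, db, dW_y, dc = grads         # views into grads, updated in place
    dh_next = np.zeros(H)                    # dL/dh_t arriving from step t+1
    for t in reversed(range(n)):
        dz = probs[t].copy()
        dz[ys[t]] -= 1.0                     # softmax + cross-entropy gradient
        dW_y += np.outer(dz, hs[t + 1])
        dc += dz
        dh = W_y.T @ dz + dh_next            # from this step's output and the future
        da = dh * (1.0 - hs[t + 1] ** 2)     # back through tanh
        dW_x += np.outer(da, xs[t])
        dW_h += np.outer(da, hs[t])
        db += da
        dh_next = W_h.T @ da                 # pass the gradient to step t-1
    return loss, grads

def sgd_step(params, grads, lr):
    """Plain gradient-descent update."""
    return [p - lr * g for p, g in zip(params, grads)]

rng = np.random.default_rng(0)
d_in, H, n_labels = 3, 4, 2
shapes = [(H, d_in), (H, H), (H,), (n_labels, H), (n_labels,)]
params = [rng.normal(scale=0.5, size=s) for s in shapes]
xs = rng.normal(size=(6, d_in))
ys = np.array([0, 1, 1, 0, 1, 0])

loss, grads = loss_and_grads(params, xs, ys)

# Finite-difference check on one recurrent weight, W_h[0, 1]
eps = 1e-5
plus = [p.copy() for p in params]
plus[1][0, 1] += eps
minus = [p.copy() for p in params]
minus[1][0, 1] -= eps
numeric = (loss_and_grads(plus, xs, ys)[0] - loss_and_grads(minus, xs, ys)[0]) / (2 * eps)
print(f"analytic {grads[1][0, 1]:.6f}  numeric {numeric:.6f}")

params = sgd_step(params, grads, lr=0.01)
```

The key BPTT detail is dh_next: the gradient reaching $h_t$ comes both from the loss at step t and from every later step through the recurrent weights, which is also why repeated multiplication by W_h and the tanh derivative can make gradients vanish over long sequences.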

Example: Named Entity Recognition (NER)

Let’s consider the task of named entity recognition, where the goal is to identify and label named entities in sentences. For instance, given the sentence “John lives in New York,” the named entity labels would be [PERSON, O, O, LOCATION, LOCATION], where O marks a token that is outside any named entity.

In this example, we encode the input sentence as a sequence of word embeddings and represent the output labels as one-hot vectors.
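A small sketch of this encoding for the example sentence follows. The vocabularies are hypothetical: index 0 is reserved for padding, index 1 for unknown words, and the label set is assumed to be {O, PERSON, LOCATION}. Words are turned into integer ids (an embedding layer later maps each id to a vector), labels into one-hot rows, and a mask records which positions are real tokens.

```python
import numpy as np

sentence = ["John", "lives", "in", "New", "York"]
tags = ["PERSON", "O", "O", "LOCATION", "LOCATION"]

# Hypothetical vocabularies: 0 is reserved for padding and 1 for unknown words.
word_to_id = {"<PAD>": 0, "<UNK>": 1}
for word in sentence:
    word_to_id.setdefault(word.lower(), len(word_to_id))
label_to_id = {"O": 0, "PERSON": 1, "LOCATION": 2}

def encode(tokens, labels, max_len):
    """Return padded word ids, one-hot labels and a mask marking real tokens."""
    ids = [word_to_id.get(w.lower(), word_to_id["<UNK>"]) for w in tokens][:max_len]
    label_ids = [label_to_id[tag] for tag in labels][:max_len]
    n_pad = max_len - len(ids)
    mask = [1] * len(ids) + [0] * n_pad
    ids += [0] * n_pad
    label_ids += [0] * n_pad        # placeholder labels; ignored via the mask
    one_hot = np.eye(len(label_to_id))[label_ids]
    return np.array(ids), one_hot, np.array(mask)

ids, one_hot, mask = encode(sentence, tags, max_len=8)
print(ids)        # [2 3 4 5 6 0 0 0]
```

Padding every sentence to the same length lets sentences be batched together; the mask is what keeps the padded positions out of the loss and the metrics.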

Using an LSTM-based RNN, we process the input sequence word by word, updating the hidden state at each time step. The output at each time step is used to predict the label for that word.

During training, we compare the predicted labels with the true labels using a loss function such as cross-entropy. We compute the gradients using BPTT and update the LSTM’s parameters through gradient descent.

Finally, we evaluate the trained model on a test set, measuring metrics such as accuracy, precision, recall, and F1 score to assess its performance in identifying named entities.
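Token-level versions of these metrics take only a few lines of plain Python. This is a simplified sketch: token_metrics is a hypothetical helper that counts every non-O tag as a positive. Standard NER evaluation (for example, CoNLL-style scoring) instead scores whole entity spans, which is stricter because a partially tagged entity such as "New York" counts as an error.

```python
def token_metrics(true_tags, pred_tags, outside="O"):
    """Token-level accuracy plus micro-averaged precision/recall/F1,
    treating every tag other than `outside` as a positive."""
    if len(true_tags) != len(pred_tags):
        raise ValueError("tag sequences must have the same length")
    pairs = list(zip(true_tags, pred_tags))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    true_positives = sum(t == p and t != outside for t, p in pairs)
    predicted_positives = sum(p != outside for _, p in pairs)
    actual_positives = sum(t != outside for t, _ in pairs)
    precision = true_positives / predicted_positives if predicted_positives else 0.0
    recall = true_positives / actual_positives if actual_positives else 0.0
    if precision + recall == 0:
        return accuracy, precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1

true_tags = ["PERSON", "O", "O", "LOCATION", "LOCATION"]
pred_tags = ["PERSON", "O", "LOCATION", "LOCATION", "O"]
acc, prec, rec, f1 = token_metrics(true_tags, pred_tags)
print(acc, prec, rec, f1)
```

Here three of five tokens are correct (accuracy 3/5), and two of the three predicted entity tokens match two of the three true entity tokens, so precision, recall and F1 are all 2/3.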

Next, we implement an RNN for sequence labeling in Python with TensorFlow, a popular deep learning library. Specifically, we use Long Short-Term Memory (LSTM) units, a variant of RNNs designed to handle long-term dependencies.

Problem Statement:

The goal of this article is to develop an RNN-based sequence labeling model to perform named entity recognition (NER). NER involves identifying entities such as person names, locations, organizations, and dates in a given text. The task is to train an LSTM-based RNN model to predict the entity label (e.g., “PERSON”, “LOCATION”, “ORGANIZATION”) for each word in a given sentence.

We will use a dataset containing labeled sentences where each word is tagged with its corresponding entity label. The model will be trained on this data to learn the patterns and relationships between words and their labels, so that it can predict entity labels for unseen sentences.

Let’s dive into the implementation of the RNN for sequence labeling using TensorFlow and explore the LSTM model in detail.

```python
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, Embedding, LSTM, Dense, TimeDistributed


def create_rnn_model(vocab_size, num_labels, embedding_dim, lstm_units, max_sequence_length):
    model = Sequential()
    # Input: fixed-length sequences of word indices
    model.add(Input(shape=(max_sequence_length,)))
    # Map each word index to a dense embedding_dim-dimensional vector
    model.add(Embedding(vocab_size, embedding_dim))
    # return_sequences=True keeps one hidden state per time step
    model.add(LSTM(lstm_units, return_sequences=True))
    # Apply the same softmax classifier to every time step
    model.add(TimeDistributed(Dense(num_labels, activation='softmax')))
    return model


vocab_size = 10000
num_labels = 3            # e.g. O, PERSON, LOCATION
embedding_dim = 100
lstm_units = 64
max_sequence_length = 50

model = create_rnn_model(vocab_size, num_labels, embedding_dim, lstm_units, max_sequence_length)
model.summary()
```

Output:

```
Model: "sequential"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 embedding (Embedding)       (None, 50, 100)           1000000

 lstm (LSTM)                 (None, 50, 64)            42240

 time_distributed (TimeDistr  (None, 50, 3)            195
 ibuted)

=================================================================
Total params: 1,042,435
Trainable params: 1,042,435
Non-trainable params: 0
_________________________________________________________________
```

Explanation:

- Import the required libraries: We begin by importing TensorFlow and other necessary libraries for our implementation.

- create_rnn_model function: This function creates the RNN model for sequence labeling. It takes the following arguments:

- vocab_size: The size of the vocabulary, i.e., the number of unique words in the dataset.

- num_labels: The number of entity labels (output classes) that we want the model to predict.

- embedding_dim: The dimension of the word embeddings used to represent words in a continuous vector space.

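- lstm_units: The number of LSTM units, i.e., the dimensionality of the LSTM's hidden state.

- max_sequence_length: The length to which every input sequence is padded or truncated (50 in the example).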

- Model architecture: Inside the create_rnn_model function, we create a Sequential model and add layers to it:

- Embedding layer: This layer learns word embeddings from the input data, mapping each word index to a dense vector of embedding_dim dimensions. Every input sequence has a fixed length of max_sequence_length tokens, which is the 50 that appears in the output shapes.

- LSTM layer: We use the LSTM layer with return_sequences=True to enable sequence-to-sequence mapping. This means that the LSTM layer returns the hidden state for each time step in the input sequence, which is required for sequence labeling.

- TimeDistributed layer: This wrapper applies the same Dense layer, with shared weights, to each time step independently, so the model outputs a label distribution for every word in the sequence.

- Dense layer: This layer is a fully connected layer that outputs the predicted probabilities for each entity label using the softmax activation function.

- Return the model: The create_rnn_model function returns the constructed RNN model.

- Example usage: We provide example values for the arguments (vocab_size, num_labels, embedding_dim, lstm_units) and create an instance of the RNN model using the create_rnn_model function. We also print a summary of the model architecture using the summary() method to visualize the model’s layers and their output shapes.
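The summary only shows the untrained architecture. A hedged sketch of compiling, training and decoding follows; it rebuilds the same layer stack so the snippet runs on its own, trains on random stand-in data rather than a real NER corpus, and makes one addition not in the code above: mask_zero=True on the Embedding layer, so that padding id 0 is skipped by the LSTM and excluded from the loss. Integer label ids per token are paired with sparse categorical cross-entropy.

```python
import numpy as np
import tensorflow as tf

vocab_size, num_labels, max_len = 10000, 3, 50

# Same layer stack as create_rnn_model, rebuilt so this snippet runs on its own.
# mask_zero=True is an addition: padding id 0 is ignored by the LSTM and the loss.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(max_len,), dtype="int32"),
    tf.keras.layers.Embedding(vocab_size, 100, mask_zero=True),
    tf.keras.layers.LSTM(64, return_sequences=True),
    tf.keras.layers.TimeDistributed(
        tf.keras.layers.Dense(num_labels, activation="softmax")),
])
# Integer label ids per token -> sparse categorical cross-entropy
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# Random stand-in data: word ids 1..vocab_size-1, with the last
# 10 positions of every sentence set to the padding id 0.
rng = np.random.default_rng(0)
X = rng.integers(1, vocab_size, size=(32, max_len)).astype("int32")
X[:, -10:] = 0
y = rng.integers(0, num_labels, size=(32, max_len)).astype("int32")

model.fit(X, y, batch_size=8, epochs=1, verbose=0)

probs = model.predict(X[:1], verbose=0)    # shape (1, max_len, num_labels)
pred_label_ids = probs.argmax(axis=-1)     # most likely label id per token
```

Taking the argmax over the last axis turns the per-token probability distributions into one label id per word, which can then be mapped back to tag names with the inverse of the label vocabulary.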

By adapting this code to your own data and label set, you can apply RNNs, particularly LSTMs, to predict entity labels in text.

Conclusion:

RNNs are effective tools for sequence labeling in NLP. Their ability to capture contextual information makes them well-suited for tasks like part-of-speech tagging, named entity recognition, sentiment analysis, and more. Understanding the concepts behind RNNs, their architectural variations, and the mathematical formulations involved is crucial for applying them effectively to sequence labeling tasks. Used well, RNNs, and LSTMs in particular, deliver strong results across a range of NLP applications.
