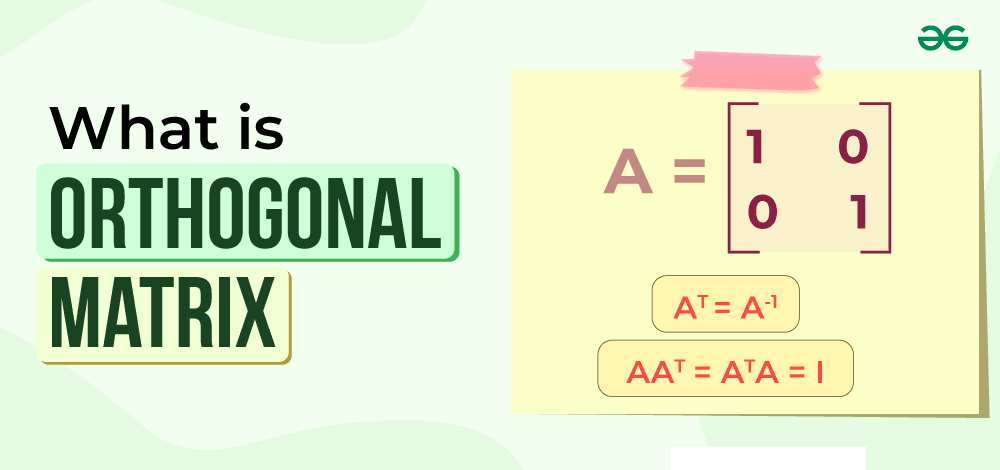

Orthogonal Matrix in Linear Algebra is one of the many types of matrices in which the transpose of the matrix is equal to the inverse of that matrix. As we know, the transpose of a matrix is obtained by swapping its row elements with its column elements. For an orthogonal matrix, the product of the transpose and the matrix itself is the identity matrix, as the transpose also serves as the inverse of the matrix.

This article explores the concept of the Orthogonal Matrix in detail and makes it easy to grasp for all the readers of the article without any regard to their academic level. All subtopics such as their meaning, definition, symbol, example, and many many more, are covered in the article with plenty of examples.

What is an Orthogonal Matrix?

Orthogonal Matrix is a square matrix in which all its rows and columns are mutually orthogonal unit vectors, meaning that each row and column of the matrix is perpendicular to every other row and column, and each row or column has a magnitude of 1.

Orthogonal Matrix Definition

Mathematically, an n x n matrix A is considered orthogonal if

AT = A-1

OR

AAT = ATA = I

Where,

- AT is the transpose of the square matrix,

- A-1 is the inverse of the square matrix, and

- I is the identity matrix of same order as A.

AT = A-1 (Condition for an Orthogonal matrix)…(i)

Pre-multiply by A on both sides,

We get, AAT = AA-1,

We know this relation of the identity matrix, AA-1 = I, (of the same order as A).

So we can also write it as AAT = I. (From (i))

Similarly, we can derive the relation ATA = I.

So, from the above two equations, we get AAT = ATA = I.

Read more about Transpose of a Matrix.

Condition for Orthogonal Matrix

For any matrix to be an orthogonal Matrix, it needs to fulfil the following conditions:

- Every two rows and two columns have a dot product of zero, and

- Every row and every column has a magnitude of one.

Orthogonal Matrix in Linear Algebra

The condition of any two vectors to be orthogonal is when their dot product is zero. Similarly, in the case of an orthogonal matrix, every two rows and every two columns are orthogonal. Also, one more condition is that the length of every row (vector) or column (vector) is 1.

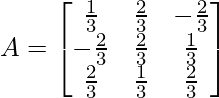

For Example, let’s consider a 3×3 matrix, i.e.,

Here, the dot product between vector 1 and vector 2 i.e. between row 1 and row 2

Row 1 ⋅ Row 2 = (1/3)(-2/3)+(2/3)(2/3)+(-2/3)(1/3) =0

So, Row 1 and Row 2 are Orthogonal.

Also, the Magnitude of Row 1 = ((1/3)2+(2/3)2+(-2/3)2)0.5 = 1

Similarly, we can check for all other rows.

Thus, this matrix A is an example of Orthogonal Matrix.

Example of Orthogonal Matrix

If the transpose of a square matrix with real numbers or values is equal to the inverse matrix of the matrix, the matrix is said to be orthogonal.

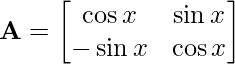

Example of 2×2 Orthogonal Matrix

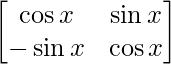

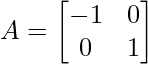

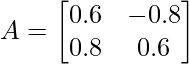

Let’s consider the an 2×2 i.e.,  .

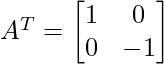

.

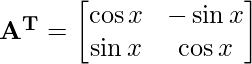

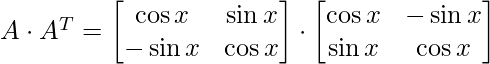

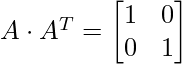

Let’s check this using the product of the matrix and its transpose.

Thus,

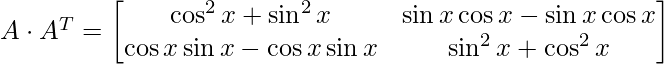

⇒

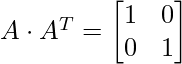

⇒

Which is an Identity Matrix.

Thus, A is an example of an Orthogonal Matrix of order 2×2.

Example of 3×3 Orthogonal Matrix

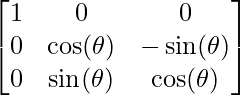

Let us consider 3D Rotation Matrix i.e., 3×3 matrix such that A =  ,

,

To check this matrix is an orthogonal matrix, we need to

Verify that each column and row matrix is a unit vector, i.e., vector with unit magnitude, and

Verify that the columns and rows are pairwise orthogonal i.e., the dot product between any two rows and columns is 0.

Let’s check.

From the matrix, we get

Column 1: [1, 0, 0]

Column 2: [0, cos(θ), sin(θ)]

Column 3: [0, -sin(θ), cos(θ)]

Now,

|[1, 0, 0]| = √(12 + 02 + 02) = 1,

|[0, cos(θ), sin(θ)]| = √(02 + cos2(θ) + sin2(θ)) = 1, and

|[0, -sin(θ), cos(θ)]| = √(02 + sin2(θ) + cos2(θ)) = 1

Thus, each column is a unit vector.

Column 1 ⋅ Column 2 = [1, 0, 0] ⋅ [0, cos(θ), sin(θ)] = 1*0 + 0*cos(θ) + 0*sin(θ) = 0

Similarly, we can check, other columns as well.

Thus, this satisfies all the conditions for a matrix to be orthogonal.

It follows that the provided matrix is an orthogonal matrix given the characteristics of orthogonal matrices.

Determinant of Orthogonal Matrix

Determinant of any Orthogonal Matrix is either +1 or -1. Here, let’s demonstrate the same. Imagine a matrix A that is orthogonal.

For any orthogonal matrix A, we know

A · AT = I

Taking determinants on both sides,

det(A · AT) = det(I)

⇒ det(A) · det(AT) = 1

As, determinant of identity matrix is 1 and det(A) = det(AT)

Thus, det(A) · det(A) = 1

⇒ [det(A)]2 = 1

⇒ det(A) = ±1

Read more about Determinant of a Matrix.

Inverse of Orthogonal Matrix

The inverse of the orthogonal matrix is also orthogonal as inverse is same transpose for orthogonal matrix. As for any matrix to be an orthogonal, inverse of the matrix is equal to its transpose.

For an Orthogonal matrix, we know that

A-1 = AT

Also A · AT = AT · A = I . . . (i)

Let two matrix A and B and if they are inverse of each other then,

A · B = B · A = I . . . (ii)

From (i) and (ii),

B = AT which is same as A = AT

So, we conclude that the transpose of an orthogonal matrix is its inverse only.

Learn moe about Inverse of Matrix.

How to Identify Orthogonal Matrices?

If the transpose of a square matrix with real numbers or elements equals the inverse matrix, the matrix is said to be orthogonal. Or, we may argue that a square matrix is an orthogonal matrix if the product of the square matrix and its transpose results in an identity matrix.

Suppose A is a square matrix with real elements and of n x n order and AT is the transpose of A. Then according to the definition, if, AT = A-1 is satisfied, then,

A ⋅ AT = I

Eigen Value of Orthogonal Matrix

The eigenvalues of an orthogonal matrix are always complex numbers with a magnitude of 1. In other words, if A is an orthogonal matrix, then its eigenvalues λ satisfy the equation |λ| = 1. Let’s prove the same as follows:

Let A be an orthogonal matrix, and let λ be an eigenvalue of A. Also, let v be the corresponding eigenvector.

By the definition of eigenvalues and eigenvectors, we have:

Av = λv

Now, take the dot product of both sides of this equation with itself:

(Av) ⋅ (Av) = (λv) ⋅ (λv)

Since A is orthogonal, its columns are orthonormal, which means that AT (the transpose of A) is also its inverse:

AT ⋅ A = I

Where I is the identity matrix.

Thus, (vTAT) ⋅ Av = (λv)T ⋅ (λv)

⇒ vT (AT A) v = (λv)T (λv)

⇒ vT I v = (λv)T (λv)

⇒ vT v = (λv)T (λv)

⇒ |v|2 = |λ|2 |v|2

Now, divide both sides of the equation by |v|2:

1 = |λ|2

⇒ |λ| = 1

Read more about, Eigenvalues and Eigenvectors.

Multiplicative Inverse of Orthogonal Matrices

The orthogonal matrix’s inverse is also orthogonal. It is the result of the intersection of two orthogonal matrices. An orthogonal matrix is one in which the inverse of the matrix equals the transpose of the matrix.

Properties of an Orthogonal Matrix

Some of the properties of Orthogonal Matrix are:

- Inverse and Transpose are equivalent. i.e., A-1 = AT.

- An identity matrix is the outcome of A and its transpose. That is, AAT = ATA = I.

- In light of the fact that its determinant is never 0, an orthogonal matrix is always non-singular.

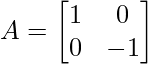

- An orthogonal diagonal matrix is one whose members are either 1 or -1.

- AT is orthogonal as well. A-1 is also orthogonal because A-1 = AT.

- The eigenvalues of A are ±1 and the eigenvectors are orthogonal.

- As I × I = I × I = I, and IT = I. Thus, I an identity matrix (I) is orthogonal.

Orthogonal Matrix Applications

Some of the most common applications of Orthogonal Matrix are:

- Used in multivariate time series analysis.

- Used in multi-channel signal processing.

- Used in QR decomposition.

Read More,

Solved Examples on Orthogonal Matrix

Example 1: Is every orthogonal matrix symmetric?

Solution:

Every time, the orthogonal matrix is symmetric. Thus, the orthogonal matrix is a property of all identity matrices. An orthogonal matrices will also result from the product of two orthogonal matrices. The orthogonal matrix will likewise have a transpose that is orthogonal.

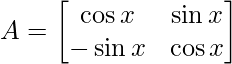

Example 2: Check whether the matrix X is an orthogonal matrix or not?

Solution:

We know that the orthogonal matrix’s determinant is always ±1.

The determinant of X = cos x · cos x – sin x · (-sin x)

⇒ |X| = cos2x + sin2x = 1

⇒ |X| = 1

Hence, X is an Orthogonal Matrix.

Example 3: Prove orthogonal property that multiplies the matrix by transposing results into an identity matrix if A is the given matrix.

Solution:

Thus,

⇒

Which is an identity matrix.

Thus, A is an Orthogonal Matrix.

Practice Problems on Orthogonal Matrix

Q1: Let A be a square matrix:

Determine whether matrix A is orthogonal.

Q2: Given the matrix A:

Is matrix A orthogonal?

Q3: Let Q be an orthogonal matrix. Prove that the transpose of Q is also its inverse, i.e., QT = Q-1

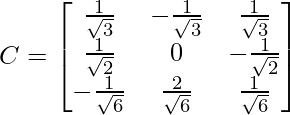

Q4: Consider the matrix C:

Is matrix C orthogonal?

Orthogonal Matrix – FAQs

1. What does the Term “Orthogonal Matrix” Mean?

If the inverse of a square matrix ‘A’ equals the transpose, the matrix is said to be orthogonal. i.e., A-1 = AT. If AAT = ATA = I, where I is the identity matrix, and only if it does so, then a matrix A is said to be orthogonal.

2. Are all Diagonal and Orthogonal Matrices the Same?

Not all diagonal matrices are orthogonal, though. Only when each of a diagonal matrix’s main diagonal members equals 1 or -1 is it considered to be orthogonal.

3. Why is Identity Matrix Orthogonal?

An identity matrix is an orthogonal matrix because its inverse equals its transpose, or because the identity matrix itself is equal to the product of the identity matrix and its transpose.

4. Define Orthogonal Matrix.

An orthogonal matrix is a square matrix in which the rows and columns are mutually orthogonal unit vectors and the transpose of an orthogonal matrix is its inverse.

5. How can you tell If a Matrix is Orthogonal?

To check for matrix is orthogonal or not, we can simply check if AT · A = I (the identity matrix). If it holds for any matrix A, then A is orthogonal matrix.

6. What is the Determinant of Orthogonal Matrix?

An orthogonal matrix has a determinant that is either +1 or -1.

7. What is an Inverse of Orthogonal Matrix?

An orthogonal matrix is one whose inverse is the same as its transpose. In other words, A-1 = AT for any orthogonal matrix A.

8. Does an Orthogonal Matrix Always Have Non-Singularity?

Since its determinant equals 1, an orthogonal matrix is non-singular in all cases.

9. What is an Orthogonal Matrix Eigenvalues?

The eigenvalues of orthogonal matrices have unit modulus i.e., | λ | = 1 .

10. What is an Example of an Orthogonal Matrix?

An example of an orthogonal matrix is the 2×2 matrix:

Where x is any Real Number.

11. What is the Difference between Orthogonal and Orthonormal Matrix?

An orthogonal matrix has orthogonal (perpendicular) columns or rows, meaning their dot products are zero, but they may not have unit lengths. An orthonormal matrix is orthogonal and additionally has columns with unit lengths as well (magnitude 1).

Share your thoughts in the comments

Please Login to comment...