LLMOPS vs MLOPS: Making the Right Choice

Last Updated : 30 Apr, 2024

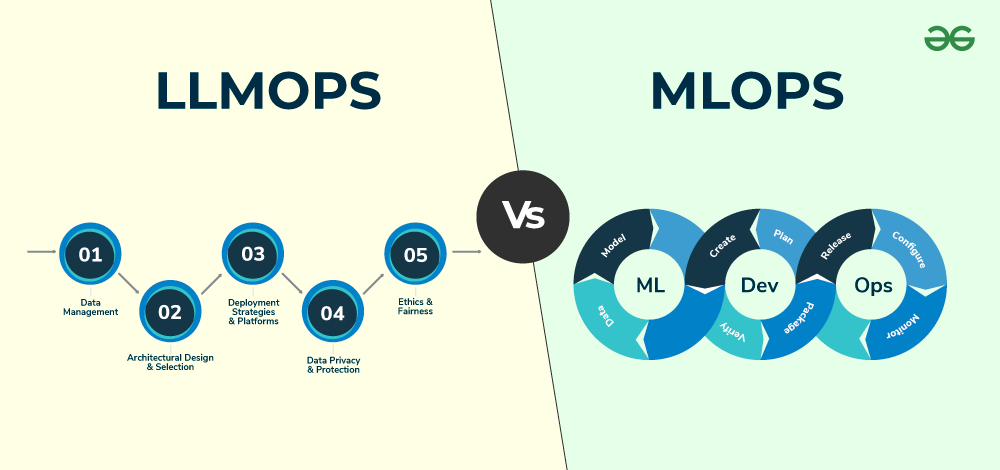

In the rapidly evolving landscape of artificial intelligence and machine learning, new terminologies and concepts frequently emerge, often causing confusion among business leaders, IT analysts, and decision-makers. While sounding similar, LLMOps and MLOps represent distinct approaches that can significantly impact how organizations harness the power of AI technologies.

This article compares LLMOps and MLOps, clarifying their roles, and illustrating the impact of each approach on the deployment and management of AI initiatives.

What is LLMOps?

LLMOps (Large Language Model Operations) is a specialized domain within the broader machine learning operations (MLOps) field. LLMOps focuses specifically on the operational aspects of large language models (LLMs). LLM examples include GPT, BERT, and similar advanced AI systems.

LLMs are large deep learning models trained on vast datasets. They are adaptable to a variety of tasks and specialize in natural language processing (NLP). Addressing LLM risks is an important part of bringing generative AI into production. These risks include bias, IP and privacy issues, toxicity, regulatory non-compliance, misuse, and hallucination. Mitigation starts with ensuring that the training data is reliable, trustworthy, and consistent with ethical values.

What is MLOps?

MLOps (Machine Learning Operations) is a set of practices and processes for streamlining and optimizing the deployment, monitoring, and maintenance of ML models in production environments. It ensures that models are effective and scalable, and that they reach production in an efficient, cost-effective, and timely manner.

MLOps is a merger of ML with DevOps practices to cover the entire lifecycle of the ML model, from development and testing to deployment and maintenance. Activities include managing data, selecting algorithms, training models, and evaluating their performance. This is done automatically, at scale, and while enhancing collaboration.
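The lifecycle activities described above (managing data, training, evaluating, and deciding whether to deploy) can be sketched as a tiny automated pipeline. This is a minimal illustration under stated assumptions, not a production setup: the one-feature threshold "model", the function names (`split`, `train`, `accuracy`, `run_pipeline`), and the `min_accuracy` gate are all hypothetical and chosen only to keep the example self-contained.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical toy dataset type: (feature, label) pairs with labels 0 or 1.
Dataset = List[Tuple[float, int]]


@dataclass
class TrainedModel:
    threshold: float

    def predict(self, x: float) -> int:
        # Predict class 1 when the feature reaches the learned threshold.
        return 1 if x >= self.threshold else 0


def split(data: Dataset, train_fraction: float = 0.75) -> Tuple[Dataset, Dataset]:
    # Data management step: hold out the tail of the data for evaluation.
    cut = int(len(data) * train_fraction)
    return data[:cut], data[cut:]


def accuracy(model: TrainedModel, data: Dataset) -> float:
    # Evaluation step: fraction of examples classified correctly.
    correct = sum(model.predict(x) == y for x, y in data)
    return correct / len(data)


def train(data: Dataset) -> TrainedModel:
    # Training step: try each observed feature value as a threshold
    # and keep the one with the best training accuracy.
    best = TrainedModel(threshold=data[0][0])
    best_score = accuracy(best, data)
    for x, _ in data[1:]:
        candidate = TrainedModel(threshold=x)
        score = accuracy(candidate, data)
        if score > best_score:
            best, best_score = candidate, score
    return best


def run_pipeline(data: Dataset, min_accuracy: float = 0.9) -> dict:
    # Automated gate: only mark the model deployable if it clears the bar
    # on held-out data. The 0.9 default is an assumed, illustrative value.
    train_set, test_set = split(data)
    model = train(train_set)
    score = accuracy(model, test_set)
    return {"model": model, "accuracy": score, "deploy": score >= min_accuracy}


samples = [(0.1, 0), (0.9, 1), (0.2, 0), (0.8, 1),
           (0.3, 0), (0.7, 1), (0.15, 0), (0.85, 1)]
result = run_pipeline(samples)
print(result["accuracy"], result["deploy"])
```

In a real MLOps setup each function would typically be a separate, versioned pipeline stage, and the deployment gate would be enforced by the CI/CD system rather than by a dictionary flag.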

Difference between LLMOPS & MLOPS

| Features | LLMOps | MLOps |
|---|---|---|
| Scope | MLOps for LLMs | Lifecycle management of ML models |
| Model Complexity | High complexity due to size and scope | Varied, from simple to complex models |
| Resource Management | Emphasis on managing extremely large computational resources | Focus on efficient use of resources and automated scaling for scalability and cost-effectiveness |
| Performance Monitoring | Specialized monitoring for biases, ethical concerns, and language nuances | Continuous monitoring for accuracy, drift, etc. |
| Model Training | Updates may involve significant retraining and data refinement | Regular updates based on performance metrics and drift detection |
| Ethical Considerations | High priority due to the potential impact on communication and content generation | Can be a concern, depending on the application |
| Deployment Challenges | MLOps challenges plus model size, integration requirements, and ethical AI considerations | Requires overcoming silos, technological considerations, and resource issues |
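The "Performance Monitoring" row mentions continuous monitoring for drift. One common way to quantify drift in a single numeric feature is the Population Stability Index (PSI), which compares the binned distribution of live data against a reference sample. The sketch below is an illustration only: the helper names, the bin edges, and the 0.25 alert level (a widely quoted rule of thumb, treated here as an assumption) are not taken from this article.

```python
import math
from typing import List, Sequence


def histogram_fractions(values: Sequence[float],
                        edges: Sequence[float],
                        eps: float = 1e-6) -> List[float]:
    # Count how many values fall in each [edges[i], edges[i+1]) bin.
    # The last bin also includes its right edge. Values outside the
    # edges are ignored in the counts but still count toward the total.
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = len(values)
    # Floor each fraction at eps so the logarithm below is always defined.
    return [max(c / total, eps) for c in counts]


def population_stability_index(reference: Sequence[float],
                               live: Sequence[float],
                               edges: Sequence[float]) -> float:
    # PSI = sum over bins of (live - ref) * ln(live / ref).
    ref = histogram_fractions(reference, edges)
    cur = histogram_fractions(live, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical feature values seen at training time and in production.
reference = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
live = [0.6, 0.7, 0.8, 0.9, 0.95, 0.55, 0.65, 0.1]
edges = [0.0, 0.5, 1.0]

psi = population_stability_index(reference, live, edges)
ALERT_LEVEL = 0.25  # assumed rule-of-thumb threshold, tune per use case
print("drift alert" if psi > ALERT_LEVEL else "stable")
```

For LLMs, a numeric check like this would usually be only one signal among several, since the table's LLMOps column also calls for monitoring biases and language nuances that a single distribution statistic does not capture.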

LLMOPS vs MLOPS: Choosing the Right Approach for Your Project

Choosing between MLOps and LLMOps depends on your specific goals, background, and the nature of the projects you’re working on. The following considerations can help you make an informed decision:

- Understand your goals : Define your primary objectives by asking whether you focus on deploying machine learning models efficiently (MLOps) or working with large language models like GPT-3 (LLMOps).

- Project requirements : Consider the nature of your projects by checking if you primarily deal with text and language-related tasks or with a wider range of machine learning models. If your project heavily relies on natural language processing and understanding, LLMOps is more relevant.

- Resources and infrastructure : Think about the resources and infrastructure you have access to. MLOps may involve setting up infrastructure for model deployment and monitoring. LLMOps may require significant computing resources due to the computational demands of large language models.

- Evaluate expertise and team composition : Assess your in-house skill set. Is your team more experienced in machine learning, software development, or both? Do you have members with machine learning expertise, DevOps expertise, or both? MLOps often involves collaboration between data scientists, software engineers, and DevOps professionals and requires expertise in deploying, monitoring, and managing machine learning models. LLMOps involves working with large language models, understanding their capabilities, and integrating them into applications.

- Industry and use cases : Explore the industry you’re in and the specific use cases you’re addressing. Some industries may heavily favour one approach over the other. LLMOps might be more relevant in industries like content generation, chatbots, and virtual assistants.

- Hybrid approach : Remember that there’s no strict division between MLOps and LLMOps. Some projects may require a combination of both systems.
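To make the "Resources and infrastructure" point more concrete, a rough back-of-the-envelope estimate of the memory needed just to hold a model's weights is the parameter count multiplied by the bytes per parameter. The sketch below assumes this simple formula and uses hypothetical parameter counts; it deliberately ignores activations, caches, and optimizer state, all of which add to the real footprint.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str = "fp16") -> float:
    # Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes).
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Hypothetical model sizes, chosen only for illustration.
for name, params in [("small classical model", 1_000_000),
                     ("7B-parameter LLM", 7_000_000_000)]:
    print(name, weight_memory_gb(params, "fp16"), "GB in fp16")
```

Even this lower bound shows why infrastructure planning differs: a model with millions of parameters fits comfortably on almost any machine, while one with billions may already need dedicated accelerator memory before any request is served.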

Conclusion

MLOps and LLMOps are complementary, not competing, approaches. MLOps focuses on building, evaluating, and deploying models and automates the ML workflow, while broader model operations focus on governance and full lifecycle management of AI and ML, operationalizing the whole process and providing dashboards, reports, and more. LLMOps applies these same practices to large language models. MLOps is used mainly by data scientists and ML engineers, whereas governance-level model operations serve people at a higher level of the organization. An organization that wants to implement AI or machine learning will likely need both, so that the business can scale faster as more models are deployed.
