Batch Processing With Spring Cloud Data Flow

Last Updated: 06 Mar, 2024

Spring Cloud Data Flow (SCDF) is an open-source architectural component that uses other well-known Java-based technologies, such as Spring Boot, Spring Cloud Stream, and Spring Cloud Task, to create streaming and batch data processing pipelines. Batch processing is the uninterrupted, interaction-free processing of a finite amount of data.
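To make the definition concrete, here is a minimal plain-Java sketch (no Spring involved) of a batch run over a finite input: it reads a bounded list, transforms each item, writes the results in fixed-size chunks, and stops once the input is exhausted. The names `FiniteBatchModel` and `processInChunks`, the chunk size, and the "multiply by 10" transformation are all hypothetical. Spring Batch's chunk-oriented steps follow a similar read-process-write cycle, but this is not its API.

```java
import java.util.ArrayList;
import java.util.List;

public class FiniteBatchModel {

    // Reads a finite input, "processes" each item (multiplies it by 10 as a stand-in
    // for real business logic), and "writes" the results one chunk at a time.
    // The loop ends when the input is exhausted; no user interaction is involved.
    static List<List<Integer>> processInChunks(List<Integer> input, int chunkSize) {
        if (chunkSize <= 0) {
            throw new IllegalArgumentException("chunkSize must be positive");
        }
        List<List<Integer>> written = new ArrayList<>();
        for (int start = 0; start < input.size(); start += chunkSize) {
            int end = Math.min(start + chunkSize, input.size());
            List<Integer> chunk = new ArrayList<>();
            for (Integer item : input.subList(start, end)) {
                chunk.add(item * 10); // process step
            }
            written.add(chunk); // write step: one write per chunk
        }
        return written;
    }

    public static void main(String[] args) {
        System.out.println(processInChunks(List.of(1, 2, 3, 4, 5), 2));
        // prints [[10, 20], [30, 40], [50]]
    }
}
```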

Components of Spring Cloud Data Flow

- Application runtime: SCDF needs an application runtime to run on, such as Kubernetes, Cloud Foundry, Apache YARN, Apache Mesos, or a local server.

- Data Flow Server: This server sets up the applications (prebuilt starters and/or custom ones) and the messaging middleware that connects them, so that they can be launched at runtime from the dashboard, the shell, or directly through the REST API.

- Messaging middleware: SCDF supports two message brokers, Apache Kafka and RabbitMQ, and uses them to connect the Spring Boot applications it deploys.

- Applications: Applications are grouped by type into sources, processors, and sinks. A source application receives data from an HTTP endpoint, a cache, or persistent storage; a processor transforms that data; and a sink writes it to its destination. Short-lived batch jobs, like the one built in this article, are registered as task applications instead.
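The source, processor, and sink roles can be modeled in plain Java with `java.util.function` types, which is roughly how Spring Cloud Stream's functional model expresses them (a `Supplier` as a source, a `Function` as a processor, a `Consumer` as a sink). The sketch below is illustrative only: `PipelineModel` and `runPipeline` are hypothetical names, and the in-memory `ArrayDeque` queues merely stand in for the Kafka or RabbitMQ destinations that SCDF would wire between deployed applications.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Queue;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Supplier;

public class PipelineModel {

    // Wires a source, a processor, and a sink together through two in-memory queues.
    // The queues stand in for the broker destinations SCDF would create between apps.
    static List<String> runPipeline(List<String> input) {
        Queue<String> sourceToProcessor = new ArrayDeque<>();
        Queue<String> processorToSink = new ArrayDeque<>();

        Iterator<String> records = input.iterator();
        // Source: emits the next record, or null once the input is exhausted
        Supplier<String> source = () -> records.hasNext() ? records.next() : null;
        // Processor: transforms each record
        Function<String, String> processor = String::toUpperCase;
        // Sink: writes each record to its destination (here, a list)
        List<String> destination = new ArrayList<>();
        Consumer<String> sink = destination::add;

        for (String rec = source.get(); rec != null; rec = source.get()) {
            sourceToProcessor.offer(rec);
        }
        while (!sourceToProcessor.isEmpty()) {
            processorToSink.offer(processor.apply(sourceToProcessor.poll()));
        }
        while (!processorToSink.isEmpty()) {
            sink.accept(processorToSink.poll());
        }
        return destination;
    }

    public static void main(String[] args) {
        System.out.println(runPipeline(List.of("alpha", "beta", "gamma")));
        // prints [ALPHA, BETA, GAMMA]
    }
}
```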

Step-by-Step Implementation of Batch Processing with Spring Cloud Data Flow

Below are the steps to implement Batch Processing with Spring Cloud Data Flow.

Step 1: Maven Dependencies

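Let’s add the necessary Maven dependencies first. Since this is a batch application, we need the Spring Batch starter. The @EnableTask annotation used in Step 2 comes from Spring Cloud Task, so its starter is needed as well:

```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-batch</artifactId>
</dependency>

<!-- Provides @EnableTask. The version is assumed to be managed by the
     spring-cloud-dependencies BOM in <dependencyManagement>; otherwise add a <version>. -->
<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-task</artifactId>
</dependency>
```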

Step 2: Add Main Class

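Add the @EnableTask and @EnableBatchProcessing annotations to the main Spring Boot class. The class-level @EnableTask annotation tells Spring Cloud Task to bootstrap the application as a task, so each run (start time, end time, exit code) is recorded in its task repository. @EnableBatchProcessing sets up the Spring Batch infrastructure, such as the JobRepository and, in Spring Batch 4.x, the JobBuilderFactory and StepBuilderFactory beans.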

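```java
import org.springframework.batch.core.configuration.annotation.EnableBatchProcessing;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.cloud.task.configuration.EnableTask;

@EnableTask             // bootstrap the app as a Spring Cloud Task and record each run
@EnableBatchProcessing  // set up Spring Batch infrastructure (Spring Batch 4.x / Spring Boot 2.x style)
@SpringBootApplication
public class BatchProcessingApplication {

    public static void main(String[] args) {
        // Pass this class itself to SpringApplication.run
        SpringApplication.run(BatchProcessingApplication.class, args);
    }
}
```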
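As a rough mental model of what @EnableTask adds, the plain-Java sketch below wraps a unit of work and records when it started, when it ended, and its exit code, which is the kind of information Spring Cloud Task stores for each task execution. Everything here (`TaskLifecycleModel`, `TaskRun`, `runAsTask`, and the choice of exit code 1 for a failure) is a hypothetical simplification, not Spring Cloud Task's API; the `record` requires Java 16 or later.

```java
import java.time.Instant;

public class TaskLifecycleModel {

    // Hypothetical summary of one run, loosely mirroring what Spring Cloud Task records
    record TaskRun(String taskName, Instant startTime, Instant endTime,
                   int exitCode, String errorMessage) {}

    // Runs the body and records its start time, end time, and outcome.
    // Assumption for this sketch: exit code 0 on success, 1 if the body throws.
    static TaskRun runAsTask(String taskName, Runnable body) {
        Instant start = Instant.now();
        int exitCode = 0;
        String errorMessage = null;
        try {
            body.run();
        } catch (RuntimeException e) {
            exitCode = 1;
            errorMessage = e.getMessage();
        }
        return new TaskRun(taskName, start, Instant.now(), exitCode, errorMessage);
    }

    public static void main(String[] args) {
        TaskRun ok = runAsTask("batch-job", () -> System.out.println("Job logic executed successfully"));
        TaskRun failed = runAsTask("batch-job", () -> { throw new IllegalStateException("step failed"); });
        System.out.println(ok.exitCode() + " " + failed.exitCode() + " " + failed.errorMessage());
        // last line printed: 0 1 step failed
    }
}
```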

Step 3: Configure a Job

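Next, configure the job. It has a single tasklet step that simply writes a string to the log. Note that JobBuilderFactory and StepBuilderFactory belong to the Spring Batch 4.x API (Spring Boot 2.x). Spring Batch 5 (Spring Boot 3) deprecates them and no longer registers them as beans; there, JobBuilder and StepBuilder are used with an explicit JobRepository instead.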

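```java
import org.apache.commons.logging.Log;
import org.apache.commons.logging.LogFactory;
import org.springframework.batch.core.Job;
import org.springframework.batch.core.configuration.annotation.JobBuilderFactory;
import org.springframework.batch.core.configuration.annotation.StepBuilderFactory;
import org.springframework.batch.repeat.RepeatStatus;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class JobConfiguration {

    private static final Log logger = LogFactory.getLog(JobConfiguration.class);

    // Builder factories exposed as beans by @EnableBatchProcessing (Spring Batch 4.x)
    @Autowired
    private JobBuilderFactory jobBuilderFactory;

    @Autowired
    private StepBuilderFactory stepBuilderFactory;

    @Bean
    public Job job() {
        return jobBuilderFactory.get("job")
                .start(stepBuilderFactory.get("jobStep1")
                        // Tasklet step: log a message, then return FINISHED so it runs only once
                        .tasklet((contribution, chunkContext) -> {
                            logger.info("Job logic executed successfully");
                            return RepeatStatus.FINISHED;
                        })
                        .build())
                .build();
    }
}
```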
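A tasklet step calls its tasklet repeatedly until it returns `RepeatStatus.FINISHED`; returning `RepeatStatus.CONTINUABLE` asks for another call. The job above therefore finishes after a single call. The plain-Java sketch below models that loop with hypothetical stand-ins (`Status`, `SimpleTasklet`, `runStep`) rather than the real Spring Batch types, and it omits the transaction that Spring Batch wraps around each call.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class TaskletLoopModel {

    // Hypothetical stand-ins for Spring Batch's RepeatStatus and Tasklet
    enum Status { CONTINUABLE, FINISHED }

    interface SimpleTasklet {
        Status execute();
    }

    // Calls the tasklet until it reports FINISHED and returns how many calls were made
    static int runStep(SimpleTasklet tasklet) {
        int calls = 0;
        Status status;
        do {
            status = tasklet.execute();
            calls++;
        } while (status == Status.CONTINUABLE);
        return calls;
    }

    public static void main(String[] args) {
        // Like the article's tasklet: log once, then finish
        int single = runStep(() -> {
            System.out.println("Job logic executed successfully");
            return Status.FINISHED;
        });
        System.out.println(single); // prints 1

        // A tasklet that needs three calls before it reports FINISHED
        AtomicInteger remaining = new AtomicInteger(3);
        int multi = runStep(() -> remaining.decrementAndGet() > 0 ? Status.CONTINUABLE : Status.FINISHED);
        System.out.println(multi); // prints 3
    }
}
```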

Step 4: Register the Application

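To register the application with the App Registry, we need a unique name, an application type, and a URI that points to the application artifact. The artifact must be available in a Maven repository that the Data Flow Server can reach (for example, installed into the local repository with mvn install). Enter the following command at the Spring Cloud Data Flow Shell prompt: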

```
app register --name batch-job --type task --uri maven://org.geeksforgeeks.spring.cloud:batch-job:jar:0.1.1-SNAPSHOT
```

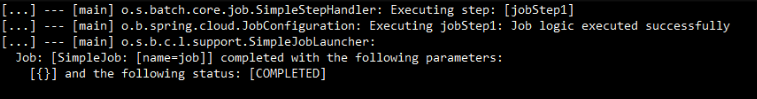
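The `maven://` URI passed to `--uri` encodes Maven coordinates in the form `maven://<groupId>:<artifactId>[:<extension>[:<classifier>]]:<version>`. The sketch below, with hypothetical names (`MavenUriModel`, `Coordinates`, `parse`, `repositoryPath`), splits such a URI and builds the relative path where Maven stores the artifact inside a local repository, which can help when checking that the jar was installed before registering it. It assumes the extension defaults to `jar` when omitted, does not handle timestamped snapshots from remote repositories, and needs Java 16 or later for the `record`.

```java
public class MavenUriModel {

    // Hypothetical holder for the coordinates encoded in a maven:// URI
    record Coordinates(String groupId, String artifactId, String extension,
                       String classifier, String version) {}

    // Parses maven://<groupId>:<artifactId>[:<extension>[:<classifier>]]:<version>
    static Coordinates parse(String uri) {
        String prefix = "maven://";
        if (!uri.startsWith(prefix)) {
            throw new IllegalArgumentException("Not a maven:// URI: " + uri);
        }
        String[] p = uri.substring(prefix.length()).split(":");
        switch (p.length) {
            case 3: return new Coordinates(p[0], p[1], "jar", null, p[2]); // extension assumed to be jar
            case 4: return new Coordinates(p[0], p[1], p[2], null, p[3]);
            case 5: return new Coordinates(p[0], p[1], p[2], p[3], p[4]);
            default: throw new IllegalArgumentException("Unexpected number of coordinates: " + uri);
        }
    }

    // Builds the path of the artifact relative to the root of a local Maven repository
    static String repositoryPath(Coordinates c) {
        String file = c.artifactId() + "-" + c.version()
                + (c.classifier() == null ? "" : "-" + c.classifier())
                + "." + c.extension();
        return c.groupId().replace('.', '/') + "/" + c.artifactId() + "/" + c.version() + "/" + file;
    }

    public static void main(String[] args) {
        Coordinates c = parse("maven://org.geeksforgeeks.spring.cloud:batch-job:jar:0.1.1-SNAPSHOT");
        System.out.println(repositoryPath(c));
        // prints org/geeksforgeeks/spring/cloud/batch-job/0.1.1-SNAPSHOT/batch-job-0.1.1-SNAPSHOT.jar
    }
}
```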

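Output:

After registering the application, create a task definition from it and launch it, for example with `task create batch-job-task --definition batch-job` followed by `task launch batch-job-task` (the name batch-job-task is arbitrary). All the job does is write the string "Job logic executed successfully" to its log. The log files can be found in the directory that appears in the log output of the Data Flow Server.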

This completes a minimal example of batch processing with Spring Cloud Data Flow.
