Kubernetes Horizontal vs Vertical scaling

Last Updated: 18 Apr, 2024

In Kubernetes, scaling is one of the most fundamental concepts for making sure applications run smoothly and consistently. Consider a scenario where insufficient resources cause an application to freeze under its current load. One solution is to assign more resources manually whenever this happens, but that takes a lot of time. This is where scaling (and, more specifically, autoscaling) comes in: the ability to scale applications efficiently to meet varying demand is one of the key features of Kubernetes.

What Is Scaling In Kubernetes?

Scaling in Kubernetes means dynamically adjusting the number of running instances (pods) of an application or service, or the resources allocated to them, based on demand. With scaling, we can manage resources easily and make sure applications stay available and can handle varying requirements. In simple words, it is the process of dynamically adjusting available resources.

Types of Scaling in Kubernetes

There are primarily two types of scaling, plus a third mechanism that operates at the node level:

- Horizontal scaling/Horizontal Pod Autoscaler (HPA): automatically adds or removes pod replicas based on observed metrics such as CPU utilization.

- Vertical scaling/Vertical Pod Autoscaler (VPA): automatically adjusts the CPU and memory reservations (requests) of containers.

- Cluster Autoscaler: adds nodes to the cluster when pods cannot be scheduled because of insufficient resources, and removes underutilized nodes, so the cluster can handle the load.

Horizontal Scaling

Horizontal scaling means adding more instances to our infrastructure (in Kubernetes, more pod replicas, or more nodes at the cluster level) and distributing the workload across them. That is why it is also known as “scaling out.” Cloud services, especially those that provide autoscaling and serverless computing, have made scaling workloads much easier, and this approach has proven useful in many scenarios.

Suppose an online video streaming platform experiences a sudden increase in viewership. Initially, the service runs on a Kubernetes cluster with 5 pods, each on a node with 4 vCPUs and 8 GB of RAM. To handle the sudden increase in demand, the service scales horizontally by increasing the number of pods. Using Kubernetes’ HPA (Horizontal Pod Autoscaler), the number of pods automatically increases from 5 to 15. This spreads the load across more pods (which may be scheduled onto additional nodes), allowing the service to keep streaming smoothly.
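The HPA's core calculation, as described in the Kubernetes documentation, is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default). The Python sketch below applies that rule to the streaming example, assuming the 5 pods average 150% CPU utilization against a 50% target; these utilization numbers are hypothetical, chosen to reproduce the 5 → 15 jump. It deliberately omits what the real controller also handles, such as stabilization windows, missing metrics and pods that are not yet ready.

```python
import math


def desired_replicas(current_replicas: int,
                     current_utilization: float,
                     target_utilization: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current * currentMetric / targetMetric),
    skipped inside the tolerance band, then clamped to [min, max]."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # minReplicas / maxReplicas bound the result, as on a real HPA object.
    return max(min_replicas, min(max_replicas, desired))


# Streaming example: 5 pods at a hypothetical 150% vs. a 50% target.
print(desired_replicas(5, 150, 50, max_replicas=20))  # 15
```

Note that with the default max_replicas=10 the same call would stop at 10, which is why the maximum must leave room for the expected peak.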

Horizontal scaling has several advantages. It offers high availability, because a distributed system provides redundant servers and failover mechanisms. It supports predictable growth, since we can add more servers as needed and scale capacity as required. It also tends to improve performance and lower costs over time. However, it has downsides as well. It can be complex to implement, since setting up and managing a distributed system is harder than managing a single server, and it may involve higher upfront costs. Horizontal scaling is generally preferred for mission-critical workloads that form the backbone of a company's core operations.

Limitations

- If the nodes are already running low on resources, increasing the number of pods won’t necessarily help, because the new pods may stay Pending until more capacity is added.

- It cannot be applied to objects that cannot be scaled, such as a DaemonSet.

- Out of the box, it scales on CPU and memory reported by the Metrics Server; custom and external metrics, which complex distributed applications often need, require installing an additional metrics adapter.

- More replicas consume more resources, which can increase cost.

- Horizontal scaling is much less effective for stateful applications.
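The first limitation can be illustrated with a toy model: a pod is only placed on a node whose unreserved CPU and memory cover the pod's requests. The Python sketch below uses a simple first-fit loop, which is an assumption for illustration and not the actual kube-scheduler algorithm; the node names and sizes are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_m: int   # CPU not yet reserved by pod requests, in millicores
    free_mem_mi: int  # memory not yet reserved by pod requests, in MiB


def place_pods(nodes, pod_cpu_m, pod_mem_mi, count):
    """Toy first-fit placement of `count` identical pods.

    Returns (scheduled, pending). Mutates the nodes' free capacity.
    """
    for scheduled in range(count):
        target = next(
            (n for n in nodes
             if n.free_cpu_m >= pod_cpu_m and n.free_mem_mi >= pod_mem_mi),
            None,
        )
        if target is None:
            # No node has room: this replica and every later identical one
            # would stay Pending until more capacity (nodes) is added.
            return scheduled, count - scheduled
        target.free_cpu_m -= pod_cpu_m
        target.free_mem_mi -= pod_mem_mi
    return count, 0


nodes = [Node("node-a", 1000, 2048), Node("node-b", 1000, 2048)]
print(place_pods(nodes, pod_cpu_m=500, pod_mem_mi=512, count=6))  # (4, 2)
```

Asking for 6 replicas here only yields 4 running pods; the remaining 2 need extra nodes, which is the gap the Cluster Autoscaler is meant to fill.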

Vertical Scaling

Vertical scaling focuses on increasing the capacity of an existing system, unlike horizontal scaling, which increases the number of machines. Put simply, vertical scaling adds more resources such as CPU and memory, which is why it is also called “scaling up.” In essence, it upgrades the running system: we move to a more powerful machine (or, in Kubernetes, give a pod larger CPU and memory allocations) without changing the number of instances.

Real World Scenario of Vertical Scaling

Consider a small business’s accounting application originally hosted on a server with 2 vCPUs and 4 GB of RAM. Over time, as the application accumulates more data, it starts to suffer performance problems due to the increased workload. To solve this, the server is upgraded to a more powerful configuration with 8 vCPUs and 16 GB of RAM. The upgrade lets the application handle larger data workloads efficiently without changing the number of servers.
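In Kubernetes, the counterpart of this server upgrade is raising a container's CPU and memory requests, and the VPA derives those requests from observed usage. The real VPA recommender is more elaborate (decaying usage histograms, separate CPU and memory handling, lower and upper bounds). The Python sketch below is only loosely modeled on the idea of targeting a high percentile of usage plus a safety margin; the 90th percentile, the 15% margin and the sample values are assumptions for illustration.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile of a non-empty list; p is in (0, 100]."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_cpu_request(usage_m, p=90, margin_pct=15):
    """Suggest a CPU request (millicores): p-th percentile of usage plus a margin."""
    base = percentile(usage_m, p)
    # Multiplying by an integer percentage before dividing keeps the result exact.
    return math.ceil(base * (100 + margin_pct) / 100)


# Hypothetical CPU usage samples (millicores), including one short spike.
samples = [200, 250, 300, 320, 400, 450, 500, 520, 600, 1500]
print(recommend_cpu_request(samples))  # 690
```

Using a percentile rather than the maximum means the single 1500m spike does not drive the recommendation, at the cost of occasionally running close to the request during rare peaks.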

Pros and Cons of Vertical Scaling

Vertical scaling can sometimes be cost-efficient, and it is simpler to work with and easier to maintain. Alongside these advantages, it has several disadvantages. For instance, it can create a single point of failure. Vertical scaling is also inherently limited by the largest machine available, and it can be costly when new hardware is required. It is generally preferred for non-critical systems and for workloads whose demand is expected to stay modest, with only limited growth in the future.

Limitations

- Vertical scaling usually requires restarting the affected pod so that the new resource values take effect, which can make the application temporarily unavailable.

- A node has a fixed maximum amount of resources. When a pod needs more than a single node can provide, vertical scaling is not an effective solution.

- Mixed workloads (for example, some CPU-intensive and some memory-intensive components) add complexity, because each needs its own resource tuning.

- Vertical scaling is not suitable for all workloads. For instance, workloads with highly dynamic resource requirements (such as a VPN service) may be restarted repeatedly as their needs change, causing repeated temporary unavailability.

- Resources must be configured carefully; fine-tuning is needed.
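Two of these limitations, the node-size ceiling and the need for careful tuning, show up when a recommendation is bounded. The VPA's resource policy accepts minAllowed and maxAllowed per container; the Python sketch below clamps a CPU recommendation to such bounds and then checks it against a single node's allocatable CPU. All numbers are hypothetical, and the node check assumes an otherwise empty node.

```python
def apply_bounds(recommended_m, min_allowed_m, max_allowed_m, node_allocatable_m):
    """Clamp a CPU recommendation (millicores) to hypothetical minAllowed/maxAllowed
    bounds, then report whether the result fits on one (otherwise empty) node."""
    capped = max(min_allowed_m, min(max_allowed_m, recommended_m))
    fits_on_node = capped <= node_allocatable_m
    return capped, fits_on_node


print(apply_bounds(690, 100, 1000, 4000))   # (690, True)
print(apply_bounds(6000, 100, 8000, 4000))  # (6000, False)
```

The second call is the case where vertical scaling stops helping: no request value can make a 6000m pod fit on a 4000m node, so the workload would need horizontal scaling or larger nodes instead.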

Kubernetes Horizontal vs Vertical scaling

| Parameter | Horizontal Scaling | Vertical Scaling |
|---|---|---|
| Main aim | Increasing/decreasing the number of instances (pod replicas or machines) | Adjusting the CPU and memory of existing instances |
| Best used for | Stateless applications | Stateful applications |
| Scalability | High | Limited by the maximum resources of a single machine |
| Complexity | High | Lower |
| Tool used | HPA (Horizontal Pod Autoscaler) | VPA (Vertical Pod Autoscaler) |
| Fault tolerance | Higher; one failure doesn’t affect the others | Lower; one failure can affect everything |
| Cost | Can be cost-effective | Can lead to higher costs |

Similarities Between Horizontal and Vertical Scaling

- Both horizontal and vertical scaling aim to enhance an application’s ability to handle its workload.

- Both offer automated scaling: horizontal scaling uses the HPA (Horizontal Pod Autoscaler) and vertical scaling uses the VPA (Vertical Pod Autoscaler).

- Both help improve performance and availability, which leads to more effective and efficient management and utilization of resources.

- Both are integral tools in the Kubernetes ecosystem, and both focus on efficiency.

How To Use Scaling?

To use scaling, we configure the cluster to automatically adjust the number of pods (horizontal scaling) or their resource allocations (vertical scaling) depending on workload needs.

Horizontal Scaling

- “kubectl” installed and configured to communicate.

- Make sure Metrics Server in deployed.

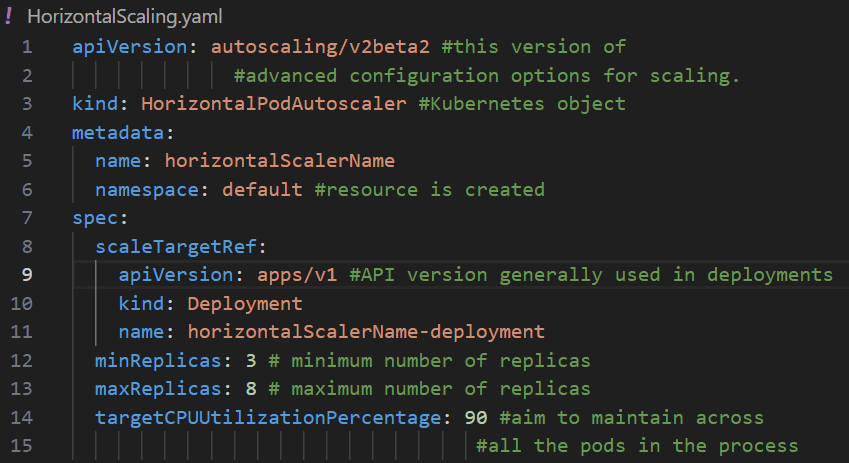

- Create a resource definition in a YAML file.

- Deployment of this by following command:

kubectl apply -f HorizontalScaling.yaml

- Monitor the Deployment with the following command (running kubectl get hpa -n default also shows the autoscaler’s current and target metrics):

kubectl get deployment horizontalScalerName-deployment -n default
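The steps above leave the contents of the YAML file unspecified. As one possible version, the Python sketch below builds a HorizontalPodAutoscaler using the autoscaling/v2 API and prints it as JSON. The Deployment name web, the 1 to 10 replica range and the 50% CPU target are hypothetical values; for a Utilization target, the Deployment's containers must declare CPU requests.

```python
import json


def hpa_manifest(deployment, min_replicas=1, max_replicas=10,
                 cpu_target=50, namespace="default"):
    """Build an autoscaling/v2 HorizontalPodAutoscaler for a Deployment."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment}-hpa", "namespace": namespace},
        "spec": {
            # The workload whose replica count the HPA controls.
            "scaleTargetRef": {"apiVersion": "apps/v1",
                               "kind": "Deployment",
                               "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            # Scale on average CPU utilization relative to the pods' requests.
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization",
                               "averageUtilization": cpu_target},
                },
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(hpa_manifest("web"), indent=2))
```

Because JSON is also valid YAML, the printed output could be saved as HorizontalScaling.yaml and applied with the kubectl apply command above.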

Vertical Scaling

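- Just like with horizontal scaling, make sure we have a cluster and “kubectl”.

- Deploy the VPA components (recommender, updater and admission controller) in the cluster if they are not already installed; they are not part of a default Kubernetes installation.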

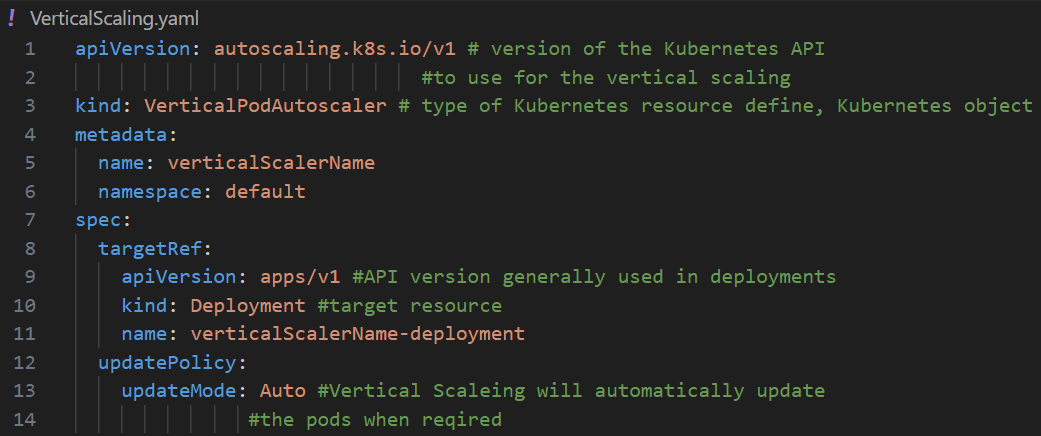

- Create the resource definition for vertical scaling (a VerticalPodAutoscaler) in a YAML file.

- Deploy it with the following command:

kubectl apply -f VerticalScaling.yaml

- Monitor the Deployment with the following command:

kubectl get deployment verticalScalerName-deployment -n default
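Likewise, the VerticalScaling.yaml contents are not given. The VPA is a custom resource in the autoscaling.k8s.io API group, so it only works once the VPA components are installed. The Python sketch below builds one with hypothetical values: the Deployment name web and the minAllowed/maxAllowed bounds are illustrative. Setting updateMode to "Off" makes the VPA compute recommendations without restarting pods, which can be a safer starting point given the restart limitation described earlier.

```python
import json


def vpa_manifest(deployment, update_mode="Auto", namespace="default"):
    """Build a VerticalPodAutoscaler (autoscaling.k8s.io/v1) for a Deployment."""
    return {
        "apiVersion": "autoscaling.k8s.io/v1",
        "kind": "VerticalPodAutoscaler",
        "metadata": {"name": f"{deployment}-vpa", "namespace": namespace},
        "spec": {
            "targetRef": {"apiVersion": "apps/v1",
                          "kind": "Deployment",
                          "name": deployment},
            # "Off" only records recommendations; "Auto" applies them to pods.
            "updatePolicy": {"updateMode": update_mode},
            "resourcePolicy": {
                "containerPolicies": [{
                    "containerName": "*",  # apply the bounds to every container
                    "minAllowed": {"cpu": "100m", "memory": "128Mi"},
                    "maxAllowed": {"cpu": "2", "memory": "2Gi"},
                }],
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(vpa_manifest("web", update_mode="Off"), indent=2))
```

After applying it, kubectl describe vpa web-vpa shows the current recommendations.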

When to Use Scaling?

- When an application experiences a sudden increase in workload. By monitoring resource utilization, Kubernetes scaling tools can handle the increased load and manage resources efficiently.

- For high availability and fault tolerance: to ensure high availability, we might scale the application across other nodes or even different regions.

- When performance optimization and autoscaling for dynamic workloads are required.

- When preparing for predicted load, and in microservices architectures, where scaling allows us to adjust resources for each microservice individually.

- Proper Deployment Strategies are needed.

Conclusion

Horizontal and vertical scaling in Kubernetes serve the same basic objective, adapting applications to changing workloads, through different mechanisms. Horizontal scaling is generally used for stateless applications in Kubernetes; it handles sudden traffic spikes easily by adding or removing pod replicas. Vertical scaling is more geared toward stateful applications and concentrates on increasing the resources available to existing pods. The choice between horizontal and vertical scaling always depends on the application’s needs, infrastructure, configuration, and goals.

Kubernetes Horizontal and Vertical Scaling – FAQs

Can We Use Both Horizontal And Vertical Scaling Together?

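Yes. We can use horizontal and vertical scaling together, depending on the use case. One caveat: the VPA documentation advises against combining the VPA with an HPA that scales on the same CPU or memory metric, because both would react to the same signal.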

How Can Someone Choose Between Horizontal and Vertical Scaling?

Choose horizontal scaling for distributed systems with dynamic workloads, and vertical scaling when a single, more powerful machine can meet current and future needs.

In Which Cases Is Vertical Scaling Generally Preferred?

Vertical scaling is generally preferred for stateful applications in Kubernetes, where adding more replicas is not easy because each replica needs a stable network identity and consistent state.

Is There Any Limit on How Much a Kubernetes Environment Can Be Scaled?

Yes. We can set limits for both, according to the needs of the Kubernetes resources: the HPA has minReplicas and maxReplicas, and the VPA’s resource policy has minAllowed and maxAllowed.

What Is The Role Of Monitoring in Effective Scaling?

Monitoring provides the data about client traffic and resource usage that autoscalers rely on, which helps us scale pods effectively.
