To make sure our model’s performance satisfies evolving expectations and criteria, proper evaluation is crucial when it comes to machine learning model construction. Yandex’s CatBoost is a potent gradient-boosting library that gives machine learning practitioners and data scientists a toolbox of measures for evaluating model performance.

CatBoost

CatBoost, short for “Categorical Boosting,” is an open-source library specifically designed for gradient boosting. It is known for its efficiency, accuracy, and ability to handle categorical features with ease, which makes it a go-to choice for many real-world machine-learning tasks. However, a model’s true worth is measured not just by its algorithm but also by how it performs in practice. That’s where metrics come into play. In CatBoost, the ‘eval_metric’ training parameter and the model’s ‘eval_metrics()’ method are the basic tools for model evaluation, and together they cover a wide range of metrics. CatBoost also provides other evaluation functions, such as cross-validation and feature importance.

CatBoost Metrics

Metrics for Classification

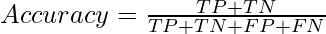

1. Accuracy: Accuracy is a popular metric for assessing how well classification models work. It is the fraction of predictions that a model gets right on a particular dataset.

Accuracy for binary classification is:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Where,

- TP (True Positives): The number of cases correctly predicted as positive (that is, correctly classified as belonging to the class of interest).

- TN (True Negatives): The number of cases correctly predicted as negative (that is, correctly classified as not belonging to the class of interest).

- FP (False Positives): The number of cases wrongly predicted as positive (that is, wrongly classified as belonging to the class of interest).

- FN (False Negatives): The number of cases wrongly predicted as negative (that is, wrongly classified as not belonging to the class of interest).
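As a quick illustration, accuracy can be computed directly from the four counts defined above. The sketch below uses made-up counts, and the function name `accuracy` is only illustrative.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of all predictions that were correct."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0

# Hypothetical confusion-matrix counts for 100 test instances
acc = accuracy(tp=40, tn=45, fp=5, fn=10)
print(f"Accuracy: {acc:.2f}")  # Accuracy: 0.85
```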

2. Multiclass Log Loss: Multiclass Log Loss, sometimes referred to as cross-entropy loss, is a frequently used metric for assessing classification models on multiclass problems. It measures how far each instance’s predicted class probabilities are from its true class label.

The mathematical representation of multiclass log loss is as follows:

$$\text{Multiclass Log Loss} = -\frac{1}{N} \sum_{i=1}^{N} \sum_{j=1}^{M} y_{ij} \log(p_{ij})$$

Where,

- N: The total no. of instances in the dataset.

- M: The total no. of classes.

- $y_{ij}$: A binary indicator (0 or 1) of whether class j is the correct classification for instance i.

- $p_{ij}$: The predicted probability that instance i belongs to class j.
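To make the double sum concrete, here is a minimal pure-Python sketch on three made-up instances. Because the indicator is 1 only for the true class, the inner sum reduces to the log of the probability assigned to that class. The function name and the `eps` clipping constant are assumptions for illustration, not CatBoost’s implementation.

```python
import math

def multiclass_log_loss(y_true, probs, eps=1e-15):
    """y_true: true class indices; probs: one list of class probabilities per instance."""
    total = 0.0
    for label, p in zip(y_true, probs):
        # Clip to avoid log(0); only the true class contributes to the inner sum
        total += math.log(min(max(p[label], eps), 1.0))
    return -total / len(y_true)

# Hypothetical predictions for three instances and three classes
y_true = [0, 2, 1]
probs = [[0.7, 0.2, 0.1],
         [0.1, 0.3, 0.6],
         [0.2, 0.5, 0.3]]
loss = multiclass_log_loss(y_true, probs)
print(f"Multiclass log loss: {loss:.4f}")  # Multiclass log loss: 0.5202
```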

3. Binary Log Loss: Binary Log Loss, sometimes referred to as logistic loss or cross-entropy loss, is a frequently used metric for assessing binary classification models. It measures how far each instance’s predicted probability is from its true binary label.

The mathematical representation of Binary Log Loss is as follows:

$$\text{Binary Log Loss} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log(p_i) + (1 - y_i) \log(1 - p_i) \right]$$

Where,

- N: The total number of instances in the dataset.

- $y_i$: The true binary label (0 or 1) for instance i.

- $p_i$: The predicted probability that instance i belongs to class 1.
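The effect of confidence on binary log loss is easiest to see with numbers. The following sketch, with made-up labels and probabilities and an illustrative function name, compares confident correct predictions with hesitant ones.

```python
import math

def binary_log_loss(y_true, p_pred, eps=1e-15):
    """Average negative log-likelihood of the true labels under predicted P(class 1)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)

labels = [1, 0, 1]
confident = binary_log_loss(labels, [0.9, 0.1, 0.8])  # correct and confident
unsure = binary_log_loss(labels, [0.6, 0.4, 0.5])     # correct but hesitant
print(f"confident: {confident:.4f}, unsure: {unsure:.4f}")
```

Both sets of predictions would score the same accuracy at a 0.5 threshold, yet the hesitant ones incur a higher loss, which is why log loss is sensitive to probability quality and not just to the final class decision.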

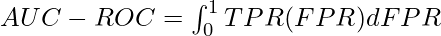

4. AUC-ROC and AUC-PRC: Two frequently used measures of binary classification performance are AUC-ROC (Area Under the Receiver Operating Characteristic curve) and AUC-PRC (Area Under the Precision-Recall Curve).

- AUC-ROC: The Receiver Operating Characteristic (ROC) curve shows a binary classifier’s performance across decision thresholds by plotting the True Positive Rate (TPR) against the False Positive Rate (FPR) at each threshold.

The AUC-ROC is the area under the ROC curve:

$$\text{AUC-ROC} = \int_0^1 \text{TPR} \, d(\text{FPR})$$

- AUC-PRC: The Precision-Recall Curve (PRC) plots the Precision (PPV) against the Recall (Sensitivity) at each threshold.

The AUC-PRC is the area under the precision-recall curve:

$$\text{AUC-PRC} = \int_0^1 \text{Precision} \, d(\text{Recall})$$

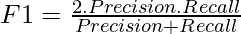
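AUC-ROC also has a ranking interpretation: it equals the probability that a randomly chosen positive instance receives a higher score than a randomly chosen negative one. The pure-Python sketch below uses that equivalence on a handful of made-up labels and scores. The function name `roc_auc` is illustrative, and this is not necessarily how CatBoost computes the metric internally.

```python
def roc_auc(y_true, scores):
    """Share of (positive, negative) pairs ranked correctly; ties count as half."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical labels and predicted scores
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(f"AUC-ROC: {auc:.2f}")  # AUC-ROC: 0.75
```

Three of the four positive-negative pairs are ordered correctly here, which gives 0.75. The pairwise loop is quadratic, so it is only suitable for small illustrations.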

5. F1-Score: A popular metric in binary classification tasks, the F1 Score strikes a balance between precision (positive predictive value) and recall (sensitivity) by combining both into a single score.

The F1 Score is the harmonic mean of precision and recall:

$$F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

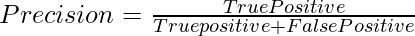

6. Precision: Precision is a metric used in classification tasks to quantify how many of a model’s positive predictions are correct. It is sometimes referred to as Positive Predictive Value.

The mathematical representation of precision is as follows:

$$\text{Precision} = \frac{TP}{TP + FP}$$

Where:

- True Positives (TP) is the number of cases correctly predicted as positive.

- False Positives (FP) is the number of cases predicted as positive that were actually negative.
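Precision, recall, and the F1 Score all follow from the same confusion-matrix counts. The sketch below works through one set of made-up counts; the helper name is illustrative.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) computed from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

# Hypothetical counts: 40 true positives, 5 false positives, 10 false negatives
precision, recall, f1 = precision_recall_f1(tp=40, fp=5, fn=10)
print(f"Precision: {precision:.3f}, Recall: {recall:.3f}, F1: {f1:.3f}")
# Precision: 0.889, Recall: 0.800, F1: 0.842
```

Because F1 is a harmonic mean, it sits closer to the lower of the two values than an arithmetic mean would.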

Metrics For Regression

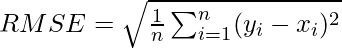

1. RMSE (Root Mean Squared Error): The Root Mean Squared Error (RMSE) measures the typical size of the residuals, or errors, in a regression or prediction task. Squaring the errors before averaging gives large errors more weight.

Its mathematical representation is as follows:

$$\text{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (y_i - x_i)^2}$$

Where:

- n is the number of data points or observations.

- $y_i$ represents the actual value for the i-th data point.

- $x_i$ represents the predicted value for the i-th data point.

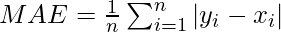

2. MAE (Mean Absolute Error): The Mean Absolute Error (MAE) measures the average absolute size of the errors, or residuals, in a regression or prediction task.

Its mathematical representation is as follows:

$$\text{MAE} = \frac{1}{n} \sum_{i=1}^{n} |y_i - x_i|$$

Where,

- n is the number of data points or observations.

- $y_i$ represents the actual value for the i-th data point.

- $x_i$ represents the predicted value for the i-th data point.
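RMSE and MAE can be compared side by side on the same predictions. The following sketch uses four made-up data points; the function names are illustrative.

```python
import math

def rmse(actual, predicted):
    """Square root of the mean squared difference."""
    return math.sqrt(sum((y - x) ** 2 for y, x in zip(actual, predicted)) / len(actual))

def mae(actual, predicted):
    """Mean of the absolute differences."""
    return sum(abs(y - x) for y, x in zip(actual, predicted)) / len(actual)

# Hypothetical actual and predicted values
actual = [3.0, 5.0, 2.5, 7.0]
predicted = [2.5, 5.0, 4.0, 8.0]
rmse_value = rmse(actual, predicted)
mae_value = mae(actual, predicted)
print(f"RMSE: {rmse_value:.4f}, MAE: {mae_value:.4f}")  # RMSE: 0.9354, MAE: 0.7500
```

RMSE is never smaller than MAE on the same data, and the gap widens when a few errors are much larger than the rest.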

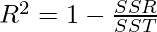

3. R-Squared: In a regression model, the coefficient of determination, often known as R-squared, is a statistical metric that shows how much of the variance in the dependent variable (target) can be predicted from the independent variables (features).

Its mathematical representation is as follows:

$$R^2 = 1 - \frac{SSR}{SST}$$

Where,

- SSR is the sum of squared residuals (the sum of the squared differences between the actual values and the predicted values).

- SST is the total sum of squares (the sum of the squared differences between the actual values and the mean of the actual values).
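The ratio of SSR to SST can be checked by hand on a small example. This sketch uses made-up values and an illustrative function name, and it also shows that R-squared turns negative when predictions are worse than simply predicting the mean.

```python
def r_squared(actual, predicted):
    """1 - SSR/SST, using the definitions of SSR and SST given above."""
    mean_actual = sum(actual) / len(actual)
    ssr = sum((y - x) ** 2 for y, x in zip(actual, predicted))
    sst = sum((y - mean_actual) ** 2 for y in actual)
    return 1 - ssr / sst

actual = [3.0, 5.0, 2.5, 7.0]
good_fit = r_squared(actual, [2.5, 5.0, 4.0, 8.0])  # predictions close to the truth
poor_fit = r_squared(actual, [7.0, 2.0, 8.0, 1.0])  # predictions far from the truth
print(f"good fit: {good_fit:.4f}, poor fit: {poor_fit:.4f}")
```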

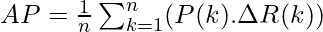

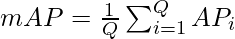

4. Mean Average Precision (mAP): The Mean Average Precision (mAP) metric is often used to measure the effectiveness of object detection and information retrieval systems. Although it is grouped with the regression metrics here, it is a ranking metric: it averages the precision over a range of queries or classes.

Its mathematical representation is as follows:

- Average Precision (AP): The area under the precision-recall curve gives the average precision for a single query or class. It is computed by summing the precision at each position of the ranked results, weighted by the change in recall at that position:

$$AP = \sum_{k=1}^{n} P(k) \, \Delta R(k)$$

Where n is the total number of retrieved instances, P(k) is the precision over the first k retrieved instances, and ΔR(k) is the change in recall from step k-1 to step k.

- mAP is the mean of the average precision values over all queries or classes:

$$mAP = \frac{1}{Q} \sum_{q=1}^{Q} AP(q)$$

Where Q is the total number of queries or classes.
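A small worked example may help with the AP sum. In the sketch below, each query is a ranked list of 0/1 relevance flags (made-up data), and the code assumes every relevant item appears somewhere in the list, so recall rises by one over the number of relevant items at each hit. The function names are illustrative.

```python
def average_precision(relevance):
    """relevance: 0/1 flags for a ranked result list, best-ranked first."""
    n_relevant = sum(relevance)
    if n_relevant == 0:
        return 0.0
    hits, ap = 0, 0.0
    for k, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            # Precision at k is hits/k; recall changes only at relevant positions
            ap += (hits / k) * (1 / n_relevant)
    return ap

def mean_average_precision(queries):
    """Mean of the per-query average precision values."""
    return sum(average_precision(q) for q in queries) / len(queries)

# Two hypothetical queries with ranked relevance flags
queries = [[1, 0, 1, 1, 0],
           [0, 1, 0, 0, 1]]
ap_values = [average_precision(q) for q in queries]
map_value = mean_average_precision(queries)
print(f"AP per query: {[round(a, 4) for a in ap_values]}, mAP: {map_value:.4f}")
```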

How to Use CatBoost Metrics

To use CatBoost metrics for model evaluation:

- Import necessary libraries and dataset and create a model (CatBoost model).

- Split data into train and test data.

- Train your CatBoost model on the imported training data.

- Evaluate the model using the appropriate metrics for your task.

- Interpret the metric values to assess the model’s performance.

The choice of metric depends on how relevant it is to the problem and its objectives, since different metrics give different views of a model’s performance.

The examples below demonstrate some of the metrics supported by CatBoost, starting with the Iris dataset, which contains measurements for three species of iris flowers (iris setosa, iris versicolor, and iris virginica). Each sample records sepal length, sepal width, petal length, and petal width.

Metrics for Classification

The goal of classification tasks is to categorize data points into distinct classes. CatBoost offers several metrics to assess model performance.

1. Accuracy

Accuracy is the number of correctly classified instances as a fraction of all instances. Despite being the most intuitive measure, it can be misleading on imbalanced datasets, where one class considerably outnumbers the others.

Python3

    from catboost import CatBoostClassifier, Pool
    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split

    # Load Iris and hold out 20% of the rows for testing
    iris = load_iris()
    X, y = iris.data, iris.target
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42)

    model = CatBoostClassifier(iterations=100,
                               learning_rate=0.1,
                               depth=6,
                               loss_function='MultiClass',
                               verbose=0)

    train_pool = Pool(X_train, label=y_train)
    test_pool = Pool(X_test, label=y_test)
    model.fit(train_pool)

    # eval_metrics returns one value per boosting iteration;
    # plot=True draws an interactive chart in Jupyter (use False elsewhere)
    metrics = model.eval_metrics(test_pool, metrics=['Accuracy'], plot=True)
    accuracy = metrics['Accuracy'][-1]
    print(f'Accuracy: {accuracy:.2f}')

Output:

Accuracy: 1.00


Since the Iris dataset is a classification problem, accuracy is a suitable metric for evaluation.

Here, the Iris dataset is loaded with Scikit-learn’s ‘load_iris()’ function and split into train and test sets with ‘train_test_split()’. A CatBoostClassifier model is created with the MultiClass loss function, since Iris is a multiclass problem. Pool objects are created for the train and test sets, the model is trained on train_pool with ‘fit()’, and it is then evaluated for accuracy on test_pool with CatBoost’s ‘eval_metrics()’ method.

The output shows that the model correctly predicted every instance in the test set. With only 30 test samples, however, a perfect score should be read as a sign of a good fit rather than proof of a perfect model.

2. Multiclass Log Loss

Multiclass Log Loss, also known as cross-entropy for multiclass classification, is a variation of Log Loss designed for multiclass classification problems. The model predicts a probability distribution over the classes, and this metric measures how well those predicted probabilities match the true class labels.

Python3

    from catboost import CatBoostClassifier, Pool
    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split

    iris = load_iris()
    X, y = iris.data, iris.target
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42)

    model = CatBoostClassifier(iterations=100,
                               learning_rate=0.1,
                               depth=6,
                               loss_function='MultiClass',
                               verbose=0)

    train_pool = Pool(X_train, label=y_train)
    test_pool = Pool(X_test, label=y_test)
    model.fit(train_pool)

    # 'MultiClass' is CatBoost's name for multiclass log loss
    metrics = model.eval_metrics(test_pool, metrics=['MultiClass'], plot=True)
    multi_class_loss = metrics['MultiClass'][-1]
    print(f'Multi-Class Loss: {multi_class_loss:.2f}')

Output:

Multi-Class Loss: 0.03

A multi-class loss value of 0.03 suggests that the model is performing well in terms of multi-class classification on the test dataset.

3. Binary Log Loss

Log Loss (cross-entropy loss) quantifies the dissimilarity between the predicted probabilities and the true labels. Lower log loss values indicate better performance. This metric is particularly useful when well-calibrated probability estimates are needed, as in applications such as fraud detection or medical diagnosis. In CatBoost, ‘Logloss’ refers to the binary case, where only two classes are present in the dataset.

The Iris dataset has three classes, so it is not appropriate for this metric. Instead, let’s use the Breast Cancer dataset, which has only two classes: malignant and benign tumours.

Python3

    from catboost import CatBoostClassifier, Pool
    from sklearn.datasets import load_breast_cancer
    from sklearn.model_selection import train_test_split

    data = load_breast_cancer()
    X, y = data.data, data.target
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42)

    # No loss_function is given: with two classes CatBoost uses Logloss
    model = CatBoostClassifier(iterations=100,
                               learning_rate=0.1,
                               depth=6,
                               verbose=0)

    train_pool = Pool(X_train, label=y_train)
    test_pool = Pool(X_test, label=y_test)
    model.fit(train_pool)

    metrics = model.eval_metrics(test_pool, metrics=['Logloss'], plot=False)
    logloss = metrics['Logloss'][-1]
    print(f'Log Loss (Cross-Entropy): {logloss:.2f}')

Output:

Log Loss (Cross-Entropy): 0.08

Log loss quantifies how well the model’s predicted probabilities match the true class labels on the test set. The low value of 0.08 indicates close alignment between predictions and actual labels.

4. AUC-ROC and AUC-PRC

Area Under the Receiver Operating Characteristic Curve (AUC-ROC) and Area Under the Precision-Recall Curve (AUC-PRC) are important metrics for binary classification models. AUC-ROC measures the model’s ability to distinguish between positive and negative classes, while AUC-PRC emphasizes the trade-off between precision and recall. The example below turns Iris into a binary problem by treating one species (class 2) as the positive class.

Python3

    from catboost import CatBoostClassifier, Pool
    from sklearn import datasets
    from sklearn.model_selection import train_test_split

    iris = datasets.load_iris()
    X = iris.data
    y = iris.target

    # Binary target: class 2 versus the other two classes
    y_binary = (y == 2).astype(int)

    X_train, X_test, y_train, y_test = train_test_split(
        X, y_binary, test_size=0.2, random_state=42)

    model = CatBoostClassifier(iterations=500,
                               random_seed=42,
                               eval_metric='AUC')

    train_pool = Pool(X_train, label=y_train)
    model.fit(train_pool, verbose=100)

    validation_pool = Pool(X_test, label=y_test)

    # 'AUC' is the area under the ROC curve, 'PRAUC' the area under the PR curve
    eval_result = model.eval_metrics(validation_pool, ['AUC'])['AUC']
    metrics = model.eval_metrics(validation_pool, metrics=['PRAUC'], plot=True)
    auc_pr = metrics['PRAUC'][-1]

    print(f'AUC-PR: {auc_pr:.2f}')
    print(f"AUC-ROC: {eval_result[-1]:.4f}")

Output:

Learning rate set to 0.007867

0: total: 789us remaining: 394ms

100: total: 154ms remaining: 608ms

200: total: 308ms remaining: 458ms

300: total: 505ms remaining: 334ms

400: total: 667ms remaining: 165ms

499: total: 785ms remaining: 0us

AUC-PR: 1.00

AUC-ROC: 1.0000

The model is trained for 500 boosting iterations (iterations=500), with ‘AUC’ set as the evaluation metric to monitor during training. After training, ‘eval_metrics()’ computes the requested metrics on the held-out test set at every iteration, which makes it possible to track how performance evolves; the value at the last iteration is reported here. ‘AUC-ROC’ focuses on true positive rate vs. false positive rate, while ‘AUC-PR’ focuses on precision vs. recall.

5. F1 Score

The F1 Score is the harmonic mean of the model’s precision (how many of its positive predictions are correct) and recall (how many of the actual positives it identifies). This metric is well suited to balancing the trade-off between false positives and false negatives. Higher F1 Scores indicate better models.

Python3

    from catboost import CatBoostClassifier, Pool
    from sklearn.datasets import load_breast_cancer
    from sklearn.model_selection import train_test_split

    data = load_breast_cancer()
    X, y = data.data, data.target
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42)

    model = CatBoostClassifier(iterations=100,
                               learning_rate=0.1,
                               depth=6,
                               verbose=0)

    train_pool = Pool(X_train, label=y_train)
    test_pool = Pool(X_test, label=y_test)
    model.fit(train_pool)

    # F1 on the test set after the final boosting iteration
    metrics = model.eval_metrics(test_pool, metrics=['F1'], plot=True)
    f1 = metrics['F1'][-1]
    print(f'F1 Score: {f1:.2f}')

Output:

F1 Score: 0.98

The F1 Score combines recall and precision into one numerical score. The model achieves an F1 score of 0.98 on the test set, which indicates that both precision and recall are high.

6. Precision

Precision measures the ability of the model to make positive predictions correctly. It is the ratio of true positive predictions to all positive predictions made by the model.

Python3

    from catboost import CatBoostClassifier, Pool
    from sklearn.datasets import load_breast_cancer
    from sklearn.model_selection import train_test_split

    data = load_breast_cancer()
    X, y = data.data, data.target
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42)

    model = CatBoostClassifier(iterations=100,
                               learning_rate=0.1,
                               depth=6,
                               verbose=0)

    train_pool = Pool(X_train, label=y_train)
    test_pool = Pool(X_test, label=y_test)
    model.fit(train_pool)

    # Precision on the test set after the final boosting iteration
    metrics = model.eval_metrics(test_pool, metrics=['Precision'], plot=True)
    precision = metrics['Precision'][-1]
    print(f'Precision: {precision:.2f}')

Output:

Precision: 0.97

This means that 97% of the positive predictions made by the model are actually positive.

CatBoost supports many other classification metrics for both binary and multiclass problems, including:

- Recall (‘Recall’): The ratio of true positive predictions to all actual positive instances, also known as the true positive rate. It measures the model’s ability to find the positive instances.

- Weighted metrics: Variants of F1, precision, recall, and specificity that weight each class by its importance, making them suitable for class-imbalanced problems. The exact metric names and weighting options are listed in the CatBoost metrics reference.

- Kappa Score (‘Kappa’): Measures the agreement between predicted and actual classes while adjusting for the agreement expected by chance. It is useful for assessing classification models when the class distribution is imbalanced.

Metrics for Regression

Regression tasks aim to predict continuous numerical values, and CatBoost offers metrics to rate the accuracy of those predictions.

1. RMSE(Root Mean Squared Error)

RMSE measures the typical magnitude of the errors between predicted and actual values. It penalizes larger errors more heavily and is therefore sensitive to outliers. A smaller RMSE indicates better model performance. It is the natural choice for tasks where large errors are especially costly.

Python3

    from catboost import CatBoostRegressor, Pool
    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split

    # The integer class labels are used as a numeric target for illustration
    iris = load_iris()
    X, y = iris.data, iris.target
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42)

    model = CatBoostRegressor(iterations=100, learning_rate=0.1, depth=6, verbose=0)

    train_pool = Pool(X_train, label=y_train)
    test_pool = Pool(X_test, label=y_test)
    model.fit(train_pool)

    metrics = model.eval_metrics(test_pool, metrics=['RMSE'], plot=True)
    rmse = metrics['RMSE'][-1]
    print(f'Root Mean Squared Error (RMSE): {rmse:.4f}')

Output:

Root Mean Squared Error (RMSE): 0.0817

A lower RMSE indicates that the model’s predictions are closer to the actual values, which is desirable. Here, an RMSE of 0.0817 suggests that the CatBoostRegressor model is making relatively accurate predictions on the Iris dataset. Note that this example treats the integer class labels (0, 1, 2) as a numeric target purely for illustration; a genuine regression dataset would have a continuous target.

2. MAE(Mean Absolute Error)

MAE quantifies the average absolute difference between predicted and actual values. It is less affected by outliers than RMSE and is suitable when there is a need to understand the model’s typical prediction error. Lower MAE values are preferred.

Python3

    from catboost import CatBoostRegressor, Pool
    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split

    iris = load_iris()
    X, y = iris.data, iris.target
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42)

    model = CatBoostRegressor(iterations=100, learning_rate=0.1, depth=6, verbose=0)

    train_pool = Pool(X_train, label=y_train)
    test_pool = Pool(X_test, label=y_test)
    model.fit(train_pool)

    metrics = model.eval_metrics(test_pool, metrics=['MAE'], plot=True)
    mae = metrics['MAE'][-1]
    print(f'Mean Absolute Error (MAE): {mae:.4f}')

Output:

Mean Absolute Error (MAE): 0.0637

It provides a measure of the average magnitude of errors in the model’s predictions. An MAE of 0.0637 suggests that the CatBoostRegressor model is making relatively accurate predictions on the Iris dataset, with the average absolute prediction error being quite small.

3. R-squared(R2)

R-squared (R2), the coefficient of determination, represents the proportion of the variance in the dependent variable (target) that is explained by the independent variables (features) used in the model. Higher values indicate a better fit: values typically lie between 0 and 1, a score of 1 means the model fits the data perfectly, and a negative score means the model does worse than always predicting the mean.

Python3

    from catboost import CatBoostRegressor, Pool
    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split

    iris = load_iris()
    X, y = iris.data, iris.target
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42)

    model = CatBoostRegressor(iterations=100, learning_rate=0.1, depth=6, verbose=0)

    train_pool = Pool(X_train, label=y_train)
    test_pool = Pool(X_test, label=y_test)
    model.fit(train_pool)

    metrics = model.eval_metrics(test_pool, metrics=['R2'], plot=True)
    r2 = metrics['R2'][-1]
    print(f'R-squared (R2): {r2:.4f}')

Output:

R-squared (R2): 0.9904

An R-squared value of 0.9904 indicates that your CatBoostRegressor model is highly effective in explaining and predicting the target variable in the Iris dataset, and it fits the data exceptionally well.

CatBoost also provides various other regression metrics, including:

- Mean Squared Error (MSE): MSE measures the mean squared difference between predicted values and actual values for regression tasks. It is the same as RMSE but without the square root.

- Poisson Loss (‘Poisson’): Poisson Loss measures the accuracy of predictions for Poisson regression tasks, which are commonly used for count data.

- Quantile Loss (‘Quantile’): Quantile Loss assesses the accuracy of quantile predictions for quantile regression tasks. It is useful when you need to predict specific quantiles of the target variable rather than its mean.

Metrics for Over-fitting Detection

Over-fitting is a common problem in which a model performs well on the training data but poorly on unseen data. CatBoost provides tools for detecting it.

1. Cross-Validation

Cross-validation is a crucial technique in machine learning for detecting and mitigating overfitting: it compares training and validation performance across several splits of the data.

Python3

    from catboost import Pool, cv
    from sklearn.datasets import load_iris

    iris = load_iris()
    X, y = iris.data, iris.target
    data_pool = Pool(X, label=y)

    params = {
        'iterations': 100,
        'learning_rate': 0.1,
        'depth': 6,
        'loss_function': 'MultiClass',
        'verbose': 0
    }

    # 5-fold cross-validation; returns a DataFrame with one row per iteration
    cv_results = cv(pool=data_pool,
                    params=params,
                    fold_count=5,
                    shuffle=True,
                    partition_random_seed=42,
                    verbose_eval=False)

    # Report the final-iteration mean and std of each test metric
    for metric_name in cv_results.columns:
        if 'test-' in metric_name:
            final_value = cv_results[metric_name].iloc[-1]
            print(f'{metric_name}: {final_value:.4f}')

Output:

Training on fold [0/5]

bestTest = 0.1226007055

bestIteration = 72

Training on fold [1/5]

bestTest = 0.09388296402

bestIteration = 99

Training on fold [2/5]

bestTest = 0.05707644554

bestIteration = 99

Training on fold [3/5]

bestTest = 0.1341533772

bestIteration = 93

Training on fold [4/5]

bestTest = 0.19934632

bestIteration = 94

test-MultiClass-mean: 0.1221

test-MultiClass-std: 0.0531

This code performs 5-fold cross-validation of a CatBoost MultiClass model on the Iris dataset and reports the mean and standard deviation of the test loss across folds. Cross-validation gives a more robust estimate of a model’s performance than a single train/test split, and comparing the train and test columns of the result helps reveal overfitting.
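To show what a k-fold split does under the hood, here is a minimal pure-Python sketch that partitions row indices into folds. It is an illustration only, not CatBoost’s implementation (which may, for example, stratify the folds), and the function name `k_fold_indices` is hypothetical.

```python
import random

def k_fold_indices(n_samples, k, seed=42):
    """Yield (train_indices, test_indices) for each of k shuffled folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k roughly equal parts
    for i in range(k):
        test = folds[i]
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test

# 150 rows (the size of Iris) split into 5 folds
splits = list(k_fold_indices(150, 5))
for fold, (train, test) in enumerate(splits):
    print(f"fold {fold}: {len(train)} train rows, {len(test)} test rows")
```

Every row is used for testing exactly once, so the averaged score depends far less on one lucky or unlucky split.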

2. Feature Importance

CatBoost also offers feature importance scores, which rank features by their impact on the model’s predictions. Feature importance does not detect overfitting directly, but it helps diagnose it: features with near-zero importance contribute little to the predictions and can often be removed to simplify the model, while an unexpectedly dominant feature may point to data leakage or to the model memorizing noise.

Python3

    import matplotlib.pyplot as plt
    from catboost import CatBoostClassifier, Pool
    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split

    iris = load_iris()
    X, y = iris.data, iris.target
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42)

    model = CatBoostClassifier(iterations=100,
                               learning_rate=0.1,
                               depth=6,
                               loss_function='MultiClass',
                               verbose=0)
    model.fit(X_train, y_train)

    # One importance score per feature, in the order of iris.feature_names
    test_pool = Pool(X_test)
    feature_importance = model.get_feature_importance(test_pool)
    feature_names = iris.feature_names

    plt.figure(figsize=(10, 6))
    plt.barh(range(len(feature_importance)),
             feature_importance,
             tick_label=feature_names)
    plt.xlabel('Feature Importance')
    plt.ylabel('Features')
    plt.title('Feature Importance for CatBoost Classifier')
    plt.show()

Output:

Feature Importance

To calculate feature importance, we create a Pool object for the testing data with Pool(X_test) and call model.get_feature_importance() to retrieve the importance scores. Finally, a horizontal bar plot visualizes the scores.

The resulting bar plot will show the importance of each feature in the model’s predictions. This information can help you identify which features are most relevant to the classification task and guide feature selection or engineering efforts.

Learning curves are also important in detecting overfitting. In a Jupyter notebook, passing plot=True to ‘fit()’ draws them interactively; elsewhere, the per-iteration values returned by ‘eval_metrics()’ can be plotted with a library such as matplotlib.
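On a learning curve, overfitting typically shows up as validation loss that stops improving and starts rising. The sketch below finds that turning point in a list of made-up per-iteration validation losses by stopping once a fixed number of iterations pass without a new minimum. The function name and the `wait` parameter are hypothetical; CatBoost has its own overfitting-detector options (such as `early_stopping_rounds`), and this is not their implementation.

```python
def best_iteration(val_losses, wait=3):
    """Return (best_iter, stop_iter): stop after `wait` iterations without a new minimum."""
    best_iter, best_loss = 0, float("inf")
    for i, loss in enumerate(val_losses):
        if loss < best_loss:
            best_iter, best_loss = i, loss
        elif i - best_iter >= wait:
            return best_iter, i
    return best_iter, len(val_losses) - 1

# Hypothetical validation losses: improving at first, then degrading
val_losses = [0.90, 0.60, 0.45, 0.40, 0.41, 0.43, 0.47, 0.52]
best_it, stop_it = best_iteration(val_losses, wait=3)
print(f"best iteration: {best_it}, stopped at: {stop_it}")  # best iteration: 3, stopped at: 6
```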

Metric for Hyperparameter Tuning

Hyperparameter tuning techniques, such as grid search or Bayesian optimization, can be used to optimize the CatBoost model’s performance. The choice of metric to optimize during hyperparameter tuning is crucial and depends on the nature of the problem and the specific goals.

Python3

    from catboost import CatBoostClassifier, Pool
    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split

    iris = load_iris()
    X, y = iris.data, iris.target
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42)

    model = CatBoostClassifier(iterations=100, learning_rate=0.1, depth=6, verbose=0)
    train_pool = Pool(X_train, label=y_train)

    # Every combination of these values is evaluated (2 x 3 x 3 = 18 candidates)
    param_grid = {
        'iterations': [100, 200],
        'learning_rate': [0.01, 0.1, 0.2],
        'depth': [4, 6, 8],
    }

    grid_search_results = model.grid_search(param_grid, train_pool, cv=3,
                                            partition_random_seed=42, verbose=10)

    best_params = grid_search_results['params']
    print("Best Hyperparameters:")
    print(best_params)

Output:

Best Hyperparameters:

{'depth': 4, 'iterations': 200, 'learning_rate': 0.1}

Grid search with cross-validation is a good way to find the best hyperparameters for your machine learning model. It works by trying out different combinations of hyperparameter values and evaluating the model on each combination. The best hyperparameters are the ones that produce the best model performance on the cross-validation folds.
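The "try every combination" step can be sketched in a few lines of plain Python. In the example below, `mock_cv_loss` is an arbitrary, hypothetical stand-in for the cross-validated loss that a real search would measure by training a model, so the winning combination it selects carries no meaning for any actual dataset.

```python
from itertools import product

param_grid = {
    'iterations': [100, 200],
    'learning_rate': [0.01, 0.1, 0.2],
    'depth': [4, 6, 8],
}

def expand_grid(grid):
    """List every combination of the values in the grid as a dict."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*(grid[k] for k in keys))]

def mock_cv_loss(params):
    # Hypothetical stand-in for a cross-validated loss (lower is better)
    return (abs(params['learning_rate'] - 0.1)
            + 0.01 * abs(params['depth'] - 6)
            + (0.0 if params['iterations'] == 100 else 0.005))

candidates = expand_grid(param_grid)
best = min(candidates, key=mock_cv_loss)
print(f"{len(candidates)} candidates, best: {best}")
```

The number of candidates is the product of the list lengths, so the cost of a grid search grows quickly as more hyperparameters or values are added.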

CatBoost also supports other kinds of metrics:

- Per-class metrics: Accuracy, precision, recall, and F1 can be calculated for each individual class in multiclass classification.

- Grouped metrics: Metrics can be calculated for different groups of data.

- Custom metrics: User-defined metrics can be written in Python.
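As a rough sketch of what a custom metric can look like, the class below follows the three-method shape (`is_max_optimal`, `evaluate`, `get_final_error`) that CatBoost’s documentation describes for Python metric objects. The class name and the accuracy logic are illustrative assumptions, and the exact interface should be checked against the CatBoost version in use. The demonstration calls the methods directly on made-up raw scores, so it runs without CatBoost installed.

```python
class BinaryAccuracyMetric:
    """Illustrative custom metric: weighted accuracy for binary classification."""

    def is_max_optimal(self):
        # Higher accuracy is better
        return True

    def evaluate(self, approxes, target, weight):
        # approxes holds one sequence per prediction dimension; for binary
        # classification there is a single sequence of raw scores (log-odds)
        approx = approxes[0]
        error_sum, weight_sum = 0.0, 0.0
        for i in range(len(approx)):
            w = 1.0 if weight is None else weight[i]
            predicted = 1 if approx[i] > 0 else 0  # raw score > 0 means P(class 1) > 0.5
            error_sum += w * (predicted == target[i])
            weight_sum += w
        return error_sum, weight_sum

    def get_final_error(self, error, weight):
        return error / (weight + 1e-38)

# Direct call on hypothetical raw scores and labels
metric = BinaryAccuracyMetric()
err, w = metric.evaluate([[2.0, -1.0, 0.5, -0.3]], [1, 0, 0, 0], None)
custom_acc = metric.get_final_error(err, w)
print(f"Custom accuracy: {custom_acc:.2f}")  # Custom accuracy: 0.75
```

An instance of such a class would typically be passed as the ‘eval_metric’ argument when constructing the model.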

CatBoost metrics for model evaluation are invaluable tools that guide you in building high-performing and robust machine learning models. Whether you’re working on classification, regression, over-fitting detection, or hyperparameter tuning, the right choice of metrics allows you to assess and optimize your models effectively.

Conclusion

In conclusion, CatBoost provides a comprehensive set of metrics and evaluation tools for choosing and assessing models. For classification and regression tasks, it starts with default metrics such as Logloss and RMSE, and it also supports customization with user-defined metrics. Early stopping, cross-validation, and the ability to monitor several metrics throughout training support a thorough evaluation. It offers a wide range of metrics for classification tasks, while RMSE, MAE, and MSE are widely used for regression tasks. Learning curves and feature importances are visualization tools that support the evaluation process. Thanks to CatBoost’s emphasis on flexible and informative metrics, data scientists have the tools to make informed decisions and build machine learning models that perform well.
