LightGBM (Light Gradient Boosting Machine) is a popular gradient boosting framework developed by Microsoft, known for its speed and efficiency when training on large datasets. It’s widely used for various machine-learning tasks, including classification, regression, and ranking. While training a LightGBM model is relatively straightforward, evaluating its performance is just as crucial to ensuring its effectiveness in real-world applications.

In this article, we will explore the key evaluation metrics used to assess the performance of LightGBM models.

Why Is Model Evaluation Important?

Before diving into specific evaluation metrics, it’s essential to understand why model evaluation is vital in the machine learning pipeline. Evaluation metrics provide a quantitative measure of how well a model has learned from the training data and how effectively it can make predictions on unseen data. These metrics help us:

- Select the Best Model: By comparing the performance of different models, we can choose the one that performs best on the task at hand.

- Tune Model Hyperparameters: Evaluation metrics help in hyperparameter tuning, enabling us to optimize a model’s performance.

- Assess Generalization: They allow us to assess how well a model generalizes to new, unseen data, preventing overfitting (when a model performs well on the training data but poorly on new data) or underfitting (when a model fails to capture the underlying patterns).

- Provide Insights: Evaluation metrics can provide valuable insights into the strengths and weaknesses of a model, helping data scientists and machine learning engineers make informed decisions.

Now, let’s explore some of the most common evaluation metrics for LightGBM models.

Classification Metrics

- Accuracy

Accuracy is perhaps the most intuitive classification metric. It measures the ratio of correctly predicted instances to the total number of instances in the dataset. However, accuracy can be misleading, especially when dealing with imbalanced datasets. In such cases, a model that predicts the majority class most of the time can still achieve high accuracy, even if it fails to correctly predict minority class instances.
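To make the imbalance problem concrete, here is a minimal sketch with made-up labels (95 negatives and 5 positives, chosen purely for illustration). A "model" that always predicts the majority class scores high on accuracy while finding none of the positives.

```python
import numpy as np

# Hypothetical imbalanced labels: 95 negatives and 5 positives.
y_true = np.array([0] * 95 + [1] * 5)

# A trivial "model" that always predicts the majority class (0).
y_pred = np.zeros_like(y_true)

# Fraction of all instances predicted correctly.
accuracy = (y_true == y_pred).mean()

# Fraction of the actual positives that were predicted as positive.
minority_recall = (y_pred[y_true == 1] == 1).mean()

print(f"Accuracy: {accuracy:.2f}")                  # 0.95
print(f"Recall on the minority class: {minority_recall:.2f}")  # 0.00
```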

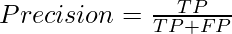

Precision and recall are important metrics for imbalanced datasets and are often used together.

- Precision: Precision measures the accuracy of positive predictions. It’s the ratio of true positives (correctly predicted positive instances) to the total number of positive predictions. High precision indicates that when the model predicts a positive class, it’s likely to be correct.

- Recall: Recall, also known as sensitivity or true positive rate, measures the ability of the model to identify all relevant instances of the positive class. It’s the ratio of true positives to the total number of actual positive instances. High recall indicates that the model can successfully identify most of the positive instances.

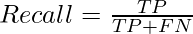
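The two ratios can be worked through by hand from confusion-matrix counts. The counts below are hypothetical and only serve to show the arithmetic.

```python
# Hypothetical confusion-matrix counts for a binary classifier.
tp = 40   # true positives: predicted positive, actually positive
fp = 10   # false positives: predicted positive, actually negative
fn = 20   # false negatives: predicted negative, actually positive

# Precision: share of positive predictions that are correct.
precision = tp / (tp + fp)   # 40 / 50 = 0.80

# Recall: share of actual positives that were found.
recall = tp / (tp + fn)      # 40 / 60, about 0.67

print(f"Precision: {precision:.2f}")
print(f"Recall:    {recall:.2f}")
```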

- F1-Score

The F1-score is the harmonic mean of precision and recall, providing a single score that balances the two. It is particularly useful when you want to find a balance between false positives and false negatives. It’s calculated as:

F1 = 2 × (Precision × Recall) / (Precision + Recall)
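Because it is a harmonic mean, the F1-score is pulled toward the smaller of the two values. The sketch below uses a small helper, `f1_from` (a name introduced here for illustration), to compare a balanced case with a lopsided one.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall; returns 0.0 if both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Balanced precision and recall: F1 equals the shared value.
balanced = f1_from(0.8, 0.8)

# Perfect precision but poor recall: F1 stays close to the weaker value,
# whereas the arithmetic mean would be 0.55.
lopsided = f1_from(1.0, 0.1)

print(f"Balanced F1: {balanced:.2f}")   # 0.80
print(f"Lopsided F1: {lopsided:.2f}")   # 0.18
```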

- Area Under the Receiver Operating Characteristic Curve (AUC-ROC)

ROC (Receiver Operating Characteristic) curves are useful for binary classification problems. They plot the true positive rate (recall) against the false positive rate at various thresholds. The AUC-ROC measures the area under the ROC curve and provides a single value that summarizes the model’s ability to distinguish between classes. A higher AUC-ROC indicates better discrimination.
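One way to build intuition for AUC-ROC is its pairwise reading: it equals the fraction of (positive, negative) pairs in which the positive instance receives the higher score. The sketch below checks this on a handful of made-up scores against scikit-learn's `roc_auc_score`.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

# Hypothetical labels and predicted scores.
y_true = np.array([0, 0, 1, 0, 1, 1])
y_score = np.array([0.1, 0.4, 0.35, 0.8, 0.7, 0.9])

pos = y_score[y_true == 1]
neg = y_score[y_true == 0]

# Compare every positive score with every negative score.
# A correctly ordered pair counts as 1, a tie as 0.5.
diff = pos[:, None] - neg[None, :]
pairwise_auc = ((diff > 0) + 0.5 * (diff == 0)).mean()

print(f"Pairwise AUC:  {pairwise_auc:.4f}")                      # 0.6667
print(f"roc_auc_score: {roc_auc_score(y_true, y_score):.4f}")    # 0.6667
```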

- Area Under the Precision-Recall Curve (AUC-PR)

Similar to AUC-ROC, the AUC-PR measures the area under the precision-recall curve. It is particularly useful when dealing with imbalanced datasets where the positive class is rare. A higher AUC-PR indicates better precision-recall trade-off.
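The sketch below illustrates why AUC-PR is read differently from AUC-ROC on imbalanced data. It uses scikit-learn's `average_precision_score`, which is one common summary of the precision-recall curve (not identical to a trapezoidal area), on synthetic labels with roughly 5% positives and completely uninformative random scores. Under these assumptions the baseline for AUC-PR sits near the positive rate, while the baseline for AUC-ROC sits near 0.5.

```python
import numpy as np
from sklearn.metrics import average_precision_score, roc_auc_score

rng = np.random.default_rng(0)
n = 20000

# Synthetic labels with roughly 5% positives (an illustrative assumption).
y_true = (rng.random(n) < 0.05).astype(int)

# Scores that carry no information about the labels.
random_scores = rng.random(n)

prevalence = y_true.mean()
ap = average_precision_score(y_true, random_scores)
auc = roc_auc_score(y_true, random_scores)

print(f"Positive rate:     {prevalence:.3f}")
print(f"Average precision: {ap:.3f}")   # close to the positive rate
print(f"AUC-ROC:           {auc:.3f}")  # close to 0.5
```

An AUC-PR value should therefore be compared against the positive rate of the dataset rather than against 0.5.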

Implementation For Classification

Let’s implement the model for classification:

Libraries Imported:

We import the necessary libraries:

- lightgbm as lgb: This is the LightGBM library for gradient boosting.

- numpy as np: Used for numerical operations.

- pandas as pd: Used for data manipulation.

- train_test_split from sklearn.model_selection: Used to split the dataset into training and testing sets.

- accuracy_score and roc_auc_score from sklearn.metrics: These are used to evaluate the classification model.

Dataset Loading and Splitting:

We load the classification dataset, Default of Credit Card Clients, from an Excel file; the task is to predict whether a client will default on their credit card payment. The dataset is split into 24 feature columns (Age, Sex, Marriage, Pay, etc.) and the target variable (Default of Credit Card Payment Next Month). We further split the data into training and testing sets using train_test_split, with 80% of the data used for training and 20% for testing. Setting random_state=42 fixes the shuffle so that the split is reproducible.

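```python
import lightgbm as lgb
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score, roc_auc_score

# The column names are on the second row of the sheet, hence header=1.
# Reading a legacy .xls file with pandas requires the xlrd package.
data = pd.read_excel('default of credit card clients.xls', header=1)

# Separate the features from the target column.
X = data.drop('default payment next month', axis=1)
y = data['default payment next month']

# Hold out 20% of the rows for testing; random_state fixes the shuffle.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
```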

LightGBM Parameters for Classification:

We define a dictionary param containing parameters for the LightGBM classifier.

- ‘objective’: ‘binary’ specifies that it’s a binary classification task.

- ‘metric’: ‘binary_logloss’ sets the evaluation metric to binary log loss.

- ‘boosting_type’: ‘gbdt’ specifies the gradient boosting algorithm.

- ‘num_leaves’: 31 sets the maximum number of leaves in each tree.

- ‘learning_rate’: 0.05 sets the shrinkage rate, which scales the contribution of each new tree to the ensemble.

- ‘feature_fraction’: 0.9 controls the fraction of features used for each tree.

Let’s define the parameters for classification:

```python
param = {
    'objective': 'binary',
    'metric': 'binary_logloss',
    'boosting_type': 'gbdt',
    'num_leaves': 31,
    'learning_rate': 0.05,
    'feature_fraction': 0.9
}
```

LightGBM Dataset and Training:

Next, we train the model. We create a LightGBM dataset train_data from the training features and labels and train the classifier using lgb.train with the defined parameters for 100 boosting rounds.

```python
train_data = lgb.Dataset(X_train, label=y_train)
classifier = lgb.train(param, train_data, num_boost_round=100)
```

Predictions and Evaluation:

We make predictions on the test data using the trained classifier and calculate the accuracy and ROC AUC score to evaluate the model’s performance.

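```python
# With the binary objective, predict() returns the probability of class 1.
y_pred = classifier.predict(X_test)

# Accuracy needs class labels, so threshold the probabilities at 0.5.
accuracy = accuracy_score(y_test, (y_pred > 0.5).astype(int))

# AUC-ROC is computed from the probabilities, not the thresholded labels.
roc_auc = roc_auc_score(y_test, y_pred)

print(f'Accuracy: {accuracy}')
print(f'ROC AUC: {roc_auc}')
```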

Output:

Accuracy: 0.8193333333333334

ROC AUC: 0.7851287229459846

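Accuracy is the proportion of correctly predicted binary class labels at the 0.5 threshold. In this case, it’s about 82%, indicating that 82% of the test samples were classified correctly. As noted earlier, accuracy can look favorable on imbalanced data, so it should be read alongside a threshold-independent metric.

AUC-ROC (Area Under the Receiver Operating Characteristic Curve) measures the model’s ability to distinguish between the positive and negative classes. An AUC-ROC of about 0.79 means the model ranks a randomly chosen positive instance above a randomly chosen negative one roughly 79% of the time, which is a reasonable but not outstanding level of discrimination.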

Regression Metrics

For regression tasks, where the goal is to predict a continuous target variable, different evaluation metrics are used.

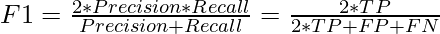

- Mean Absolute Error (MAE)

MAE is the average of the absolute differences between the predicted values and the actual values. It measures the average magnitude of errors and is less sensitive to outliers compared to the mean squared error (MSE).

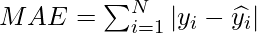

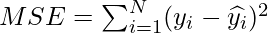

- Mean Squared Error (MSE)

MSE is the average of the squared differences between the predicted values and the actual values. It penalizes larger errors more heavily than MAE.

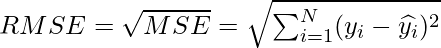

- Root Mean Squared Error (RMSE)

RMSE is the square root of the MSE. It provides a measure of the average magnitude of errors in the same units as the target variable.

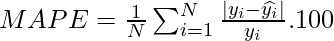
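The three error measures described so far can be computed by hand on a few made-up values. In this sketch one prediction is off by 2.0, which shows how squaring makes MSE and RMSE react more strongly to a large error than MAE does.

```python
import numpy as np

# Hypothetical actual and predicted values.
y_true = np.array([3.0, 5.0, 2.0, 7.0])
y_pred = np.array([2.5, 5.0, 4.0, 8.0])

errors = y_pred - y_true            # [-0.5, 0.0, 2.0, 1.0]

mae = np.abs(errors).mean()         # (0.5 + 0 + 2 + 1) / 4 = 0.875
mse = (errors ** 2).mean()          # (0.25 + 0 + 4 + 1) / 4 = 1.3125
rmse = np.sqrt(mse)                 # about 1.146, same units as the target

print(f"MAE:  {mae:.4f}")
print(f"MSE:  {mse:.4f}")
print(f"RMSE: {rmse:.4f}")
```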

- Mean Absolute Percentage Error (MAPE)

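MAPE measures the average absolute difference between the predicted values and the actual values, expressed as a percentage of the actual values. It’s particularly useful when you want to understand the relative size of errors compared to the actual values. It is undefined when an actual value is zero and becomes unstable when actual values are close to zero.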
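A minimal sketch of MAPE follows. The helper name `mape` and the choice to raise an error on zero actual values are assumptions made for this example; other implementations handle zeros differently.

```python
import numpy as np

def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    # Division by an actual value of zero is undefined, so refuse it here.
    if np.any(y_true == 0):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return np.mean(np.abs((y_true - y_pred) / y_true)) * 100

# Relative errors are 10%, 10% and 0%, so the mean is about 6.67%.
result = mape([100, 200, 400], [110, 180, 400])
print(f"MAPE: {result:.2f}%")
```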

Implementing for Regression

Let’s implement the model for regression:

Libraries Imported:

We import the necessary libraries:

- lightgbm as lgb: This is the LightGBM library for gradient boosting.

- numpy as np: Used for numerical operations.

- pandas as pd: Used for data manipulation.

- train_test_split from sklearn.model_selection: Used to split the dataset into training and testing sets.

- mean_squared_error from sklearn.metrics: This is used to evaluate the regression model.

Dataset Loading and Splitting:

We load the regression dataset, Red Wine Quality, from a CSV file. The dataset is split into 11 features (pH, density, alcohol, etc.) and the target variable (quality of the red wine on a scale of 0 to 10). We further split the data into training and testing sets using train_test_split, with 80% of the data used for training and 20% for testing. Setting random_state=42 fixes the shuffle so that the split is reproducible.

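```python
import lightgbm as lgb
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_squared_error

# Assumes a comma-separated copy of the file; the original UCI file is
# semicolon-separated and would need sep=';'.
data = pd.read_csv('winequality-red.csv')

# Separate the features from the target column.
X = data.drop('quality', axis=1)
y = data['quality']

# Hold out 20% of the rows for testing; random_state fixes the shuffle.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
```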

LightGBM Parameters for Regression:

Here, we define parameters for the LightGBM regressor.

- ‘objective’: ‘regression’ specifies that it’s a regression task.

- ‘metric’: ‘l2’ sets the evaluation metric to Mean Squared Error (MSE).

- ‘boosting_type’: ‘gbdt’ specifies the gradient boosting algorithm.

- ‘num_leaves’: 31 sets the maximum number of leaves in each tree.

- ‘learning_rate’: 0.05 sets the shrinkage rate, which scales the contribution of each new tree to the ensemble.

- ‘feature_fraction’: 0.9 controls the fraction of features used for each tree.

Let’s define the parameters for regression:

```python
param = {
    'objective': 'regression',
    'metric': 'l2',
    'boosting_type': 'gbdt',
    'num_leaves': 31,
    'learning_rate': 0.05,
    'feature_fraction': 0.9
}
```

LightGBM Dataset and Training:

We create a LightGBM dataset train_data from the training features and labels and train the regressor using lgb.train with the defined parameters for 100 boosting rounds.

Predictions and Evaluation:

We make predictions on the test data using the trained regressor and calculate the Mean Squared Error (MSE) to evaluate the model’s performance.

The code below performs both steps:

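```python
# Train the regressor for 100 boosting rounds.
train_data = lgb.Dataset(X_train, label=y_train)
regressor = lgb.train(param, train_data, num_boost_round=100)

# Predict on the held-out rows and compute the mean squared error.
y_pred = regressor.predict(X_test)
mse = mean_squared_error(y_test, y_pred)

print(f'Mean Squared Error: {mse}')
```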

Output:

Mean Squared Error: 0.3208175122831507

Mean Squared Error (MSE) measures the average squared difference between the actual and predicted values. Here the MSE is about 0.32, expressed in squared quality units. Taking the square root gives an RMSE of about 0.57, so a typical prediction is off by roughly half a quality point. Lower MSE values indicate better regression performance.

Ranking Metrics

In ranking tasks, where the goal is to predict the order or ranking of items, evaluation metrics are tailored to assess the quality of the ranked lists.

- Normalized Discounted Cumulative Gain (NDCG)

NDCG is a widely used metric for ranking tasks. It measures the quality of a ranked list by considering the relevance of items and their positions in the list. It’s especially common in recommendation systems.
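A minimal sketch of NDCG for a single ranked list follows. It assumes the exponential gain 2^relevance - 1 and a log2(position + 1) discount, which is one common convention; some libraries use linear gain instead, so values may differ between implementations. The function names and relevance labels are illustrative.

```python
import numpy as np

def dcg_at_k(relevance, k):
    """Discounted cumulative gain of the first k items."""
    rel = np.asarray(relevance, dtype=float)[:k]
    # Position 1 is divided by log2(2), position 2 by log2(3), and so on.
    discounts = np.log2(np.arange(2, rel.size + 2))
    return np.sum((2 ** rel - 1) / discounts)

def ndcg_at_k(relevance, k):
    """DCG divided by the DCG of the ideal (descending) ordering."""
    ideal = dcg_at_k(sorted(relevance, reverse=True), k)
    return dcg_at_k(relevance, k) / ideal if ideal > 0 else 0.0

# Hypothetical graded relevance labels, listed in the model's ranked order.
ranked_relevance = [3, 2, 3, 0, 1]

score = ndcg_at_k(ranked_relevance, k=5)
print(f"NDCG@5: {score:.3f}")   # about 0.957
```

A list that is already in ideal order scores 1.0, and any misplaced relevant item lowers the score, with mistakes near the top costing the most.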

- Precision at K (P@K)

Precision at K measures the proportion of relevant items in the top K positions of the ranked list. It’s used to evaluate how well a model ranks relevant items at the top.
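Precision at K reduces to a simple count. The sketch below uses a hypothetical list of relevance flags (1 for relevant, 0 for not relevant) given in ranked order; the function name is an illustrative choice.

```python
def precision_at_k(relevant_flags, k):
    """Share of the top k ranked items that are relevant."""
    top_k = relevant_flags[:k]
    return sum(top_k) / k

# Hypothetical relevance flags, best-ranked item first.
ranked = [1, 0, 1, 1, 0, 0]

p_at_3 = precision_at_k(ranked, 3)   # 2 relevant in the top 3
p_at_1 = precision_at_k(ranked, 1)   # the top item is relevant

print(f"P@3: {p_at_3:.3f}")   # 0.667
print(f"P@1: {p_at_1:.3f}")   # 1.000
```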

- Mean Reciprocal Rank (MRR)

MRR averages, over all queries, the reciprocal of the rank at which the first relevant item appears. It provides a single value that summarizes how highly the model places the first relevant result.
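As a worked example, the sketch below computes MRR over three hypothetical queries. It assumes that a query with no relevant item contributes a reciprocal rank of 0, which is a common convention but not the only one.

```python
def mean_reciprocal_rank(queries):
    """queries: list of ranked relevance-flag lists, one per query."""
    total = 0.0
    for flags in queries:
        reciprocal_rank = 0.0  # stays 0 if no relevant item is found
        for position, is_relevant in enumerate(flags, start=1):
            if is_relevant:
                reciprocal_rank = 1.0 / position
                break
        total += reciprocal_rank
    return total / len(queries)

# First relevant item at rank 2, at rank 1, and absent.
queries = [
    [0, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]

mrr = mean_reciprocal_rank(queries)
print(f"MRR: {mrr:.2f}")   # (0.5 + 1.0 + 0.0) / 3 = 0.50
```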

Cross-Validation and Evaluation

Evaluating a model’s performance on a single train/test split can be misleading, because the score depends on which rows happen to land in the test set. To obtain a more reliable estimate of a model’s performance, cross-validation is often used. In k-fold cross-validation, the dataset is split into k subsets (folds); the model is trained k times, each time on k-1 folds and evaluated on the remaining fold, and the scores are averaged. For classification tasks, stratified k-fold cross-validation is a common variant that preserves the class proportions in every fold.

Conclusion

Evaluating the performance of LightGBM models is a crucial step in any machine learning project. The choice of evaluation metrics depends on the specific task and the nature of the data. Understanding and selecting the appropriate metrics can help you fine-tune your models.
