In modern cloud infrastructure, distributing incoming traffic effectively across multiple instances is central to keeping applications highly available and scalable. Among the services offered by AWS (Amazon Web Services), Elastic Load Balancing (ELB) is a core component for this task. ELB automatically distributes incoming application traffic across a fleet of targets, such as EC2 instances, containers, IP addresses, or Lambda functions, in multiple Availability Zones, providing fault tolerance and high availability.

To streamline the provisioning and management of AWS resources, Infrastructure as Code (IaC) tools like Terraform have gained wide popularity. Terraform offers a declarative approach to defining and provisioning infrastructure, allowing users to specify the desired state of their infrastructure in a simple, readable configuration language known as HashiCorp Configuration Language (HCL).

In this article, we walk through the deployment of an AWS load balancer using Terraform. Our aim is to equip you with the knowledge and skills to build robust and scalable load-balancing solutions on AWS. We’ll cover the primary concepts and terminology, then give a step-by-step guide to deploying an AWS load balancer using Terraform.

Primary Terminologies

- AWS Elastic Load Balancer (ELB): Elastic Load Balancer is a managed service provided by Amazon Web Services (AWS) that automatically distributes incoming application traffic across multiple targets, for example, EC2 instances, containers, IP addresses, or Lambda functions, in multiple Availability Zones. It provides high availability, fault tolerance, and scalability for applications by evenly distributing the workload and rerouting traffic away from unhealthy targets.

- Terraform: Terraform is an open-source Infrastructure as Code (IaC) tool created by HashiCorp. It allows users to define and provision infrastructure using a declarative configuration language called HashiCorp Configuration Language (HCL). Terraform enables users to manage and automate the deployment of infrastructure resources across different cloud providers, including AWS, Azure, Google Cloud Platform, and others.

- Terraform Configuration: Terraform configuration refers to a set of files containing infrastructure code written in HCL that describes the desired state of the infrastructure. These configuration files define the resources, their properties, dependencies, and relationships needed to provision and manage the infrastructure. Terraform uses these configuration files to create an execution plan and apply changes to the infrastructure.

- Load Balancer Listener: A load balancer listener is a process that checks for connection requests from clients and forwards them to the appropriate target based on predefined rules. It operates on a specific port and protocol defined by the user and routes incoming traffic to the associated target group or backend service. Listeners play a critical part in defining how traffic is distributed and managed by the load balancer.

- Target Groups: Target groups are logical groupings of targets (for example, EC2 instances, containers, IP addresses, or Lambda functions) registered with a load balancer to receive incoming traffic. Each target group is associated with at least one listener and defines the criteria for routing traffic to the targets, for example, health checks, protocols, ports, and conditions. Target groups allow for flexible routing and load-balancing strategies based on the characteristics of the targets.
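To make the relationship between listeners, rules, and target groups concrete, here is a minimal sketch of a path-based listener rule. It is only an illustration: the two input variables and the `/api/*` path are hypothetical and are not part of the configuration built later in this article.

```hcl
# Hypothetical inputs: ARNs of an existing listener and target group.
variable "listener_arn" {
  type = string
}

variable "api_target_group_arn" {
  type = string
}

# Forward requests whose path starts with /api/ to a dedicated target group.
# Requests that match no rule fall through to the listener's default action.
resource "aws_lb_listener_rule" "api" {
  listener_arn = var.listener_arn
  priority     = 100

  action {
    type             = "forward"
    target_group_arn = var.api_target_group_arn
  }

  condition {
    path_pattern {
      values = ["/api/*"]
    }
  }
}
```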

What is a Load Balancer?

A load balancer is a core component of modern IT infrastructure that distributes incoming network traffic across multiple servers or instances. It acts as a traffic cop, directing client requests to a suitable server based on factors like server availability, current load, or predefined routing rules. Load balancers are commonly used to improve the availability, reliability, and scalability of applications by evenly distributing the workload and keeping any single server from becoming overwhelmed.

In short, a load balancer ensures that no single server bears the whole burden of handling incoming requests, which improves the overall performance and scalability of the application. It also plays an essential part in maintaining high availability and fault tolerance by rerouting traffic away from servers that are experiencing issues or downtime.

Load balancers come in various types:

- Hardware Load Balancers: Physical appliances designed specifically for load-balancing tasks. They offer high performance but are often expensive and require dedicated equipment.

- Software Load Balancers: Load-balancing software deployed on standard servers or virtual machines. Examples include Nginx, HAProxy, and Microsoft Application Request Routing (ARR).

- Cloud Load Balancers: Load balancers offered as a service by cloud providers like AWS, Azure, and Google Cloud Platform. These load balancers are highly scalable, easy to configure, and tightly integrated with cloud environments.

Cloud load balancers, like the AWS Elastic Load Balancer (ELB), offer extra features such as automatic scaling, health checks, and integration with other cloud services, which makes them a popular choice for modern cloud-native applications.

Step-by-Step Process to Create an AWS Load Balancer Using Terraform

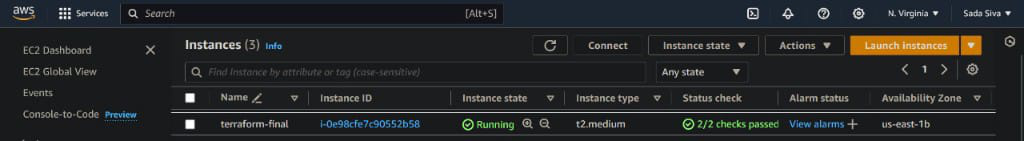

Step 1: Launch An Instance

- Launch an EC2 instance with the Amazon Linux 2 (Kernel 5.10) AMI and the t2.micro instance type. In the security group, open port 22 (SSH) and port 80 (HTTP), and allow all traffic.

- Now connect to the instance with Git Bash or any other terminal such as PuTTY, Command Prompt, or PowerShell.

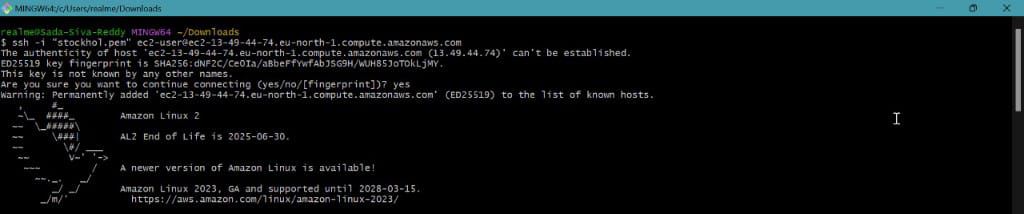

Step 2: Install Terraform

Now install Terraform from the official HashiCorp site, or run the commands below:

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo https://rpm.releases.hashicorp.com/AmazonLinux/hashicorp.repo

sudo yum -y install terraform

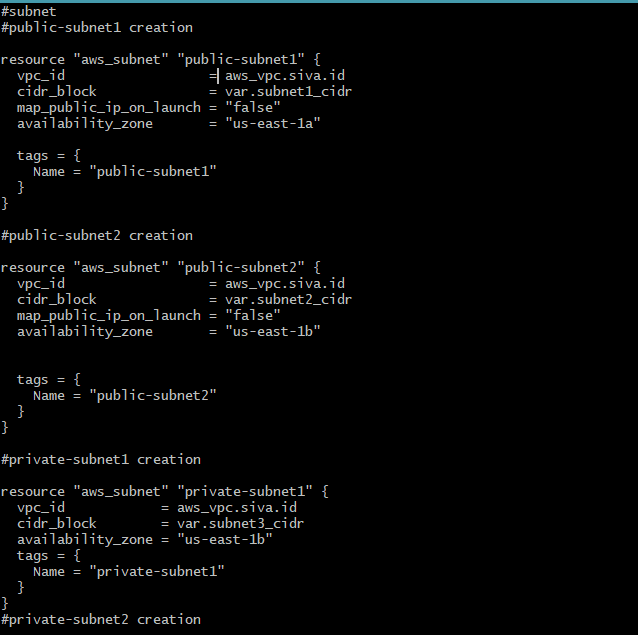

Step 3: Create A File And Write The Terraform Script To Create The AWS Load Balancer

Creation of VPC

In this section, we create the VPC for our EC2 instances.

resource "aws_vpc" "siva" {

cidr_block = var.vpc_cidr

instance_tenancy = "default"

tags = {

Name = "siva-vpc" # configure our own name

}

}

Provider Configuration

provider "aws" {

region = "us-east-1"

}
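It is usually worth pinning the Terraform and provider versions next to the provider block so that `terraform init` resolves the same provider on every machine. The version constraints below are assumptions for illustration, not values taken from this article; pin to whatever versions you have actually tested.

```hcl
terraform {
  # Assumed minimum CLI version; adjust to the version you run.
  required_version = ">= 1.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # assumed major version, not stated in the article
    }
  }
}
```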

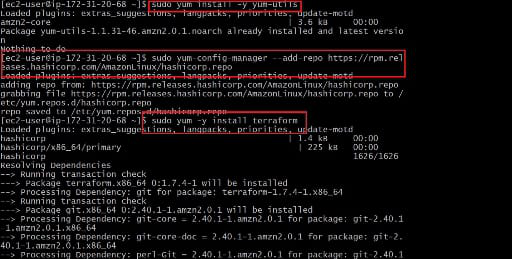

Creation of Subnet

In this section, we create the subnets in the VPC.

resource "aws_subnet" "public-subnet1" {

vpc_id = aws_vpc.siva.id

cidr_block = var.subnet1_cidr

map_public_ip_on_launch = false

availability_zone = "us-east-1a"

tags = {

Name = "public-subnet1"

}

}

#public-subnet2 creation

resource "aws_subnet" "public-subnet2" {

vpc_id = aws_vpc.siva.id

cidr_block = var.subnet2_cidr

map_public_ip_on_launch = false

availability_zone = "us-east-1b"

tags = {

Name = "public-subnet2"

}

}

#private-subnet1 creation

resource "aws_subnet" "private-subnet1" {

vpc_id = aws_vpc.siva.id

cidr_block = var.subnet3_cidr

availability_zone = "us-east-1b"

tags = {

Name = "private-subnet1"

}

}
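The subnets above hard-code `us-east-1a` and `us-east-1b`. As an optional alternative, the Availability Zone names can be looked up from the provider's region. This is a sketch rather than part of the original script, and the local names `first_az` and `second_az` are made up for illustration.

```hcl
# Look up the Availability Zones that are currently available in the region.
data "aws_availability_zones" "available" {
  state = "available"
}

locals {
  # Hypothetical local names; use them in place of the literal zone strings.
  first_az  = data.aws_availability_zones.available.names[0]
  second_az = data.aws_availability_zones.available.names[1]
}
```

A subnet would then use `availability_zone = local.first_az` instead of a literal zone name, which keeps the configuration working if the region in the provider block changes.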

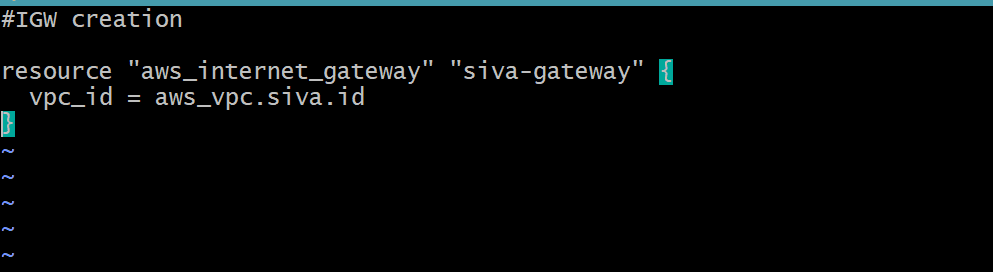

Internet Gateway Creation

In this section, we create an internet gateway so that the VPC can send and receive internet traffic.

resource "aws_internet_gateway" "siva-gateway" {

vpc_id = aws_vpc.siva.id

}

Creation of Route Table

resource "aws_route_table" "route" {

vpc_id = aws_vpc.siva.id

route {

cidr_block = "0.0.0.0/0"

gateway_id = aws_internet_gateway.siva-gateway.id

}

tags = {

Name = "route to internet"

}

}

#route 1

resource "aws_route_table_association" "route1" {

subnet_id = aws_subnet.public-subnet1.id

route_table_id = aws_route_table.route.id

}

#route 2

resource "aws_route_table_association" "route2" {

subnet_id = aws_subnet.public-subnet2.id

route_table_id = aws_route_table.route.id

}

resource "aws_security_group" "web-sg" {

vpc_id = aws_vpc.siva.id

ingress {

from_port = 80

to_port = 80

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

ingress {

from_port = 443

to_port = 443

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

ingress {

from_port = 22

to_port = 22

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

tags = {

Name = "web-sg"

}

}
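The `web-sg` group above is shared by the load balancer and the instances, and it opens SSH and HTTP to the whole internet. A common hardening step is to give the instances their own security group that only accepts HTTP from the load balancer's group. The sketch below assumes that approach; the `app-sg` name is hypothetical and does not appear in the rest of this article.

```hcl
# Hypothetical instance-only security group: HTTP is accepted solely from
# members of web-sg (the load balancer), not from the public internet.
resource "aws_security_group" "app-sg" {
  vpc_id = aws_vpc.siva.id

  ingress {
    from_port       = 80
    to_port         = 80
    protocol        = "tcp"
    security_groups = [aws_security_group.web-sg.id]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "app-sg"
  }
}
```

To use it, the instances would reference `aws_security_group.app-sg.id` in `vpc_security_group_ids` while the load balancer keeps `web-sg`.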

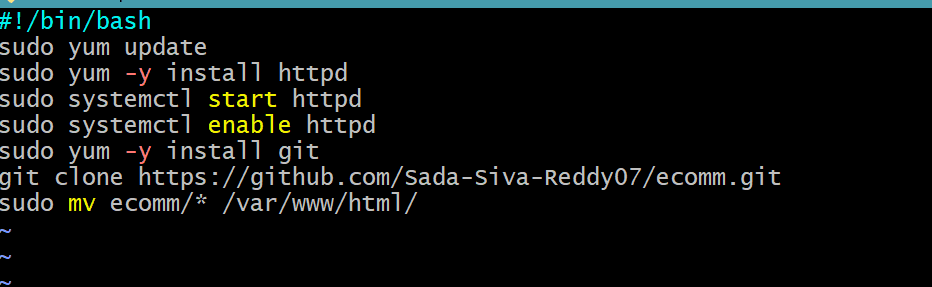

User data for EC2 instance

In this section, we provide user data for the EC2 instance. The script clones the application from GitHub so that the instance can host it.

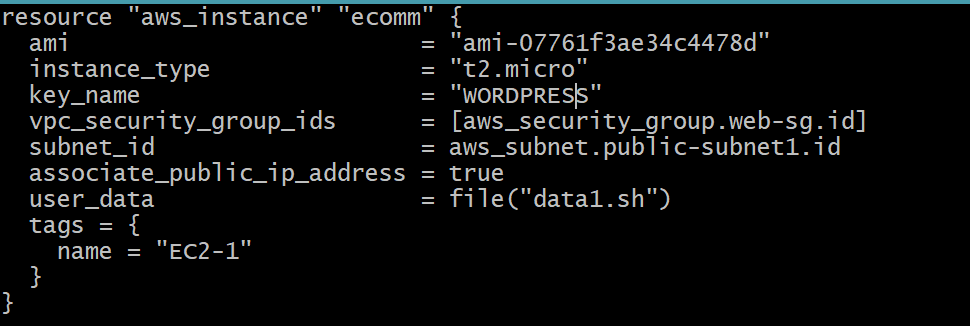

Creation of EC2 instance

resource "aws_instance" "ecomm" {

ami = "ami-07761f3ae34c4478d"

instance_type = "t2.micro"

key_name = "WORDPRESS"

vpc_security_group_ids = [aws_security_group.web-sg.id]

subnet_id = aws_subnet.public-subnet1.id

associate_public_ip_address = true

user_data = file("data1.sh")

tags = {

Name = "EC2-1"

}

}

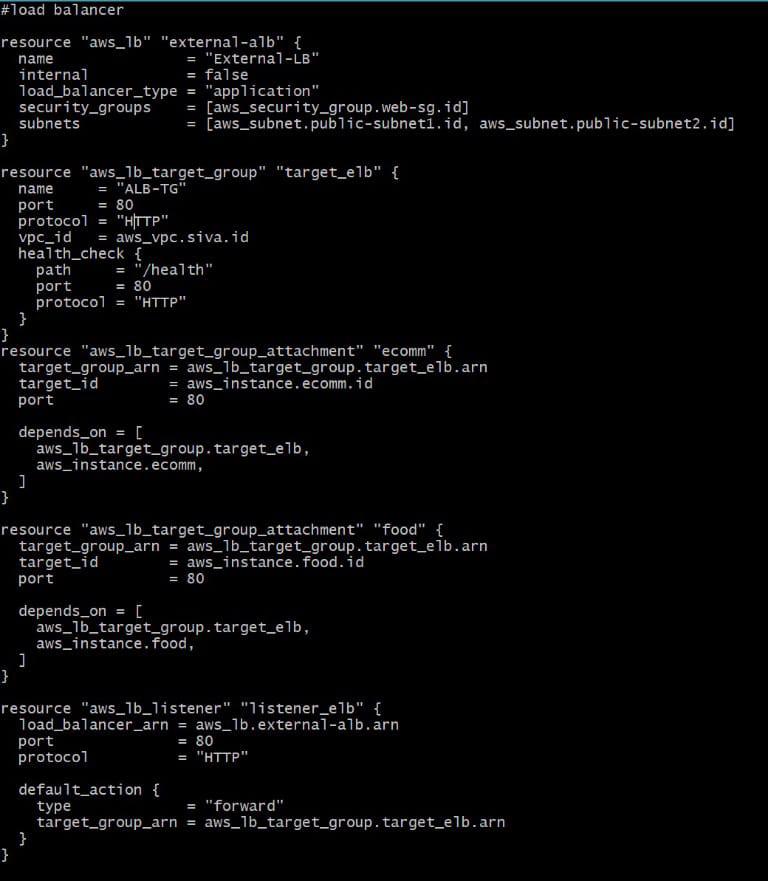

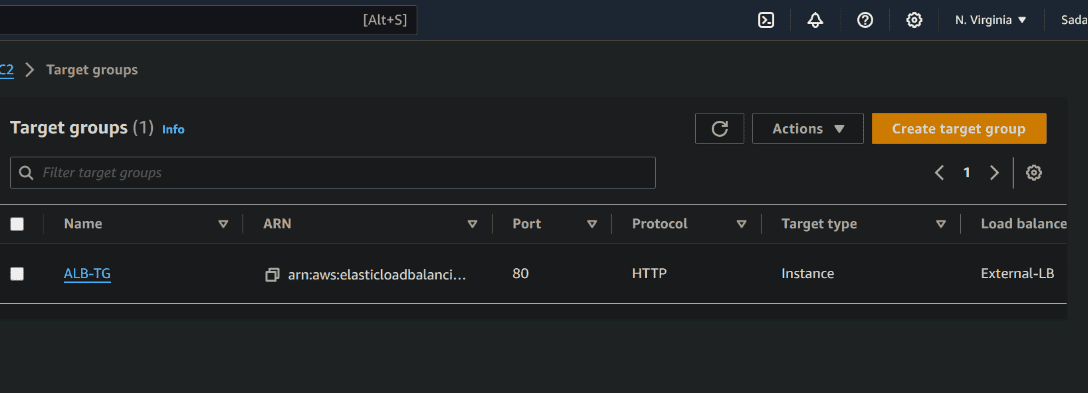
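The load balancer configuration in this article also attaches a second instance, `aws_instance.food`, but its definition does not appear in the listing, so `terraform validate` would fail without it. A minimal sketch, assuming the second instance mirrors the first and is placed in the second public subnet:

```hcl
# Assumed definition of the second instance referenced by the
# "food" target group attachment. Values mirror aws_instance.ecomm.
resource "aws_instance" "food" {
  ami                         = "ami-07761f3ae34c4478d"
  instance_type               = "t2.micro"
  key_name                    = "WORDPRESS"
  vpc_security_group_ids      = [aws_security_group.web-sg.id]
  subnet_id                   = aws_subnet.public-subnet2.id
  associate_public_ip_address = true

  # Assumption: the same user data script is reused. A separate script
  # could be supplied if this instance should host a different application.
  user_data = file("data1.sh")

  tags = {
    Name = "EC2-2"
  }
}
```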

Creation of load balancer

resource "aws_lb" "external-alb" {

name = "External-LB"

internal = false

load_balancer_type = "application"

security_groups = [aws_security_group.web-sg.id]

subnets = [aws_subnet.public-subnet1.id, aws_subnet.public-subnet2.id]

}

resource "aws_lb_target_group" "target_elb" {

name = "ALB-TG"

port = 80

protocol = "HTTP"

vpc_id = aws_vpc.siva.id

health_check {

path = "/health"

port = 80

protocol = "HTTP"

}

}

resource "aws_lb_target_group_attachment" "ecomm" {

target_group_arn = aws_lb_target_group.target_elb.arn

target_id = aws_instance.ecomm.id

port = 80

depends_on = [

aws_lb_target_group.target_elb,

aws_instance.ecomm,

]

}

resource "aws_lb_target_group_attachment" "food" {

target_group_arn = aws_lb_target_group.target_elb.arn

target_id = aws_instance.food.id

port = 80

depends_on = [

aws_lb_target_group.target_elb,

aws_instance.food,

]

}

resource "aws_lb_listener" "listener_elb" {

load_balancer_arn = aws_lb.external-alb.arn

port = 80

protocol = "HTTP"

default_action {

type = "forward"

target_group_arn = aws_lb_target_group.target_elb.arn

}

}
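The security group opens port 443, but the listener above serves plain HTTP only. If you have a certificate in AWS Certificate Manager, an HTTPS listener could look like the sketch below. The `certificate_arn` variable is hypothetical, and the TLS policy is one of the predefined ELB policies, chosen here only as an example.

```hcl
# Hypothetical input: ARN of an ACM certificate for your domain.
variable "certificate_arn" {
  type = string
}

resource "aws_lb_listener" "listener_https" {
  load_balancer_arn = aws_lb.external-alb.arn
  port              = 443
  protocol          = "HTTPS"
  ssl_policy        = "ELBSecurityPolicy-TLS13-1-2-2021-06"
  certificate_arn   = var.certificate_arn

  # TLS terminates at the load balancer; targets still receive HTTP on port 80.
  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.target_elb.arn
  }
}
```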

Creation of variable file

The variable file in Terraform serves as a centralized location for defining and managing input variables used across multiple Terraform configurations. These variables allow users to parameterize their infrastructure code, making it more flexible, reusable, and maintainable.

variable "vpc_cidr" {

default = "10.0.0.0/16"

}

#cidr block for 1st subnet

variable "subnet1_cidr" {

default = "10.0.1.0/24"

}

#cidr block for 2nd subnet

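variable "subnet2_cidr" {

default = "10.0.2.0/24"

}

#cidr block for 3rd (private) subnet; assumed value, needed because private-subnet1 uses var.subnet3_cidr

variable "subnet3_cidr" {

default = "10.0.3.0/24"

}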
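The defaults above can be overridden without editing the variable file. One option is a `terraform.tfvars` file in the same directory, which Terraform loads automatically. The values below are illustrative only and were chosen to stay inside the default `10.0.0.0/16` VPC range.

```hcl
# terraform.tfvars (example values, not from the original article)
subnet1_cidr = "10.0.10.0/24"
subnet2_cidr = "10.0.20.0/24"
```

A single value can also be overridden on the command line, for example `terraform plan -var="subnet1_cidr=10.0.10.0/24"`.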

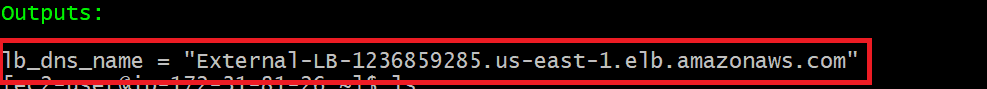

Creation of Output file

#DNS of LoadBalancer

output "lb_dns_name" {

description = "DNS of Load balancer"

value = aws_lb.external-alb.dns_name

}
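Additional outputs can make the result easier to use after `terraform apply`. The two outputs below are a sketch; their names are made up for illustration.

```hcl
# Ready-to-open URL built from the load balancer's DNS name.
output "lb_url" {
  description = "HTTP URL of the load balancer"
  value       = "http://${aws_lb.external-alb.dns_name}"
}

# ARN of the target group, useful when wiring up other tooling.
output "target_group_arn" {
  description = "ARN of the ALB target group"
  value       = aws_lb_target_group.target_elb.arn
}
```

After the apply completes, `terraform output lb_url` prints the value on its own.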

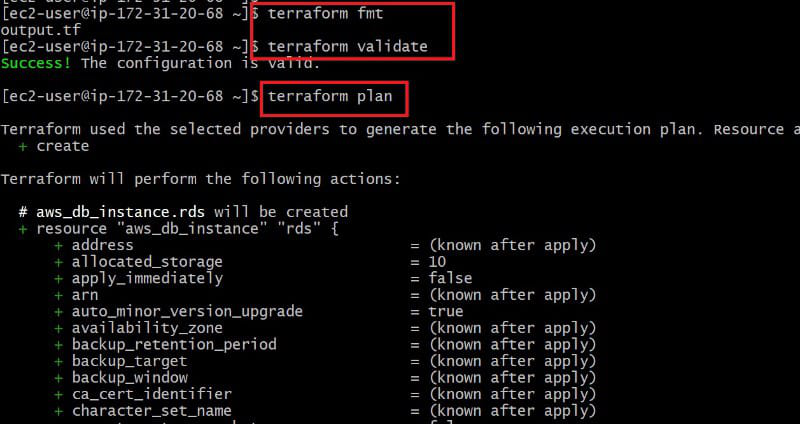

Step 4: Now Initialize Terraform And Execute Terraform Commands

Now initialize Terraform by using the following command:

terraform init

Next, format, validate, and preview the configuration by using the following commands:

terraform fmt

terraform validate

terraform plan

Now run terraform apply to build the infrastructure. When it finishes, it prints the DNS name of the load balancer. Execute it using the following command:

terraform apply --auto-approve

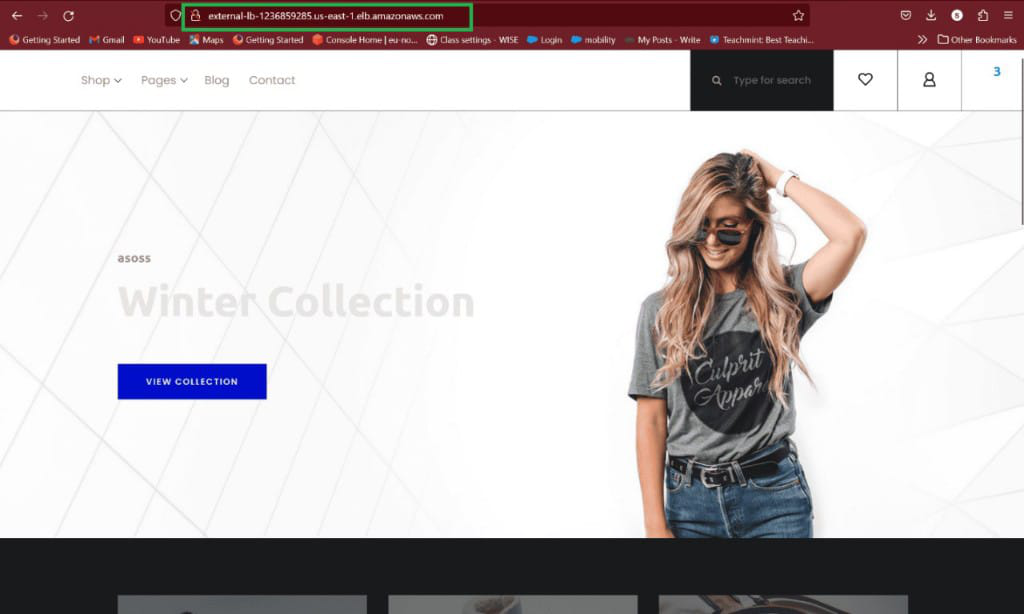

The figure above shows the DNS name of the load balancer. Copy the DNS name and open it in a browser to see the application.

The following screenshots show that we successfully created the load balancer resources in AWS using Terraform.

Target Groups

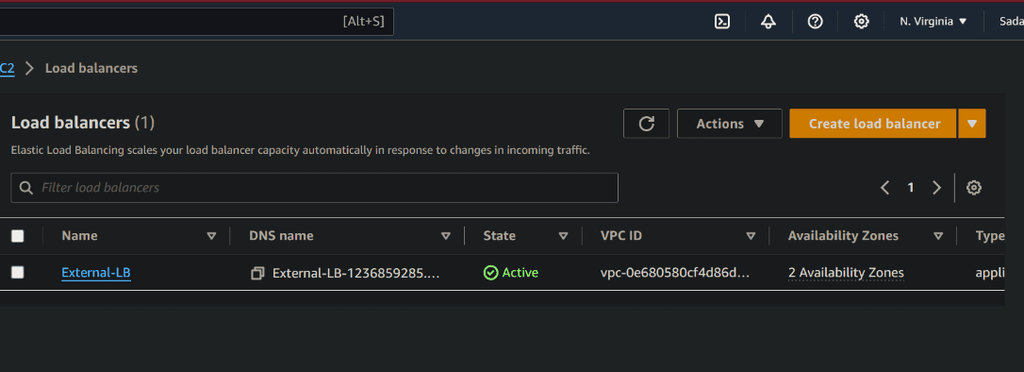

Load balancer

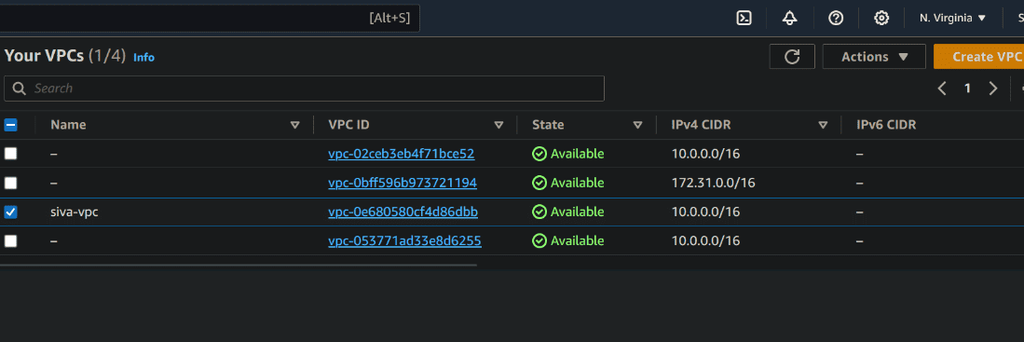

VPC

Conclusion

Using Terraform to deploy AWS load balancers provides a streamlined and efficient way to manage infrastructure as code. By codifying load balancer configurations, listeners, target groups, and related resources, teams can automate provisioning and keep deployments consistent and reliable. Terraform's declarative syntax simplifies infrastructure management, allowing users to define the desired state of their AWS environment and apply changes predictably. The integration with AWS services such as Elastic Load Balancing (ELB) supports high availability, fault tolerance, and scalability of applications. With Terraform, organizations can scale resources up or down, adapt to changing workloads, and integrate load balancing into their CI/CD pipelines for continuous delivery. Overall, deploying AWS load balancers with Terraform improves operational efficiency, speeds up infrastructure deployment, and enables teams to build resilient and scalable architectures in the cloud.

Application Load Balancer Using Terraform – FAQs

Can Terraform manage existing AWS load balancers?

Yes, Terraform can manage existing resources. You can import existing AWS load balancers into Terraform state using the terraform import command, which lets you manage them alongside other infrastructure resources.
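As a sketch of what an import can look like, recent Terraform versions (1.5 and later) also accept a declarative `import` block. The ARN, load balancer name, and `subnet_ids` variable below are placeholders, not values from this article; an `aws_lb` is imported by its ARN.

```hcl
# Hypothetical input: subnets the existing load balancer is attached to.
variable "subnet_ids" {
  type = list(string)
}

# Placeholder ARN; replace it with the ARN of your existing load balancer.
import {
  to = aws_lb.imported
  id = "arn:aws:elasticloadbalancing:us-east-1:123456789012:loadbalancer/app/my-existing-lb/50dc6c495c0c9188"
}

# The resource block must describe the existing load balancer.
# The arguments here are placeholders to be matched to the real settings.
resource "aws_lb" "imported" {
  name               = "my-existing-lb"
  internal           = false
  load_balancer_type = "application"
  subnets            = var.subnet_ids
}
```

With older versions, the equivalent command-line form is `terraform import aws_lb.imported <load-balancer-arn>`. In both cases, run `terraform plan` afterwards and adjust the resource block until the plan shows no unintended changes.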

How does Terraform ensure the security of AWS load balancer configurations?

Terraform supports defining security configurations, for example, security groups and network access control lists (ACLs), inside its configuration files. By following security best practices and defining appropriate security policies, users can deploy and manage AWS load balancers securely through Terraform.

Can Terraform be used to automate the scaling of AWS load balancers?

While Terraform itself doesn’t directly handle autoscaling of load balancers, it can be integrated with other AWS services, for example, Auto Scaling groups, to automate the scaling of backend instances. By defining scaling policies and triggers inside Terraform configurations, users can dynamically adjust the capacity of backend instances based on traffic patterns.
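A minimal sketch of that integration is shown below, assuming the VPC, subnets, security group, and target group defined earlier in this article. The `ami_id` variable, the resource names, the group sizes, and the 50% CPU target are illustrative assumptions.

```hcl
# Hypothetical input: AMI to launch for the backend instances.
variable "ami_id" {
  type = string
}

resource "aws_launch_template" "web" {
  name_prefix            = "web-"
  image_id               = var.ami_id
  instance_type          = "t2.micro"
  vpc_security_group_ids = [aws_security_group.web-sg.id]
}

# Instances launched by this group register with the ALB target group.
resource "aws_autoscaling_group" "web" {
  min_size            = 1
  max_size            = 3
  desired_capacity    = 2
  vpc_zone_identifier = [aws_subnet.public-subnet1.id, aws_subnet.public-subnet2.id]
  target_group_arns   = [aws_lb_target_group.target_elb.arn]
  health_check_type   = "ELB"

  launch_template {
    id      = aws_launch_template.web.id
    version = "$Latest"
  }
}

# Target tracking: add or remove instances to hold average CPU near 50%.
resource "aws_autoscaling_policy" "cpu" {
  name                   = "cpu-target-tracking"
  autoscaling_group_name = aws_autoscaling_group.web.name
  policy_type            = "TargetTrackingScaling"

  target_tracking_configuration {
    predefined_metric_specification {
      predefined_metric_type = "ASGAverageCPUUtilization"
    }
    target_value = 50
  }
}
```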

Is it possible to update AWS load balancer configurations without interrupting traffic?

Yes. Terraform can be used to implement rolling updates and blue-green deployments, allowing users to change load balancer configuration without disrupting traffic. By defining changes in Terraform configuration files and applying them incrementally, users can roll out updates to load balancer configurations while maintaining continuous service availability.
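One way to shift traffic gradually is a weighted forward action that splits requests between the current target group and a new one. The sketch below assumes the load balancer, VPC, and target group from this article. The "green" target group, the listener name, port 8080, and the 90/10 split are hypothetical, and the security group would also need to allow that port.

```hcl
# Hypothetical second target group holding the new application version.
resource "aws_lb_target_group" "green" {
  name     = "ALB-TG-GREEN"
  port     = 80
  protocol = "HTTP"
  vpc_id   = aws_vpc.siva.id
}

# Separate listener on port 8080 so it does not clash with the existing
# listener on port 80. In practice the weighted forward block could
# instead replace the default action of the existing listener.
resource "aws_lb_listener" "listener_weighted" {
  load_balancer_arn = aws_lb.external-alb.arn
  port              = 8080
  protocol          = "HTTP"

  default_action {
    type = "forward"

    forward {
      # 90% of requests stay on the current target group.
      target_group {
        arn    = aws_lb_target_group.target_elb.arn
        weight = 90
      }

      # 10% of requests go to the new target group.
      target_group {
        arn    = aws_lb_target_group.green.arn
        weight = 10
      }
    }
  }
}
```

Changing the weights in small steps and re-applying moves traffic to the new target group without removing the old one first.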

How can I monitor the performance and health of AWS load balancers provisioned with Terraform?

AWS provides CloudWatch metrics and health checks for monitoring the performance and health of load balancers. By defining CloudWatch monitoring in Terraform configurations, users can track metrics such as request latency, error rates, and backend instance health, enabling proactive troubleshooting and tuning of load balancer configurations.
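As an example, the sketch below defines a CloudWatch alarm on the number of unhealthy targets behind the load balancer created in this article. The alarm name, period, and threshold are illustrative assumptions, and no notification action is attached.

```hcl
# Alarm when any target in the group is reported unhealthy
# for two consecutive one-minute periods.
resource "aws_cloudwatch_metric_alarm" "unhealthy_hosts" {
  alarm_name          = "alb-unhealthy-hosts"
  namespace           = "AWS/ApplicationELB"
  metric_name         = "UnHealthyHostCount"
  statistic           = "Maximum"
  period              = 60
  evaluation_periods  = 2
  comparison_operator = "GreaterThanThreshold"
  threshold           = 0

  # ALB metrics are keyed by the ARN suffixes, not the full ARNs.
  dimensions = {
    LoadBalancer = aws_lb.external-alb.arn_suffix
    TargetGroup  = aws_lb_target_group.target_elb.arn_suffix
  }
}
```

To be notified, an SNS topic ARN could be added through the `alarm_actions` argument.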
