In an age where information is abundantly available on the internet, the need for efficient content consumption has never been greater. This insightful article explores the development of a cutting-edge web-based application for summarizing website content, all thanks to the powerful capabilities of the Hugging Face Transformer model.

When you like an article but it’s super long, it can be hard to find the time to read the whole thing. That’s where a summarizer can be a real lifesaver. It’s a tool that gives you a short and sweet version of the article, so you can quickly get the main points without spending too much time.

Website Summarizer

A Website Summarizer is a vital tool for summarizing and reducing web page information. Offering succinct and cohesive summaries, enables visitors to easily comprehend the major concepts and vital information from lengthy articles, blog entries, or news reports. Website Summarizers examine the source material, identify essential lines or phrases, and provide summaries that encapsulate the core of the information using powerful natural language processing algorithms. This not only saves time but also improves the user’s capacity to make educated decisions, whether it’s staying up to speed on current events or performing research. Website Summarizers are extremely useful in today’s information age, making internet content more accessible and controllable.

Types of Summarizers

There are two types of summarizers out there:

- Extractive Summarizers: Extractive summarizers select and condense important sentences or phrases directly from the source text. They do not generate new sentences but rather pull relevant portions from the original content. This technique is not very precise and meaningful in the real world.

- Abstractive Summarizers: These summarizers generate summaries in a more human-like manner, producing original sentences to convey the originality of the content. They often rephrase and rewrite sentences. This technique is meaningful and feels like human generated. Abstractive summarization tends to be more contextually accurate.

This article focuses on abstractive summarizers. We will be developing a real-time summarizer that extracts meaningful and human-like summaries from the given website URL.

What is BART?

BART, also known as Bidirectional and Auto Regressive Transformers stands out as a language model created by Facebook AI. It falls under the category of sequence-to-sequence (seq2seq) models enabling it to be trained for tasks involving converting one set of data into another. These tasks include machine translation, text summarization and question answering.

What sets BART apart is its training process. It undergoes training on a dataset consisting of text and code using an objective. In terms of BART is trained to reconstruct text that has been altered in some way such as, through sentence shuffling or replacing sections with a mask token. This pre-training approach equips BART with an understanding of language structure and meaning which empowers it to excel in real-world applications.

BART’s Architecture

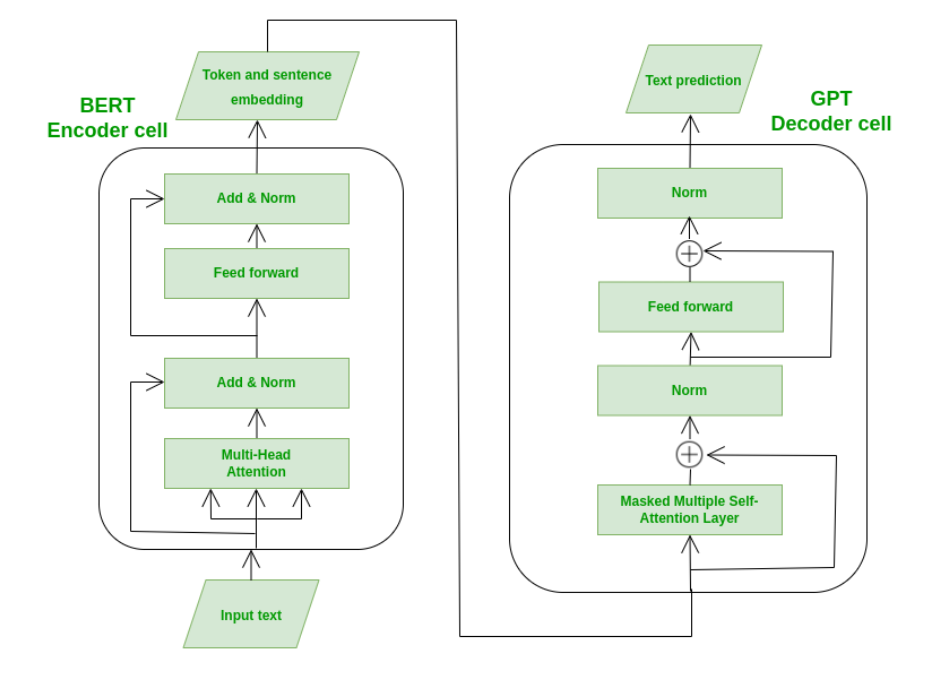

BART functions, as a model that operates in a sequence, to sequence manner. In terms it takes a series of input tokens. Generates a corresponding series of output tokens. The model consists of two components; an encoder and a decoder:

- The encoder is designed as a Transformer meaning it analyzes the input tokens, in both directions. This enables the encoder to understand how different tokens in the input sequence are related to each other over distances.

- The decoder operates as a right Transformer processing the output tokens sequentially. This ensures that the decoder generates an output sequence that makes sense and flows coherently.

BART encoder-decoder network architecture

Decoders consist of multiple layers of attention and feed-forward neural networks. The attention layers enable the model to grasp connections between tokens in both input and output sequences. The feed-forward neural networks enable the model to learn relationships, among these tokens.

BART’s Working

- BART undergoes training using a denoising autoencoder objective, which means it is taught to reconstruct a modified version of the input sequence.

- The modified input sequence is formed by introducing variations to the input sequence.

- These variations can take forms, such, as removing tokens adding random tokens or changing the order of tokens randomly.

- The objective of the denoising autoencoder encourages BART to learn a representation of the input sequence that remains robust in the presence of noise.

- This implies that BART can restore the input sequence even if it has been distorted by noise.

- As a result BART proves to be highly suitable for natural language processing tasks like text summarization, machine translation and question answering where the input data is often prone, to containing noise.

Summarization using Hugging Face Transformer

The summarization task offered by Hugging Face Transformers involves creating a brief and logical summary of a longer piece of text or document. This task falls under the wider scope of natural language processing (NLP) and is especially valuable for condensing information, improving readability for readers, or extracting important insights from long articles, documents, or web pages.

Hugging Face Transformer

The Hugging Face Transformers, commonly known as “Transformers,” is a freely available library and system for deep learning and natural language processing (NLP). It offers a diverse array of pre-trained models, such as BERT, GPT, RoBERTa, and others, based on transformers. These models cater to various NLP tasks, including text categorization, language creation, identifying named entities, and machine translation.

Tools Required

- A text editor (VS Code is recommended)

- Latest version of Python

- A web browser (Google Chrome is recommended)

- Internet Connection (For installing packages)

Building Website Summarizer

Creating Workspace

- Create a new folder on your PC and open it in your editor (VS Code).

- Create a new file named “app.py” in this newly created folder. (This is the main file where we do our work)

Required Packages

transformers: This package uses Natural Language Processing under the hood and summarizes the input text using Transformer architecture.

pip install transformers

tensorflow: This package is needed for transformers to work.

pip install tensorflow

requests: This package is used to make a “GET” request on the given website URL for extracting text.

pip install requests

bs4: This package is used for scraping the content in a given website for summarizing.

lxml: This is used for processing XML and HTML documents.

pip install bs4

pip install lxml

streamlit: This package is used for designing a GUI (Graphical User Interface) thus making an interactive fully functional application.

pip install streamlit

Install all the above packages using pip in the same order mentioned (Use Virtual Environment if you get any issues in the installation)

Importing Packages

Python3

from transformers import pipeline

import requests

from bs4 import BeautifulSoup

import re

import streamlit as st

|

Analysis:

- Here, another inbuilt package is also imported.

- re (Regular Expressions) is used for removing text from scrapped text which is not useful for the summary.

Extracting Text

Python3

def extractText(url):

response = requests.get(url)

if response.status_code == 200:

soup = BeautifulSoup(response.text, 'lxml')

excludeList = ['disclaimer', 'cookie', 'privacy policy']

includeList = soup.find_all(

['h1', 'h2', 'h3', 'h4', 'h5', 'h6', 'p'])

elements = [element for element in includeList if not any(

keyword in element.get_text().lower() for keyword in excludeList)]

text = " ".join([element.get_text()

for element in elements])

text = re.sub(r'\n\s*\n', '\n', text)

return text

else:

return "Error in response"

|

Code Analysis:

- A function extractText is created which returns the text from a given website URL.

- Using the requests library, a GET request is made which returns the body of the website.

- Then by using the Beautiful Soup library, all the headings and paragraphs from the website are extracted and joined into a single text which is then returned by the function.

- Empty lines and strings are removed from text using re package.

- Some of the blocks such as “Disclaimer”, and “Cookies” are removed from the extracted text. You can modify this as per your needs.

Splitting Text Into Chunks

Python3

def splitTextIntoChunks(text, chunk_size=1024):

chunks = []

for i in range(0, len(text), chunk_size):

chunk = text[i:i + chunk_size]

chunks.append(chunk)

return chunks

|

Code Analysis:

- A function splitTextIntoChunks is created which returns the chunks of text from a given long text.

- It first loops through every character and appends a text string of chunk_size (Defaulted to 1024) to the chunks[] list and is returned by the function.

Note: The model we are using is “facebook/bart-large-cnn” which takes a max of 1024 tokens. So 1024 is specified as chunk_size. Adjust the chunk_size parameter according to your model needs.

Summarizing Text

Python3

def summarize(text, chunk_size=1024, chunk_summary_size=128):

summarizer = pipeline("summarization", model="facebook/bart-large-cnn")

chunks = splitTextIntoChunks(text, chunk_size)

summaries = []

for chunk in chunks:

size = chunk_summary_size

if(len(chunk) < chunk_summary_size):

size = len(chunk)/2

summary = summarizer(chunk, min_length=1, max_length=size)[0]["summary_text"]

summaries.append(summary)

concatenated_summary = ""

for summary in summaries:

concatenated_summary += summary + " "

return concatenated_summary

|

Code Analysis:

- A function summarize is created which returns the summary text from the input text.

- Using the pipeline function of the transformer, the task is specified as “summarization” and the model as “facebook/bart-large-cnn” which is quite efficient and powerful for summarization tasks.

- The input text is divided into chunks and each chunk is summarized using the summarizer function.

- Here, chunk_summary_size is defaulted to 128 characters. This is the length of the summary for each text chunk. You can adjust it accordingly.

- The individual summaries are then concatenated and returned by the function.

Note: After this function is run, a TensorFlow model sized 1.63GB gets installed on your machine.

Summary Generations

Python3

text = extractText(url)

summarize(text)

|

Output:

'Natural Language Processing is a subset of artificial intelligence. It enables machines to comprehend and analyze human languages.

In NLP we need to perform some extra processing steps. NLP software mainly works at the sentence level and it also expects words to be separate.

We will see some of the ways of collecting data if it is not available in our local machine or database. In NLP this process of feature engineering is

known as Text Representation or Text Vectorization. In the traditional approach, we create a vocabulary of unique words assign a unique id

(integer value) for each word. Bag of n-gram tries to solve this problem by breaking text into chunks of n continuous words.

N-gram representations are in the form of a sparse matrix, where each row represents a sentence and each column represents an n-gram in the vocabulary.

TF-IDF tries to quantify the importance of a given word relative to the other word in the corpus.

The value in the vector represents the measurements of some features or quality of the word. This is not interpretable for humans but Just for

representation purposes. We can understand this with the help of the below table. Heuristic-based approach is also used for the data-gathering

tasks for ML/DL model. Regular expressions are largely used in this type of model. Recurrent neural networks are a class of artificial neural networks.

The basic concept of RNNs is that they analyze input sequences one element at a time while maintaining track in a hidden state that contains a summary

of the sequence’s previous elements. This enables the RNN to process data from sources like natural languages, where context is crucial.

Long Short-Term Memory (LSTM) is an advanced form of RNN model. LSTMs function by selectively passing or retaining information from one-time

step to the next. Gated Recurrent Unit (GRU) is also the advanced form of RNN. GRUs also have gating mechanisms that allow them to selectively

update or forget information from the previous time steps. '

Creating GUI

Python3

st.title("Website Summarizer")

url = st.text_input("Enter the website URL")

if st.button("Summarize"):

if url:

try:

info_text = st.empty()

info_text.info("Extracting text from the website...")

article = extractText(url)

info_text.info("Summarizing the text...")

summarized = summarize(article)

info_text.info("Adding final touches...")

finalSummary = summarize(summarized)

info_text.empty()

st.header("Summarized Text")

st.write(finalSummary)

except Exception as e:

st.error("An error occurred. Please check the URL or try again later.")

else:

st.warning("Please enter a valid website URL.")

|

Code Analysis:

- Using streamlit, a title and text box are specified.

- A button named “Summarize” is created, which when clicked, first the text will be extracted from the website, and then summarized.

- Then, the summarize function is called again on the summary generated to make the final text condensed and meaningful.

- Info messages are also displayed to the user to make the application interactive.

- Finally, the summary is shown below the text box and info messages are hidden on the application.

Complete Code Implementation

Python3

from transformers import pipeline

import requests

from bs4 import BeautifulSoup

import re

import streamlit as st

def extractText(url):

response = requests.get(url)

if response.status_code == 200:

soup = BeautifulSoup(response.text, 'lxml')

excludeList = ['disclaimer', 'cookie', 'privacy policy']

includeList = soup.find_all(

['h1', 'h2', 'h3', 'h4', 'h5', 'h6', 'p'])

elements = [element for element in includeList if not any(

keyword in element.get_text().lower() for keyword in excludeList)]

text = " ".join([element.get_text()

for element in elements])

text = re.sub(r'\n\s*\n', '\n', text)

return text

else:

return "Error in response"

def splitTextIntoChunks(text, chunk_size=1024):

chunks = []

for i in range(0, len(text), chunk_size):

chunk = text[i:i + chunk_size]

chunks.append(chunk)

return chunks

def summarize(text, chunk_size=1024, chunk_summary_size=128):

summarizer = pipeline("summarization", model="facebook/bart-large-cnn")

chunks = splitTextIntoChunks(text, chunk_size)

summaries = []

for chunk in chunks:

size = chunk_summary_size

if(len(chunk) < chunk_summary_size):

size = len(chunk)/2

summary = summarizer(chunk, min_length=1, max_length=size)[0]["summary_text"]

summaries.append(summary)

concatenated_summary = ""

for summary in summaries:

concatenated_summary += summary + " "

return concatenated_summary

st.title("Website Summarizer")

url = st.text_input("Enter the website URL")

if st.button("Summarize"):

if url:

try:

info_text = st.empty()

info_text.info("Extracting text from the website...")

article = extractText(url)

info_text.info("Summarizing the text...")

summarized = summarize(article)

info_text.info("Adding final touches...")

finalSummary = summarize(summarized)

info_text.empty()

st.header("Summarized Text")

st.write(finalSummary)

except Exception as e:

st.error("An error occurred. Please check the URL or try again later.")

else:

st.warning("Please enter a valid website URL.")

|

The final application can be run and built using the below command in the terminal.

After running the command it will give you a localhost URL where the application can be accessed locally in the system and a Network URL where the application can be accessed anywhere on the internet, copy and paste any of the above two URLs in your browser to access the application.

Here is the website that is displayed after running the above command.

streamlit run app.py

Output:

-660.png)

Video Output

Frequently Asked Questions (FAQs)

Q1: What is the purpose of a summarizer?

In today’s age with an abundance of information online a summarizer proves to be a valuable asset, for individuals seeking to efficiently extract key insights, from lengthy articles. This not only saves time, but also enhances the overall experience of consuming content.

Q2: What are the two main types of summarizers?

The two main types of summarizers are Extractive Summarizers and Abstractive Summarizers.

Q3: What is a Hugging Face Transformer?

Hugging Face Transformers is a tool, for learning and natural language processing (NLP). It offers trained models such as BERT, GPT, RoBERTa and others that play a vital role, in different NLP tasks. One of these tasks includes text summarization as highlighted in the article.

Q4: What is BART, and what makes it stand out in text summarization tasks?

BART, which stands for Bidirectional and Auto-Regressive Transformers is a language model developed by Facebook AI. It has been trained to reconstruct text that has undergone alterations giving it the ability to excel in tasks such, as text summarization. What sets BART apart is its training methodology, which allows it to possess a profound grasp of language structure and significance.

Share your thoughts in the comments

Please Login to comment...