Understanding Kubernetes Kube-Proxy And Its Role In Service Networking

Last Updated: 21 Mar, 2024

Networking is central to Kubernetes, and understanding how its different parts work lets you tune your cluster to the needs of your application. Kube-Proxy is a key component at the core of Kubernetes networking: it translates your Services into concrete networking rules on every node.

What Is Kube-Proxy?

Kube-Proxy is a Kubernetes agent that runs on every node in the cluster. It watches Service objects and their endpoints for changes and, whenever something changes, updates the node’s network rules to match. Kube-Proxy typically runs as a DaemonSet, which is how kubeadm installs it. It can also run directly on the node as a Linux process, which is what happens when you install the cluster components manually from the official Linux tarball binaries.

What Is A Kubernetes Service?

A Service in Kubernetes acts like a traffic manager for a group of pods that do the same job. It gives them a single, stable address (an IP and a DNS name) through which they can be reached. This hides the individual pod addresses and makes it easy to reach the pods and balance traffic across them. Some of the features of Services are:

- Consistent Communication: Services give a group of pods a dedicated, stable address.

- Load Balancing: They spread traffic across pods so that no single pod is overloaded.

- Service Discovery: Services make it easier to locate and establish connections with clustered applications.

- Scalability: They facilitate the development of scalable, high-traffic applications.
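
How a Service ends up with "a collection of pods" can be illustrated with a small sketch. It assumes the usual label-selector mechanism, in which a Service targets the ready pods whose labels contain every key/value pair of its selector. The `Pod` class, the `select_endpoints` helper, and all names and IP addresses below are hypothetical and only serve the illustration; this is not the Kubernetes implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Pod:
    """Hypothetical, simplified view of a pod: name, IP, labels, readiness."""
    name: str
    ip: str
    labels: dict[str, str] = field(default_factory=dict)
    ready: bool = True


def select_endpoints(selector: dict[str, str], pods: list[Pod]) -> list[str]:
    """Return the IPs of ready pods whose labels contain every selector pair."""
    return [
        pod.ip
        for pod in pods
        if pod.ready and all(pod.labels.get(k) == v for k, v in selector.items())
    ]


# Made-up pods: three "web" pods (one not ready) and one "db" pod.
pods = [
    Pod("web-1", "10.244.1.5", {"app": "web"}),
    Pod("web-2", "10.244.2.7", {"app": "web"}),
    Pod("db-1", "10.244.1.9", {"app": "db"}),
    Pod("web-3", "10.244.3.2", {"app": "web"}, ready=False),
]

# Only the ready pods labelled app=web back the Service.
print(select_endpoints({"app": "web"}, pods))
```

Clients keep using the Service address while the list of pod IPs behind it changes, which is what makes the address stable.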

Benefits Of Using Kube-Proxy

The following are the benefits of using kube-proxy:

- Service-To-Pod Mapping: Kube-proxy maintains network rules that map each Service’s IP address to the IP addresses of the pods behind it. This ensures that requests sent to a Service reach the right pods.

- Continuous Re-Mapping: Kube-proxy continually updates this mapping to account for cluster changes such as pod termination and recreation, which keeps the Service-to-Pod mapping accurate and current.

- Promotes Communication: By managing routing at the node level, Kube-proxy keeps communication between Services and pods working smoothly even though pods are created and destroyed dynamically.

- Load Balancing: Kube-proxy spreads the requests sent to a Service across the pods that back it. This improves application availability and helps keep individual pods from being overloaded.
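
The continuous re-mapping described above boils down to comparing the endpoints a Service had with the endpoints it has now. The following is a minimal sketch of that idea, assuming the mapping is held as a plain dictionary from Service IP to a set of pod IPs. `diff_endpoints`, `apply_update`, and the addresses are hypothetical names and values, not part of Kube-proxy.

```python
from __future__ import annotations


def diff_endpoints(old: set[str], new: set[str]) -> tuple[set[str], set[str]]:
    """Return (to_add, to_remove): the changes needed to move from old to new."""
    return new - old, old - new


def apply_update(
    table: dict[str, set[str]], service_ip: str, new_endpoints: set[str]
) -> tuple[set[str], set[str]]:
    """Update the table entry for one Service and report what changed."""
    old = table.get(service_ip, set())
    to_add, to_remove = diff_endpoints(old, new_endpoints)
    table[service_ip] = (old | to_add) - to_remove
    return to_add, to_remove


# Made-up state: one Service backed by two pods.
table = {"10.96.0.10": {"10.244.1.5", "10.244.2.7"}}

# Pod 10.244.2.7 is terminated and recreated with a new IP, 10.244.3.4.
added, removed = apply_update(table, "10.96.0.10", {"10.244.1.5", "10.244.3.4"})
print("add:", added, "remove:", removed)
print("table:", table)
```

Only the changed entries are touched, so the Service address stays the same while its backends are swapped underneath it.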

Role Of Kube-Proxy In Service Networking

Kube-Proxy (the Kubernetes network proxy) is a key component of a Kubernetes cluster’s networking design. By managing the rules that route and load-balance Service traffic, it keeps communication between Services and pods working. The Kube-Proxy workflow inside the Kubernetes cluster architecture is explained step by step below:

- Creation/Update Of Services: Kube-Proxy watches for Services being created or modified within the Kubernetes cluster.

- Retrieving Service IP: Kube-Proxy connects to the Kubernetes API server to obtain the IP address and port information of each Service.

- Pod Mapping: Kube-Proxy maintains rules that map each Service’s IP address to the IP addresses of the Service’s pods. This mapping is updated continuously to account for cluster changes, like pod termination and recreation.

- Interception Of Traffic: When a request is sent to a Service’s IP address, the rules that Kube-Proxy has installed intercept it. In userspace mode Kube-Proxy itself receives the connection; in iptables and IPVS modes the interception happens inside the Linux kernel.

- Routing Lookup: The Service IP address and port are used to look up the matching pod IP addresses.

- Forwarding Of Packets: Based on the lookup, the intercepted traffic is forwarded to one of the pods behind the Service, with the destination address rewritten to that pod’s address.

- Load Balancing: When a Service is backed by several pods, traffic is spread across them so that individual pods are not overloaded. How a pod is chosen depends on the proxy mode: iptables mode picks a backend at random, while IPVS mode supports schedulers such as round-robin and least connections.

- Response Return: Once the pod has processed the request, its reply is returned to the client with the address translation reversed, so the client sees a response from the Service address it contacted.

- Continuous Monitoring: Kube-Proxy keeps watching for changes to Service objects and their pods inside the cluster, and updates the mapping and forwarding rules as necessary to keep communication between Services and pods accurate.
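
The workflow above can be sketched as a toy model. It is a deliberately simplified illustration, not how Kube-Proxy is implemented: the `Packet` and `ToyServiceProxy` classes, their methods, and all addresses are hypothetical, and backend selection is plain random choice.

```python
from __future__ import annotations

import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    """Hypothetical packet: only the fields needed for the illustration."""
    src_ip: str
    dst_ip: str
    dst_port: int


class ToyServiceProxy:
    """Toy model of the steps above: sync rules, look up, pick a pod, rewrite."""

    def __init__(self, seed: int | None = None) -> None:
        # (service_ip, service_port) -> list of (pod_ip, target_port)
        self._rules: dict[tuple[str, int], list[tuple[str, int]]] = {}
        self._rng = random.Random(seed)

    def sync(self, service_ip: str, port: int, backends: list[tuple[str, int]]) -> None:
        """Create, update, or (with no backends) remove the rule for a Service."""
        key = (service_ip, port)
        if backends:
            self._rules[key] = list(backends)
        else:
            self._rules.pop(key, None)

    def forward(self, packet: Packet) -> Packet:
        """Rewrite the destination if the packet targets a known Service."""
        backends = self._rules.get((packet.dst_ip, packet.dst_port))
        if backends is None:
            return packet  # not Service traffic in this toy model: leave untouched
        pod_ip, target_port = self._rng.choice(backends)
        return replace(packet, dst_ip=pod_ip, dst_port=target_port)


proxy = ToyServiceProxy(seed=0)
proxy.sync("10.96.0.20", 80, [("10.244.1.5", 8080), ("10.244.2.7", 8080)])

request = Packet(src_ip="10.244.9.9", dst_ip="10.96.0.20", dst_port=80)
forwarded = proxy.forward(request)
print(request, "->", forwarded)
```

The client only ever addresses the Service IP and port; the rewrite to a pod IP and target port happens on the node.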

Kube Proxy In Kubernetes Cluster Architecture

Several essential components of a Kubernetes cluster work together to manage containerised apps. The main elements of the Kubernetes cluster architecture are:

- kube-proxy: manages Service networking on each node by directing traffic from Services to pods, which enables load balancing and supports service discovery. It watches the cluster continuously and adjusts its routing and load-balancing rules as Services and pods change.

- etcd: the distributed key-value store that holds the cluster state. It stores the settings, metadata, and up-to-date state data the cluster needs to function.

- kube-apiserver: exposes the Kubernetes API endpoint used to administer the cluster. It is the front end of the Kubernetes control plane, through which users and other components communicate with the cluster.

- kube-controller-manager: runs several controllers that maintain the intended cluster state. It continuously checks that the actual state of objects such as Deployments, ReplicaSets, and Services matches the desired state described in their Kubernetes objects.

- kube-scheduler: assigns pods to nodes according to resource requirements and constraints. It chooses a suitable node for every pod based on the pod’s spec, the node’s state, and the resources that are available.

- kubelet: an agent that runs on each node, reports node status, and manages the containers on that node. It communicates with the Kubernetes API server to obtain pod specifications, has the required container images pulled, and verifies that containers are running as specified.

- CRI (Container Runtime Interface): the interface that Kubernetes uses to communicate with container runtimes such as containerd, CRI-O, or Docker Engine (via the cri-dockerd adapter) in order to manage container images and the lifecycle of containers.

- Cloud Controller Manager: manages cloud-specific resources by interacting with the APIs of the cloud provider. It separates the provider-specific control plane logic from the rest of Kubernetes, which allows smooth integration with cloud environments.

- Pods: the smallest deployable units in Kubernetes. A pod holds one or more application containers together with the network settings and storage resources they need to run inside the cluster.

- Cloud Provider API: the interface offered by cloud service providers such as AWS, Azure, and Google Cloud, which allows cloud resources to be managed programmatically.

What Are The Kube-Proxy Modes?

The mode determines how Kube-Proxy implements its NAT (Network Address Translation) rules. Kube-proxy has three primary modes:

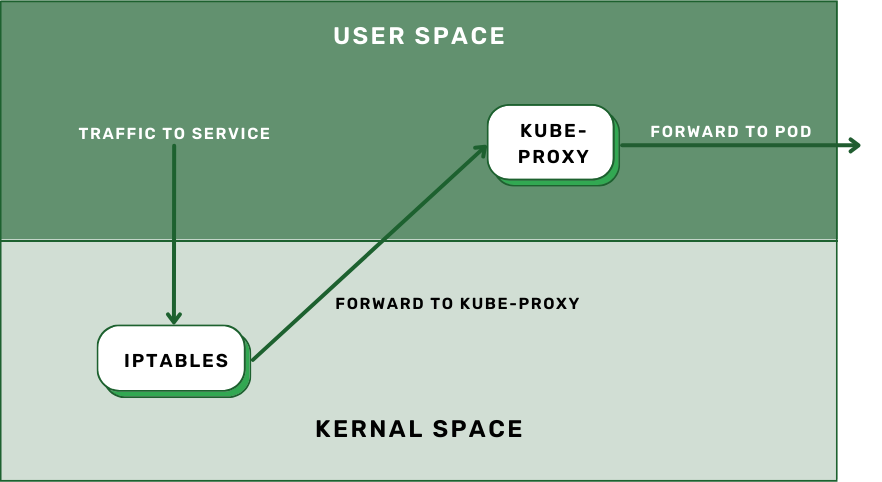

Userspace mode

In this mode, Kube-proxy runs on each node as a userspace process that intercepts Service traffic and distributes requests across pods itself. It is portable and simple, but the overhead of processing every packet in userspace makes it inefficient under heavy traffic loads. It is a legacy mode and has been removed from recent Kubernetes releases.

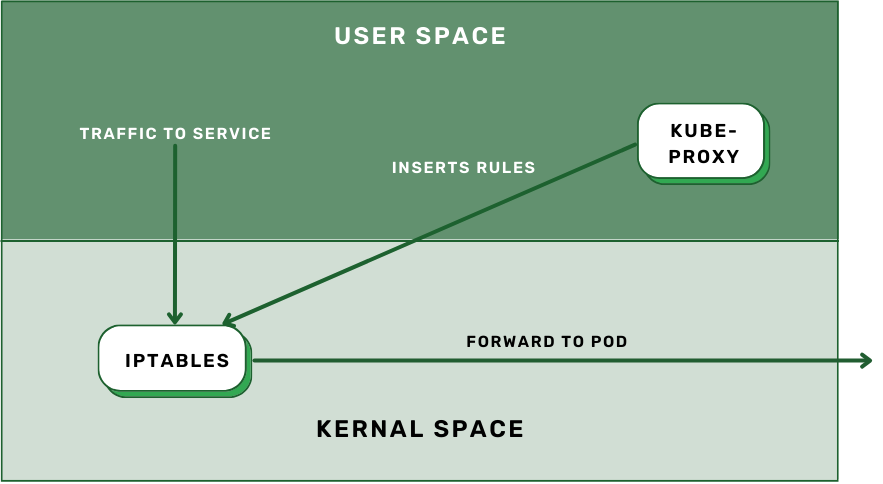

iptables mode

To manage Service traffic, Kube-proxy sets up iptables rules on each node. Packets are redirected to the relevant pods by iptables NAT (Network Address Translation) in the kernel, so they never pass through the Kube-proxy process. This mode works well with modest traffic volumes and is more efficient than userspace mode.
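
In iptables mode, a backend is commonly chosen by a chain of rules that each match with a certain probability, with the last rule always matching. The sketch below only does the arithmetic for that scheme, under the assumption that rule `i` (0-based) of `n` uses probability `1/(n - i)`; the helper names `rule_probabilities` and `effective_share` are hypothetical.

```python
from fractions import Fraction


def rule_probabilities(n: int) -> list[Fraction]:
    """Match probability of each rule when n backends are listed in order.

    Rule i is evaluated only if rules 0..i-1 did not match, so it uses
    1/(n - i); the last rule therefore always matches.
    """
    if n < 1:
        raise ValueError("need at least one backend")
    return [Fraction(1, n - i) for i in range(n)]


def effective_share(n: int) -> list[Fraction]:
    """Overall share of traffic each backend receives under that rule chain."""
    shares = []
    reach = Fraction(1)  # probability that evaluation reaches the current rule
    for p in rule_probabilities(n):
        shares.append(reach * p)
        reach *= 1 - p
    return shares


print(rule_probabilities(3))  # per-rule probabilities: 1/3, 1/2, 1
print(effective_share(3))     # every backend ends up with 1/3 of the traffic
```

Although the per-rule probabilities differ, the overall shares come out equal, which is why the selection is uniform on average rather than strictly round-robin.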

IPVS (IP Virtual Server) mode

IPVS mode balances load by using the IPVS capability of the Linux kernel. Compared with iptables mode, it offers better scalability and performance and supports several load-balancing algorithms. IPVS is often preferred for large-scale installations because it handles large numbers of Services and high traffic volumes efficiently.
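
Two of the scheduling strategies mentioned in this article, round-robin and least connections, can be sketched in a few lines. These classes are illustrative stand-ins written for this article, not the kernel's IPVS code; `RoundRobin`, `LeastConnection`, and the backend names are hypothetical.

```python
from __future__ import annotations

from itertools import cycle


class RoundRobin:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("need at least one backend")
        self._rotation = cycle(list(backends))

    def pick(self) -> str:
        return next(self._rotation)


class LeastConnection:
    """Pick the backend with the fewest active connections (ties: first listed)."""

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("need at least one backend")
        self.active = {backend: 0 for backend in backends}

    def pick(self) -> str:
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1  # a new connection is now open on this backend
        return backend

    def release(self, backend: str) -> None:
        """Record that a connection to this backend has closed."""
        self.active[backend] -= 1


rr = RoundRobin(["pod-a", "pod-b", "pod-c"])
rr_order = [rr.pick() for _ in range(4)]
print(rr_order)

lc = LeastConnection(["pod-a", "pod-b"])
first, second = lc.pick(), lc.pick()
lc.release("pod-b")  # pod-b finishes its connection first
third = lc.pick()    # so pod-b is the least loaded backend again
print(first, second, third)
```

Round-robin ignores how busy a backend is, whereas least connections adapts when some connections last much longer than others.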

Note: By default, Kube-proxy serves its metrics and status endpoints on port 10249, bound to localhost on the node. You can query these endpoints for information about Kube-proxy.

- To check which mode Kube-proxy is running in, query the /proxyMode endpoint.

- First establish an SSH connection to one of the cluster’s nodes. After connecting, execute this command:

curl -v localhost:10249/proxyMode
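
The same check can be scripted. The sketch below assumes, as in the command above, that the endpoint is reachable at `localhost:10249` on the node and that it answers with the mode name as plain text; the helper names are hypothetical, and only the Python standard library is used.

```python
from urllib.request import urlopen


def proxy_mode_url(host: str = "localhost", port: int = 10249) -> str:
    """Build the URL of the /proxyMode endpoint described above."""
    return f"http://{host}:{port}/proxyMode"


def parse_proxy_mode(body: bytes) -> str:
    """Decode the response body and strip surrounding whitespace."""
    return body.decode("utf-8").strip()


def fetch_proxy_mode(host: str = "localhost", port: int = 10249, timeout: float = 2.0) -> str:
    """Query the endpoint; this only works where the port is reachable."""
    with urlopen(proxy_mode_url(host, port), timeout=timeout) as response:
        return parse_proxy_mode(response.read())


# On a cluster node you would call: print(fetch_proxy_mode())
print(proxy_mode_url())
```

Because the endpoint is bound to localhost by default, the script has to run on the node itself, just like the curl command.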

When operating Kube-proxy, keep the following practices in mind:

- Choose the Kube-proxy mode (iptables, IPVS, or userspace) that fits the size of your cluster and its traffic patterns.

- Monitor the network traffic and resource usage of Kube-proxy to spot possible bottlenecks.

- Review and update Kube-proxy settings periodically so that they stay in line with the needs of your cluster.

- Reduce the amount of unnecessary traffic that Kube-proxy handles by implementing network controls and security measures.

- In high-traffic environments, consider offloading traffic from Kube-proxy by using caching or CDN (Content Delivery Network) services.

Kubernetes Kube-proxy – FAQs

In A Kubernetes Cluster, Is It Possible To Deactivate Or Circumvent Kube-proxy?

In certain circumstances it is possible to disable Kube-proxy or bypass its features. Doing so may require manual configuration, however, and can affect the cluster’s networking and load balancing capabilities.

In A Kubernetes Cluster, How Is Network Policy And Security Managed By Kube-proxy?

Kube-proxy does not enforce network policies or security measures inside the cluster; it is mainly responsible for load balancing and network routing. It can be used in combination with other plugins and technologies, such as network policy controllers and security solutions, to improve security and enforce network policies.

What Effect Does Kube-proxy Have On Scalability And Performance In Large-scale Kubernetes Deployments?

Kube-proxy handles networking duties well in smaller clusters, but larger deployments with many Services and significant traffic may run into scalability and performance limits. Depending on their use case, organisations may need to tune their Kube-proxy configuration (for example, by switching modes) or evaluate other networking options in order to maximise performance and scalability.
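
One commonly cited reason for this is that a long, sequentially evaluated rule list costs more per lookup as the number of Services grows, whereas a hash-based table does not. The sketch below illustrates only that difference by counting comparisons; it is a simplification under that assumption, not a benchmark of Kube-proxy, and the function names and addresses are hypothetical.

```python
from __future__ import annotations


def linear_chain_lookup(
    rules: list[tuple[str, str]], dst: str
) -> tuple[str | None, int]:
    """Scan (service_ip, backend) rules in order; return (backend, comparisons)."""
    for checked, (service_ip, backend) in enumerate(rules, start=1):
        if service_ip == dst:
            return backend, checked
    return None, len(rules)


def hashed_lookup(table: dict[str, str], dst: str) -> tuple[str | None, int]:
    """Look the Service up in a hash table; counted as a single probe."""
    return table.get(dst), 1


# 1000 made-up Services, each mapped to one made-up backend.
services = [f"10.96.{i // 256}.{i % 256}" for i in range(1000)]
rules = [(ip, f"backend-{i}") for i, ip in enumerate(services)]
table = dict(rules)

worst_case = services[-1]  # the Service whose rule sits at the end of the list
print(linear_chain_lookup(rules, worst_case))
print(hashed_lookup(table, worst_case))
```

In this toy model the sequential scan needs as many comparisons as there are Services in the worst case, while the hashed lookup stays at one probe regardless of size.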

What Is The Relationship Between Kube-proxy And Other Networking Elements Or Kubernetes Plugins, Such As CNI (Container Network Interface) Plugins?

Kube-proxy works alongside other networking components and Kubernetes plugins, such as CNI plugins, to handle networking inside the cluster. Kube-proxy is responsible for Service load balancing and traffic routing, while CNI plugins handle tasks like network provisioning and interface setup for pods.
