Ridge Classifier

Last Updated: 19 Oct, 2023

Supervised Learning is the type of Machine Learning that uses labelled data to train the model. Both Regression and Classification belong to the category of Supervised Learning.

- Regression: This is used to predict a continuous value from one or more features. The features act as the independent variables, and the predicted value is the dependent variable.

- Classification: This is used to assign each data point to one of a set of discrete categories (classes) based on its features.

Ridge Regression

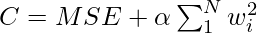

Ridge Regression is a form of Linear Regression that adds a regularization term to the cost function. This term, known as L2 regularization, is the sum of the squared coefficients multiplied by a parameter alpha, and its purpose is to reduce overfitting. Overfitting occurs when a model performs very well on training data but poorly on test or unseen data. By penalizing large coefficient values, regularization keeps the model simpler and less sensitive to noise in the training set. The Ridge Regression cost is therefore the sum of squared residuals plus alpha times the sum of squared coefficients.
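Written out, the Ridge Regression cost for n training samples (feature vectors x_i, targets y_i), p coefficients w_j, and regularization strength α is shown below; as in scikit-learn's Ridge, the intercept is not penalized. Setting the gradient to zero gives the closed-form minimizer on the second line, stated here for the case without an intercept (X is the n × p feature matrix and I the p × p identity matrix).

```latex
\begin{aligned}
J(\mathbf{w}) &= \sum_{i=1}^{n} \left( y_i - \mathbf{w}^\top \mathbf{x}_i \right)^2 + \alpha \sum_{j=1}^{p} w_j^2
              = \lVert \mathbf{y} - X\mathbf{w} \rVert_2^2 + \alpha \lVert \mathbf{w} \rVert_2^2 \\
\hat{\mathbf{w}} &= \left( X^\top X + \alpha I \right)^{-1} X^\top \mathbf{y}
\end{aligned}
```

With α = 0 this reduces to ordinary least squares. For any α > 0 the matrix X^T X + αI is positive definite, so the solution exists and is unique even when features are perfectly collinear.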

Ridge Classifier

The Ridge Classifier is a machine learning algorithm for binary and multi-class classification that applies the ideas of Ridge Regression to classification. Like Ridge Regression, it uses L2 regularization: a penalty term, controlled by the hyperparameter alpha, that discourages large coefficients and so helps reduce overfitting by balancing model complexity against fit to the training data. Its distinguishing feature is that it recasts classification as a regression problem: the class labels are converted to the values -1 and 1, and a Ridge Regression model is fitted to these targets. For a binary problem, the predicted class is determined by the sign of the model's output.

Because it is fitted like Ridge Regression, the Ridge Classifier minimizes a squared-error loss plus the L2 penalty, and the alpha parameter sets how strongly that penalty shrinks the model coefficients. For multi-class problems, one regression output is fitted per class (each class against the rest), and a sample is assigned to the class with the highest output; the resulting linear decision boundaries separate the classes. In short, the Ridge Classifier combines a regression-style fitting procedure with a classification decision rule, giving a simple, regularized linear classifier that is often fast to train.
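The label-encoding idea can be checked directly. The sketch below uses synthetic data from make_classification (an illustrative assumption, not the article's dataset), fits scikit-learn's RidgeClassifier, and separately fits a plain Ridge regression on the same labels recoded as -1/+1. If the description above holds, both models should have the same coefficients and make the same predictions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import Ridge, RidgeClassifier

# Synthetic binary data (an illustrative assumption, not the article's dataset)
X, y = make_classification(
    n_samples=200, n_features=5, n_informative=3, n_redundant=0, random_state=0
)

# RidgeClassifier handles the {0, 1} labels internally
clf = RidgeClassifier(alpha=1.0).fit(X, y)

# Manual version: recode the labels as -1/+1 and fit an ordinary Ridge regression
y_pm = np.where(y == clf.classes_[1], 1.0, -1.0)
reg = Ridge(alpha=1.0).fit(X, y_pm)

# Same coefficients and intercept, up to floating-point tolerance
same_coef = np.allclose(clf.coef_.ravel(), reg.coef_)
same_intercept = np.allclose(clf.intercept_, reg.intercept_)

# Predicted class = positive class wherever the regression output is > 0
manual_pred = np.where(reg.predict(X) > 0, clf.classes_[1], clf.classes_[0])
same_pred = np.array_equal(clf.predict(X), manual_pred)

print(same_coef, same_intercept, same_pred)
```

Comparing with np.allclose rather than exact equality allows for small floating-point differences between the two code paths.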

Parameters of Ridge Classifier

Because the Ridge Classifier is built on Ridge Regression, it exposes several parameters that control regularization and how the model is fitted. The primary parameters, as named in scikit-learn's RidgeClassifier, are:

- alpha (float, default=1.0): The regularization strength, which must be non-negative. It controls how much L2 (Ridge) regularization is applied to the model. A larger alpha strengthens the regularization and lowers the risk of overfitting, but makes the model less flexible. Tune this hyperparameter to balance regularization against fitting the training data.

- fit_intercept (bool, default=True): Determines whether an intercept (bias) term is estimated during training. If True, the model includes an intercept. If False, no intercept is fitted, so the decision boundary passes through the origin of the feature space; in that case the data is expected to be centered already.

- copy_X (bool, default=True): If True, the input data X is copied before fitting, which uses more memory but guarantees that the original data is not modified. If False, X may be overwritten during fitting.

- max_iter (int, default=None): The maximum number of iterations for iterative solvers such as 'sparse_cg', 'lsqr', and 'sag'. Direct solvers such as 'cholesky' and 'svd' do not iterate and ignore this setting. When it is None, the solver's own default limit is used. An iterative solver stops when it converges or when this limit is reached, whichever comes first.

- tol (float, default=0.0001): The convergence tolerance, which controls the precision of the solution. Its exact meaning depends on the solver (for example, 'sag' stops when the relative change in the coefficients falls below tol), and it has no effect on the direct solvers 'svd' and 'cholesky'.

- class_weight (dict or 'balanced', default=None): Assigns different weights to the classes. You can pass a dictionary that maps each class label to its weight. The 'balanced' option, useful for imbalanced datasets, sets the weights automatically, inversely proportional to the class frequencies: n_samples / (n_classes * n_samples_in_class).

- solver (str, default='auto'): The optimization method. Possible values are 'auto', 'svd', 'cholesky', 'lsqr', 'sparse_cg', 'sag', 'saga', and 'lbfgs'. With 'auto', the solver is chosen automatically based on the data (for example, dense versus sparse input). 'auto' is usually a good choice, but you can set a specific solver if needed.

- positive (bool, default=False): When set to True, forces all coefficients (weights) to be non-negative, which is useful in applications where negative weights make no sense. Only the 'lbfgs' solver supports this option ('auto' selects it automatically).

- random_state (int, RandomState instance, or None, default=None): Controls the random number generator used to shuffle the data when the solver is 'sag' or 'saga'. Setting it to a fixed integer makes results reproducible across runs.

Implementation of Ridge Classifier

Here is an implementation of a Ridge Classifier on the Breast Cancer dataset using scikit-learn:

Importing Libraries

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.linear_model import RidgeClassifier
from sklearn.metrics import accuracy_score, classification_report
```

First, import the required modules from scikit-learn (sklearn), a widely used machine learning library that provides many algorithms as well as built-in datasets such as Iris and Breast Cancer. Here we import the Breast Cancer dataset loader, the train/test split helper, the RidgeClassifier model, and two evaluation metrics. The Breast Cancer dataset has binary labels: malignant tumors are labeled 0 and benign tumors are labeled 1.

Loading Dataset

```python
data = load_breast_cancer()
X, y = data.data, data.target
```

This code loads the Breast Cancer dataset from scikit-learn and stores the feature matrix in X and the target labels in y.
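Before training, it is worth confirming the size of the data and how the labels are encoded. This short check uses only the loader imported above and prints the feature-matrix shape, the class names in label order, and the number of samples per class.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer

data = load_breast_cancer()
X, y = data.data, data.target

print(X.shape)            # (569, 30): 569 samples, 30 features
print(data.target_names)  # ['malignant' 'benign']: label 0 = malignant, 1 = benign
print(np.bincount(y))     # [212 357]: samples per class
```

Because target_names is listed in label order, it is the reliable way to map the numeric labels back to class names.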

Splitting the Data

```python
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)
```

The variables X_train and y_train hold the features and labels of the training data, and X_test and y_test hold the features and labels of the test data. Setting test_size=0.2 reserves 20% of the samples for testing, and random_state=42 fixes the random seed so that the split is reproducible.
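The effect of these two arguments can be verified directly. This sketch repeats the split with the same arguments, prints the resulting shapes (scikit-learn rounds the test fraction up, so 20% of 569 samples gives 114 test samples), and shows that a second call with the same random_state returns an identical split.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split

X, y = load_breast_cancer(return_X_y=True)

# Same arguments as in the step above
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)
print(X_train.shape, X_test.shape)  # (455, 30) (114, 30)

# Repeating the call with the same random_state yields the identical split
X_train2, X_test2, y_train2, y_test2 = train_test_split(
    X, y, test_size=0.2, random_state=42)
print(np.array_equal(X_test, X_test2))  # True
```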

Defining Parameters

```python
alpha = 1.0
max_iter = 1000
solver = 'auto'
tol = 1e-3
```

This code snippet defines the hyperparameters that will be passed to the Ridge Classifier:

- ‘alpha’: Sets the degree of regularization. Higher values give stronger regularization, which helps avoid overfitting; lower values let the model fit the training data more closely. The value can be tuned to suit the dataset.

- ‘max_iter’: The maximum number of iterations the solver may run before stopping. If an iterative solver fails to converge within this limit, it may be necessary to raise this value. Direct solvers such as ‘cholesky’ and ‘svd’ ignore it.

- ‘solver’: Specifies the optimization method. If ‘auto’ is selected, scikit-learn chooses the solver based on the data; for dense input such as this dataset, this is typically the direct ‘cholesky’ solver.

- ‘tol’: The tolerance for the stopping criterion. An iterative solver stops once its convergence measure (which depends on the solver) falls below this value. A smaller ‘tol’ gives a more precise solution but may require more iterations.
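Since alpha is the setting most often tuned, a quick way to see its effect is to watch the size of the learned coefficients. This sketch uses synthetic data from make_classification (an assumption for illustration) and prints the Euclidean norm of coef_ for increasing alpha values. With L2 regularization, this norm should shrink as alpha grows.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import RidgeClassifier

# Synthetic data (illustrative assumption)
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=4, n_redundant=0, random_state=0
)

alphas = [0.1, 1.0, 10.0, 100.0, 1000.0]
norms = []
for a in alphas:
    clf = RidgeClassifier(alpha=a).fit(X, y)
    norms.append(np.linalg.norm(clf.coef_))  # size of the weight vector
    print(f"alpha={a:>7}: ||w|| = {norms[-1]:.4f}")

# Larger alpha -> stronger shrinkage -> smaller coefficient norm
print(all(n1 > n2 for n1, n2 in zip(norms, norms[1:])))  # True
```

In practice alpha is usually chosen by cross-validation rather than by inspecting coefficients; this loop only shows the direction of its effect.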

Training the Model

```python
ridge_classifier = RidgeClassifier(
    alpha=alpha, max_iter=max_iter, solver=solver, tol=tol)
ridge_classifier.fit(X_train, y_train)
```

This code instantiates a Ridge Classifier with the given hyperparameters (alpha, max_iter, solver, and tol) and trains it on the training set (X_train and y_train). During training, the model learns a linear decision function that separates the classes, subject to the regularization strength and convergence settings defined by the hyperparameters.
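After fitting, the model's learned parameters can be inspected. The sketch below recreates the same split and model, prints the fitted classes and the coefficient shape (one weight per feature for a binary problem), and checks that predict() is simply the sign rule applied to decision_function(): scores above zero map to classes_[1], all others to classes_[0].

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import RidgeClassifier
from sklearn.model_selection import train_test_split

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)

ridge_classifier = RidgeClassifier(alpha=1.0, max_iter=1000, solver="auto", tol=1e-3)
ridge_classifier.fit(X_train, y_train)

print(ridge_classifier.classes_)     # [0 1]
print(ridge_classifier.coef_.shape)  # (1, 30): one weight per feature

# Binary decision rule: positive score -> classes_[1], otherwise classes_[0]
scores = ridge_classifier.decision_function(X_test)
manual = np.where(scores > 0, ridge_classifier.classes_[1], ridge_classifier.classes_[0])
print(np.array_equal(manual, ridge_classifier.predict(X_test)))  # True
```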

Prediction and Evaluation

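```python
# Predict labels for the test set
y_pred = ridge_classifier.predict(X_test)

# Compare the predictions with the true test labels
accuracy = accuracy_score(y_test, y_pred)
print("Accuracy:", accuracy)
```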

Output:

Accuracy: 0.956140350877193

The model’s performance is evaluated with the accuracy_score function, which compares the true labels of the test data (y_test) with the labels predicted by the model (y_pred). The resulting accuracy is the fraction of test samples that were classified correctly.
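Accuracy is simple enough to compute by hand, which makes it clear what the number means. This sketch recreates the split and model from the previous steps, counts the correct predictions, and confirms that the result matches both accuracy_score and the classifier's built-in score() method.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import RidgeClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Recreate the split and model from the steps above
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)
clf = RidgeClassifier(alpha=1.0, max_iter=1000, solver="auto", tol=1e-3)
clf.fit(X_train, y_train)
y_pred = clf.predict(X_test)

# Accuracy = correct predictions / total test samples
n_correct = int(np.sum(y_pred == y_test))
manual_accuracy = n_correct / len(y_test)
print(f"{n_correct} of {len(y_test)} correct -> accuracy {manual_accuracy:.4f}")

# accuracy_score and the classifier's own score() method compute the same quantity
print(np.isclose(manual_accuracy, accuracy_score(y_test, y_pred)))  # True
print(np.isclose(manual_accuracy, clf.score(X_test, y_test)))       # True
```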

Classification Report

```python
print(classification_report(y_test, y_pred))
```

Output:

```
              precision    recall  f1-score   support

           0       0.97      0.91      0.94        43
           1       0.95      0.99      0.97        71

    accuracy                           0.96       114
   macro avg       0.96      0.95      0.95       114
weighted avg       0.96      0.96      0.96       114
```

The classification report gives a detailed summary of the model’s performance: precision, recall, and F1-score for each class (here, classes 0 and 1), plus the support, which is the number of test samples in each class. It also reports the overall accuracy on the test data, together with the macro average (the unweighted mean over classes) and the weighted average (the mean weighted by support) of each metric. Because precision and recall are computed from the true positives, false positives, and false negatives of each class, these metrics show how well the model identifies each class, not just how often it is right overall.
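The per-class numbers in the report come straight from the confusion matrix. This sketch recreates the model, unpacks the binary confusion matrix into TN, FP, FN and TP (treating class 1 as the positive class, which is scikit-learn's default for these metrics), computes precision, recall and F1 by hand, and checks them against scikit-learn's metric functions. It also confirms that the macro average is the plain mean of the per-class values.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import RidgeClassifier
from sklearn.metrics import confusion_matrix, f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)
clf = RidgeClassifier(alpha=1.0, max_iter=1000, solver="auto", tol=1e-3)
y_pred = clf.fit(X_train, y_train).predict(X_test)

# Rows = true class, columns = predicted class; ravel() gives TN, FP, FN, TP
tn, fp, fn, tp = confusion_matrix(y_test, y_pred).ravel()

precision_1 = tp / (tp + fp)  # of samples predicted as 1, fraction truly 1
recall_1 = tp / (tp + fn)     # of true class-1 samples, fraction found
f1_1 = 2 * precision_1 * recall_1 / (precision_1 + recall_1)

print(np.isclose(precision_1, precision_score(y_test, y_pred)))  # True
print(np.isclose(recall_1, recall_score(y_test, y_pred)))        # True
print(np.isclose(f1_1, f1_score(y_test, y_pred)))                # True

# Macro average = unweighted mean of the per-class values
per_class = precision_score(y_test, y_pred, average=None)
print(np.isclose(per_class.mean(), precision_score(y_test, y_pred, average="macro")))  # True

# "support" is the number of true samples in each class
print(np.bincount(y_test))
```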

Advantages of Ridge Classifier

The Ridge Classifier has several advantages:

- Regularization: The L2 regularization used by the Ridge Classifier penalizes large coefficients, which helps prevent overfitting. Keeping variance in check is especially helpful for datasets with many features.

- Multicollinearity Handling: It copes well with multicollinearity, the situation in which features are highly correlated. Instead of assigning large coefficients of opposite sign to correlated features, as unregularized least squares can, Ridge spreads the weight more evenly across them.

- Stable Results: Compared with unregularized linear models, the Ridge Classifier produces coefficients that change less when the training data changes slightly. This stability is valuable when working with noisy data.
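The multicollinearity point can be seen with two almost identical features. The sketch below builds such a pair on synthetic data (an assumption for illustration) and compares ordinary least squares with Ridge regression. Regression is used here because the Ridge Classifier fits this same penalized least-squares model to -1/+1 labels, so it inherits the same behavior.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, Ridge

rng = np.random.default_rng(0)
n = 200
x1 = rng.normal(size=n)
x2 = x1 + 0.01 * rng.normal(size=n)     # x2 is almost a copy of x1
X = np.column_stack([x1, x2])
y = x1 + x2 + 0.5 * rng.normal(size=n)  # true coefficients: 1 and 1

ols = LinearRegression().fit(X, y)
ridge = Ridge(alpha=10.0).fit(X, y)

print("OLS coefficients:  ", ols.coef_)    # unstable: can be far from [1, 1]
print("Ridge coefficients:", ridge.coef_)  # close to each other, roughly [1, 1]
```

The Ridge penalty barely affects the well-determined direction (x1 + x2) but strongly shrinks the poorly determined direction (x1 - x2), which is why the weight ends up shared between the two features.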

Disadvantages of Ridge Classifier

The Ridge Classifier also has some disadvantages:

- Bias-Variance Trade-off: Although the Ridge Classifier lowers the risk of overfitting, the penalty introduces bias. Finding the right balance between bias and variance can be difficult, and an excessively high alpha can cause underfitting.

- Computational Complexity: Training can be expensive for high-dimensional datasets, because the direct solvers must solve a linear system whose size grows with the number of features (or samples). For large-scale problems, this can slow training considerably.

- Not Ideal When Many Features Are Irrelevant: Ridge regularization shrinks coefficients toward zero but never sets them exactly to zero, so every feature stays in the model. For datasets with many irrelevant features, L1-regularized models (such as Lasso) or explicit feature selection may be more appropriate.
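The difference between L2 and L1 penalties shows up in how many coefficients end up exactly zero. This sketch uses synthetic data where only 3 of 20 features are informative (an assumption for illustration). It fits a RidgeClassifier and, for comparison, a Lasso regression on the same labels recoded as -1/+1. The Lasso model is a hypothetical L1 counterpart built the same way as the Ridge Classifier, not a scikit-learn classifier.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import Lasso, RidgeClassifier

# 20 features, only 3 informative; the other 17 are pure noise (illustrative assumption)
X, y = make_classification(
    n_samples=200, n_features=20, n_informative=3,
    n_redundant=0, n_repeated=0, random_state=0,
)

# L2 penalty: coefficients shrink but stay non-zero
ridge = RidgeClassifier(alpha=1.0).fit(X, y)

# Hypothetical L1 counterpart: Lasso regression on labels recoded as -1/+1
y_pm = np.where(y == 1, 1.0, -1.0)
lasso = Lasso(alpha=0.1).fit(X, y_pm)

print("non-zero Ridge coefficients:", np.count_nonzero(ridge.coef_))  # all 20
print("non-zero Lasso coefficients:", np.count_nonzero(lasso.coef_))
```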

Conclusion

In summary, the Ridge Classifier is a useful tool for classification. It is well suited to datasets with multicollinearity, where unregularized linear classifiers may struggle. Its L2 penalty reduces overfitting and helps the model generalize, and the regularization strength (alpha) lets users trade bias against variance to suit their data. Its ability to work with high-dimensional feature sets makes it a practical option in many real-world situations, and common applications include text classification, medical diagnosis, and image recognition. Overall, the Ridge Classifier is a dependable and adaptable classification algorithm whose regularization and adjustable parameters help it produce accurate results across a variety of domains.
