Machine learning is an effective tool for predicting numerical values, and regression is one of its key applications. In regression analysis, accurate evaluation is crucial for measuring the overall performance of predictive models. This is where scikit-learn, the popular Python machine learning library, comes in. Scikit-learn provides a complete set of regression metrics to evaluate the quality of regression models.

In this article, we will explore the basics of regression metrics in scikit-learn, discuss the steps needed to use them effectively, provide some examples, and show the resulting output for each metric.

Regression

Regression models are algorithms used to predict continuous numerical values based on input features. In scikit-learn, we can use numerous regression algorithms, such as Linear Regression, Decision Trees, Random Forests, and Support Vector Machines (SVM), among others.

Before learning about specific metrics, let’s familiarize ourselves with a few essential concepts related to regression metrics:

1. True Values and Predicted Values:

In regression, we have two sets of values to compare: the actual target values (true values) and the values predicted by our model (predicted values). The performance of the model is assessed by measuring how close these two sets are.

2. Evaluation Metrics:

Regression metrics are quantitative measures used to evaluate the quality of a regression model. Scikit-learn provides several metrics, each with its own strengths and limitations, to assess how well a model fits the data.
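The comparison between true and predicted values can be made concrete with a minimal sketch. The numbers below are illustrative only, and the per-point difference (often called a residual) is the raw material that each metric summarizes in its own way.

```python
import numpy as np

# Illustrative values, chosen only for this sketch
true_values = np.array([2.5, 3.7, 1.8, 4.0, 5.2])
predicted_values = np.array([2.1, 3.9, 1.7, 3.8, 5.0])

# Residual = true value - predicted value, one per data point
residuals = true_values - predicted_values

print("Residuals:", np.round(residuals, 2))
```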

Types of Regression Metrics

Some common regression metrics in scikit-learn, with examples:

- Mean Absolute Error (MAE)

- Mean Squared Error (MSE)

- R-squared (R²) Score

- Root Mean Squared Error (RMSE)

Mean Absolute Error (MAE)

In statistics and machine learning, the Mean Absolute Error (MAE) is a frequently used metric. It measures the average absolute difference between a dataset’s actual values and predicted values.

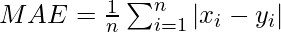

Mathematical Formula

The formula to calculate MAE for a dataset with “n” data points is:

$$\text{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| x_i - y_i \right|$$

Where:

- $x_i$ represents the actual or observed value for the i-th data point.

- $y_i$ represents the predicted value for the i-th data point.

Example:

Python

from sklearn.metrics import mean_absolute_error

true_values = [2.5, 3.7, 1.8, 4.0, 5.2]

predicted_values = [2.1, 3.9, 1.7, 3.8, 5.0]

mae = mean_absolute_error(true_values, predicted_values)

print("Mean Absolute Error:", mae)


Output:

Mean Absolute Error: 0.22000000000000003
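As a cross-check, the same value can be computed by hand. The sketch below assumes NumPy is available and reuses the example values. It takes the mean of the absolute differences and compares the result with scikit-learn's `mean_absolute_error`.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error

true_values = np.array([2.5, 3.7, 1.8, 4.0, 5.2])
predicted_values = np.array([2.1, 3.9, 1.7, 3.8, 5.0])

# MAE by hand: mean of the absolute differences
mae_manual = np.mean(np.abs(true_values - predicted_values))
mae_sklearn = mean_absolute_error(true_values, predicted_values)

print("Manual MAE:", mae_manual)
print("scikit-learn MAE:", mae_sklearn)
```

Both values should agree up to floating-point rounding.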

Mean Squared Error (MSE)

The Mean Squared Error (MSE) is a popular metric in statistics and machine learning. It measures the average of the squared differences between a dataset’s actual values and predicted values. MSE is frequently used in regression problems to assess how well predictive models work.

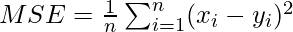

Mathematical Formula

For a dataset containing ‘n’ data points, the MSE calculation formula is:

$$\text{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left( x_i - y_i \right)^2$$

Where:

- $x_i$ represents the actual or observed value for the i-th data point.

- $y_i$ represents the predicted value for the i-th data point.

Example:

Python

from sklearn.metrics import mean_squared_error

true_values = [2.5, 3.7, 1.8, 4.0, 5.2]

predicted_values = [2.1, 3.9, 1.7, 3.8, 5.0]

mse = mean_squared_error(true_values, predicted_values)

print("Mean Squared Error:", mse)


Output:

Mean Squared Error: 0.057999999999999996
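Because the errors are squared, MSE reacts much more strongly to a single large error than MAE does. The sketch below computes MSE by hand and then changes one prediction to a hypothetical bad value (an assumption made only for illustration) to compare how much each metric grows.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error

true_values = np.array([2.5, 3.7, 1.8, 4.0, 5.2])
predicted_values = np.array([2.1, 3.9, 1.7, 3.8, 5.0])

# MSE by hand: mean of the squared differences
mse_manual = np.mean((true_values - predicted_values) ** 2)

# Hypothetical variant: the last prediction is now off by 3.0
with_outlier = predicted_values.copy()
with_outlier[-1] = 8.2

mae_before = mean_absolute_error(true_values, predicted_values)
mae_after = mean_absolute_error(true_values, with_outlier)
mse_before = mean_squared_error(true_values, predicted_values)
mse_after = mean_squared_error(true_values, with_outlier)

print("Manual MSE:", mse_manual)
print("MAE grows by a factor of", round(mae_after / mae_before, 1))
print("MSE grows by a factor of", round(mse_after / mse_before, 1))
```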

R-squared (R²) Score

The R-squared (R²) score, also referred to as the coefficient of determination, is a statistical metric frequently used to assess the goodness of fit of a regression model. It quantifies the proportion of the variance in the dependent variable that is explained by the model’s independent variables. R² is a useful statistic for evaluating the overall effectiveness and explanatory power of a regression model.

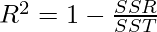

Mathematical Formula

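The formula to calculate the R-squared score is as follows:

$$R^2 = 1 - \frac{\text{SSR}}{\text{SST}}$$

Where:

- $R^2$ is the R-squared score.

- SSR represents the sum of squared residuals between the predicted values and actual values, $\sum_{i=1}^{n} (x_i - y_i)^2$.

- SST represents the total sum of squares, $\sum_{i=1}^{n} (x_i - \bar{x})^2$, which measures the total variation of the actual values around their mean $\bar{x}$.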

Example:

Python

from sklearn.metrics import r2_score

true_values = [2.5, 3.7, 1.8, 4.0, 5.2]

predicted_values = [2.1, 3.9, 1.7, 3.8, 5.0]

r2 = r2_score(true_values, predicted_values)

print("R-squared (R²) Score:", r2)


Output:

R-squared (R²) Score: 0.9588769143505389
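The score can also be reproduced from SSR and SST directly. The sketch below assumes NumPy and reuses the example values. It also scores a baseline that always predicts the mean of the true values, which should give an R² of about 0 and shows what the metric is measured against.

```python
import numpy as np
from sklearn.metrics import r2_score

true_values = np.array([2.5, 3.7, 1.8, 4.0, 5.2])
predicted_values = np.array([2.1, 3.9, 1.7, 3.8, 5.0])

# Sum of squared residuals and total sum of squares
ssr = np.sum((true_values - predicted_values) ** 2)
sst = np.sum((true_values - true_values.mean()) ** 2)
r2_manual = 1 - ssr / sst

# Baseline: predict the mean of the true values for every point
mean_baseline = np.full_like(true_values, true_values.mean())
r2_baseline = r2_score(true_values, mean_baseline)

print("Manual R²:", r2_manual)
print("R² of the mean baseline:", r2_baseline)
```

A model that does worse than this baseline gets a negative R².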

Root Mean Squared Error (RMSE)

RMSE stands for Root Mean Squared Error. It is a commonly used metric in regression analysis and machine learning to measure the accuracy or goodness of fit of a predictive model, especially when the predictions are continuous numerical values.

The RMSE quantifies how well the predicted values from a model align with the actual observed values in the dataset. Here’s how it works:

- Calculate the Squared Differences: For each data point, subtract the predicted value from the actual (observed) value, square the result, and sum up these squared differences.

- Compute the Mean: Divide the sum of squared differences by the number of data points to get the mean squared error (MSE).

- Take the Square Root: To obtain the RMSE, simply take the square root of the MSE.
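The three steps above can be sketched one at a time with NumPy. The four data points are made up for illustration, so that each intermediate value is easy to check by hand.

```python
import numpy as np

# Illustrative values, chosen only for this sketch
actual = np.array([3.0, 5.0, 2.0, 7.0])
predicted = np.array([2.0, 5.0, 4.0, 8.0])

# Step 1: squared differences, then their sum
squared_differences = (actual - predicted) ** 2   # [1, 0, 4, 1]
sum_of_squares = squared_differences.sum()        # 6.0

# Step 2: divide by the number of data points to get the MSE
mse = sum_of_squares / len(actual)                # 1.5

# Step 3: take the square root to get the RMSE
rmse = np.sqrt(mse)

print("MSE:", mse)
print("RMSE:", rmse)
```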

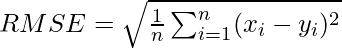

Mathematical Formula

The formula for RMSE for a dataset with ‘n’ data points is as follows:

$$\text{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( x_i - y_i \right)^2}$$

Where:

- RMSE is the Root Mean Squared Error.

- $x_i$ represents the actual or observed value for the i-th data point.

- $y_i$ represents the predicted value for the i-th data point.

Example:

Python

from sklearn.metrics import mean_squared_error

import numpy as np

true_prices = np.array([250000, 300000, 200000, 400000, 350000])

predicted_prices = np.array([240000, 310000, 210000, 380000, 340000])

rmse = np.sqrt(mean_squared_error(true_prices, predicted_prices))

print("Root Mean Squared Error (RMSE):", rmse)


Output:

Root Mean Squared Error (RMSE): 12649.110640673518

NOTE:

When using regression metrics in scikit-learn, we generally aim to obtain a single numerical value for each metric.
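One case where a metric is not a single number is a multi-output problem, where each sample has several target values. The sketch below uses a hypothetical two-target dataset and the `multioutput` parameter of `mean_absolute_error`. By default the per-target errors are averaged into one value, while `multioutput="raw_values"` returns one error per target.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error

# Hypothetical two-target problem: each row holds two target values
y_true = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
y_pred = np.array([[1.5, 12.0], [2.0, 18.0], [2.5, 33.0]])

# Default: per-target errors are averaged into a single number
overall = mean_absolute_error(y_true, y_pred)

# raw_values: one MAE per target column
per_target = mean_absolute_error(y_true, y_pred, multioutput="raw_values")

print("Overall MAE:", overall)
print("Per-target MAE:", per_target)
```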

Using Regression Metrics on California House Prices Dataset

Here are the steps for applying regression metrics to our model, and for a better understanding, we’ve illustrated them using the example of predicting house prices.

Import Libraries and Load the Dataset

Python

import pandas as pd

import numpy as np

from sklearn.datasets import fetch_california_housing

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score


We import the necessary libraries. The dataset itself can come from our own source or from the scikit-learn library.

Loading the Dataset

Python

data = fetch_california_housing()

df = pd.DataFrame(data.data, columns=data.feature_names)

df['target'] = data.target


The code loads the California Housing Prices dataset using scikit-learn’s fetch_california_housing function, builds a DataFrame (df) from the dataset’s features, and then adds the target variable to the DataFrame as a ‘target’ column.

Feature-Target Separation and Train-Test Split

Python

X = df.drop(columns=['target'])

y = df['target']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)


The code divides the dataset into features (X) and the target variable (y): X is the DataFrame with the ‘target’ column dropped, and y is the ‘target’ column itself. With a fixed random seed (random_state=42) for repeatability, it then splits the data into training and testing sets, using 80% of the data for training (X_train and y_train) and 20% for testing (X_test and y_test).
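To see the 80/20 split without downloading the housing data, here is a minimal sketch on a synthetic stand-in DataFrame. The column names `feature_a` and `feature_b` and the 100-row size are assumptions made for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the housing DataFrame (hypothetical columns)
rng = np.random.default_rng(0)
df = pd.DataFrame({
    "feature_a": rng.normal(size=100),
    "feature_b": rng.normal(size=100),
})
df["target"] = 2 * df["feature_a"] - df["feature_b"]

X = df.drop(columns=["target"])
y = df["target"]

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# 100 rows split 80/20; features and target keep matching row indices
print(X_train.shape, X_test.shape)
print(X_train.index.equals(y_train.index))
```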

Create and Train the Regression Model

Python

model = LinearRegression()

model.fit(X_train, y_train)


This code builds a linear regression model (model) and trains it on the training data (X_train and y_train) to learn a linear relationship between the features and the target variable.
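What "learning a linear relationship" means can be checked on data where the relationship is known in advance. In the sketch below, the coefficients 3.0 and -2.0 and the intercept 5.0 are hypothetical values chosen for illustration. Since the synthetic data contains no noise, the fitted model should recover them almost exactly.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic data following an exact, known linear relationship
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(50, 2))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 5.0

model = LinearRegression()
model.fit(X, y)

# The fitted parameters should match the ones used to build y
print("Coefficients:", model.coef_)
print("Intercept:", model.intercept_)
```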

Make Predictions

Python

y_pred = model.predict(X_test)


The code uses the trained Linear Regression model (model) to make predictions (y_pred) on the test set (X_test), estimating the target variable from the relationship it learned between the features and the target.

Calculate Evaluation Metrics

Python

mae = mean_absolute_error(y_test, y_pred)

mse = mean_squared_error(y_test, y_pred)

r_squared = r2_score(y_test, y_pred)

rmse = np.sqrt(mse)

print("Mean Absolute Error (MAE):", mae)

print("Mean Squared Error (MSE):", mse)

print("R-squared (R²):", r_squared)

print("Root Mean Squared Error (RMSE):", rmse)


Output:

Mean Absolute Error (MAE): 0.5332001304956553

Mean Squared Error (MSE): 0.5558915986952444

R-squared (R²): 0.5757877060324508

Root Mean Squared Error (RMSE): 0.7455813830127764

The code computes four regression evaluation metrics, Mean Absolute Error (MAE), Mean Squared Error (MSE), R-squared (R²), and Root Mean Squared Error (RMSE), based on the predicted values (y_pred) and the actual values from the test set (y_test). Printing these metrics shows how well the model predicts the target variable, in terms of both its accuracy and its goodness of fit.
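When the same four metrics are needed for several models, it can be convenient to wrap them in a small function. `regression_report` below is a hypothetical helper written for this sketch, not part of scikit-learn. It is demonstrated on the small example values used earlier rather than on the housing data.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score


def regression_report(y_true, y_pred):
    """Return MAE, MSE, RMSE and R² in one dictionary (hypothetical helper)."""
    mse = mean_squared_error(y_true, y_pred)
    return {
        "MAE": mean_absolute_error(y_true, y_pred),
        "MSE": mse,
        "RMSE": float(np.sqrt(mse)),
        "R2": r2_score(y_true, y_pred),
    }


report = regression_report(
    [2.5, 3.7, 1.8, 4.0, 5.2],
    [2.1, 3.9, 1.7, 3.8, 5.0],
)
for name, value in report.items():
    print(f"{name}: {value:.4f}")
```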

Understanding the output:

1. Mean Absolute Error (MAE): 0.5332

- The target in this dataset is the median house value expressed in units of $100,000, so an MAE of 0.5332 means that, on average, the model’s predictions are about $53,320 away from the true values.

2. Mean Squared Error (MSE): 0.5559

- An MSE of 0.5559 is the average of the squared prediction errors. Because the errors are squared, it is expressed in squared target units and is harder to interpret directly than MAE or RMSE.

3. R-squared (R²): 0.5758

- An R² of 0.5758 indicates that the model can explain approximately 57.58% of the variance in house prices.

4. Root Mean Squared Error (RMSE): 0.7456

- An RMSE of 0.7456 is in the same units as the target (units of $100,000), so the typical prediction error is about $74,558. Because squaring weights large errors more heavily, RMSE is always at least as large as MAE.
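Since the California housing target is expressed in units of $100,000, MAE and RMSE can be converted to dollars by a simple multiplication. The sketch below copies the two metric values from the output above. The constant name `UNIT_IN_DOLLARS` is an assumption made for readability.

```python
# Metric values copied from the output above
mae = 0.5332001304956553
rmse = 0.7455813830127764

# The dataset's target is the median house value in units of $100,000
UNIT_IN_DOLLARS = 100_000

mae_dollars = mae * UNIT_IN_DOLLARS
rmse_dollars = rmse * UNIT_IN_DOLLARS

print(f"MAE  ≈ ${mae_dollars:,.0f}")
print(f"RMSE ≈ ${rmse_dollars:,.0f}")
```

MSE is left out on purpose: it is in squared units, so multiplying it by 100,000 would not give a dollar amount.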

Conclusion

In conclusion, understanding regression metrics in scikit-learn is important for anyone working with predictive models. These metrics allow us to evaluate the quality of our regression models and help us make sound decisions when comparing and improving them. In this article, we have covered the logic behind regression metrics, walked through the steps required to evaluate a regression model, and worked through examples. Whether we’re predicting house prices, stock market trends, or any other continuous numerical values, the process remains the same.
