Kubernetes Deployment vs Pod

Last Updated: 11 Mar, 2024

In modern software development, managing applications can feel like putting together a puzzle without the picture on the box. This is where Kubernetes steps in. But what is Kubernetes, and why should you care? Let's break it down into bite-sized pieces.

Basics of Kubernetes

Think of Kubernetes as the conductor of an orchestra. Instead of musicians, it orchestrates containers: lightweight, standalone, executable packages that bundle everything an application needs to run. Because a container carries its own dependencies, it runs the same way on your laptop as it does in the cloud.

Users expect today's web services to be available around the clock, while developers expect to ship new versions several times a day. Containerization packages software in a way that supports both goals, making applications easier to release and upgrade without downtime. Kubernetes helps ensure that your containerized applications run when and where you want, and that they can find the resources and services they need. Kubernetes is an open-source, production-ready platform that builds on Google's extensive experience running containers at scale.

What are a Kubernetes Deployment and a Pod?

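Kubernetes Deployment: Think of a Deployment as the blueprint for your application's desired state. It specifies how many instances (Pods) of your application should be running, which container image and resources each instance uses, and how updates and rollbacks are handled. Kubernetes continuously works to make the cluster match that blueprint, creating and managing the Pods through a ReplicaSet.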

Kubernetes Pod: A Pod is the smallest deployable unit in Kubernetes. It hosts one or more tightly coupled containers that share the same network namespace (IP address and ports) and can share storage volumes. Think of a Pod as a cozy apartment where your application's containers live together, share resources, and communicate with one another.
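The following minimal Python sketch makes the relationship concrete. It builds a bare Pod manifest and an equivalent Deployment manifest as plain dictionaries. The names (`web` and the `app: web` label), the `nginx:1.25` image, and the replica count of 3 are illustrative assumptions. Note that the Deployment does not list containers itself. Instead, it wraps the same Pod spec in `spec.template` and adds `replicas` and a `selector`. kubectl accepts JSON as well as YAML, so you can pipe the printed output to `kubectl apply -f -`.

```python
import json


def pod_spec(image: str) -> dict:
    """Container spec shared by both manifests (illustrative values)."""
    return {
        "containers": [
            {"name": "web", "image": image, "ports": [{"containerPort": 80}]}
        ]
    }


def pod_manifest(name: str, image: str) -> dict:
    """A bare Pod: a single object that nothing recreates if it disappears."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": pod_spec(image),
    }


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """A Deployment: a desired replica count plus a template for its Pods."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the template labels so the Deployment
            # (through its ReplicaSet) knows which Pods it owns.
            "selector": {"matchLabels": labels},
            "template": {"metadata": {"labels": labels}, "spec": pod_spec(image)},
        },
    }


if __name__ == "__main__":
    # Pipe this output to `kubectl apply -f -` to create the Deployment.
    print(json.dumps(deployment_manifest("web", "nginx:1.25", 3), indent=2))
```

If you delete one of the three Pods this Deployment creates, Kubernetes creates a replacement. If you delete a bare Pod built from `pod_manifest`, it is simply gone.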

Why are Kubernetes Deployments and Pods required?

Deployments and Pods exist because application management demands scalability, reliability, and efficiency. Kubernetes abstracts away the underlying infrastructure, so developers can focus on building and shipping their applications instead of worrying about exactly where and how they run. In practice, you declare what you want through Deployments and Pods, and Kubernetes handles scheduling, restarting, and scaling behind the scenes.

What is the difference between K8s Deployments and Pods?

| Feature | Deployments | Pods |
|---|---|---|
| Management | Manages ReplicaSets, which keep the desired Pods running | Basic building block; a bare Pod is created and managed directly |
| Scaling | Scales by changing the replica count | Cannot be scaled on its own; additional Pods must be created by hand |
| Self-healing | Automatically replaces Pods that fail or are deleted | A bare Pod is not replaced if it is deleted or its node fails (the kubelet can still restart its containers) |
| Updates | Supports rolling updates with a revision history | No built-in support for rolling updates |
| Rollbacks | Can roll back to a previous revision | No revision history; reverting means editing or re-creating the Pod |
| Configuration | Configured through a Pod template in the Deployment spec | Configured directly in the Pod manifest |
| High availability | Supports high availability by running multiple replicas through ReplicaSets | A single Pod is a single point of failure |
| Resources | Resource requests and limits are set in the Pod template and apply to every replica | Resource requests and limits are set per container in the Pod spec |
| Load balancing | Usually exposed through a Service that load-balances traffic across its Pods | A single Pod gets no load balancing on its own |
| Network | Each replica Pod has its own IP; a Service provides one stable IP for the group | Each Pod has its own IP address, which changes if the Pod is re-created |
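The self-healing and scaling rows both come from the controller pattern. A controller repeatedly compares the desired replica count with the Pods that actually exist and closes the gap. Below is a deliberately simplified Python model of that loop. It is not the real ReplicaSet controller code, and the `reconcile` function and `web-N` naming scheme are hypothetical. A bare Pod has no such loop watching it, which is why nothing replaces it.

```python
import itertools
from typing import List

# Hypothetical name generator for newly created Pods.
_pod_ids = itertools.count(1)


def reconcile(desired: int, running: List[str]) -> List[str]:
    """One pass of a simplified controller loop.

    Keeps existing Pods, creates new ones until the count matches
    `desired`, and drops surplus Pods from the end of the list.
    """
    if desired < 0:
        raise ValueError("desired replica count cannot be negative")
    pods = list(running)
    while len(pods) < desired:
        pods.append(f"web-{next(_pod_ids)}")  # create a replacement Pod
    del pods[desired:]  # scale down: remove any extra Pods
    return pods


if __name__ == "__main__":
    pods = reconcile(3, [])    # initial rollout: three replicas
    pods.pop(0)                # simulate a Pod being deleted or failing
    pods = reconcile(3, pods)  # the controller restores three replicas
    print(pods)
    pods = reconcile(5, pods)  # scaling is just a new desired count
    print(pods)
```

In this model, self-healing and scaling are the same operation: both come down to comparing the current state against the desired count.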

What is the Pod Lifecycle?

Pods follow a defined lifecycle. A Pod starts in the Pending phase and moves to Running once it is bound to a node and at least one of its primary containers has started. It then ends in either the Succeeded or Failed phase, depending on whether any container in the Pod terminated in failure. While a Pod is running, the kubelet can restart its containers to recover from some kinds of faults.

Kubernetes tracks the state of each container in a Pod (Waiting, Running, or Terminated). It uses the Pod's restartPolicy, along with any configured probes, to decide what action will restore the Pod's health.
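Here is a simplified Python sketch of how container states map to a Pod phase. It rests on two assumptions: the Pod is already bound to a node, and it uses `restartPolicy: Never`, so terminated containers stay terminated. The `Container` class and `pod_phase` function are hypothetical names for illustration. The real kubelet also handles the Unknown phase, restarts, and init containers.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Container:
    state: str                       # "waiting", "running" or "terminated"
    exit_code: Optional[int] = None  # only meaningful once terminated


def pod_phase(containers: List[Container]) -> str:
    """Simplified phase for a bound Pod with restartPolicy: Never."""
    if any(c.state == "waiting" for c in containers):
        return "Pending"  # at least one container is not set up yet
    if all(c.state == "terminated" for c in containers):
        # Any non-zero exit code means the Pod failed.
        failed = any(c.exit_code != 0 for c in containers)
        return "Failed" if failed else "Succeeded"
    return "Running"      # all containers created, at least one still running
```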

What is Scalability?

Scalability is the ability to match resources to demand. In Kubernetes, you can scale the number of Pod replicas, the resources given to each Pod, or the number of nodes in a cluster. You can also spread workloads across availability zones, or across several clusters in different regions. This keeps applications available when infrastructure fails in one location, adds geographic redundancy, and improves performance for users in many places.

There are three typical approaches to application scalability in Kubernetes environments:

- Horizontal Pod Autoscaler (HPA): Automatically adds or removes Pod replicas based on observed metrics such as CPU utilization.

- Vertical Pod Autoscaler (VPA): Automatically adjusts the CPU and memory requests (and limits) of your Pods.

- Cluster Autoscaler: Adds nodes when Pods cannot be scheduled because of insufficient resources, and removes underused nodes, based on the resources each Pod requests.
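The Horizontal Pod Autoscaler documentation describes its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below applies that formula. It skips scaling when the ratio is within a tolerance (0.1 is the documented default, passed here as a parameter) and clamps the result to minReplicas/maxReplicas bounds, where the defaults of 1 and 10 are assumptions. It ignores details the real controller handles, such as Pods that are not ready yet and scale-down stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA calculation:
    desired = ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # within tolerance: leave as is
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (minReplicas / maxReplicas).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 Pods averaging 90% CPU against a 60% target -> scale out.
    print(desired_replicas(3, 90, 60))
```

For example, three Pods averaging 90% CPU against a 60% target give ceil(3 × 1.5) = 5 replicas.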

What are K8s Rolling Updates and Rollbacks?

- Kubernetes Rolling Updates gradually replace older application instances with newer ones, so the application stays available throughout. Rollbacks let you revert to a previous version if a deployment causes problems, keeping the system reliable.

- In simpler terms, think of Kubernetes Rolling Updates as renovating a house while still living in it. Instead of shutting down the entire house, you renovate one room at a time, so you always have a place to stay. Similarly, Rolling Updates let you update parts of your application without taking it offline, ensuring continuous service for your users.

- Now imagine you painted a room the wrong color or installed a faulty appliance during the renovation. Rollbacks in Kubernetes are like a magic undo button: if something goes wrong with an update, you can quickly return to the previous version and keep your application running smoothly.
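During a rolling update, a Deployment uses `maxSurge` and `maxUnavailable` to limit how far it may go above and below the desired replica count. Both default to 25%. The Kubernetes documentation states that the surge percentage is rounded up and the unavailable percentage is rounded down. The Python model below computes those bounds and steps through an update. It assumes new Pods become ready the instant they are created, which the real controller does not assume. The function names are hypothetical.

```python
import math
from typing import List, Tuple


def surge_and_unavailable(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> Tuple[int, int]:
    """Convert percentage settings to Pod counts: surge rounds up,
    unavailable rounds down."""
    surge = math.ceil(replicas * max_surge_pct / 100)
    unavailable = math.floor(replicas * max_unavailable_pct / 100)
    return surge, unavailable


def simulate_rollout(replicas: int, surge: int,
                     unavailable: int) -> List[Tuple[int, int]]:
    """Return (old_pods, new_pods) after each step of a simplified rollout.

    Assumes every new Pod is ready as soon as it is created.
    """
    if surge == 0 and unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    min_available = replicas - unavailable
    while old > 0 or new < replicas:
        # Scale up the new ReplicaSet, never exceeding replicas + surge Pods.
        new += min(replicas + surge - (old + new), replicas - new)
        # Scale down the old ReplicaSet, never dropping below min_available.
        old -= min((old + new) - min_available, old)
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    surge, unavailable = surge_and_unavailable(4)
    for old, new in simulate_rollout(4, surge, unavailable):
        print(f"old={old} new={new}")
```

With 4 replicas, the model never runs more than 5 Pods or fewer than 3 at any step. On a real cluster, `kubectl rollout undo deployment/<name>` rolls a Deployment back to its previous revision.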

Deploying a Sample Application in a K8s Cluster

Let's deploy an Argo CD application. Argo CD monitors running applications and compares their current live state with the desired target state, as defined in a Git repository. A deployed application whose live state differs from the target state is said to be out of sync.
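The sync check compares two views of the same resources. The Python sketch below is a toy model of that idea, not Argo CD's actual diffing logic, which among other things normalizes fields and ignores defaults. It compares desired specs (as they would come from Git) with live ones and reports `Synced` or `OutOfSync`, the status names Argo CD displays. The `(kind, name)` keys and the fields compared are illustrative assumptions.

```python
from typing import Dict, List, Tuple

# A resource is identified here by (kind, name); fields are illustrative.
Key = Tuple[str, str]


def drifted(desired: Dict[Key, dict], live: Dict[Key, dict]) -> List[Key]:
    """Keys whose live state is missing or differs from the desired state."""
    return [key for key, spec in desired.items() if live.get(key) != spec]


def sync_status(desired: Dict[Key, dict], live: Dict[Key, dict]) -> str:
    return "OutOfSync" if drifted(desired, live) else "Synced"


if __name__ == "__main__":
    git = {("Deployment", "web"): {"replicas": 3, "image": "nginx:1.25"}}
    # Someone scaled the live Deployment by hand, so it drifts from Git.
    cluster = {("Deployment", "web"): {"replicas": 5, "image": "nginx:1.25"}}
    print(sync_status(git, cluster), drifted(git, cluster))
```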

Real-Time Use Cases, Live Demonstration with Snapshots, and Best Practices

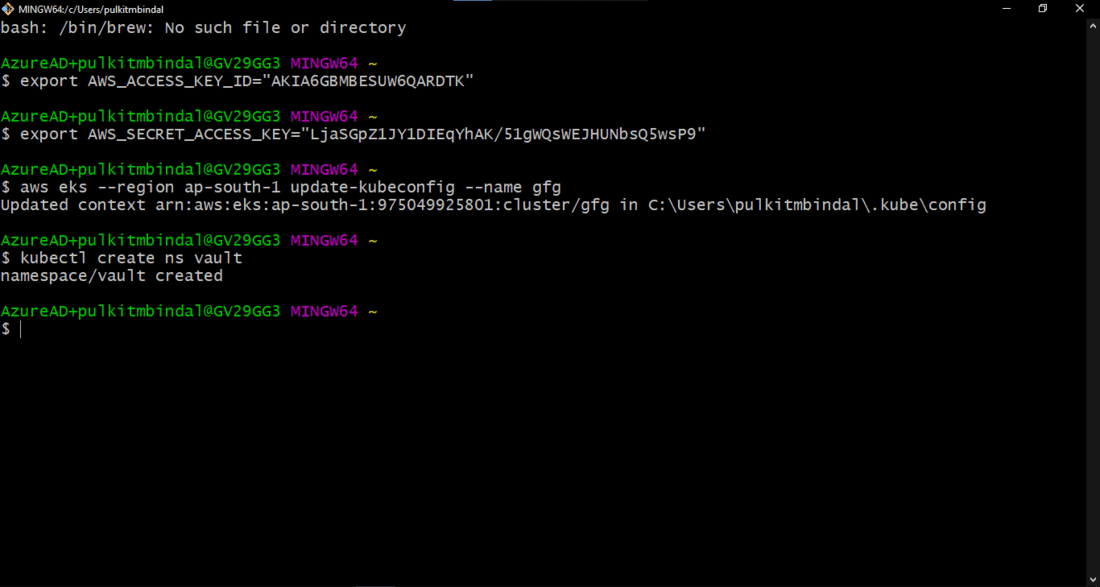

Step 1: Let's take the example of installing HashiCorp Vault, a tool for securely storing and managing secrets such as passwords, tokens, and certificates. It adds an extra security layer for applications. Many large companies use it in production, and it is a popular, easy-to-use security layer on top of applications.

Step 2: We will first look at the resources we have, and then see how to use them to deploy Vault Pods and Deployments.

Step 3: We will use a Kubernetes cluster named "gfg" with 2 nodes, and a namespace named "vault".

Step 4: Create the namespace by running `kubectl create ns vault`.

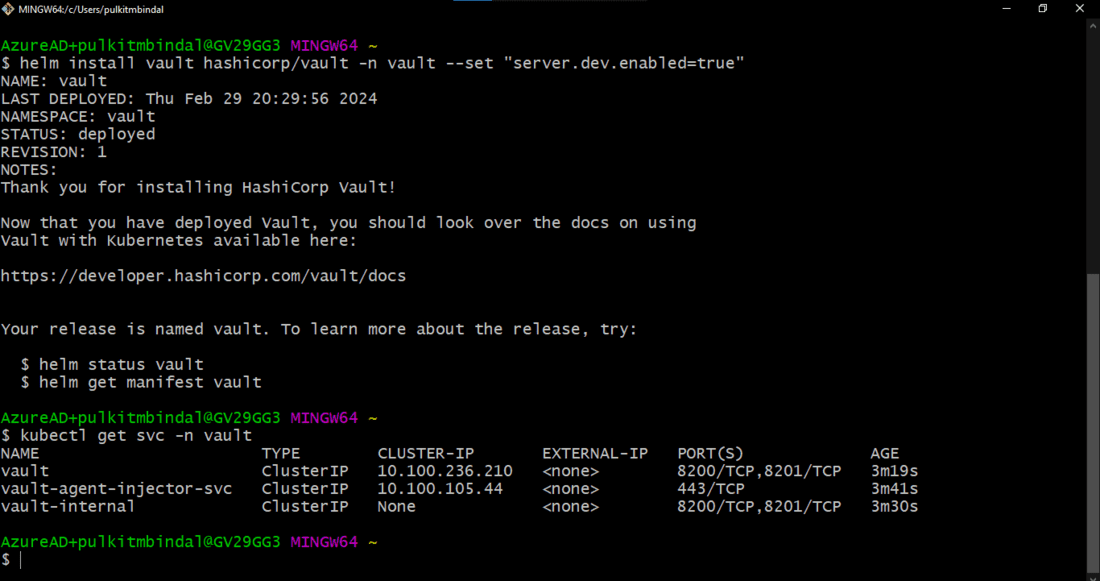

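Step 5: If you have not added the HashiCorp Helm repository yet, run `helm repo add hashicorp https://helm.releases.hashicorp.com`. Then run `helm repo update`.

Step 6: Install Vault in development mode by running `helm install vault hashicorp/vault -n vault --set "server.dev.enabled=true"`. Dev mode runs a single in-memory, already unsealed Vault server and is meant only for testing.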

Step 7: List the services in the namespace by running `kubectl get svc -n vault`.

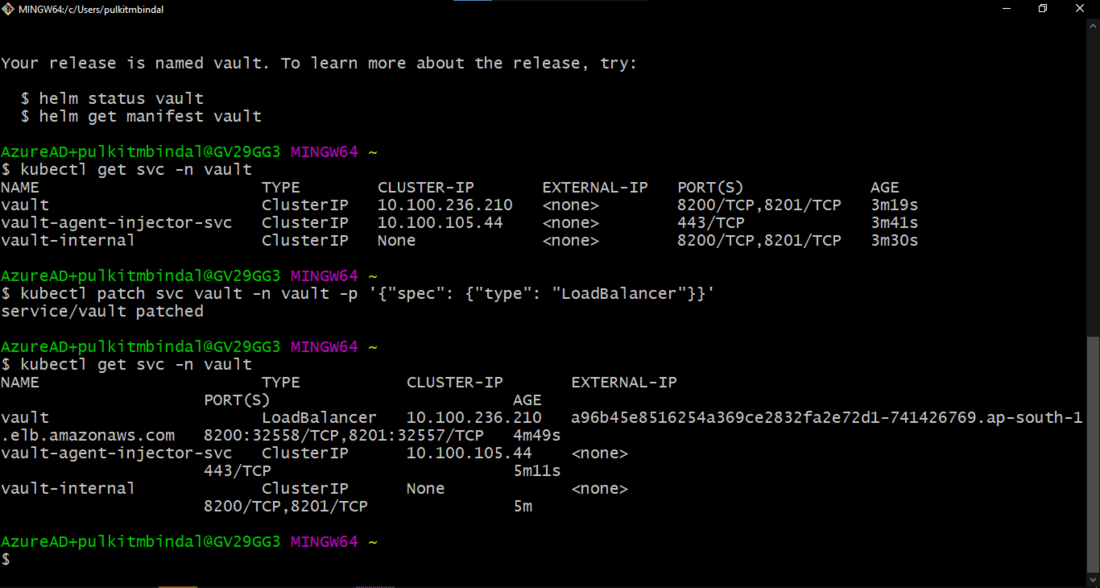

Step 8: Change the `vault` service to type LoadBalancer so that it gets an external address: `kubectl patch svc vault -n vault -p '{"spec": {"type": "LoadBalancer"}}'`
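The `-p` payload in Step 8 is a partial object, so only the fields it mentions change. kubectl applies a strategic merge patch by default. For a payload like this one, which only sets a scalar field, that gives the same result as a plain JSON merge patch (RFC 7386, also selectable with `--type merge`). The Python sketch below implements RFC 7386 to show the effect on a simplified Service object. The `ClusterIP` starting value and the single port 8200 are assumptions for illustration.

```python
from typing import Any


def merge_patch(target: Any, patch: Any) -> Any:
    """JSON merge patch (RFC 7386); returns a new object and leaves
    `target` unmodified."""
    if not isinstance(patch, dict):
        return patch  # non-objects replace the target wholesale
    result = dict(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)  # null deletes the field
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


if __name__ == "__main__":
    service = {"spec": {"type": "ClusterIP", "ports": [{"port": 8200}]}}
    print(merge_patch(service, {"spec": {"type": "LoadBalancer"}}))
```

The patch leaves `ports` untouched and only flips `spec.type`, which is why the one-line command in Step 8 is enough.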

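Step 9: Run `kubectl get svc -n vault` again. The `vault` service should now show an EXTERNAL-IP, which may read `<pending>` for a minute or two while the load balancer is provisioned.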

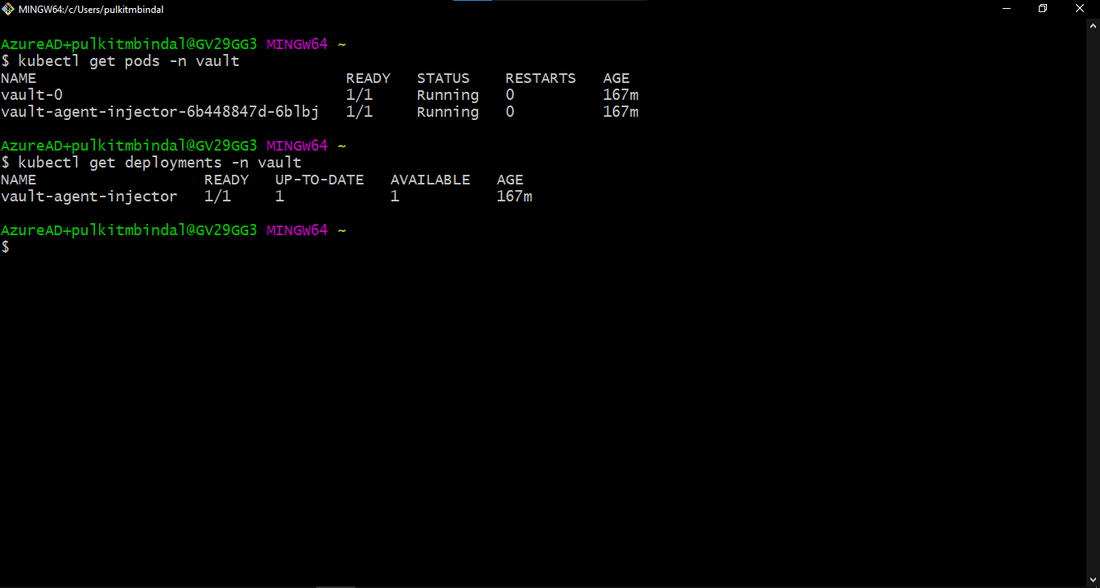

Step 10: Run `kubectl get pods -n vault` to list the Pods and `kubectl get deployments -n vault` to list the Deployments. This is how Pods and Deployments look in the running state. The chart runs the Vault server itself as a StatefulSet Pod (`vault-0`), while the Vault Agent Injector runs as a Deployment.

Step 11: The Vault dashboard is now exposed on port 8200 at the LoadBalancer URL and can be opened in a browser.
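To check Deployment readiness from a script instead of by eye, you can request JSON with `kubectl get deployments -n vault -o json` and compare each Deployment's `spec.replicas` with its `status.readyReplicas`. The Python sketch below does that. The API omits `readyReplicas` when it is zero, so the code defaults it to 0. The script name `check_ready.py` is hypothetical.

```python
import json
import sys
from typing import List


def readiness(deployments_json: str) -> List[str]:
    """One 'name: ready/desired state' line per Deployment in a kubectl List."""
    data = json.loads(deployments_json)
    lines = []
    for item in data.get("items", []):
        name = item["metadata"]["name"]
        want = item.get("spec", {}).get("replicas", 1)
        ready = item.get("status", {}).get("readyReplicas", 0)  # omitted when 0
        state = "ready" if ready >= want else "not ready"
        lines.append(f"{name}: {ready}/{want} {state}")
    return lines


if __name__ == "__main__":
    # Usage: kubectl get deployments -n vault -o json | python3 check_ready.py -
    if len(sys.argv) > 1:
        if sys.argv[1] == "-":
            text = sys.stdin.read()
        else:
            with open(sys.argv[1]) as f:
                text = f.read()
        for line in readiness(text):
            print(line)
```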
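To find the dashboard address, read the external address from the Service's status with `kubectl get svc vault -n vault -o json` and append port 8200. The Python sketch below does this. Some cloud providers report an IP and others a DNS hostname, so it checks both. It assumes plain HTTP, which matches the dev-mode install in Step 6, and the `/ui` path where Vault serves its web UI. The script name `vault_url.py` is hypothetical.

```python
import json
import sys
from typing import Optional


def vault_ui_url(service_json: str, port: int = 8200) -> Optional[str]:
    """Build the UI URL from a Service's LoadBalancer status, or return
    None while the external address is still pending."""
    svc = json.loads(service_json)
    ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress", [])
    if not ingress:
        return None
    # Some providers report an IP address, others a DNS hostname.
    host = ingress[0].get("ip") or ingress[0].get("hostname")
    return f"http://{host}:{port}/ui" if host else None


if __name__ == "__main__":
    # Usage: kubectl get svc vault -n vault -o json | python3 vault_url.py -
    if len(sys.argv) > 1:
        if sys.argv[1] == "-":
            text = sys.stdin.read()
        else:
            with open(sys.argv[1]) as f:
                text = f.read()
        print(vault_ui_url(text) or "external address still pending")
```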

Kubernetes Deployment vs Pod: FAQs

Is Kubernetes limited to large-scale uses?

No. Kubernetes' scalability, resilience, and flexible deployment and management make it useful for applications of all sizes.

What distinguishes Kubernetes from conventional virtualization?

Traditional virtualization runs many virtual machines, each with its own operating system, on a single physical server. Kubernetes instead manages containers at the application level and abstracts away the underlying infrastructure, which makes it more efficient and agile.

Is Kubernetes compatible with my local computer?

Yes. You can use tools such as Minikube or Docker Desktop to run a local Kubernetes cluster, which lets you build and test applications in a Kubernetes environment on your own laptop.

If a Pod malfunctions, what happens?

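If a container in a Pod crashes, the kubelet restarts it according to the Pod's restartPolicy. If a Pod managed by a Deployment is deleted or its node fails, the Deployment's ReplicaSet creates a replacement Pod, which the scheduler places on a healthy node. A bare Pod that no controller manages is not replaced.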

Is learning Kubernetes a difficult task?

Kubernetes does have a learning curve, but plenty of resources, tutorials, and community support make its principles and best practices approachable for developers.
