How To Optimize Kubernetes Pod CPU And Memory Utilization?

Last Updated: 15 Mar, 2024

Before we dive into boosting your Kubernetes pod performance, let’s take a moment to understand the key players in this orchestration symphony. In Kubernetes, a pod is the smallest deployable unit of computing, and each pod runs one or more containers.

When working with Kubernetes, optimizing pod CPU and memory utilization is crucial for several reasons:

- Cost Efficiency: Proper utilization prevents over-provisioning, reducing infrastructure costs.

- Performance: Ensures applications run smoothly by avoiding resource bottlenecks.

- Scalability: Optimizing resource utilization allows you to scale your applications more effectively, making better use of the resources available in your cluster.

- Reliability: Prevents resource exhaustion and potential downtime.

- Resource Management: Kubernetes provides features for managing resources, such as resource requests and limits, which can be optimized to ensure that your applications get the resources they need without wasting resources.

In the Pod YAML manifest, we specify resource requests and limits for CPU and memory for each container: the request is the amount the scheduler reserves for the container, and the limit is the maximum it can use.

Example:

    apiVersion: v1
    kind: Pod
    metadata:
      name: frontend
    spec:
      containers:
      - name: app
        image: images.example/app:v4
        resources:
          requests:
            memory: "64Mi"
            cpu: "250m"
          limits:
            memory: "128Mi"
            cpu: "500m"

Suppose we have a Java application running inside a pod on Kubernetes. How can we specify minimum and maximum memory at the application level?

Two flags need to be set in the `java -jar` command:

- `-Xms`: the initial (minimum) heap size, allocated when the JVM starts.

- `-Xmx`: the maximum heap size that the JVM can use.
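One way to pass these two flags to a containerized JVM is through the container's environment, as in the minimal sketch below. The pod name, image name, and sizes are hypothetical placeholders, and using the `JAVA_TOOL_OPTIONS` environment variable (which the JVM reads at startup) is an assumption about how the application is launched; the flags could equally be written directly in the `java -jar` command. The idea is to keep `-Xmx` below the container memory limit, because the JVM also needs memory outside the heap.

```yaml
# Sketch only: names, image, and sizes are hypothetical placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: java-app
spec:
  containers:
  - name: app
    image: images.example/java-app:v1   # hypothetical image
    env:
    - name: JAVA_TOOL_OPTIONS           # read by the JVM at startup
      value: "-Xms256m -Xmx512m"        # initial and maximum heap size
    resources:
      requests:
        memory: "512Mi"
        cpu: "250m"
      limits:
        memory: "768Mi"                 # headroom above -Xmx for non-heap memory
        cpu: "500m"
```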

At the code level, if the application uses Google Pub/Sub, the client's thread pool and thread count properties can also be tuned, which helps keep CPU and memory usage in the pods under control.

Horizontal Pod Autoscaler (HPA)

HPA is a Kubernetes feature that automatically adjusts the number of running pods in a Deployment or ReplicaSet based on observed CPU utilization or custom metrics.

- Automatic Scaling: HPA monitors CPU usage and adjusts the number of pods for optimal performance, without manual intervention.

- Efficient Resource Use: HPA dynamically adjusts the number of pods to match demand, preventing over-provisioning (costly) or under-provisioning (performance issues).
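The behaviour described above could be configured roughly as follows. This is a sketch under assumptions: the HPA name, the target Deployment `frontend`, the replica bounds, and the 70% target are illustrative values, not taken from the incident described in this article. CPU utilization here is measured relative to the CPU requests of the pods, so the target pods need CPU requests set.

```yaml
# Sketch only: names and numbers are hypothetical.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: frontend-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: frontend               # hypothetical Deployment to scale
  minReplicas: 2
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70   # percent of the pods' CPU requests
```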

Let’s explore how to create a job in Kubernetes.

Cron Job: A cron job creates jobs on a repeating schedule.

Imagine you have a to-do list and want to be reminded of a specific task every day at 9:00 AM. A cron job is like setting an alarm on your phone for that task. You tell the cron job when to run (e.g., every day at 9:00 AM), and it executes the task automatically without you having to do anything.

Example: `0 9 * * *` runs at 9:00 AM every day.
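To show where that schedule expression goes, here is a minimal CronJob sketch. The job name, image, and command are hypothetical and only serve to illustrate the five schedule fields.

```yaml
# Sketch only: name, image, and command are hypothetical.
apiVersion: batch/v1
kind: CronJob
metadata:
  name: daily-reminder
spec:
  # Fields: minute hour day-of-month month day-of-week
  schedule: "0 9 * * *"          # minute 0, hour 9, every day
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
          - name: reminder
            image: busybox:1.28
            command: ["/bin/sh", "-c", "echo Time for the daily task"]
```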

Cron Job Benefits in Kubernetes

- Forget Routine tasks: With cron jobs, you can set up tasks to run automatically on a schedule, so you don’t have to remember to do them manually.

- Time Management: They help you manage your time better by scheduling tasks to run at specific times or intervals, freeing you up to focus on other things.

- Consistency: Cron jobs ensure that tasks are done consistently and reliably, even in complex systems like Kubernetes.

I will discuss a real incident I faced involving pod CPU and memory usage and share the lessons I learned about optimizing them. We have an application from which we ingest data into a PostgreSQL database every 4 hours, and we run this process as a Kubernetes cron job on GCP.

Our infrastructure is on GCP and uses GKE Autopilot. We deployed the cron job in the dev environment to test the application and forgot to check whether the pods were actually running. For 14 days the pods were stuck in CreateContainerConfigError, and this resulted in a large bill.

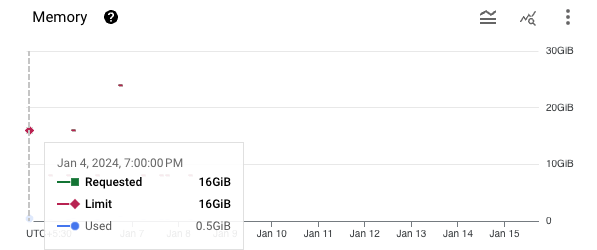

When we checked, Autopilot Pod memory requests had increased by 2848% and Autopilot Pod mCPU requests by 971%. The Kubernetes charges had risen along with the increase in resource consumption.

Why does resource consumption increase while a pod is not in a running state?

After we deployed the cron job, its pods were stuck in CreateContainerConfigError. Every time a pod tried to start, it requested CPU and memory, and those resource requests incurred charges even though the containers never ran, which explains the spike in costs. Although container limits were set, the cron job started a new run every 4 hours. If the previous run was still active or had stalled without completing, a new job started anyway, each one staying within the pod limit. The linear increase in CPU and memory usage in the graph reflects this accumulation.

    (16:57:03)──> kbt create ns geeks
    + kubectl
    namespace/geeks created
    (16:58:34)──> kbt apply -f cronjob.yaml
    + kubectl
    cronjob.batch/hello-world created

hellp-world-8c89hc76c-jknmb

To optimize Kubernetes pod CPU and memory utilization, we can use two settings in the cron job configuration:

- concurrencyPolicy: Set this to Forbid. This means the cron job will not allow concurrent runs. If it is time for a new job to run and the previous job has not finished yet, the cron job skips the new run. Note that when the previous job finishes, the startingDeadlineSeconds specified in the configuration is still taken into account and may result in a new job run.

- restartPolicy: Set this to Never. This ensures that the pod does not automatically restart its container if it fails or is terminated, which helps prevent unnecessary resource requests every time the pod tries to start. Together with backoffLimit, it caps how many times a failed job is retried.

    apiVersion: batch/v1
    kind: CronJob
    metadata:
      name: hello-world
      namespace: dev
    spec:
      schedule: "0 */12 * * *"
      # Skip a new run while the previous one is still active
      concurrencyPolicy: Forbid
      startingDeadlineSeconds: 600
      failedJobsHistoryLimit: 1
      jobTemplate:
        spec:
          # Retry a failed job at most twice
          backoffLimit: 2
          template:
            spec:
              # Do not restart failed containers in place
              restartPolicy: Never
              containers:
              - name: hello
                image: busybox:1.28
                imagePullPolicy: IfNotPresent
                command:
                - /bin/sh
                - -c
                - date; echo Hello from the Kubernetes cluster
                resources:
                  limits:
                    cpu: 4
                    memory: 8Gi
                  requests:
                    cpu: 2
                    memory: 4Gi

This ensures that the pod will not automatically restart if it fails or is terminated, helping to conserve resources and enhance operational efficiency.


Conclusion


In the world of Kubernetes, optimizing pod performance is like fine-tuning an orchestra. By mastering the art of resource allocation and scheduling with cron jobs, you can orchestrate a symphony of efficient and cost-effective operations. Remember, learning from experiences, like the real incident shared here, is key to becoming a Kubernetes virtuoso. So, go ahead, apply these optimization tips, and let your Kubernetes clusters sing with efficiency and reliability!

Pod CPU and Memory Utilization – FAQs

How can I check the current resource utilization of my pods?

You can use the `kubectl top pods` command to check the current CPU and memory usage of your pods. The command relies on the Metrics Server being available in the cluster.

What is the difference between CPU and memory requests and limits in Kubernetes?

Requests: The amount of CPU or memory that a container is guaranteed to receive. Kubernetes uses this information for scheduling.

Limits: The maximum amount of CPU or memory that a container can use. A container that tries to exceed its CPU limit is throttled, while a container that exceeds its memory limit is terminated (OOM-killed). The pod's QoS class, derived from its requests and limits, affects which pods are evicted first when a node runs short of resources.

How can I optimize CPU and memory utilization in my pods?

- Right-sizing: Adjust the CPU and memory requests and limits based on the actual resource requirement of your application.

- Horizontal Pod Autoscaler: Use HPA to automatically scale the number of pod replicas based on CPU or memory utilization.

- Vertical Pod Autoscaler: VPA adjusts the CPU and memory requests and limits of pods based on their actual resource usage.

- Efficient Coding: Write efficient code to reduce resource consumption, such as minimizing unnecessary calculations or memory allocations.
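For the Vertical Pod Autoscaler option listed above, a minimal sketch might look like the following. It assumes the VPA components are installed in the cluster (they are an add-on, not part of core Kubernetes), and the object name, target Deployment, and bounds are hypothetical. With `updateMode: "Off"` the VPA only publishes recommendations, which can be a cautious first step toward right-sizing.

```yaml
# Sketch only: assumes the VPA add-on is installed; names and bounds are hypothetical.
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: frontend-vpa
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: frontend             # hypothetical Deployment to right-size
  updatePolicy:
    updateMode: "Off"          # recommend only, do not change running pods
  resourcePolicy:
    containerPolicies:
    - containerName: "*"       # apply to all containers in the pod
      minAllowed:
        cpu: 100m
        memory: 64Mi
      maxAllowed:
        cpu: "1"
        memory: 1Gi
```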

How can I optimize resource usage in pods running Java applications?

- `-Xms`: Specify the initial heap size to reduce heap expansions.

- `-Xmx`: Set the maximum heap size to avoid excessive memory usage, keeping it below the container memory limit.

- Use Application Profiling: Use tools like Java Flight Recorder (JFR) or VisualVM to identify and optimize performance bottlenecks in your applications.

- Use Google Pub/Sub properties to configure thread pool and count.
