The name Kyverno comes from the Greek word “kyverno”, which means “to govern”. Kyverno is a Policy Engine designed specifically for Kubernetes. It is Kubernetes Native (i.e. it uses Kubernetes resources, patterns, and idioms) and enables users to manage security and best practices in their Kubernetes Clusters. In this article, we will discuss what Kyverno is and how to use it to manage Policies in a Kubernetes Cluster.

What is Kyverno?

Kyverno is a Policy Engine designed specifically for Kubernetes. It is Kubernetes Native (i.e. it uses Kubernetes resources, patterns, and idioms) and enables users to manage security and best practices in their Kubernetes Clusters. Kyverno differs from other Policy Managers in that it manages Policies as Kubernetes resources, so users don’t have to learn a new language in order to write a policy.

Kyverno runs as a dynamic admission controller in a Kubernetes cluster. An admission controller is a standard Kubernetes concept: it intercepts the requests that reach the API server and then validates them, mutates them, or does both.

Example Kyverno Cluster Policy

Given below is a sample Cluster Policy with a single “rules” block, inside which there is a “match” block and a “validate” block. We have set “validationFailureAction” to Audit, so a resource that fails validation is still admitted, but the failure is recorded in a report.

This policy validates Pods and checks for the label `app.kubernetes.io/name`. If the label is missing or has an empty value, the validation fails with the message “The label `app.kubernetes.io/name` is required”.

You can copy the policy from the following code:

apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: require-labels
spec:
  validationFailureAction: Audit
  background: true
  rules:
  - name: check-for-labels
    match:
      any:
      - resources:
          kinds:
          - Pod
    validate:
      message: "The label `app.kubernetes.io/name` is required"
      pattern:
        metadata:
          labels:
            app.kubernetes.io/name: "?*"

You can also declare what action the Policy should take on the resource. Here are the options you can choose from in Kyverno:

- Validate the resource

- Mutate the resource

- Generate a resource

- Verify images
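As an illustration of the "mutate" option, here is a minimal sketch of a mutating rule. The policy name `add-default-gfg-label`, the rule name, and the label value `unassigned` are hypothetical and chosen only for this example. The `+(gfg)` key asks Kyverno to add the label only when the Pod does not already carry it.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: add-default-gfg-label   # hypothetical name, for illustration only
spec:
  rules:
  - name: add-gfg-label         # hypothetical rule name
    match:
      any:
      - resources:
          kinds:
          - Pod
    mutate:
      patchStrategicMerge:
        metadata:
          labels:
            # "+( )" means: add this key only if it is not already present
            +(gfg): unassigned
```

A rule like this changes the resource instead of rejecting it, so it can be a gentler alternative to a validate rule when a sensible default exists.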

Real World Problem with Kubernetes configurations

In this section we will discuss the real-world problems with Kubernetes Clusters and why the need for Kyverno emerged. To create and work with a Kubernetes Cluster we have tools like Kind, Minikube, and Cloud Service Providers, and these tools are great in the sense that they help us bring up a Kubernetes cluster within minutes. Doing it ourselves would take a lot of time, since Kubernetes involves many components, but these tools provide an abstraction so that we can get something working out of the box and start using it. There is a problem, though: to get a Cluster working quickly, these tools assume a set of default configurations, and those defaults are not always secure. The defaults are fine for a local cluster, but it is not good practice to use the same defaults in a production environment.

Kubernetes gives us a lot of flexibility in what configurations we can change, and the downside of this flexibility is that there are many opportunities to misconfigure something. This is where the need for validation comes in: we want to make sure that whatever configurations we set are secure and do not open any back doors for security threats. Beyond this, there are other good reasons to use Kyverno, such as Pod security (Kubernetes recommends certain Pod Security Standards) and managing namespace and workload security. To sum up this section and add a few more reasons why we would need Kyverno, here are a few points:

- Kubernetes configurations are complex and there are chances of misconfigurations.

- Managing pod security

- Managing namespace and workload security

- Generating default configurations

- Applying fine grained access controls

- Some portions of a configuration are environment specific, and some are not developer concerns but are critical for operations

- External configuration management tools (e.g. Helm, Kustomize etc.) cannot ensure best-practices and compliance for configurations.

Why Kyverno

- Declarative policies that are easy to write and manage

- Policy results that are easy to view and process

- Validate (audit or enforce), Mutate, Generate and verifyImages

- Support all Kubernetes resource types including CRs (Custom Resources)

- Adopt Kubernetes patterns and practices (e.g. labels and selectors, annotations, events, ownerReferences, pod controllers, etc.)

Kyverno Use cases

- Enforcing Pod Security Standards

- Additional security validation and enforcement

- Fine-grained RBAC

- Multi-tenancy

- Auto-Labeling

- Sidecar (including certificate) injection with mounts, etc.

Alternatives to Kyverno

You may know that there are other general purpose Policy Managers, since Policy management is not a concept specific to Kubernetes. These general purpose policy managers require learning a new language and may not suit people who are looking for a Kubernetes native Policy manager. Open Policy Agent is one such example, and Gatekeeper by Open Policy Agent is its implementation for Kubernetes. Because these tools are general purpose rather than Kubernetes native, they often require you to learn a new language (like Rego in the case of Open Policy Agent). With Kyverno, you don’t have to learn any new language. Kyverno does the heavy lifting so that you can work with it just like you do with other Kubernetes components.

Kyverno Tutorial

In this tutorial we will show how you can use an example Kyverno policy to ensure that a label named gfg is present on every Pod that is created; otherwise the Pod won’t be created.

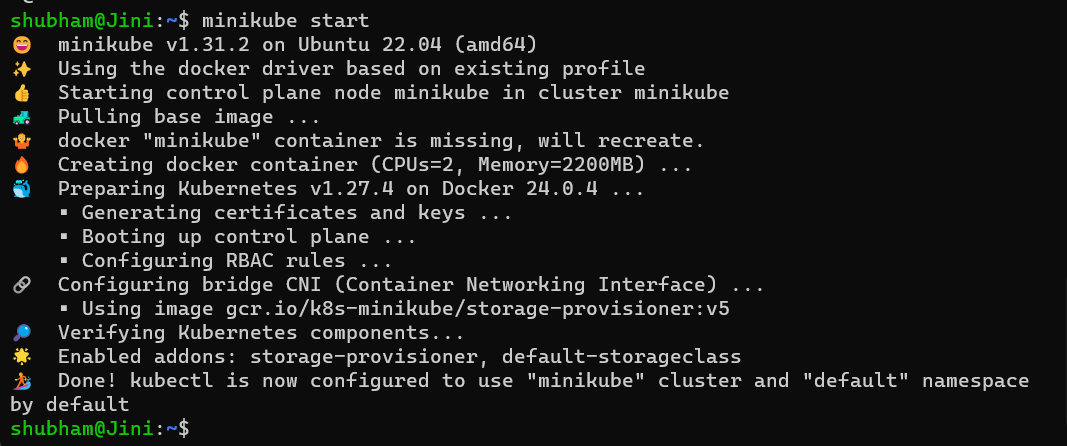

Step 1: Creating a Kubernetes Cluster

You can skip this step if you already have a Kubernetes Cluster running on your machine. If you don’t have a Cluster running, enter the following command to create a Kubernetes cluster locally using minikube:

minikube start

Minikube runs a one-node Kubernetes cluster in which the control plane (master) processes and the worker processes both run on the same node.

Step 2: Installing Kyverno

There are multiple ways to install Kyverno in a Kubernetes Cluster. One is Helm, which starts with adding the Kyverno Helm repository:

helm repo add kyverno https://kyverno.github.io/kyverno/

For this tutorial we will install Kyverno using a manifest for the sake of simplicity (the Helm method is recommended for production environments).

Enter the following command in your terminal:

kubectl create -f https://github.com/kyverno/kyverno/releases/download/v1.10.0/install.yaml

You will see output listing the Kubernetes components of Kyverno as they are created.

Step 3: Creating a ClusterPolicy

Let’s create a Cluster Policy that contains a validation rule which ensures that all the Pods that are created have a label called “gfg”. Because “validationFailureAction” is set to Enforce, a Pod that does not carry the label “gfg” will not be created.

kubectl create -f- << EOF
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: require-labels
spec:
  validationFailureAction: Enforce
  rules:
  - name: check-team
    match:
      any:
      - resources:
          kinds:
          - Pod
    validate:
      message: "label 'gfg' is required"
      pattern:
        metadata:
          labels:
            gfg: "?*"
EOF

Step 4: Creating an invalid Pod

Now let us try to create an Nginx Deployment without the required label “gfg” and see what response we get.

kubectl create deployment nginx --image=nginx

The request is rejected. Kyverno denied the request to create a Pod since it didn’t include the required label, and gave us the message:

require-labels:
  check-team: 'validation error: label ''gfg'' is required. rule check-team failed at path /metadata/labels/gfg/'

Step 5: Adding the required label

If we check the list of Policy Reports that exist, we will not find any yet:

kubectl get policyreport -o wide

This will give us output:

No resources found in default namespace.

Now the next obvious step is to create a Pod that carries the required label, so let’s do that:

kubectl run nginx --image nginx --labels gfg=random_value

And now, since the Pod configuration is compliant with the label requirement in our Policy, the Pod is created and kubectl confirms it with `pod/nginx created`.
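The Deployment from Step 4 can also be made compliant by writing it as a manifest and labelling its Pod template. The following is a minimal sketch, assuming Kyverno's usual behaviour of extending Pod rules to Pod controllers such as Deployments. The `app: nginx` selector label and the value `random_value` are illustrative choices, not requirements of the policy.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
        # The label the require-labels policy checks for; any non-empty value matches "?*"
        gfg: random_value
    spec:
      containers:
      - name: nginx
        image: nginx
```

The label has to sit on `spec.template.metadata.labels`, because that is where the labels of the Pods created by the Deployment come from.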

Step 6: Checking Policy Reports

Finally, we can check the action Kyverno took by looking at the Policy Report that was created:

kubectl get policyreport -o wide

You will get a result in which the Policy Report has columns showing how many resources passed, failed, or were skipped by the Cluster Policy. The number “1” beneath the “PASS” column denotes that one Pod has passed the policy. With this, we have seen how to implement a simple Cluster Policy in a Kubernetes Cluster using Kyverno.

Advantages of Using Kyverno

1. Kubernetes Native – short learning curve

There are many general purpose Policy Managers, but they require learning a new language and may not suit people who are looking for a Kubernetes native Policy manager. All of the policies in Kyverno are written in YAML: Kyverno takes care of the heavy lifting while you simply use YAML to write the Policy. With Kyverno you can also manage policies as code using familiar tools like git and kustomize.

2. Kyverno CLI

Kyverno also comes with a CLI tool for validating policies and testing how they behave against resources before either is added to a Kubernetes Cluster. The CLI can be used in CI/CD pipelines during resource authoring to ensure that resources conform to standards before they are deployed.
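To give a feel for CLI-based testing, here is a sketch of a test declaration for the `require-labels` policy from the tutorial, written in the style used by CLI releases around v1.10. The file names `policy.yaml` and `pod.yaml` and the test name are assumptions made for this example, and the test file schema has changed across CLI releases, so check the documentation for the version you run.

```yaml
# kyverno-test.yaml (sketch; the schema differs between CLI versions)
name: require-labels-test        # hypothetical test name
policies:
- policy.yaml                    # assumed to contain the require-labels ClusterPolicy
resources:
- pod.yaml                       # assumed to contain a Pod named nginx with a gfg label
results:
- policy: require-labels
  rule: check-team
  resource: nginx
  kind: Pod
  result: pass                   # the outcome we expect for this resource
```

With these files in one directory, running `kyverno test .` compares the actual rule results with the expected ones, which makes it suitable as a pipeline step.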

3. Fine-grained RBAC

Traditional RBAC (Role-based access control) defines roles and permissions to perform specific actions within a Kubernetes cluster, but it lacks the granularity needed for certain scenarios. Kyverno allows cluster administrators to impose detailed conditions on top of standard RBAC policies, for example, allowing only users with a specific role to create a Secret that has a specific label key and value.
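One way such a condition might be expressed is sketched below. The policy name, the label `purpose: payments`, and the ClusterRole `secret-admin` are all hypothetical. The rule matches Secrets that carry the label, excludes requests from users bound to the named ClusterRole, and denies everything that remains.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: restrict-labelled-secrets   # hypothetical name
spec:
  validationFailureAction: Enforce
  # Rules that inspect the requesting user only make sense at admission time,
  # so background scanning is turned off.
  background: false
  rules:
  - name: only-secret-admins        # hypothetical rule name
    match:
      any:
      - resources:
          kinds:
          - Secret
          selector:
            matchLabels:
              purpose: payments     # hypothetical label key and value
    exclude:
      any:
      - clusterRoles:
        - secret-admin              # hypothetical ClusterRole that is allowed through
    validate:
      message: "Only users with the secret-admin role may manage Secrets labelled purpose=payments."
      # An empty deny block rejects every request that reaches this rule
      deny: {}
```

Standard RBAC still decides who may touch Secrets at all; the policy only narrows that permission for the labelled subset.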

4. Cost control

With Kyverno, you can limit specific resources that may have cost implications for your infrastructure. A common cost control measure is to enforce a restriction that only one Service of type LoadBalancer can be created.
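Counting existing Services requires a more involved rule, so the sketch below shows a simpler and stricter variant of the same idea: it rejects every Service of type LoadBalancer. The policy and rule names are hypothetical. The `!` prefix in the pattern means "must not equal".

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: disallow-loadbalancer-services   # hypothetical name
spec:
  validationFailureAction: Enforce
  rules:
  - name: no-loadbalancer                # hypothetical rule name
    match:
      any:
      - resources:
          kinds:
          - Service
    validate:
      message: "Services of type LoadBalancer are not allowed."
      pattern:
        spec:
          # "!" negates the value: any Service type except LoadBalancer passes
          type: "!LoadBalancer"
```

Setting `validationFailureAction` to Audit instead would report such Services without blocking them, which can be useful for measuring impact first.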

5. Multi-tenancy

Many organizations operate internally as multi-tenanted businesses. Kubernetes on its own does not enforce boundaries between these tenants, so Kyverno can be used to enforce limits on the consumption and creation of cluster resources (such as CPU time, memory, and persistent storage) within a specified namespace.
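A generate rule is one way to apply such limits automatically. The sketch below creates a ResourceQuota in every newly created Namespace. The policy name, quota name, and the CPU and memory figures are illustrative assumptions, and depending on how Kyverno is installed it may need additional RBAC permissions to create the generated resource.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: add-namespace-quota            # hypothetical name
spec:
  rules:
  - name: generate-resourcequota       # hypothetical rule name
    match:
      any:
      - resources:
          kinds:
          - Namespace
    generate:
      apiVersion: v1
      kind: ResourceQuota
      name: default-resourcequota      # hypothetical name of the generated object
      # Place the quota inside the Namespace that triggered the rule
      namespace: "{{request.object.metadata.name}}"
      # Keep the generated quota in line with this policy if either changes
      synchronize: true
      data:
        spec:
          hard:
            requests.cpu: "4"          # illustrative limits only
            requests.memory: 16Gi
            limits.cpu: "4"
            limits.memory: 16Gi
```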

6. Ops automation and Supply Chain Security

Kyverno can help incorporate supply chain security measures such as ensuring images originate from trusted sources, preventing unauthorized modifications, enforcing security policies, and much more. For example, with Kyverno you can require that all images meeting specific criteria, such as name, registry, or repository, must be signed and attested using a specified key. As for Ops automation, a good example is synchronizing a Kubernetes ConfigMap everywhere when a certificate is updated: Kyverno can watch for the change, perform the synchronization, and create new resources.
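A signature check of this kind might look like the following sketch. The policy name, the registry `registry.example.com`, and the key are placeholders, and the public key must be replaced with a real Cosign public key before the policy can work.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: verify-image-signatures        # hypothetical name
spec:
  validationFailureAction: Enforce
  # Signature checks call out to the registry, so allow the webhook extra time
  webhookTimeoutSeconds: 30
  rules:
  - name: check-signature              # hypothetical rule name
    match:
      any:
      - resources:
          kinds:
          - Pod
    verifyImages:
    - imageReferences:
      - "registry.example.com/*"       # placeholder registry; only matching images are checked
      attestors:
      - entries:
        - keys:
            publicKeys: |-
              -----BEGIN PUBLIC KEY-----
              <replace with your Cosign public key>
              -----END PUBLIC KEY-----
```

Images that do not match `imageReferences` are not verified by this rule, so a separate validate rule is needed if images from other registries should be blocked altogether.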

7. Some more features of Kyverno

Some more features of Kyverno include:

- Validate, mutate, generate, or cleanup any resource.

- Verify container images for software supply chain security

- Inspect image metadata

- Match resources using label selectors and wildcards

- Validate and mutate using overlays (like Kustomize!)

- Synchronize configurations across Namespaces

- Block non-conformant resources using admission controls, or report policy violations

- Self-service reports and self-service policy exceptions

- Test policies and validate resources using the Kyverno CLI, in your CI/CD pipeline, before applying them to your cluster

Conclusion

Kyverno is a Policy Engine designed specifically for Kubernetes. It is Kubernetes Native (i.e. it uses Kubernetes resources, patterns and idioms) and enables users to manage security and best practices in their Kubernetes Clusters. Kyverno differs from other Policy Managers in that it manages Policies as Kubernetes resources, so users don’t have to learn a new language in order to write a policy. To learn more about Kyverno and get some hands-on practice, you can go to the official Kyverno docs. This marks the end of this article, and we hope it helped you improve your understanding of Kyverno and policy management in Kubernetes.

Kyverno in Kubernetes – FAQs

What is the meaning of Kubernetes Native?

Software is considered “Kubernetes native” if its development and use cases are optimized to run efficiently on Kubernetes, and its syntax is easy to use and understand for users who already know Kubernetes.

Which type of admission controller is Kyverno?

Kyverno is a dynamic admission controller. Dynamic admission controllers are admission controllers that are developed in addition to compiled-in admission plugins, and they run as webhooks configured at runtime.

What actions can a Policy perform on a Kubernetes resource?

There are four actions any Policy can take on a resource in Kubernetes:

- Validate the resource

- Mutate the resource

- Generate a resource

- Verify images

What are the alternatives to Kyverno?

There are some alternatives to Kyverno such as Open Policy Agent, but most of these are general purpose Policy Managers. Gatekeeper by Open Policy Agent is a particular implementation for Kubernetes.

Is Kyverno open source?

Yes, Kyverno is an open-source project. You can find Kyverno and its various repositories on GitHub: https://github.com/kyverno

Is Kyverno a CNCF Project?

Yes, Kyverno is a CNCF Incubating Project. It was accepted to CNCF on November 10, 2020 and moved to the Incubating maturity level on July 13, 2022.
