How To Create Azure Data Factory Pipeline Using Terraform?

Last Updated: 26 Mar, 2024

In today’s data-driven economy, organizations rely on efficient data pipelines to process and transform data for analytics, reporting, and decision-making. Azure Data Factory (ADF), available from Microsoft Azure, is a powerful cloud-based tool for designing, organizing, and overseeing data integration workflows. Terraform, an infrastructure as code (IaC) tool, complements it by letting you specify and provision infrastructure resources declaratively. In this article, we’ll look at using Terraform to create Azure Data Factory pipelines and automate the administration of cloud data workflows.

What Is A Data Factory In Azure?

Before getting into the nitty-gritty of creating ADF pipelines using Terraform, it’s crucial to understand the basic concepts behind Azure Data Factory. ADF lets you build data pipelines that cover data movement, transformation, and orchestration. These tasks can involve moving data between different data sources, transforming data with custom scripts or data flows, and starting subsequent activities when certain triggers fire.

Setting Up Terraform for Azure

To use Terraform to develop Azure Data Factory pipelines, you must first set up a Terraform environment for Azure. This means configuring Azure credentials and initializing a new Terraform project. You have two options for doing this:

- Installing Terraform locally or,

- Launching it directly in Azure Cloud Shell.

Using Azure Cloud Shell

Azure Cloud Shell offers a browser-based shell environment. Terraform and other frequently used command-line tools are pre-installed, which makes it a handy choice for resource management on Azure.

Using Local Machine

As an alternative, you can manage Azure resources from Terraform installed on your local computer. Here’s how to set it up:

- Install the Terraform CLI using a package manager, or download the binary for your operating system from the Terraform website.

- Set up Azure authentication, for example by signing in with the Azure CLI (az login), by creating an Azure Service Principal, or by using a Managed Identity for Azure resources (MSI) when Terraform runs on an Azure-hosted machine.

- Create a directory for your Terraform configuration files.

- Create Terraform configuration files (.tf) in that directory that specify the Azure Data Factory resources and pipelines.

- Run terraform init in that directory to initialise the project and download the required provider plugins.

- Run terraform plan to preview the changes, then terraform apply to create or update the Azure Data Factory resources.
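For a local setup that authenticates with a Service Principal, the provider configuration might look like the sketch below. It is illustrative only: the version constraints and the four variable names are assumptions, not part of the original article, and this provider block would take the place of the simpler one used in the example configuration later on.

```hcl
# Pin Terraform and the azurerm provider (version constraints are assumptions).
terraform {
  required_version = ">= 1.3.0"

  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0"
    }
  }
}

# Hypothetical input variables holding the Service Principal credentials.
variable "subscription_id" {
  type = string
}

variable "tenant_id" {
  type = string
}

variable "client_id" {
  type = string
}

variable "client_secret" {
  type      = string
  sensitive = true # keeps the secret out of plan/apply output
}

# Authenticate the azurerm provider with the Service Principal.
provider "azurerm" {
  features {}

  subscription_id = var.subscription_id
  tenant_id       = var.tenant_id
  client_id       = var.client_id
  client_secret   = var.client_secret
}
```

The same credentials can instead be supplied through the ARM_SUBSCRIPTION_ID, ARM_TENANT_ID, ARM_CLIENT_ID and ARM_CLIENT_SECRET environment variables, which keeps secrets out of the configuration files altogether.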

Steps to Create Azure Data Factory Pipeline using Terraform

Step 1: Navigate to the Azure portal.

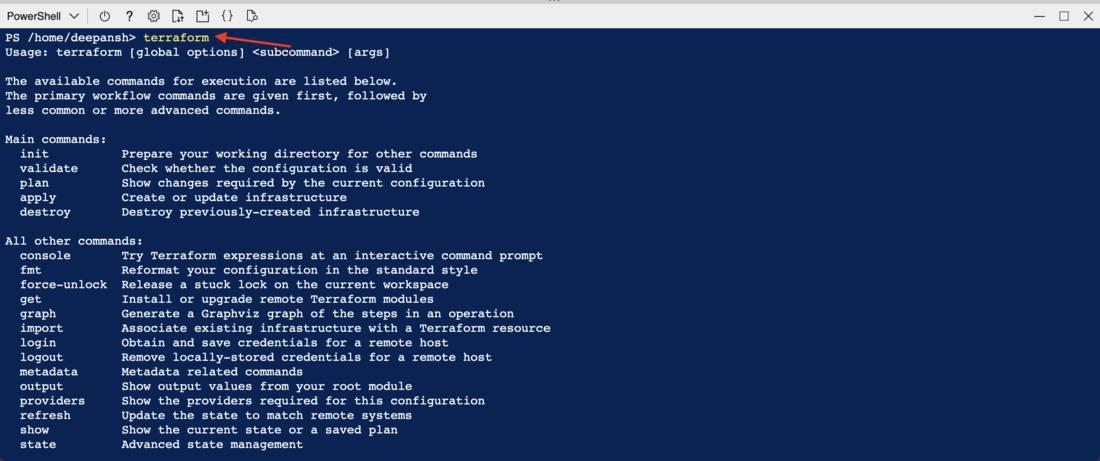

Step 2: Open Azure Cloud Shell. The first time you use it, you need to mount a storage account; if no storage account is present, create a new one. You can check that Terraform is available by running the command terraform in the Cloud Shell.

If it is installed, the Terraform CLI prints its usage help.

Step 3: Create a .tf file that contains the Terraform configuration for the Azure Data Factory and the storage resources its pipelines will work with. This file will later be executed in the Cloud Shell. In this example, the file is named terra.tf.

```hcl
# Configure the Azure provider.
provider "azurerm" {
  # The features block is required, even when it is left empty.
  features {}
}

# Define the resource group "devResourcegroup" in the "East US" region.
resource "azurerm_resource_group" "devRG" {
  name     = "devResourcegroup"
  location = "East US"
}

# Define the Azure Data Factory "devDF" within the resource group.
resource "azurerm_data_factory" "azuredf" {
  name                = "devDF"
  location            = azurerm_resource_group.devRG.location
  resource_group_name = azurerm_resource_group.devRG.name
}

# Define the source storage account within the resource group.
resource "azurerm_storage_account" "sourcestorage" {
  name                     = "sourcecodedf"
  location                 = azurerm_resource_group.devRG.location
  resource_group_name      = azurerm_resource_group.devRG.name
  account_replication_type = "GRS"
  account_tier             = "Standard"
}

# Define the destination storage account within the resource group.
resource "azurerm_storage_account" "destinationstorage" {
  name                     = "destinationcodedf"
  location                 = azurerm_resource_group.devRG.location
  resource_group_name      = azurerm_resource_group.devRG.name
  account_replication_type = "GRS"
  account_tier             = "Standard"
}

# Define a storage container within the source storage account.
resource "azurerm_storage_container" "containersource" {
  name                 = "coursecontainer"
  storage_account_name = azurerm_storage_account.sourcestorage.name
}

# Define a storage container within the destination storage account.
resource "azurerm_storage_container" "containerdestination" {
  name                 = "destinationcontainer"
  storage_account_name = azurerm_storage_account.destinationstorage.name
}
```
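The configuration above provisions the Data Factory and its storage, but it does not declare a pipeline resource itself. A minimal sketch of adding one could look like the following. It assumes an azurerm provider version in which azurerm_data_factory_pipeline accepts data_factory_id; the pipeline name devPipeline and the single Wait activity are placeholders chosen for illustration and are not part of the original configuration.

```hcl
# Hypothetical pipeline inside the "azuredf" Data Factory defined above.
resource "azurerm_data_factory_pipeline" "devpipeline" {
  name            = "devPipeline"
  data_factory_id = azurerm_data_factory.azuredf.id

  # activities_json expects a JSON string; jsonencode builds it from HCL.
  # A single Wait activity keeps the sketch free of dataset dependencies.
  activities_json = jsonencode([
    {
      name = "WaitBriefly"
      type = "Wait"
      typeProperties = {
        waitTimeInSeconds = 5
      }
    }
  ])
}
```

Appended to terra.tf, this block should show up as one additional resource in terraform plan. A real pipeline would replace the Wait activity with Copy or data flow activities that reference datasets and linked services.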

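Note: Storage account names and Data Factory names must be globally unique across Azure, and the resource group name must be unique within your subscription. Storage account names may contain only lowercase letters and numbers and must be 3 to 24 characters long. Replace the names in this example with your own unique values, otherwise terraform apply will fail with an error.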
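One way to reduce the chance of name collisions is to append a random suffix to the names. The sketch below assumes the hashicorp/random provider, which the original configuration does not use; the local value names are hypothetical.

```hcl
# Generate a short lowercase alphanumeric suffix (requires the random provider).
resource "random_string" "suffix" {
  length  = 6
  upper   = false
  special = false
}

# Hypothetical locals that combine the article's base names with the suffix.
# The results stay within the 24-character storage account name limit.
locals {
  source_account_name      = "sourcecodedf${random_string.suffix.result}"
  destination_account_name = "destinationcodedf${random_string.suffix.result}"
}
```

To use it, set name = local.source_account_name and name = local.destination_account_name in the two azurerm_storage_account resources, then run terraform init again so that the random provider is downloaded.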

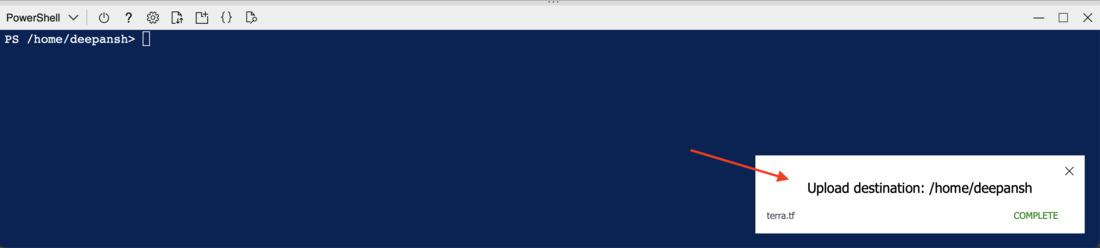

Step 4: Go to your Cloud Shell and upload the .tf file you created in Step 3. Use the upload/download file buttons to navigate to the terra.tf file and click upload.

Once the upload finishes, you can verify that the file is present using the ls command.

Step 5: Run the command terraform init. This initializes the Terraform working directory and downloads the provider plugins necessary for the configuration.

Step 6: After initialising, run terraform validate to check the configuration for syntax errors, and then run terraform plan to see a preview of the changes that will be applied.

Step 7: Finally, run the terraform apply command to apply the Terraform configuration and create the Azure Data Factory and its related resources. You will be prompted to enter a value; type yes and press Enter to confirm the execution of the plan.

After successful execution, Terraform prints an "Apply complete!" summary that lists how many resources were added, changed, and destroyed.

Step 8: Navigate to Resource Groups. Here, you will see the resources you just created including the Azure Data Factory.
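The same check can also be made from the shell. As a small sketch, assuming these output blocks are appended to terra.tf before terraform apply is run (the output names are illustrative), Terraform will print the identifiers of the created resources at the end of the apply:

```hcl
# Expose a few attributes of the resources defined in terra.tf.
output "resource_group_name" {
  value = azurerm_resource_group.devRG.name
}

output "data_factory_id" {
  value = azurerm_data_factory.azuredf.id
}

output "source_container_name" {
  value = azurerm_storage_container.containersource.name
}
```

Running terraform output afterwards prints these values again without changing any infrastructure.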

Basic Troubleshooting

I get the Error: building account: could not acquire access token to parse claims: running Azure CLI: exit status 1: ERROR: Failed to connect to MSI. Please make sure MSI is configured correctly.

The error points to a problem with the Managed Service Identity (MSI) authentication that Terraform uses to access Azure resources.

To refresh the account login and confirm that its permissions are valid, run az logout and then az login in the Azure CLI, and follow the sign-in instructions printed in the shell. After a successful login, run the command az account show; it prints the details of the active subscription in JSON format.

I get an error about a resource name that is already in use while trying to run the terraform apply command.

Use a unique name for your Storage Account, Data Factory, and other resources in Azure, then run terraform apply again.

Azure Data Factory Pipeline Using Terraform – FAQs

Is Terraform compatible with cloud providers other than Azure?

Yes, Terraform works with a variety of cloud providers, such as AWS and Google Cloud Platform (GCP). Terraform is a tool for managing infrastructure in various cloud environments.

Does using Terraform and Azure Data Factory come with a price?

Yes. The Azure resources that Terraform provisions, including Azure Data Factory, are billed by Azure according to how they are used and provisioned, and some of HashiCorp’s hosted Terraform offerings are paid as well. Review the pricing information on the Terraform and Azure websites and plan your infrastructure accordingly.

Is it possible to combine Azure Data Factory pipelines with external systems and other Azure services?

Yes, Azure Blob Storage, Azure SQL Database, and other Azure services can be integrated with Azure Data Factory using connectors and integration features. To interface with other systems, you may also leverage external APIs and custom activities.
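As an illustration of such an integration, a linked service is how a Data Factory holds its connection to a Blob Storage account. The sketch below reuses the Data Factory and storage accounts from Step 3; the linked service names are hypothetical, and connection-string authentication is assumed only to keep the example short.

```hcl
# Hypothetical linked service connecting the Data Factory to the source account.
resource "azurerm_data_factory_linked_service_azure_blob_storage" "sourcelink" {
  name              = "sourceBlobLink"
  data_factory_id   = azurerm_data_factory.azuredf.id
  connection_string = azurerm_storage_account.sourcestorage.primary_connection_string
}

# Hypothetical linked service connecting the Data Factory to the destination account.
resource "azurerm_data_factory_linked_service_azure_blob_storage" "destinationlink" {
  name              = "destinationBlobLink"
  data_factory_id   = azurerm_data_factory.azuredf.id
  connection_string = azurerm_storage_account.destinationstorage.primary_connection_string
}
```

Datasets and pipeline activities can then refer to these linked services by name. Note that connection strings end up in the Terraform state, so the state file should be protected accordingly.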

What are the best methods for cooperatively managing Azure Data Factory pipelines with Terraform code?

Best practices include keeping the Terraform code in a version control system such as Git, applying infrastructure as code (IaC) principles consistently, modularizing the Terraform code, and creating well-defined deployment pipelines for testing and production environments.
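For team use, storing the Terraform state remotely is a common companion to these practices, because it lets several people work against the same state. A hedged sketch using the azurerm backend follows; every name in it is a placeholder, and the resource group, storage account, and container are assumed to exist already.

```hcl
# Store Terraform state in an Azure Storage container shared by the team.
# All values below are hypothetical placeholders.
terraform {
  backend "azurerm" {
    resource_group_name  = "tfstate-rg"
    storage_account_name = "tfstatestorage"
    container_name       = "tfstate"
    key                  = "datafactory.dev.tfstate"
  }
}
```

After adding a backend block, terraform init must be run again so that Terraform can configure the backend and, if needed, migrate the existing local state.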
