How To Connect To Kafka Running In Docker?

Last Updated: 13 Feb, 2024

Docker and Kafka are two important tools in today’s digital world. Docker simplifies deployment by packaging applications into containers, while Kafka provides a distributed event-streaming platform for building real-time data pipelines and streaming applications. In this guide, I will first discuss what Docker is. Then I will discuss Kafka and its components. Finally, I will walk you through the steps to run Kafka in a Docker container and stream data in real time using some Go code.

What is Docker?

Docker is a lightweight, fast, and resource-efficient tool that wraps applications and their dependencies inside compact units called containers. Docker simplifies software packaging and deployment. While developing software, developers may be using Linux, Windows, or macOS. Because they work on different operating systems, they are more likely to run into errors that depend on the operating system. Docker lets developers build, test, and deploy the same container on any of these systems with far fewer compatibility issues.

What is Kafka?

Kafka is an open-source distributed event streaming platform. It is used to build real-time data pipelines and real-time data streaming applications. Let us understand this through an example. Think about what YouTube does. YouTube is a content streaming platform. Creators publish content, and anyone who wants to follow that content subscribes to the creator's channel. Kafka works in a similar way: producers publish messages, and consumers subscribe to receive them.

Kafka has four key components: topics, brokers, producers, and consumers. Topics are named streams to which real-time records are published. Brokers are the servers responsible for storing and managing those streams of records. Kafka follows a publish-subscribe model: producers publish messages to a topic, and consumers consume the messages from that topic in real time. Kafka also provides important features such as data replication, scalability, and fault tolerance, which make it an efficient and reliable data streaming platform. Kafka has various use cases: it can be used as a messaging system, to gather metrics from different locations, to track activity, to collect application logs, and more. Overall, Kafka is a fundamental tool that organizations use to leverage data for insights and decision making.
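To make the publish-subscribe model more concrete, here is a minimal in-memory sketch in Go. It is only a toy illustration and does not use Kafka at all: the Broker, Topic, and Consumer types and their methods are hypothetical names chosen for this example, not part of any Kafka client API. It shows a broker storing records in a topic, a producer appending to it, and a consumer reading from its own offset.

```go
package main

import "fmt"

// Topic is a toy stand-in for a Kafka topic: an append-only list of records.
// All names in this sketch are illustrative, not a real Kafka API.
type Topic struct {
	name    string
	records []string
}

// Broker stores topics and serves reads and writes against them.
type Broker struct {
	topics map[string]*Topic
}

// NewBroker returns a broker with no topics.
func NewBroker() *Broker {
	return &Broker{topics: make(map[string]*Topic)}
}

// Produce appends a record to the named topic (creating the topic if needed)
// and returns the offset at which the record was stored.
func (b *Broker) Produce(topic, value string) int {
	t, ok := b.topics[topic]
	if !ok {
		t = &Topic{name: topic}
		b.topics[topic] = t
	}
	t.records = append(t.records, value)
	return len(t.records) - 1
}

// Consumer remembers its own read position (offset) in one topic.
type Consumer struct {
	broker *Broker
	topic  string
	offset int
}

// Poll returns every record the consumer has not seen yet and advances
// its offset past them.
func (c *Consumer) Poll() []string {
	t, ok := c.broker.topics[c.topic]
	if !ok || c.offset >= len(t.records) {
		return nil
	}
	out := append([]string(nil), t.records[c.offset:]...)
	c.offset = len(t.records)
	return out
}

func main() {
	broker := NewBroker()
	broker.Produce("gfg-test-topic", "hello")
	broker.Produce("gfg-test-topic", "world")

	consumer := &Consumer{broker: broker, topic: "gfg-test-topic"}
	fmt.Println(consumer.Poll()) // [hello world]
	fmt.Println(consumer.Poll()) // [] because nothing new has arrived
}
```

A real Kafka broker adds partitions, replication, and persistence on top of this idea, but the roles of the four components stay the same.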

Components Of Kafka Running In Docker

Before moving on to the next section, make sure Docker and the following are installed. If not, follow the detailed GeeksforGeeks installation articles.

1. For Linux users:

- Golang (optional if you have written your Kafka producer-consumer pair in another programming language)

2. For Windows users:

- Golang (optional if you have written your Kafka producer-consumer pair in another programming language)

Steps To Connect Kafka Running In Docker

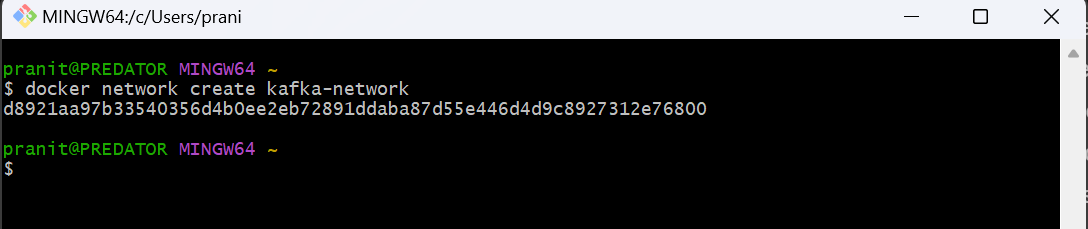

Step 1: First, create a Docker network.

docker network create kafka-network

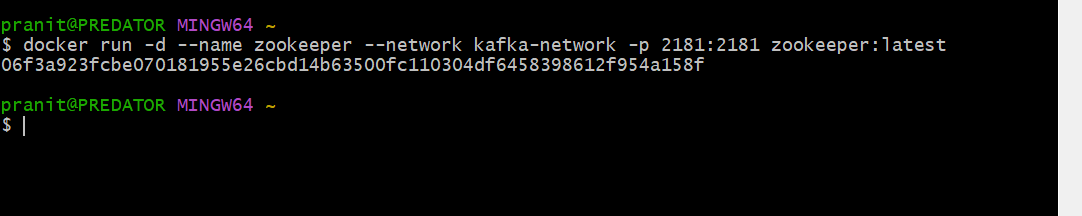

Step 2: Run the ZooKeeper container on the Docker network created in Step 1 (kafka-network).

docker run -d --name zookeeper --network kafka-network -p 2181:2181 zookeeper:latest

Step 3: Run the Kafka container on the Docker network created in Step 1.

docker run -d --name kafka --network kafka-network -p 9092:9092 \
  -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 \
  -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://localhost:9092 \
  -e KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR=1 \
  -e KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181 \
  wurstmeister/kafka:latest

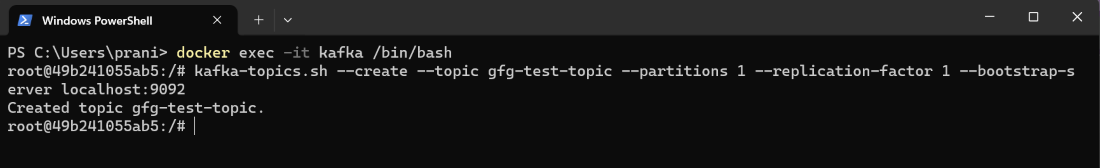

Step 4: Create a topic inside the Kafka container. To do this, first open a shell in the Kafka container and then create the topic. The Go programs in Step 6 expect the topic to be named gfg-test-topic.

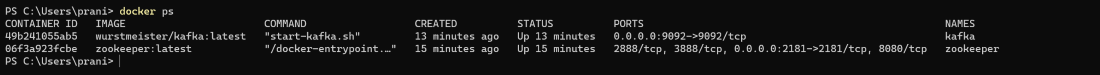

Step 5: Check that both containers are running (for example, with docker ps).

Step 6: Now create two separate directories, producer and consumer. Inside the producer directory, create a file named producer.go, and inside the consumer directory, create a file named consumer.go.

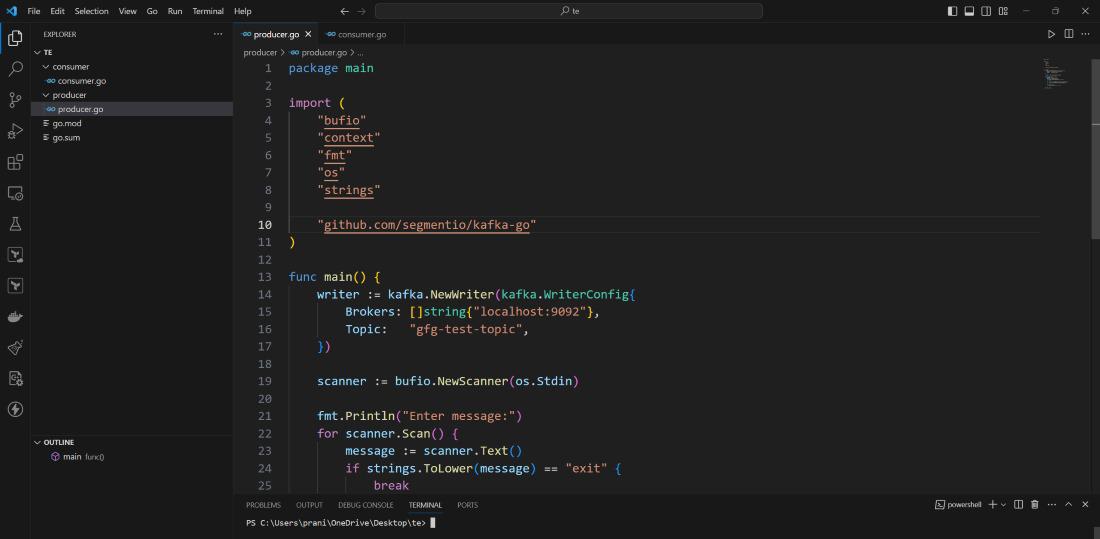

producer.go

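package main

import (
	"bufio"
	"context"
	"fmt"
	"os"
	"strings"

	"github.com/segmentio/kafka-go"
)

func main() {
	// Writer that publishes to the topic on the broker exposed at localhost:9092.
	writer := kafka.NewWriter(kafka.WriterConfig{
		Brokers: []string{"localhost:9092"},
		Topic:   "gfg-test-topic",
	})
	// Flush pending messages and release the connection on exit.
	defer writer.Close()

	// Read lines from standard input and send each one as a Kafka message.
	scanner := bufio.NewScanner(os.Stdin)
	fmt.Println("Enter message:")
	for scanner.Scan() {
		message := scanner.Text()
		// Typing "exit" (in any letter case) stops the producer.
		if strings.ToLower(message) == "exit" {
			break
		}
		err := writer.WriteMessages(context.Background(),
			kafka.Message{Value: []byte(message)},
		)
		if err != nil {
			fmt.Println("Error:", err)
		}
	}
}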

consumer.go

package main

import (
	"context"
	"fmt"

	"github.com/segmentio/kafka-go"
)

func main() {
	// Reader that consumes from the topic as a member of the group "my-group".
	reader := kafka.NewReader(kafka.ReaderConfig{
		Brokers: []string{"localhost:9092"},
		Topic:   "gfg-test-topic",
		GroupID: "my-group",
	})
	defer reader.Close()

	// Block until the next message arrives, print it, and repeat.
	for {
		msg, err := reader.ReadMessage(context.Background())
		if err != nil {
			panic(err)
		}
		fmt.Printf("Received message: %s\n", string(msg.Value))
	}
}

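After you have written the code, initialize a Go module in each directory if you have not already done so (for example, go mod init producer), then run the command below to download all the imported packages.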

go mod tidy

Step 7: Run the Go files in two different terminals, each from its own directory. Anything you type in the producer terminal will appear in the consumer terminal, so you can watch the data stream in real time.

go run producer.go

go run consumer.go

Conclusion

We started by learning what Docker is. Then we learned what Kafka is and why it is used. After that, we ran Kafka in a Docker container and created a topic inside the Kafka container. Finally, we wrote some simple Go code and ran it to experience real-time data streaming with the Kafka container.

Kafka – FAQs

What are the key components of Kafka?

Topics, brokers, consumers, and producers are the four key components of Kafka.

What is the importance of ZooKeeper in Kafka?

ZooKeeper keeps track of the brokers in a Kafka cluster. It is used for managing and coordinating them.

How does Kafka provide fault tolerance?

In Kafka, messages are replicated across multiple brokers, which provides redundancy and fault tolerance.

What is a Kafka consumer?

A Kafka consumer is a client that receives, or consumes, messages from a Kafka topic.

What is a Kafka producer?

Kafka producers are responsible for publishing messages to Kafka topics.
