The F1 score is an evaluation metric commonly used in classification tasks to measure the performance of a model. It combines precision and recall into a single value. In this article, we look in detail at how the F1 score is calculated and compare it with other metrics.

What is an F1 score?

The F1 score is calculated as the harmonic mean of precision and recall. A harmonic mean is a type of average calculated by dividing the number of values in a dataset by the sum of their reciprocals. The F1 score lies between 0 and 1, with 1 being the best possible value.

1. Precision: Precision represents the accuracy of positive predictions. It measures how often the model's positive predictions are correct. It is the number of true positive predictions divided by the total number of positive predictions (true positives + false positives).

Suppose there are 10 positive cases and 5 negative cases. The model flags 5 cases as positive, but only 4 of these are actually positive and 1 is negative. Precision is therefore 4/5 = 80%.

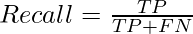

2. Recall (Sensitivity or True Positive Rate): Recall represents how well a model can identify actual positive cases. It is the number of true positive predictions divided by the total number of actual positive instances (true positives + false negatives). It measures the ability of the model to capture all positive instances.

Continuing the example above, although the precision is high (80%), the recall is poor: of the 10 actual positive cases, the model identified only 4. Recall is therefore 4/10 = 40%.
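The numbers in this example can be checked with a few lines of Python. This is only a minimal sketch: the variable names are chosen for illustration, and the false-positive and false-negative counts are derived from the figures given in the text.

```python
# Counts taken from the example above.
actual_positives = 10
predicted_positives = 5   # cases the model flagged as positive
true_positives = 4        # flagged cases that really are positive

# Derived counts.
false_positives = predicted_positives - true_positives   # 1
false_negatives = actual_positives - true_positives      # 6

precision = true_positives / (true_positives + false_positives)
recall = true_positives / (true_positives + false_negatives)

print(f"Precision: {precision:.2f}")  # 0.80
print(f"Recall:    {recall:.2f}")     # 0.40
```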

There is often an inverse relationship between precision and recall. Depending on the domain, either precision or recall may be the more important metric. In general, however, we want a model that performs well on both. This is where the F1 metric comes into the picture.

F1 score combines precision and recall into a single metric
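Written out, with P for precision, R for recall, and TP, FP and FN for the numbers of true positives, false positives and false negatives, the harmonic mean and its equivalent forms are:

$$
F1 = \frac{2}{\frac{1}{P} + \frac{1}{R}} = \frac{2 \cdot P \cdot R}{P + R} = \frac{2\,TP}{2\,TP + FP + FN}
$$

The last form follows from substituting P = TP/(TP + FP) and R = TP/(TP + FN) into the first.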

Why harmonic mean and not simply average?

The harmonic mean is the equivalent of the arithmetic mean for reciprocals of quantities that should be averaged by the arithmetic mean. More precisely, with the harmonic mean, you transform all your numbers to the “averageable” form (by taking the reciprocal), you take their arithmetic mean and then transform the result back to the original representation (by taking the reciprocal again).

If we look at precision and recall, their numerators are the same (true positives) but their denominators are different. So, to average these quantities, we first need to bring them to a common base. This is what the harmonic mean does.
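The difference between the two averages is easy to see numerically. The sketch below uses Python's standard `statistics` module with the precision and recall from the earlier example; the second, lopsided pair of values is made up purely for illustration.

```python
from statistics import harmonic_mean, mean

# Precision and recall from the earlier example (80% and 40%).
precision, recall = 0.8, 0.4

arithmetic = mean([precision, recall])
harmonic = harmonic_mean([precision, recall])   # this is the F1 score

print(f"Arithmetic mean: {arithmetic:.3f}")  # 0.600
print(f"Harmonic mean:   {harmonic:.3f}")    # 0.533

# A lopsided pair: the arithmetic mean still looks respectable,
# while the harmonic mean is pulled toward the weak value.
lopsided = [1.0, 0.01]
print(f"Arithmetic mean: {mean(lopsided):.3f}")           # 0.505
print(f"Harmonic mean:   {harmonic_mean(lopsided):.3f}")  # 0.020
```

The harmonic mean is never larger than the arithmetic mean, so a model cannot hide a poor recall behind a high precision, or the other way round.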

How to calculate F1 Score?

Let us first understand the confusion matrix. Then we will see how the F1 score is calculated from the confusion matrix for binary classification, and extend the concept to multi-class problems.

A confusion matrix is an N×N matrix (N being the number of classes) used in classification to evaluate the performance of a machine learning model. It summarizes the results of the model’s predictions on a set of data, comparing the predicted labels to the actual labels. For binary classification, the four components of a confusion matrix are:

- True Positive (TP): The number of instances correctly predicted as positive by the model. In a binary classification problem, TP is the number of actual positive instances that the model correctly predicted as positive.

- False Positive (FP): The number of instances incorrectly predicted as positive by the model. In a binary classification problem, FP is the number of actual negative instances that the model incorrectly predicted as positive.

- True Negative (TN): The number of instances correctly predicted as negative by the model. In a binary classification problem, TN is the number of actual negative instances that the model correctly predicted as negative.

- False Negative (FN): The number of instances incorrectly predicted as negative by the model. In a binary classification problem, FN is the number of actual positive instances that the model incorrectly predicted as negative.
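The four definitions above translate directly into a counting loop. The following is a minimal sketch in plain Python; the helper name `confusion_counts` and the label lists are made up for illustration.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, TN, FN for a binary problem (illustrative helper)."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1   # predicted positive, actually positive
            else:
                fp += 1   # predicted positive, actually negative
        else:
            if actual == positive:
                fn += 1   # predicted negative, actually positive
            else:
                tn += 1   # predicted negative, actually negative
    return tp, fp, tn, fn

# Made-up labels for illustration: 1 = positive, 0 = negative.
y_true = [1, 1, 1, 0, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
print(tp, fp, tn, fn)  # 3 1 2 2
```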

A 2×2 confusion matrix for binary classification, together with the metrics derived from it, is shown below.

These components are used to calculate various performance metrics for a classification model, including Precision, Recall, Accuracy and the F1 score.

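| | Actual Positive | Actual Negative | Total | Metric |
|---|---|---|---|---|
| Predicted Positive | TP | FP | Total Predicted Positive Cases | Precision = TP / (TP + FP) |
| Predicted Negative | FN | TN | Total Predicted Negative Cases | |
| Total | Total Actual Positive Cases | Total Actual Negative Cases | Total Cases | |
| Metric | Recall = TP / (TP + FN) | | Accuracy = (TP + TN) / (TP + FP + FN + TN) | F1 = (2 * P * R) / (P + R) |

Here P is Precision and R is Recall.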

Binary Classification

Let’s take an example of a dataset with 100 total cases, of which 90 are positive and 10 are negative. The model predicts 85 cases as positive, of which 80 are actually positive and 5 are actually negative. The confusion matrix would look like this:

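| | Actual Positive | Actual Negative | Total | Metric |
|---|---|---|---|---|
| Predicted Positive | 80 | 5 | 85 | Precision = 80/85 = 0.94 |
| Predicted Negative | 10 | 5 | 15 | |
| Total | 90 | 10 | 100 | |
| Metric | Recall = 80/90 = 0.89 | | Accuracy = (80 + 5)/100 = 85% | F1 = 0.91 |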
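The same figures can be reproduced from the four counts in this example (TP = 80, FP = 5, FN = 10, TN = 5). The function below is a minimal sketch with an illustrative name; it assumes none of the denominators is zero and does not guard against that case.

```python
def binary_metrics(tp, fp, fn, tn):
    """Return precision, recall, accuracy and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, accuracy, f1

precision, recall, accuracy, f1 = binary_metrics(tp=80, fp=5, fn=10, tn=5)

print(f"Precision: {precision:.3f}")  # 0.941
print(f"Recall:    {recall:.3f}")     # 0.889
print(f"Accuracy:  {accuracy:.3f}")   # 0.850
print(f"F1:        {f1:.3f}")         # 0.914
```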

Let us see how the F1 score helps when there is a class imbalance.

Example 1

Consider the case below, where only 9 of the 100 cases in the dataset are actually positive.

Precision 0.50

Recall 0.11

Accuracy 0.91

F1 0.18

In this case, if we optimize only for accuracy, the model can predict almost everything as negative and still reach an accuracy of 91%. However, the F1 score is low, which exposes the poor performance on the positive class.
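The extreme version of this situation, a model that predicts every case as negative, can be sketched as follows. The labels are synthetic (9 positives and 91 negatives, matching the class ratio above), the helper name is illustrative, and F1 is taken to be 0 when there are no true positives, which is a common convention assumed here.

```python
def accuracy_and_f1(y_true, y_pred, positive=1):
    """Accuracy and F1 for a binary problem (illustrative helper)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)
    fp = sum(1 for a, p in pairs if a != positive and p == positive)
    fn = sum(1 for a, p in pairs if a == positive and p != positive)
    accuracy = sum(1 for a, p in pairs if a == p) / len(pairs)
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0   # assumed convention: 0 if undefined
    return accuracy, f1

y_true = [1] * 9 + [0] * 91   # 9 positive cases out of 100
y_pred = [0] * 100            # the model predicts everything as negative

accuracy, f1 = accuracy_and_f1(y_true, y_pred)
print(f"Accuracy: {accuracy:.2f}")  # 0.91
print(f"F1:       {f1:.2f}")        # 0.00
```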

Example 2

However, one must also consider the opposite case, where the positive cases far outnumber the negative cases. In such a case, the model will tend to predict everything as positive.

Precision 0.92

Recall 0.99

Accuracy 0.91

F1 0.95

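Here we get a good F1 score and a high accuracy, even though a model that predicts almost everything as positive barely identifies the negative cases. In such situations the minority class is the one of interest, so the negative class should be treated as positive and the positive class as negative before computing the F1 score.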
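The effect of swapping the labels can be illustrated with a small sketch. The article does not give the confusion matrix for this example, so the counts below are an assumption, chosen only because they reproduce the reported precision (0.92), recall (0.99), accuracy (0.91) and F1 (0.95).

```python
def f1_from_counts(tp, fp, fn):
    """F1 written directly in terms of confusion-matrix counts."""
    return 2 * tp / (2 * tp + fp + fn)

# Assumed counts (not given in the article), consistent with the metrics above.
tp, fp, fn, tn = 90, 8, 1, 1

# F1 with the majority class treated as positive.
f1_majority = f1_from_counts(tp, fp, fn)

# Swap the roles: the minority (negative) class becomes the class of interest.
# Old TN become the new TP, old FN become the new FP, old FP become the new FN.
f1_minority = f1_from_counts(tp=tn, fp=fn, fn=fp)

print(f"F1, majority class as positive: {f1_majority:.2f}")  # 0.95
print(f"F1, minority class as positive: {f1_minority:.2f}")  # 0.18
```

Under these assumed counts, the second score reveals what the first one hides: the model is poor at identifying the minority class.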

Multiclass Classification

In a multi-class classification problem, where there are more than two classes, we calculate the F1 score per class rather than providing a single overall F1 score for the entire model. This approach is often referred to as the one-vs-rest (OvR) or one-vs-all (OvA) strategy.

For each class in the multi-class problem, a binary classification problem is created. Essentially, we treat one class as the positive class, and the rest of the classes as the negative class. Then we proceed to calculate the F1 score as outlined above. For a specific class, the true positives (TP) are the instances correctly classified as that class, false positives (FP) are instances incorrectly classified as that class, and false negatives (FN) are instances of that class incorrectly classified as other classes.

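Note that, for evaluation, this does not require training a separate binary classifier for each class. The one-vs-rest view is applied only to the existing predictions when counting TP, FP and FN: instances of the class in question are considered positive, and instances of all other classes are considered negative.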
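A minimal sketch of this per-class counting in plain Python is shown below. The function name and the three-class labels are made up for illustration, and a class with no true positives, false positives or false negatives is assigned an F1 of 0 by assumption.

```python
def per_class_f1(y_true, y_pred):
    """One-vs-rest F1 score for every class (illustrative helper)."""
    pairs = list(zip(y_true, y_pred))
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        # Treat `label` as the positive class and everything else as negative.
        tp = sum(1 for a, p in pairs if a == label and p == label)
        fp = sum(1 for a, p in pairs if a != label and p == label)
        fn = sum(1 for a, p in pairs if a == label and p != label)
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores

# Made-up three-class labels for illustration.
y_true = ["cat", "dog", "bird", "cat", "dog", "bird", "cat"]
y_pred = ["cat", "dog", "cat", "cat", "bird", "bird", "dog"]

scores = per_class_f1(y_true, y_pred)
for label, score in scores.items():
    print(f"{label}: {score:.3f}")
# bird: 0.500
# cat: 0.667
# dog: 0.500
```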

Once we have calculated the F1 score for each class, we might want to aggregate these scores to get an overall performance measure for the model. Common approaches include calculating a micro-average, macro-average, or weighted average of the individual F1 scores.

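- Micro-average involves calculating the total true positives, false positives, and false negatives across all classes and then computing precision, recall, and F1 score from these totals.

  - Micro F1 = $\frac{2 \cdot \text{Micro Precision} \cdot \text{Micro Recall}}{\text{Micro Precision} + \text{Micro Recall}}$

  - where

    - Micro Precision = $\frac{\sum_{i=1}^{N} TP_i}{\sum_{i=1}^{N} (TP_i + FP_i)}$

    - Micro Recall = $\frac{\sum_{i=1}^{N} TP_i}{\sum_{i=1}^{N} (TP_i + FN_i)}$

    - $TP_i$, $FP_i$ and $FN_i$ are the counts for the i-th of the N classes

- Macro-average calculates the average of F1 scores for each class without considering class imbalance.

  - Macro F1 = $\frac{1}{N} \sum_{i=1}^{N} F1_i$

  - where

    - N is the number of classes

    - $F1_i$ is the F1 score for the i-th class

- Weighted average considers class imbalance and weights the F1 scores by the number of instances in each class.

  - Weighted F1 = $\sum_{i=1}^{N} \text{Weight}_i \cdot F1_i$

  - where

    - $\text{Weight}_i$ = (number of true instances of class i) / (total number of instances)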
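The three averaging schemes can be sketched in plain Python as follows. The function name is illustrative, and the label lists are the ones used in the scikit-learn example later in this article, so the results can be compared with the output shown there.

```python
from collections import Counter

def f1_averages(y_true, y_pred):
    """Per-class, micro, macro and weighted F1 (illustrative helper)."""
    labels = sorted(set(y_true) | set(y_pred))
    support = Counter(y_true)            # true instances per class
    tp, fp, fn = Counter(), Counter(), Counter()
    for actual, predicted in zip(y_true, y_pred):
        if actual == predicted:
            tp[actual] += 1
        else:
            fp[predicted] += 1           # wrongly assigned to `predicted`
            fn[actual] += 1              # missed for `actual`

    def f1(t, p, n):
        denom = 2 * t + p + n
        return 2 * t / denom if denom else 0.0

    per_class = {c: f1(tp[c], fp[c], fn[c]) for c in labels}
    # Micro: pool the counts over all classes, then compute one F1.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: unweighted mean of the per-class scores.
    macro = sum(per_class.values()) / len(labels)
    # Weighted: mean of the per-class scores weighted by class frequency.
    weighted = sum(support[c] / len(y_true) * per_class[c] for c in labels)
    return per_class, micro, macro, weighted

y_true = [0, 1, 2, 2, 2, 2, 1, 0, 2, 1, 0]
y_pred = [0, 0, 2, 2, 1, 2, 1, 0, 1, 2, 1]

per_class, micro, macro, weighted = f1_averages(y_true, y_pred)
print(f"Micro:    {micro:.4f}")     # 0.5455
print(f"Macro:    {macro:.4f}")     # 0.5397
print(f"Weighted: {weighted:.4f}")  # 0.5628
```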

F1 Score vs ROC-AUC vs Accuracy

Besides the F1 score, there are other metrics, such as accuracy and AUC-ROC, that can be used to evaluate model performance. The choice of metric depends on the problem at hand; there is no one-size-fits-all answer. More often than not, a combination of metrics is used to gauge the overall performance of the model. Below are some general rules of thumb:

F1 vs Accuracy

If the problem is balanced and you care about both positive and negative predictions, accuracy is a good choice. If the problem is imbalanced (many more negative cases than positive ones) and the focus is on the positive cases, the F1 score is a good choice.

F1 vs AUC-ROC

AUC-ROC summarizes the ability of the model to discriminate between positive and negative instances across all classification thresholds, regardless of class imbalance, while the F1 score evaluates the performance of the model at one particular threshold. Hence, one might use F1 for class-specific evaluation at a chosen threshold and AUC-ROC for an overall assessment of the model.
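The difference between a threshold-dependent and a threshold-free metric can be seen in a small sketch. The scores and labels below are made up, both helper names are illustrative, and AUC is computed here through its pairwise-ranking interpretation (the fraction of positive/negative pairs in which the positive instance receives the higher score, with ties counted as half).

```python
def f1_at_threshold(y_true, scores, threshold):
    """F1 of the positive class after thresholding the scores."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for a, p in zip(y_true, y_pred) if a == 1 and p == 1)
    fp = sum(1 for a, p in zip(y_true, y_pred) if a == 0 and p == 1)
    fn = sum(1 for a, p in zip(y_true, y_pred) if a == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def roc_auc(y_true, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly."""
    pos = [s for a, s in zip(y_true, scores) if a == 1]
    neg = [s for a, s in zip(y_true, scores) if a == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Made-up labels and predicted probabilities for illustration.
y_true = [0, 0, 1, 0, 1, 1, 0, 1]
scores = [0.1, 0.3, 0.35, 0.4, 0.6, 0.7, 0.8, 0.9]

for threshold in (0.3, 0.5, 0.75):
    print(f"F1 at threshold {threshold}: "
          f"{f1_at_threshold(y_true, scores, threshold):.3f}")
# F1 at threshold 0.3: 0.727
# F1 at threshold 0.5: 0.750
# F1 at threshold 0.75: 0.333

print(f"AUC-ROC: {roc_auc(y_true, scores):.3f}")  # 0.750
```

The F1 score moves as the threshold changes, while the AUC is a single number for the whole set of scores.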

How to calculate F1 Score in Python?

The f1_score function from the sklearn.metrics module calculates the F1 score for binary and multi-class classification problems. The function takes two required parameters, y_true and y_pred, and an optional parameter average. Here’s an explanation of the function and its parameters:

    from sklearn.metrics import f1_score

    # General form of the call; y_true and y_pred hold the true and predicted labels
    f1_score(y_true, y_pred, average=None)

- y_true (array-like of shape (n_samples,)): This parameter represents the true labels or ground truth for the samples. It should be an array-like structure (e.g., a list or NumPy array) containing the true class labels for each sample in the dataset.

- y_pred (array-like of shape (n_samples,)): This parameter represents the predicted labels for the samples. Like y_true, it should be an array-like structure containing the predicted class labels for each sample.

- average (string or None, default='binary'): This optional parameter specifies how the F1 score is aggregated. The default, 'binary', reports the score for the positive class only and works only for binary targets, so for multi-class classification one of the following values must be passed explicitly:

  - None: Returns the F1 score for each class separately. In this case, the function returns an array of shape (n_classes,).

  - 'micro': Calculates the F1 score globally by considering total true positives, false positives, and false negatives across all classes.

  - 'macro': Calculates the F1 score for each class independently and then computes the unweighted average.

  - 'weighted': Calculates the F1 score for each class independently and then computes the average weighted by the number of true instances in each class.

    from sklearn.metrics import f1_score

    # True and predicted labels for a three-class problem (classes 0, 1 and 2)
    y_true = [0, 1, 2, 2, 2, 2, 1, 0, 2, 1, 0]
    y_pred = [0, 0, 2, 2, 1, 2, 1, 0, 1, 2, 1]

    # One F1 score per class, followed by the three aggregated versions
    f1_per_class = f1_score(y_true, y_pred, average=None)
    f1_micro = f1_score(y_true, y_pred, average='micro')
    f1_macro = f1_score(y_true, y_pred, average='macro')
    f1_weighted = f1_score(y_true, y_pred, average='weighted')

    print("F1 score per class:", f1_per_class)
    print("Micro-average F1 score:", f1_micro)
    print("Macro-average F1 score:", f1_macro)
    print("Weighted-average F1 score:", f1_weighted)

Output:

    F1 score per class: [0.66666667 0.28571429 0.66666667]
    Micro-average F1 score: 0.5454545454545454
    Macro-average F1 score: 0.5396825396825397
    Weighted-average F1 score: 0.5627705627705627

Frequently Asked Questions (FAQs)

1. Is the F1 score 0 or 1?

The F1 score ranges from 0 to 1. A value of 0 indicates the worst possible performance, and a value of 1 represents perfect precision and recall.

2. Why is the F1 score good for imbalanced data?

In imbalanced datasets, where one class significantly outnumbers the other, a classifier may perform well in terms of precision but poorly in terms of recall, or vice versa. The F1 score considers both precision and recall, striking a balance between these two metrics. It penalizes classifiers that favor one metric at the expense of the other.

3. How is the F1 score calculated for multi-class problems?

Each class is treated in turn as the positive class and all other classes as negative. The F1 score is calculated for each class, and these individual scores are often aggregated using methods like micro-average, macro-average, or weighted average.

4. What is the difference between accuracy and the F1 score?

Accuracy can be misleading in the presence of class imbalance. If the costs or consequences of false positives and false negatives are different and need to be carefully managed, the F1 score provides a more nuanced evaluation. If the class distribution is balanced and the goal is to maximize overall correctness, accuracy might be a reasonable choice.
