Few-shot learning in Machine Learning

Last Updated: 08 Jan, 2024

What is Few-shot learning?

Few-shot learning is commonly framed as a meta-learning problem, often described as learning to learn: the model is trained so that it can pick up a new task from very little data. It is like teaching the model to recognize things or do tasks, but instead of being given hundreds or thousands of labeled examples per class, it only needs a few. Few-shot learning focuses on enhancing the model’s capability to learn quickly and efficiently from new and unseen data.

Suppose you want a computer to recognize a new type of car, and you show it only a few pictures of that car instead of hundreds. The computer uses this small amount of information to recognize similar cars on its own. This process is known as few-shot learning.

Terminologies related to Few-shot learning

- k-ways: The number of classes a model needs to distinguish or recognize. (2-way means the model needs to classify or generate examples for 2 classes.)

- k-shots: The number of samples per class available during training or evaluation. (1-shot means 1 sample is provided to the model for each class.)

- Support Set: The small set of labeled samples given to the model for a task. Its size is S = (number of ways × number of shots); for example, a 2-way, 3-shot task has a support set of 6 samples.

- Query Set: Additional samples for the same task, also known as the target set. It consists of examples from the same categories present in the support set but distinct from the support examples. The model's predictions on the query set are used to evaluate its performance and how well it generalizes to new examples.

- Task: Support set + Query set. It is the recognition or classification problem that the model is trained to solve.

- Training: The process of exposing the model to many different tasks, each with its own support set. This teaches the model to handle new tasks efficiently. The goal is for the model to perform well on new, unseen tasks by classifying queries into the categories given in that task's support set.

- Test: The evaluation phase. The model is given new tasks that were not seen during training, and it is expected to recognize and classify each task's query set accurately, using its prior knowledge together with the support set provided.
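The terms above can be made concrete with a small sketch that builds one task from a labeled dataset. This is only an illustration under stated assumptions: the function name `sample_episode`, the `data_by_class` dictionary layout, and the toy string "images" are hypothetical and do not come from any particular library.

```python
import random

def sample_episode(data_by_class, n_way, k_shot, n_query, seed=None):
    """Sample one few-shot task: a support set and a query set.

    data_by_class is a hypothetical layout: {class label: [examples]}.
    """
    rng = random.Random(seed)
    # Pick the classes ("ways") that define this task.
    classes = rng.sample(sorted(data_by_class), n_way)
    support, query = [], []
    for label in classes:
        # Draw k_shot + n_query distinct examples so the two sets never overlap.
        picked = rng.sample(data_by_class[label], k_shot + n_query)
        support += [(x, label) for x in picked[:k_shot]]
        query += [(x, label) for x in picked[k_shot:]]
    return support, query

# Toy data: 5 classes with 10 placeholder examples each.
toy_data = {f"class_{c}": [f"img_{c}_{i}" for i in range(10)] for c in range(5)}

support, query = sample_episode(toy_data, n_way=2, k_shot=3, n_query=4, seed=0)
print(len(support))  # 6 = 2 ways * 3 shots
print(len(query))    # 8 = 2 ways * 4 query examples per class
```

During training, a function like this would be called repeatedly so that the model sees many different tasks; at test time, tasks would be drawn from classes held out from training.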

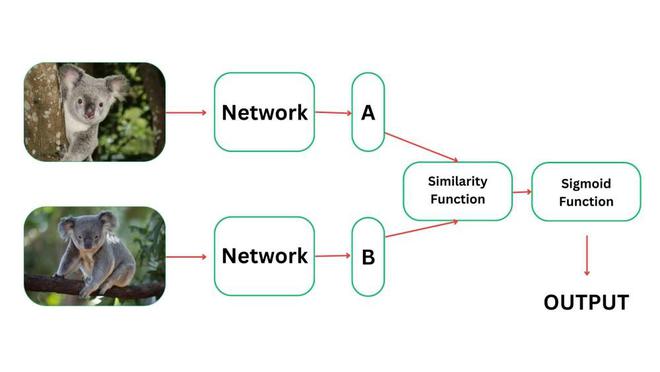

A common model for few-shot learning is a pair of identical networks whose outputs converge into a node called a similarity function. The result is then passed through a sigmoid function, which outputs whether the query is similar or different.

Since it works with a pair of identical networks, this model is called a “Siamese Network”.

Working of Few-shot learning

- Let us take a sample support dataset of two images ‘i’ and ‘j’.

- The pair of identical neural networks is represented as ‘f’.

- The embeddings produced by the neural networks are represented by ‘f(i)’ and ‘f(j)’ respectively. In this example, they are 64-dimensional vectors.

- The similarity function takes these two vectors and computes their element-wise difference.

- The similarity function then sums the squares of these differences to give a single number.

- After that, we pass this number to the sigmoid function, which has a weight ‘w’ and a bias ‘b’.

$$Y = \sigma\left(w \sum_{k} \left| f(i)_k - f(j)_k \right|^2 + b\right) \in [0, 1]$$

Here, Y represents the probability of the two images being similar.

- If the probability is less than the threshold value ‘t’, the output is 0, i.e., the images are of different classes.

- If the probability is equal to or greater than the threshold value ‘t’, the output is 1, i.e., the images belong to the same class.
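The similarity computation and thresholding described in this section can be sketched in a few lines of Python. The embeddings here are hand-written 4-dimensional toy vectors standing in for f(i) and f(j), and the values of `w`, `b`, and `t` are assumptions chosen for illustration; in a real Siamese network the embeddings come from the trained network and `w` and `b` are learned.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def similarity(emb_i, emb_j, w, b):
    """Y = sigmoid(w * sum_k (f(i)_k - f(j)_k)^2 + b)."""
    squared_distance = sum((a - c) ** 2 for a, c in zip(emb_i, emb_j))
    return sigmoid(w * squared_distance + b)

def same_class(emb_i, emb_j, w, b, t=0.5):
    """Return 1 if the similarity reaches the threshold t, else 0."""
    return 1 if similarity(emb_i, emb_j, w, b) >= t else 0

# Toy embeddings (assumed values, not produced by a real network).
f_i = [0.1, 0.9, 0.3, 0.5]
f_j = [0.1, 0.8, 0.3, 0.5]  # close to f_i
f_k = [0.9, 0.1, 0.8, 0.0]  # far from f_i

# Assumed parameters: w is negative so that a larger distance
# gives a lower probability of the pair being similar.
w, b = -4.0, 2.0

print(same_class(f_i, f_j, w, b))  # 1: treated as the same class
print(same_class(f_i, f_k, w, b))  # 0: treated as different classes
```

Note the sign of `w` in this sketch: since the sum of squared differences grows as the images become less alike, a negative weight is what makes Y behave as a similarity rather than a distance.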

Variations In Few-shot learning

- One shot learning: In one shot learning, the model is given one shot of each class, i.e., one example per class. It is difficult for a model to generalize with the help of only a single example, so errors are more likely than when several examples per class are available.

- Zero shot learning: In zero shot learning, the model needs to recognize classes that were not seen at training time. It has to find a relationship between seen and unseen classes on the basis of semantic relations or other auxiliary information.

- N shot learning: In n-shot learning, n examples per class are given to the model. This is more than one example but still far less than the data required for training in conventional supervised learning. This approach is more reliable than one shot learning for getting good results.

Different Algorithms for implementation

- Siamese Networks: In this approach, a model is a pair of identical networks. These networks are trained to minimize the distance between similar objects and maximize the distance between different objects. If the output similarity is less than the threshold value, the classes are different; if it is equal to or greater than the threshold value, the classes are the same.

- Model Agnostic Meta Learning (MAML): It is a meta-learning approach in which the model is trained to adapt to new tasks quickly. The model learns an initialization that can be fine-tuned with a few examples of a specific task. It is hard to train because the method is more complex. On few-shot classification benchmarks, it is often reported to perform worse than metric-based algorithms.

- Prototypical Networks: In prototype learning, the model learns a prototype for each class, computed as the mean of the embeddings of that class's instances. During training, the model minimizes the distance between the embeddings of instances and the prototype of their class. A query is assigned to the class with the nearest prototype. It is an effective way of implementing few-shot learning for classification.

- Triplet Networks: It is an extension of the Siamese Network. It is trained on triplets of instances, i.e., an anchor, a positive example, and a negative example. The model is trained to minimize the distance between the anchor and the positive example (which is similar to the anchor) and maximize the distance between the anchor and the negative example (which is different from the anchor).

- Matching Networks: A matching network starts by looking at the support set provided to the model. Then, when a new query arrives, it pays attention to the most similar support examples and compares their similarities and dissimilarities with the query. In this way, matching networks make the most of the few examples available.
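Two of the metric-based ideas above, class prototypes and the triplet objective, can be illustrated with a short sketch. It works on hand-written 2-dimensional toy embeddings rather than the output of a trained network, and the helper names (`class_prototypes`, `classify`, `triplet_loss`) and the margin value are assumptions made for this example. The triplet loss shown is one common margin-based form using plain Euclidean distance; other variants use squared distances.

```python
import math

def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def class_prototypes(support):
    """Average the support embeddings of each class into one prototype."""
    grouped = {}
    for embedding, label in support:
        grouped.setdefault(label, []).append(embedding)
    return {
        label: [sum(dim) / len(vectors) for dim in zip(*vectors)]
        for label, vectors in grouped.items()
    }

def classify(query_embedding, prototypes):
    """Assign the query to the class whose prototype is nearest."""
    return min(prototypes, key=lambda label: euclidean(query_embedding, prototypes[label]))

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Zero once the negative is at least `margin` farther away than the positive."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)

# Toy 2-way, 2-shot support set of (embedding, label) pairs.
support = [
    ([1.0, 1.0], "cat"), ([1.2, 0.8], "cat"),
    ([4.0, 4.0], "dog"), ([3.8, 4.2], "dog"),
]
prototypes = class_prototypes(support)

print(classify([1.1, 1.3], prototypes))                  # cat
print(triplet_loss([1.0, 1.0], [1.2, 0.8], [4.0, 4.0]))  # 0.0
```

In a real system, the embeddings would be produced by a neural network, and the loss would be used to update that network so that same-class embeddings move closer together.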

Real-World Applications of few shot learning

- Medical Imaging: In medical imaging, acquiring labelled data for rare diseases is difficult. Few-shot learning helps a model detect brain tumors and classify diseases with the few examples available.

- Robotics: Few-shot learning is applied in robotics for tasks like object recognition and manipulation. Robots can adapt to new tasks and environments with a minimal support set.

- Image Recognition: The model is trained to recognize images using zero shot learning, where it has to classify novel objects into classes that it has not seen before. It is the most common application of zero shot learning in the real world.

Advantages of Few-shot learning

- Reduced data requirement: Less data is required to train the model, unlike conventional supervised learning, where a large dataset is given to the model for training.

- Rapid adaptation to new tasks: Models trained using few-shot learning can adapt to new tasks easily using a few examples. This helps in dynamic environments where new tasks keep emerging.

- Flexibility of Model: The model is more flexible as it can easily generalize to new and evolving tasks.

- Less time required: Adapting a model to a new task takes less time in few-shot learning than in conventional supervised learning, due to the small size of the support set.

- Reduced amount of resources required: Fewer computational resources are needed to adapt the model, since only a few examples are processed for each new task.

- Good for specialized tasks: In domains where only a limited amount of data is available, few-shot learning can be a practical approach to building effective models.

- Adaptable to real-world scenarios: In real-world scenarios, where the environment is continuously changing, few-shot learning can be used to train a model that keeps learning from the few new examples it encounters.

Disadvantages of few-shot learning

- Less diverse representation: Due to the limited amount of data provided during training, the model will have a less diverse and less robust representation of the underlying data.

- Risk of overfitting: The model may memorize the examples given in the support set rather than learning the pattern behind them. This results in overfitting, where the model performs well on the support set but poorly on new, unseen data.

- Insufficient data for complex tasks: It can be difficult for a model to find the relationships between features from a limited number of examples. This can result in inaccurate analysis of complex tasks.

- Sensitive to noise: A model trained using few-shot learning will be sensitive to noise present in the support set. If the support set contains noisy or incorrect data, it will have a significant impact on the model's results.

- Inefficient for rare classes: A model will find it difficult to recognize rare classes due to the small number of examples available for them.
