How to Create an S3 Storage Lifecycle using Terraform?

Last Updated: 24 Apr, 2024

S3 stands for Amazon Simple Storage Service, a foundation of cloud storage solutions that offers high scalability, security, and durability. It serves as a core component of Amazon Web Services (AWS), handling the diverse storage needs of developers and enterprises around the world. At its core, S3 is built around the idea of objects stored inside buckets, where each object holds data along with a unique identifier and metadata.

Key terms such as bucket, object, lifecycle configuration, transition actions, and expiration actions lay the groundwork for understanding S3’s lifecycle management system. Through a structured process of bucket creation, lifecycle configuration, and rule definition, users can apply policies that transition objects between storage classes and automate their deletion based on predefined criteria.

In this article, we dig into the details of S3’s lifecycle management, explaining its importance, how it works, and its practical applications. Through clear examples and FAQs, we aim to give users a thorough understanding of how to use S3’s lifecycle management to improve storage efficiency and streamline data lifecycle workflows.

Primary Terminologies Related To Amazon S3 Life Cycle And Terraform

The following are the primary terminologies related to Amazon S3 life cycle and Terraform:

- Bucket: In Amazon S3, a bucket is a logical container for storing objects. Buckets are like folders in a file system, giving a way to organize and manage stored data. Each bucket has a name that is unique within the AWS S3 namespace, and objects are stored inside buckets.

- Object: An object is the fundamental unit of storage in Amazon S3. It consists of the data you want to store, along with metadata (for example, file size, content type, and last-modified timestamp) and a unique identifier known as a key. Objects can range from files and images to videos and backups.

- Expiration Actions: Expiration actions are defined inside the lifecycle configuration to determine when objects should be automatically deleted from the bucket. Objects can be configured to expire a specific number of days after they were created or last overwritten.

- Lifecycle Configuration: A lifecycle configuration is a set of rules for managing the lifecycle of objects inside an S3 bucket. These rules automate actions such as transitioning objects to different storage classes or deleting objects, based on specified criteria like age, prefix, tags, or object size.
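To make the terms above concrete, here is a minimal sketch of a lifecycle rule that targets objects by prefix and size. The resource names, the bucket name, the `logs/` prefix, and the day and size values are all assumptions chosen for illustration, not values from this article's walkthrough.

```hcl
# Hypothetical bucket used only for this illustration
resource "aws_s3_bucket" "logs_example" {
  bucket = "example-logs-bucket-name" # Assumption: replace with a globally unique name
}

resource "aws_s3_bucket_lifecycle_configuration" "logs_example" {
  bucket = aws_s3_bucket.logs_example.id

  rule {
    id     = "expire-large-logs"
    status = "Enabled"

    # Match only objects under logs/ that are larger than 128 KiB
    filter {
      and {
        prefix                   = "logs/"
        object_size_greater_than = 131072 # size in bytes
      }
    }

    # Expiration action: delete matching objects 90 days after creation
    expiration {
      days = 90
    }
  }
}
```

The `and` block is only needed when a rule combines more than one criterion; a rule with a single criterion can set it directly inside `filter`.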

What is the Amazon S3 storage life cycle?

The S3 storage lifecycle refers to the automated management of objects stored in Amazon S3 buckets over time. It allows users to define rules or policies that specify the actions to take on objects based on criteria such as age, prefix, or object size. The primary goal of S3 storage lifecycle management is to optimize storage costs, improve efficiency, and streamline data management workflows.

Lifecycle management is built on three elements:

- Lifecycle Configuration: Users define lifecycle rules for their own S3 buckets through lifecycle configuration settings. These rules specify the actions to take on objects as they age.

- Transition Actions: Transition actions determine what happens to objects as they reach specified stages in their lifecycle. For example, objects can be automatically moved to lower-cost storage classes like S3 Standard-IA or S3 Glacier to reduce storage costs.

- Expiration Actions: Expiration actions define when objects should be automatically deleted from the bucket. Objects can be configured to expire a specific number of days after they were created or last overwritten.

By implementing lifecycle policies, users can effectively manage the storage of their S3 objects without manual intervention. This helps optimize storage costs by moving less frequently accessed data to lower-cost storage tiers and automating the removal of obsolete or unnecessary objects. Overall, S3 storage lifecycle management improves efficiency, reduces operational overhead, and supports compliance with data retention policies.
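As a rough illustration of transition and expiration actions working together, the sketch below moves objects to Standard-IA, then to Glacier, and finally deletes them. The resource names, bucket name, and the 30/90/365-day schedule are assumptions for illustration only; choose values that match your own retention needs.

```hcl
# Hypothetical bucket used only for this illustration
resource "aws_s3_bucket" "archive_example" {
  bucket = "example-archive-bucket-name" # Assumption: replace with a globally unique name
}

resource "aws_s3_bucket_lifecycle_configuration" "archive_example" {
  bucket = aws_s3_bucket.archive_example.id

  rule {
    id     = "tiered-archive"
    status = "Enabled"

    filter {} # An empty filter applies the rule to every object in the bucket

    # Transition action 1: move to Standard-IA after 30 days
    transition {
      days          = 30
      storage_class = "STANDARD_IA"
    }

    # Transition action 2: move to Glacier after 90 days
    transition {
      days          = 90
      storage_class = "GLACIER"
    }

    # Expiration action: delete the object after 365 days
    expiration {
      days = 365
    }
  }
}
```

A single rule can carry several `transition` blocks, so one rule is enough to describe the whole path an object takes as it ages.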

How To Create an S3 Storage Lifecycle Rule using Terraform: A Step-By-Step Guide

Step 1: Log in to the AWS Console

- After logging in, connect to a terminal where you will run the Terraform commands.

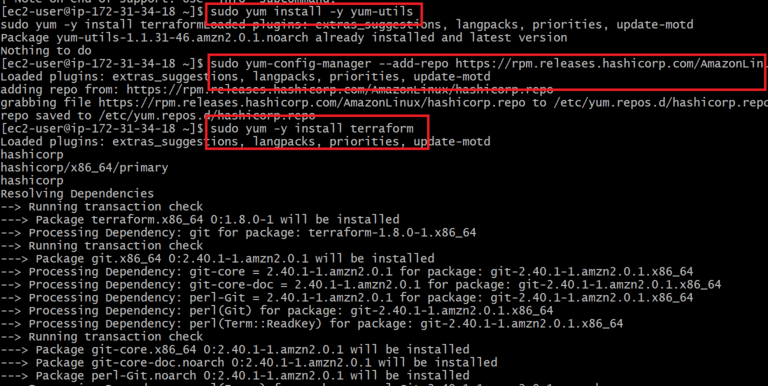

Step 2: Install Terraform

- This guide uses Terraform, so it must be installed first.

- Go to the official Terraform page and copy the package installation commands, or run the commands below (they use the HashiCorp repository for Amazon Linux):

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo https://rpm.releases.hashicorp.com/AmazonLinux/hashicorp.repo

sudo yum -y install terraform

Step 3: Create a file for Terraform Configuration

- Create a file with a .tf extension and put the Terraform configuration in it.

- This configuration creates an S3 bucket and defines a lifecycle policy for that bucket.

# Create S3 bucket
# Note: bucket names are globally unique, so replace "sadamb" with your own name
resource "aws_s3_bucket" "sadamb" {
  bucket = "sadamb"
  # The inline "acl" argument is deprecated in AWS provider v4 and later.
  # New buckets are private by default, so it is omitted here.
}

# Define lifecycle policy
resource "aws_s3_bucket_lifecycle_configuration" "example_lifecycle" {
  bucket = aws_s3_bucket.sadamb.id # Reference the S3 bucket resource

  rule {
    id     = "rule1"
    status = "Enabled" # Set to "Disabled" to keep the rule without running it

    filter {
      prefix = "" # You can set your prefix here; "" matches all objects
    }

    # Transition rule
    transition {
      days          = 30 # Update the transition days as per your requirement
      storage_class = "GLACIER"
    }

    # Expiration rule
    expiration {
      days = 60 # Update the expiration days as per your requirement
    }
  }
}

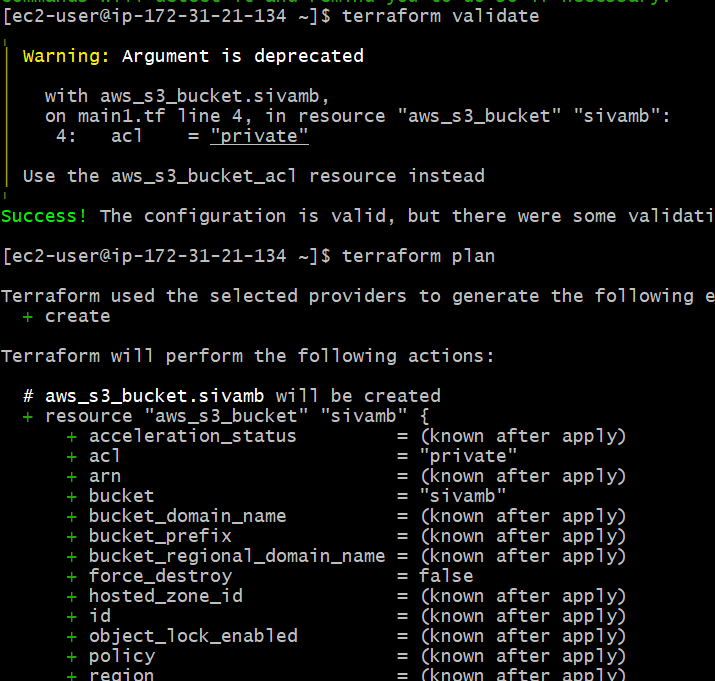
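The configuration above does not declare a provider, so Terraform falls back to whatever region and credentials it finds in the environment. A hedged sketch of an explicit provider setup is shown below; the region value is an assumption, and the version constraint reflects that the `aws_s3_bucket_lifecycle_configuration` resource was introduced in version 4 of the AWS provider.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.0" # aws_s3_bucket_lifecycle_configuration requires provider v4 or later
    }
  }
}

provider "aws" {
  region = "us-east-1" # Assumption: replace with the region you want the bucket in
}
```

Credentials are not set here on purpose; the provider can pick them up from environment variables, a shared credentials file, or an instance role.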

Step 4: Execute Terraform Commands

- Now run the Terraform commands to create the infrastructure.

- When we run terraform init, Terraform downloads the provider plugins required to create the infrastructure.

terraform init

terraform validate

terraform plan

terraform apply --auto-approve

Terraform init, validate, and plan

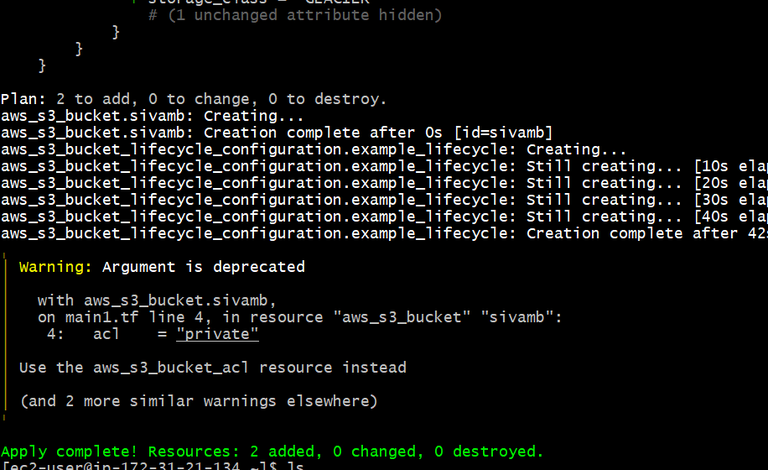

Terraform apply

- Run the terraform apply command to create the resources defined in the file.

- The following screenshot shows the successful creation of the resources defined in the Terraform file.

- Terraform apply completed successfully.

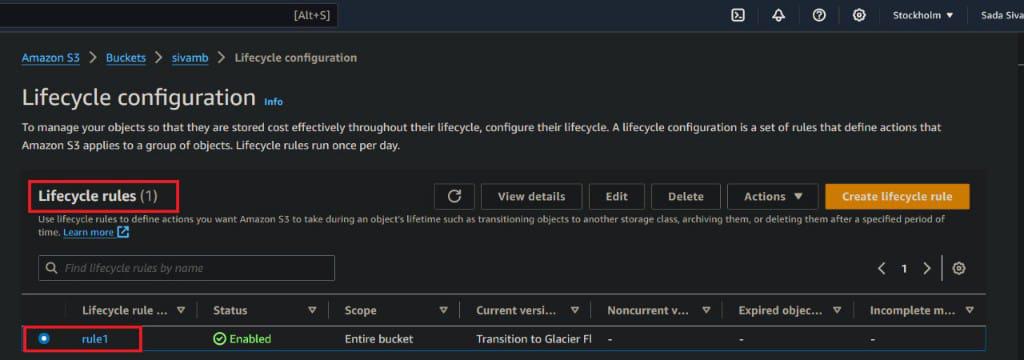

Step 5: Verify Amazon S3 Life Cycle

Now go to the AWS Console and navigate to the S3 dashboard. Check the bucket list; the newly created S3 bucket should appear there.

- Click on the S3 bucket and open the Management tab, where the lifecycle rule is listed.

- Click on View Lifecycle Configuration to see the lifecycle configuration of the S3 bucket.
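The same check can also be done from the terminal. As a small sketch, the output block below could be added to the .tf file from Step 3; it reuses the `example_lifecycle` resource name from that file, while the output name `lifecycle_rule_ids` is a hypothetical name chosen here.

```hcl
# Expose the IDs of the lifecycle rules managed by Terraform
output "lifecycle_rule_ids" {
  description = "IDs of the lifecycle rules attached to the bucket"
  value       = aws_s3_bucket_lifecycle_configuration.example_lifecycle.rule[*].id
}
```

After another terraform apply, running terraform output lifecycle_rule_ids should list rule1 if the rule was created as defined.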

Conclusion

The S3 storage lifecycle is a feature of Amazon Simple Storage Service (S3) that enables users to automate the management of objects stored in S3 buckets over time. It involves defining rules or policies that specify the actions to take on objects based on criteria such as age, prefix, or object size. These actions include transitioning objects to different storage classes and deleting objects.

The S3 storage lifecycle offers several advantages to users. It optimizes storage costs by automatically moving objects to cheaper storage tiers as they age, ensuring efficient resource use. It also streamlines data management workflows by automating tasks such as archiving and deletion, reducing manual intervention and operational overhead. By implementing lifecycle policies, users can support compliance with data retention policies and maintain an efficient storage environment. Overall, the S3 storage lifecycle improves the scalability, durability, and cost-effectiveness of storage solutions for businesses and developers using Amazon S3. The key is to align the lifecycle rules with your organizational needs and periodically review their impact, ensuring they serve your evolving requirements.

Creating Amazon S3 Storage Life Cycle Using Terraform – FAQs

Can I apply different lifecycle policies to different objects within the same bucket?

Yes. A lifecycle configuration is attached to a bucket, but it can contain multiple rules, and each rule can use a filter (such as a prefix, tags, or object size) to target a different subset of objects.

Will lifecycle policies affect existing objects in the bucket?

Yes, lifecycle policies apply retroactively to existing objects in the bucket that meet the defined criteria.

Can I suspend or modify lifecycle policies after they’ve been applied?

Yes, you can modify or disable lifecycle policies at any time through the AWS Management Console or programmatically using the AWS SDKs.
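When the policy is managed with Terraform, one way to suspend a rule is to change its status and re-apply. The sketch below assumes the bucket and rule from Step 3 of this article and changes only the `status` value; it is meant as an edit to that existing resource, not as an additional resource.

```hcl
resource "aws_s3_bucket_lifecycle_configuration" "example_lifecycle" {
  bucket = aws_s3_bucket.sadamb.id

  rule {
    id     = "rule1"
    status = "Disabled" # Suspends the rule; set back to "Enabled" to resume it

    filter {
      prefix = ""
    }

    transition {
      days          = 30
      storage_class = "GLACIER"
    }

    expiration {
      days = 60
    }
  }
}
```

Running terraform plan before terraform apply shows the status change as an in-place update, which is a convenient way to confirm that nothing else in the rule is being altered.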

Can I test lifecycle policies before applying them?

S3 does not provide a built-in simulator for lifecycle rules. A common approach is to try a rule on a test bucket or on a narrow prefix first, and to review the planned changes with terraform plan before applying them.

Are there any additional costs associated with using S3 lifecycle policies?

There is no charge for creating a lifecycle configuration itself. However, S3 charges for the transition requests that move objects between storage classes, and the destination storage classes have their own storage costs and, in some cases, minimum storage duration charges.
