Time series data is one of the most challenging tasks in machine learning as well as the real-world problems related to data because the data entities not only depend on the physical factors but mostly on the chronological order in which they have occurred. We can forecast a target value in the time series based on a single feature that is univariate, and two features that are bivariate or multivariate but in this article, we will learn how to perform univariate forecasts on the Rainfall dataset that has been taken from Kaggle.

What is Univariate Forecasting?

Univariate forecasting is commonly used when you want to make prediction values of a single variable, especially when there are historical data points available for that variable. It’s a fundamental and widely applicable technique in fields like economics, finance, weather forecasting, and demand forecasting in supply chain management.

For more complex forecasting tasks where multiple variables or external factors may have an impact, multivariate forecasting techniques are used. These models take into account multiple variables and their interactions for making predictions.

Key Concepts of Univariate Forecasting

- Trend: A time series’s long-term movement or direction is represented by its trend. It displays the fundamental pattern in the data, such as values rising or falling over time. Determining and simulating the trend is essential to comprehending the variable’s overall trajectory and producing precise forecasts.

- Seasonality: In a time series, seasonality is the term used to describe periodic fluctuations or patterns that appear at regular intervals. For instance, seasonal patterns are frequently seen in retail sales because of weather-related factors or holidays. Taking seasonality into consideration is crucial for identifying reoccurring trends and modifying forecasts appropriately.

- Stationarity: When a time series’ statistical characteristics, like its mean and variance, don’t change over time, it’s considered stationary. Since non-stationary data can produce inaccurate predictions, stationarity is a crucial premise in many forecasting models.

- Time Series Data: Time series data, or a series of observations or measurements taken over time at regular intervals, are the subject of univariate forecasting. Sales numbers, temperature readings, GDP growth rates, and stock prices are a few examples.

Techniques of Univariate Forecasting

Several methods are used in univariate time series analysis to comprehend, model, and predict the behavior of a single variable over time. In univariate time series analysis, the following methods are frequently employed:

- Autoregression(AR): It makes use of the correlation between an observation and a predetermined number of lag observations (earlier time intervals).

- Moving Average(MA): It uses a moving average model applied to lagged observations to model the relationship between an observation and a residual error.

- Autoreggresive Integrated Moving Average(ARIMA): It makes the time series stationary (i.e., the data has a consistent mean and variance over time) by combining the AR and MA methods and accounting for the differencing of raw observations.

- Seasonal Autoreggresive Integrated Moving Average(SARIMA): It extends ARIMA to take the time series data’s seasonal component into consideration.

- Exponential Smoothning(ETS): It uses a weighted average of historical observations to forecast the next time point, giving more weight to recent observations.

- Long Short-Term Memory(LSTM): A kind of RNN that is specifically made to identify patterns in time series data over extended periods of time.

Implementation of Univariate Forecasting

Importing Libraries

Python3

import pandas as pd

import numpy as np

import seaborn as sb

import matplotlib.pyplot as plt

import statsmodels.api as sm

import warnings

warnings.filterwarnings('ignore')

|

Python libraries make it very easy for us to handle the data and perform typical and complex tasks with a single line of code.

- Pandas – This library helps to load the data frame in a 2D array format and has multiple functions to perform analysis tasks in one go.

- Numpy – Numpy arrays are very fast and can perform large computations in a very short time.

- Matplotlib/Seaborn – This library is used to draw visualizations.

- Sklearn – This module contains multiple libraries having pre-implemented functions to perform tasks from data preprocessing to model development and evaluation.

Loading Dataset

Python3

df = pd.read_csv('Rainfall_data.csv')

df.head()

|

Output:

Year Month Day Specific Humidity Relative Humidity Temperature \

0 2000 1 1 8.06 48.25 23.93

1 2000 2 1 8.73 50.81 25.83

2 2000 3 1 8.48 42.88 26.68

3 2000 4 1 13.79 55.69 22.49

4 2000 5 1 17.40 70.88 19.07

Precipitation

0 0.00

1 0.11

2 0.01

3 0.02

4 271.14

Here, in this code we are loading the dataset into the pandas data frame so, that we can explore the different aspects of the dataset.

Shape of the dataframe

Output:

(252, 7)

The DataFrame “df”‘s row and column counts are returned by this code.

Data Information

Output:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 252 entries, 0 to 251

Data columns (total 7 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Year 252 non-null int64

1 Month 252 non-null int64

2 Day 252 non-null int64

3 Specific Humidity 252 non-null float64

4 Relative Humidity 252 non-null float64

5 Temperature 252 non-null float64

6 Precipitation 252 non-null float64

dtypes: float64(4), int64(3)

memory usage: 13.9 KB

By using the df.info() function we can see the content of each column and the data types present in it along with the number of null values present in each column.

Describing the data

Output:

count mean std min 25% \

Year 252.0 2010.000000 6.067351 2000.00 2005.0000

Month 252.0 6.500000 3.458922 1.00 3.7500

Day 252.0 1.000000 0.000000 1.00 1.0000

Specific Humidity 252.0 14.416746 4.382599 5.74 10.0100

Relative Humidity 252.0 67.259524 17.307101 34.69 51.8450

Temperature 252.0 16.317262 6.584842 4.73 10.8650

Precipitation 252.0 206.798929 318.093091 0.00 0.4025

50% 75% max

Year 2010.000 2015.000 2020.00

Month 6.500 9.250 12.00

Day 1.000 1.000 1.00

Specific Humidity 15.200 18.875 20.57

Relative Humidity 66.655 84.610 92.31

Temperature 16.915 22.115 29.34

Precipitation 11.495 353.200 1307.43

The DataFrame df is described statistically via the df. describe() function. To provide a preliminary understanding of the data’s central tendencies and distribution, it includes important statistics such as count, mean, standard deviation, and minimum, and maximum values for each numerical column.

Exploratory Data Analysis

EDA is an approach to analyzing the data using visual techniques. It is used to discover trends, and patterns, or to check assumptions with the help of statistical summaries and graphical representations. While performing the EDA of this dataset we will try to look at what is the relation between the independent features that is how one affects the other.

Python3

df['Date'] = pd.to_datetime(df['Year'].astype(str) + '-' + df['Month'].astype(str) + '-' + df['Day'].astype(str),

format='%Y-%m-%d')

df['DayOfWeek'] = df['Date'].dt.dayofweek

df['Month'] = df['Date'].dt.month

df['Quarter'] = df['Date'].dt.quarter

df['Year'] = df['Date'].dt.year

cat_cols = ['DayOfWeek', 'Month', 'Quarter', 'Year']

for col in cat_cols:

df[['Precipitation', col]].groupby(col).mean().plot.bar()

plt.title(f'Mean Precipitation by {col}')

plt.show()

|

Output:

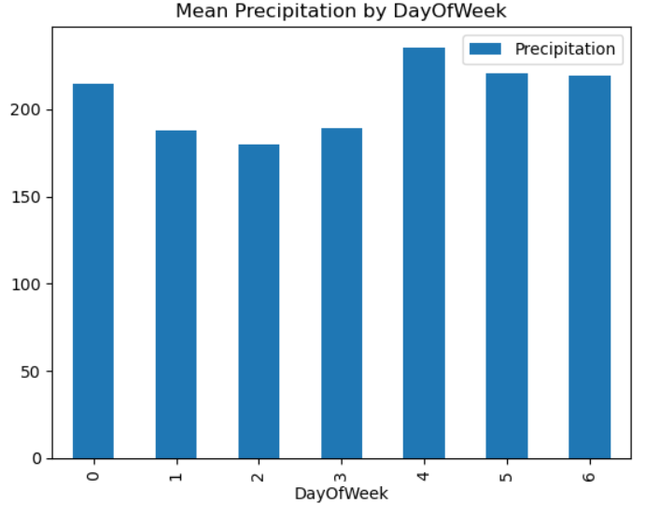

DAY

Interpretation:

- The x-axis represents each day of the week (0 to 6, where 0 is Monday and 6 is Sunday).

- The y-axis represents the mean precipitation for each respective day of the week.

- Analyze which days of the week tend to have higher or lower mean precipitation based on the bars’ heights. So, on the 5th day of the week the precipitation rate is higher than the other days.

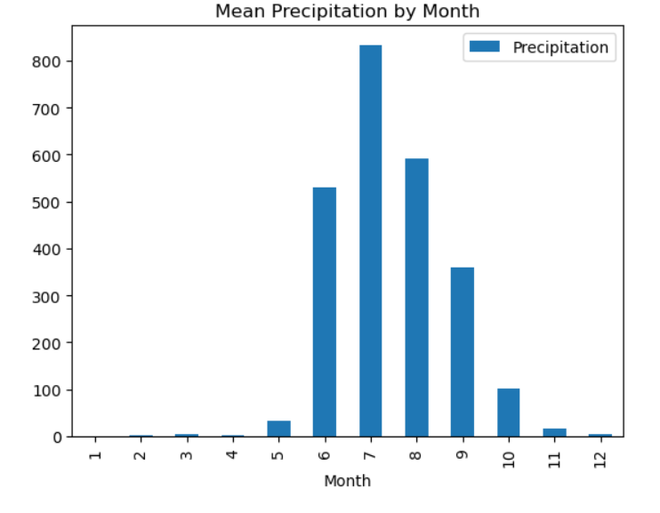

MONTH

Interpretation:

- The x-axis represents each month of the year (1 to 12).

- The y-axis represents the mean precipitation for each respective month.

- Identify patterns in precipitation across different months. Some months may experience more precipitation than others. The precipitation was more in the 7th month.

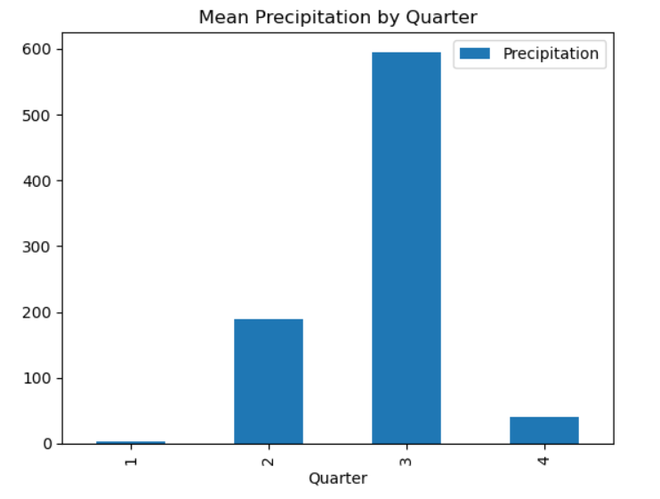

QUARTER

Interpretation:

- The x-axis represents each quarter of the year (1 to 4).

- The y-axis represents the mean precipitation for each respective quarter.

- Explore seasonal variations by examining how precipitation averages differ across the quarters. This interprets that the precipitation was more in the 3rd quarter as compared to the other three quarters(1st, 2nd, and 4th).

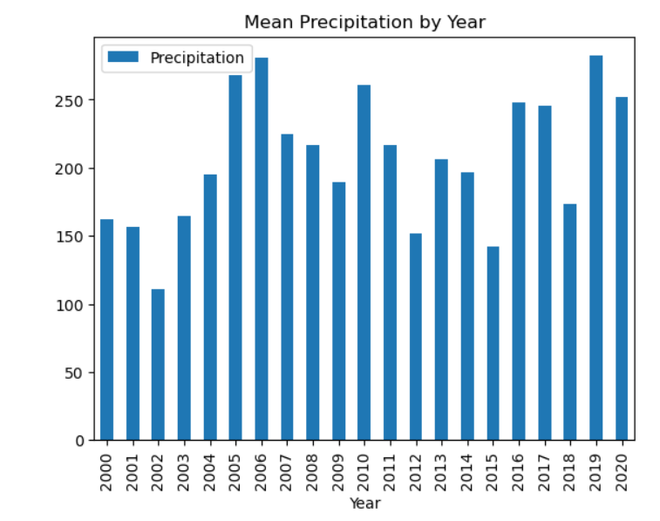

YEAR

Interpretation:

- The x-axis represents each year.

- The y-axis represents the mean precipitation for each respective year.

- Evaluate any trends or changes in mean precipitation over the years. This interpretation shows that precipitation was more in 2019 as compared to the other years.

Using a time series dataset with rainfall data, this code does feature engineering. It takes data from the ‘Date’ column and adds additional temporal elements like ‘DayOfWeek,’ ‘Month,’ ‘Quarter,’ and ‘Year’. Exploratory data analysis (EDA) is then carried out, with an emphasis on the mean precipitation for every unique value of the newly-engineered temporal features. To visually evaluate the average precipitation patterns over the course of a week, month, quarter, and year, the code iterates through the designated categories columns and creates bar charts. By assisting in the discovery of possible temporal trends and patterns in the rainfall data, these visualizations enable additional understanding of the temporal features of the dataset.

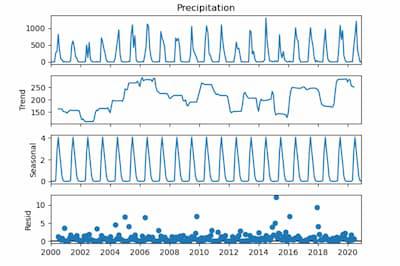

Seasonal Decomposition

A statistical technique used in time series analysis to separate the constituent parts of a dataset is called seasonal decomposition. Three fundamental components of the time series are identified: trend, seasonality, and residuals. The long-term movement or direction is represented by the trend, repeating patterns at regular intervals are captured by seasonality, and random fluctuations are captured by residuals. By separating the effects of seasonality from broader trends and anomalies, decomposing a time series helps to comprehend the specific contributions of various components, enabling more accurate analysis and predictions.

Python3

ts = df.set_index('Date')['Precipitation'] + 0.01

result = seasonal_decompose(ts, model='multiplicative', period=12)

result.plot()

plt.show()

|

Output:

Seasonal Decomposition

For Seasonal Component:

- The upper part of the graph represents the seasonal component.

- The x-axis corresponds to the time, usually in months given the specified period=12.

- The y-axis represents the magnitude of the seasonal variations.

For Trend Component:

- The middle part of the graph represents the trend component.

- The x-axis corresponds to the time, reflecting the overall trend across the entire time series.

- The y-axis represents the magnitude of the trend.

For Residual Component:

- The bottom part of the graph represents the residual component (also known as the remainder).

- The x-axis corresponds to the time.

- The y-axis represents the difference between the observed values and the sum of the seasonal and trend components.

Using a time series (‘ts’) that represents precipitation data, the function performs seasonal decomposition. A little constant is added to account for possible problems with zero or negative values. The code consists of three parts: trend, seasonality, and residuals, and it uses a multiplicative model with a 12-month seasonal period. The resulting graphic helps identify long-term trends and recurrent patterns in the precipitation data by providing a visual representation of these elements.

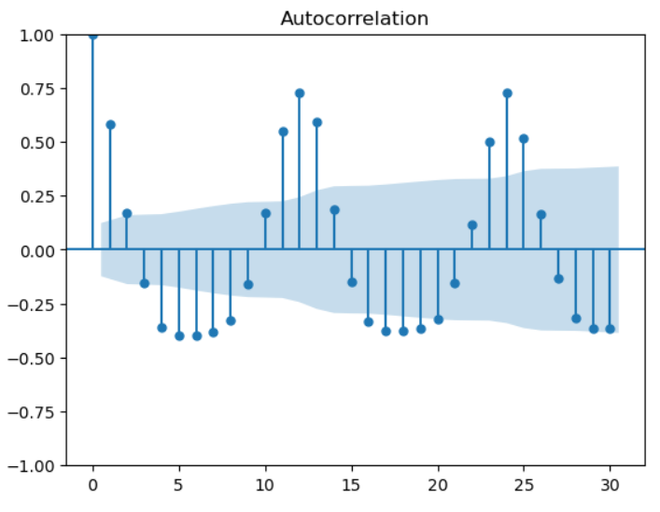

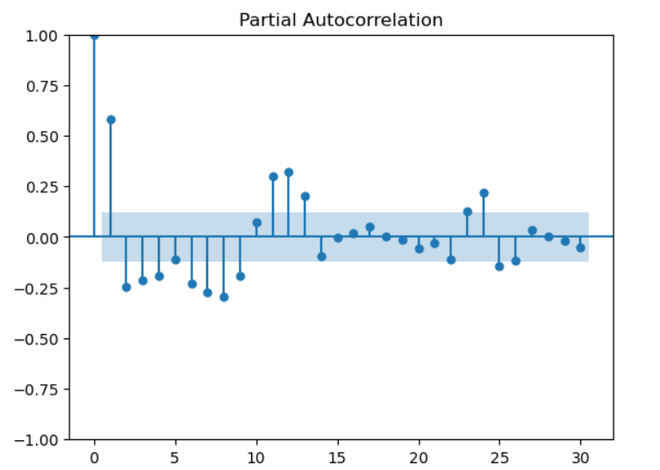

Autocorrelation and Partial Autocorrelation Plots

Autocorrelation: A time series’ association with its lag values is measured by autocorrelation. Every lag is correlated, and peaks in an autocorrelation diagram show high correlation at particular delays. By revealing recurring patterns or seasonality in the time series data, this aids in understanding its temporal structure and supports the choice of suitable model parameters for time series analysis.

Partial Autocorrelation: When measuring a variable’s direct correlation with its lags, partial autocorrelation eliminates the impact of intermediate delays. Significant peaks in a Partial Autocorrelation Function (PACF) plot indicate that a particular lag has a direct impact on the current observation. It helps to capture the distinct contribution of each lag by assisting in the appropriate ordering of autoregressive components in time series modeling.

Python3

plot_acf(ts, lags=30)

plot_pacf(ts, lags=30)

plt.show()

|

Output:

Autocorrelation

Interpretation:

The ACF measures the correlation between a time series and its lagged values at different time intervals.

- In the ACF plot:

- The x-axis represents the number of lags or time intervals.

- The y-axis represents the correlation coefficient.

- Interpretation:

- Points above the blue shaded region are considered statistically significant.

- Positive lags indicate a positive correlation between the current observation and past observations at that lag.

- Negative lags indicate a negative correlation.

Partial Autocorrelation

Interpretation:

The PACF measures the correlation between a time series and its lagged values, controlling for the effects of other lags.

- In the PACF plot:

- The x-axis represents the number of lags or time intervals.

- The y-axis represents the partial correlation coefficient.

- Interpretation:

- Points above the blue shaded region are considered statistically significant.

- The partial autocorrelation at a specific lag represents the correlation between the current observation and past observations at that lag, excluding the influence of intermediate lags.

- It helps identify the direct relationship between the current observation and a specific lag.

For a time series, “ts,” which represents precipitation data, the provided code creates plots of the Autocorrelation Function (ACF) and Partial Autocorrelation Function (PACF). The correlation between the time series and its lag values is displayed in these charts, which are restricted to 30 lags. The ACF plot’s peaks suggest strong connections at particular lags that may be seasonal. Finding appropriate autoregressive terms for time series modeling is made easier by the PACF plot, which illustrates the direct impact of each lag on the current observation. These plots serve as a general reference for selecting model parameters and identifying temporal patterns.

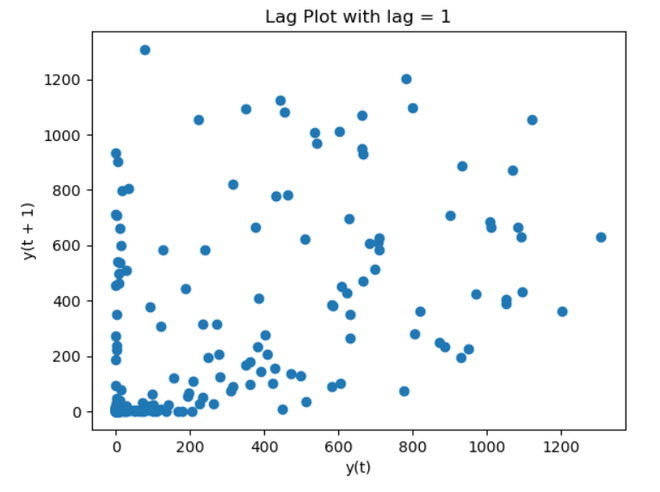

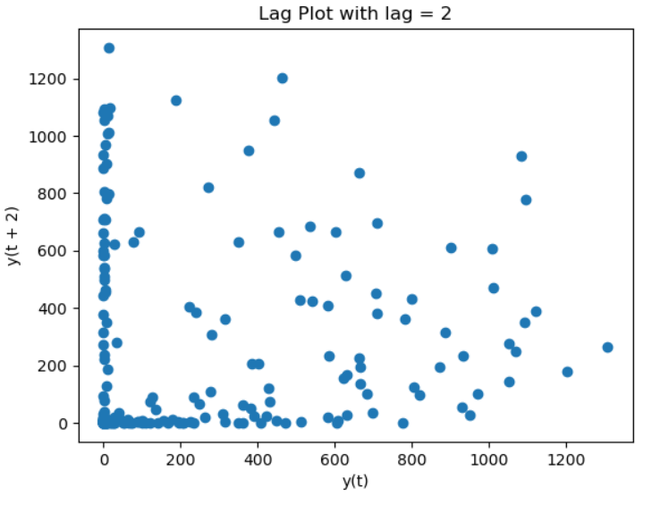

Lag Plot

In time series analysis, a lag plot is a graphical tool that shows the relationship between a variable and its lagged values. It helps find patterns, trends, or unpredictability in the data by comparing each data point to its prior observation. The plot may show the presence of autocorrelation if there is a substantial correlation between a point and its lag. This can help with understanding the temporal dependencies and direct the selection of the best model for time series analysis.

Python3

for i in range(1, 3):

lag_plot(ts, lag=i)

plt.title(f'Lag Plot with lag = {i}')

plt.show()

|

Output:

Interpretation:

- The x-axis represents the values of the time series at time t.

- The y-axis represents the values of the time series at time t+1 (lag 1).

- Interpretation:

- If the points in the plot follow a well-defined pattern or trend, it suggests autocorrelation at lag 1.

- If the points are randomly scattered, it indicates a lack of autocorrelation at lag 1.

Interpretation:

- Examines the relationship between values at time t and values at time t+2.

- Helps identify autocorrelation at lag 2.

With lags set to 1 and 2, the code creates Lag Plots for the time series “ts.” The association between each data point and its antecedent observation at the designated latency is plotted in each iteration. By shedding light on the autocorrelation patterns, these representations make it easier to spot possible trends and temporal relationships in the data. In order to make each Lag Plot easier to understand and evaluate the strength of the autocorrelation, the titles provide the lag values.

Stationarity Check

In order to make sure that a dataset’s statistical characteristics do not change over time, a stationarity check is an essential step in time series analysis. Model predictions are made easier when a time series is stationary since its mean, variance, and autocorrelation remain constant. Visual inspections, such rolling statistics graphs, and formal statistical tests, like the Augmented Dickey-Fuller test, are common approaches. By reducing the influence of non-constant patterns, stationarity assures dependable modeling and facilitates more precise forecasting and trend analysis of time series data.

Python3

adf_result = adfuller(ts)

print('ADF Statistic:', adf_result[0])

print('p-value:', adf_result[1])

|

Output:

ADF Statistic: -2.46632490177327

p-value: 0.12388427626757847

The code uses the Augmented Dickey-Fuller test to verify for stationarity on the time series ‘ts’. The p-value and ADF Statistic are displayed. The p-value denotes the importance of the ADF Statistic’s estimate of the time series’ presence of a unit root. For a more stationary time series, a low p-value and a more negative ADF Statistic point to more evidence against stationarity, which helps determine whether differencing is necessary.

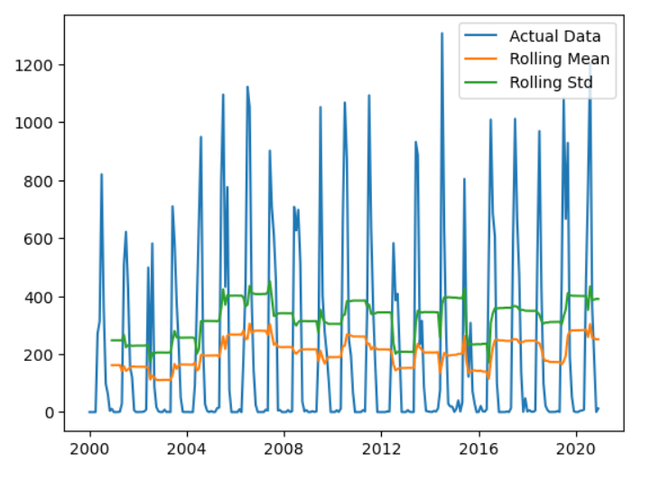

Rolling and Aggregations

Rolling: Rolling is a statistical method for time series analysis that computes summary statistics, such as moving averages, over successive subsets of a dataset. A fixed-size window traverses the data in the context of rolling statistics, and a new value is computed based on the observations within that window at each step. This facilitates the visualization and comprehension of underlying dynamics by reducing volatility and highlighting trends or patterns in the time series.

Aggregation: Aggregations are a common technique in time series analysis to identify broad trends. They entail merging and summarizing several data points into a single value. In this sense, calculating statistical measures within particular time intervals, such as mean or total, might be considered aggregating data. Aggregations streamline the process of interpreting complicated time series data by combining related information. This makes it easier for analysts to spot trends, patterns, or anomalies and promotes more efficient forecasting and decision-making.

Python3

rolling_mean = ts.rolling(window=12).mean()

rolling_std = ts.rolling(window=12).std()

plt.plot(ts, label='Actual Data')

plt.plot(rolling_mean, label='Rolling Mean')

plt.plot(rolling_std, label='Rolling Std')

plt.legend()

plt.show()

|

Output:

Interpretation:

The blue line represents the original time series data.

Rolling Mean:

- The orange line represents the rolling mean of the time series.

- The rolling mean is calculated over a window of 12 data points (months in this case).

- Interpretation:

- If the rolling mean smoothens out the fluctuations in the actual data, it helps in identifying trends.

- Rising or falling trends can be observed by comparing the rolling mean to the actual data.

Rolling Standard Deviation:

- The green line represents the rolling standard deviation of the time series.

- The rolling standard deviation is calculated over a window of 12 data points.

- Interpretation:

- Indicates the volatility or variability of the time series.

- Peaks in the rolling standard deviation can signify periods of increased variability.

The code computes the rolling mean and rolling standard deviation with a window size of 12, performing rolling statistics on the time series ‘ts’. The rolling mean, rolling standard deviation, and actual data are superimposed on the generated graphs to help visualize patterns and variability. This method aids in noise reduction, enhancing the visibility of underlying patterns and offering insights into the temporal properties of the data. For easier understanding, the legend separates the rolling mean, rolling standard deviation, and the original data.

Model Development

We will train a SARIMA model for the univariate forecast by using the date column as the feature for the predictions. But for that first, we will have to create a date column in the dataset that too in the pd. DateTime format so, for that, we will be using the pd.to_datetime function that is available in the pandas dataframe.

Python3

df['Date'] = pd.to_datetime(df['Year'].astype(str) +\

'-' + df['Month'].astype(str) +\

'-' + df['Day'].astype(str),

format='%Y-%m-%d')

df.head()

|

Output:

Year Month Day Specific Humidity Relative Humidity Temperature \

0 2000 1 1 8.06 48.25 23.93

1 2000 2 1 8.73 50.81 25.83

2 2000 3 1 8.48 42.88 26.68

3 2000 4 1 13.79 55.69 22.49

4 2000 5 1 17.40 70.88 19.07

Precipitation Date

0 0.00 2000-01-01

1 0.11 2000-02-01

2 0.01 2000-03-01

3 0.02 2000-04-01

4 271.14 2000-05-01

Now let’s set the index to the date column and the target column is the precipitation column let’s separate it from the complete dataset.

Python3

ts = df.set_index('Date')['Precipitation']

ts

|

Output:

Date

2000-01-01 0.00

2000-02-01 0.11

2000-03-01 0.01

2000-04-01 0.02

2000-05-01 271.14

...

2020-08-01 1203.09

2020-09-01 361.30

2020-10-01 180.18

2020-11-01 0.49

2020-12-01 12.23

Name: Precipitation, Length: 252, dtype: float64

Training the SARIMA Model

Now let’s train a SARIMA model on the dataset at hand.

Python3

p, d, q = 1, 1, 1

P, D, Q, S = 1, 1, 1, 12

model = sm.tsa.SARIMAX(ts, order=(p, d, q), seasonal_order=(P, D, Q, S))

results = model.fit()

|

As the model has been trained we can use this model to predict the rain for the next year and plot it along with the original data to get a feel for whether the predictions are following the previous trend or not.

This code fits the time series ts to a SARIMA model. The model’s order is defined by the order=(p, d, q) argument, where p denotes the number of autoregressive terms, d denotes the degree of differencing, and q denotes the number of moving average terms. The order of the seasonal component of the model is specified by the seasonal_order=(P, D, Q, S) argument, where P denotes the number of seasonal autoregressive terms, D the degree of seasonal differencing, Q the number of seasonal moving average terms, and S the duration of the seasonality period.

A SARIMA model object is created by the code line model = sm.tsa.SARIMAX(ts, order=(p, d, q), seasonal_order=(P, D, Q, S)). The time series that needs to be modeled is the ts argument. The model’s order and seasonal order are specified by the order=(p, d, q) and seasonal_order=(P, D, Q, S) arguments, respectively.

Predictions

Python3

forecast = results.get_forecast(steps=12)

forecast_mean = forecast.predicted_mean

plt.figure(figsize=(12, 6))

plt.plot(ts, label='Actual Data')

plt.plot(forecast_mean, label='SARIMA Forecast')

plt.legend()

plt.show()

|

Output:

.jpg)

This code plots the actual data and the forecast and makes predictions using the fitted SARIMA model. Initially, a forecast object containing the anticipated values for the upcoming 12 periods is created using the get_forecast() function. To get the predicted mean values, the forecast object’s predicted_mean attribute is then extracted.Next, a figure is made, and the plot() function is used to plot the forecast and the actual data. Each line now has a label, and a legend is shown.

Share your thoughts in the comments

Please Login to comment...