The Top 3 Free GPU Resources to Train Deep Neural Networks

Last Updated: 31 Jul, 2023

Model training is a painful task and can be one reason you hate Machine Learning. Simple models train easily on a CPU, but deep neural networks need a GPU to train in a reasonable time. You can tune your own graphics card for this with some advanced configuration. If you don't have a high-end card, though, or you simply want a hassle-free process, you can use the list below. It covers the top 3 cloud-based GPU resources, all free of cost and with no credit card required to sign up.

1) Google Colab

Google Colab, the popular cloud-based notebook service, comes with CPU, GPU, and TPU runtimes. A GPU offers far more parallel processing than an average CPU, while a TPU has a dedicated matrix multiplication unit suited to training CNNs on large batches. This makes Colab very useful for computer vision projects such as real-time object detection. Google also developed it especially for Machine Learning.

To enable GPU/TPU in Colab:

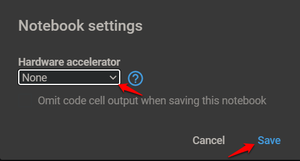

1) Go to the ‘Edit’ menu in the top menu bar and select ‘Notebook settings’. In the dialog that appears, select ‘GPU’ or ‘TPU’ under ‘Hardware accelerator’ and click ‘OK’.

GPU/TPU setting in Google Colab.

Colab specs:

- According to Colab, it offers a variety of Nvidia GPU models, including the A100 (80 GB), A100 (40 GB), P4, V100, K80, P100, and T4, but on the free tier you are most likely to get a K80 or a T4. To see which GPU is allocated to you in a given session, run the following code:

```python
from tensorflow.python.client import device_lib

# List every device TensorFlow can see; the GPU entry's physical_device_desc names the model
device_lib.list_local_devices()
```

Output:

```text
[name: "/device:CPU:0"
device_type: "CPU"
memory_limit: 268435456
locality {
}
incarnation: 4391750449849376294
xla_global_id: -1, name: "/device:GPU:0"
device_type: "GPU"
memory_limit: 14415560704
locality {
  bus_id: 1
  links {
  }
}
incarnation: 1398387971759345144
physical_device_desc: "device: 0, name: Tesla T4, pci bus id: 0000:00:04.0, compute capability: 7.5"
xla_global_id: 416903419]
```

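- You can also check how much memory your session's VM has with the command below. Note that `/proc/meminfo` reports system RAM, not GPU memory (mine shows a MemTotal of around 13.3 GB of RAM); the GPU's own memory appears as `memory_limit` in the GPU entry of the device listing above.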

```
!cat /proc/meminfo
```

Output:

```text
MemTotal:       13335276 kB
MemFree:         7322964 kB
MemAvailable:   10519168 kB
Buffers:           95732 kB
Cached:          2787632 kB
SwapCached:            0 kB
Active:          2433984 kB
Inactive:        3060124 kB
```

- The maximum session length is 12 hours, and idle sessions are disconnected after about 30 minutes (the exact idle timeout varies). You also get private notebooks that run on the free GPU.

- For storage, you can mount Google Drive, which offers 15 GB of free space.

- Apart from these limits, the only drawbacks of Colab are limited TPU availability and the lack of background execution.
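The `/proc/meminfo` numbers describe the VM's system RAM, whereas GPU memory is reported by the NVIDIA driver. A small sketch that prints both side by side might look like this. It assumes `nvidia-smi` is on the `PATH` (as it usually is on NVIDIA GPU runtimes) and uses its `--query-gpu` CSV mode; the helper names `parse_nvidia_smi`, `parse_meminfo`, and `report_memory` are hypothetical, not part of any library.

```python
import shutil
import subprocess


def parse_nvidia_smi(csv_text: str) -> list[dict]:
    """Parse the output of
    nvidia-smi --query-gpu=name,memory.total,memory.used --format=csv,noheader,nounits
    (one line per GPU; memory values are in MiB)."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, total, used = (field.strip() for field in line.split(","))
        gpus.append({"name": name, "memory_total_mib": int(total), "memory_used_mib": int(used)})
    return gpus


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo into {field: first numeric value}; most fields are in kB.
    These values describe host (system) RAM, not GPU memory."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts:
            info[key.strip()] = int(parts[0])
    return info


def report_memory() -> None:
    """Print GPU memory (from the NVIDIA driver) and system RAM (from /proc/meminfo)."""
    if shutil.which("nvidia-smi"):
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total,memory.used",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
        for gpu in parse_nvidia_smi(result.stdout):
            print(f"GPU {gpu['name']}: {gpu['memory_used_mib']} of "
                  f"{gpu['memory_total_mib']} MiB in use")
    else:
        print("nvidia-smi not found; is a GPU runtime attached?")

    with open("/proc/meminfo") as f:  # Linux only
        mem = parse_meminfo(f.read())
    # /proc/meminfo reports kB (KiB), so divide by 2**20 to get GiB
    print(f"System RAM: {mem['MemTotal'] / 2**20:.1f} GiB total, "
          f"{mem['MemAvailable'] / 2**20:.1f} GiB available")
```

In a notebook cell, call `report_memory()`. Keeping the parsing separate from the `subprocess` call makes it easy to reuse on Kaggle or Gradient, where the same `nvidia-smi` query should work on NVIDIA GPU machines.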

You can learn more about Colab’s GPU limitations in the Colab FAQ.
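Mounting the Google Drive storage mentioned in the Colab specs takes one call to `drive.mount` from the `google.colab` package. The sketch below wraps that call so the same notebook also runs outside Colab by falling back to a local folder; the helper name `get_storage_dir` and the `./data` fallback are assumptions for illustration.

```python
from pathlib import Path


def get_storage_dir(mountpoint: str = "/content/drive", fallback: str = "./data") -> Path:
    """Return a directory for datasets and checkpoints (hypothetical helper).

    Inside Colab, mount Google Drive (you will be asked to authorize access);
    anywhere else, create and use a local fallback folder instead.
    """
    try:
        from google.colab import drive  # importable only inside Colab
    except ImportError:
        local = Path(fallback)
        local.mkdir(parents=True, exist_ok=True)
        return local
    drive.mount(mountpoint)
    return Path(mountpoint) / "MyDrive"  # root folder of your Drive
```

For example, `data_dir = get_storage_dir()` gives you a place to save checkpoints. In Colab, files written there persist in Drive after the runtime is recycled, which matters given the 12-hour session limit.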

2) Kaggle

Kaggle, now owned by Google, is a leading website for data scientists. It offers a customizable Jupyter notebook environment with integrated GPUs and TPUs, and you can easily find playgrounds and notebooks that teach you how to configure TPUs and GPUs end to end. Its data science competitions, which Kaggle is best known for, are a good way to solidify your understanding of GPUs and TPUs. To enable a GPU/TPU on Kaggle, follow these steps:

- Sign up for a Kaggle account and verify your mobile number.

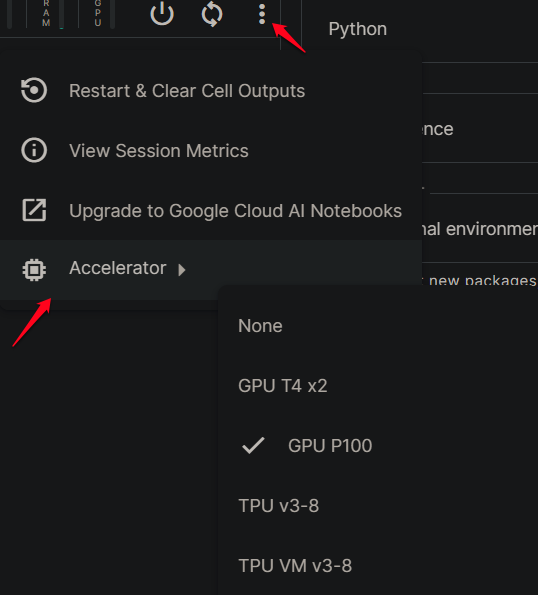

- Open a notebook and click the three dots in the right corner. From the drop-down, select ‘Accelerator’ and choose any of the available GPU/TPU options.

GPU/TPU setting in Kaggle Kernel

Kaggle specs:

- The GPUs available for free are the Nvidia Tesla P100 and T4, with a maximum runtime of 9 hours per session.

- Similarly, the TPU models available for free are the TPU v3-8 and TPU VM v3-8.

- The GPU memory available is around 15.9 GB. You can monitor its usage with the GPUtil package; install it first:

```
!pip install GPUtil
```

```python
from GPUtil import showUtilization as gpu_usage

# Print a small table of GPU load and memory utilization for each device
gpu_usage()
```

Output:

```text
| ID | GPU  | MEM |
-------------------
|  0 | nan% | 19% |
```

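- When training multiple models in one session, you may run out of GPU memory with an error like this:

```text
RuntimeError: CUDA out of memory. Tried to allocate 978.00 MiB (GPU 0; 15.90 GiB total capacity; 14.22 GiB already allocated; 167.88 MiB free; 14.99 GiB reserved in total by PyTorch)
```

In that case, you can release the GPU memory that PyTorch has cached but is no longer using:

```python
import torch

# Return PyTorch's unused cached GPU memory to the driver
torch.cuda.empty_cache()
```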

- You also get 20 GB of free storage.

- Unlike the other two providers in this list, Kaggle supports background execution.

- The downside is a weekly cap on GPU/TPU execution of roughly 30 hours, which varies with available resources and demand.
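The out-of-memory error shown in the Kaggle specs explains why emptying the cache is often not enough on its own: PyTorch has 14.99 GiB reserved, but 14.22 GiB of that is still allocated to live tensors, so `torch.cuda.empty_cache()` can hand back at most about 0.77 GiB. Tensors from an earlier model have to be released first. A minimal cleanup sketch, assuming PyTorch is installed; `free_gpu_memory` and `gpu_memory_report` are hypothetical helper names:

```python
import gc

import torch


def free_gpu_memory() -> None:
    """Release cached GPU memory after references to old tensors have been dropped."""
    gc.collect()  # collect unreachable objects (e.g. reference cycles) still holding tensors
    if torch.cuda.is_available():
        torch.cuda.empty_cache()  # return PyTorch's unused cached blocks to the driver


def gpu_memory_report() -> str:
    """Summarize memory held by live tensors vs. memory reserved by PyTorch's allocator."""
    if not torch.cuda.is_available():
        return "CUDA is not available"
    allocated = torch.cuda.memory_allocated() / 2**30
    reserved = torch.cuda.memory_reserved() / 2**30
    return f"allocated: {allocated:.2f} GiB, reserved: {reserved:.2f} GiB"
```

Between experiments, run `del model, optimizer` (standing in for your own objects), then `free_gpu_memory()`, and check `gpu_memory_report()`. If "allocated" stays high, something still references the old tensors.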

You can learn more about using GPUs and TPUs efficiently in Kaggle's documentation.

3) Gradient

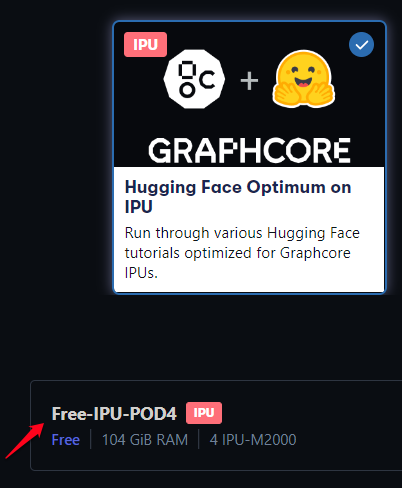

Gradient by Paperspace offers IPUs as well as GPUs. A free account gives you a GPU+ machine (Nvidia Quadro M4000) and an IPU machine (IPU-POD4). The IPU, designed by Graphcore specifically for artificial intelligence, provides a highly parallel architecture for accelerated learning. To enable a GPU/IPU on Gradient, follow these steps:

- Under the Project tab, choose ‘Create notebook’.

- For a free GPU, choose any of the template runtimes and select ‘Free-GPU’ from the available machines.

- For a free IPU, choose ‘Hugging Face Optimum’ as the runtime and select ‘Free-IPU-POD4’ from the available machines.

GPU/IPU setting in Gradient Notebook.

Gradient specs:

- A free account gives you a maximum runtime of 6 hours.

- Free-GPU comes with 8 GB of GPU memory, and Free-IPU-POD4 comes with 108 GB of RAM.

- Free accounts get 5 GB of storage.

- The downsides of Gradient are that you don’t get private notebooks or background execution.

To learn more, check out comparisons of Gradient with Colab and with Kaggle.
