tf.function in TensorFlow

Last Updated: 01 Mar, 2024

TensorFlow is a machine learning framework that offers flexibility, scalability, and performance for deep learning tasks. tf.function helps optimize and accelerate computation by leveraging graph-based execution. In this article, we cover the concept of tf.function in TensorFlow.

What is tf.function in TensorFlow?

tf.function is a decorator provided by TensorFlow that transforms a Python function into a callable that builds and runs TensorFlow graphs. This transformation lets TensorFlow compile and optimize the function’s computation, which can improve performance and efficiency. A plain Python function executes its TensorFlow operations eagerly, one at a time; a tf.function executes them as a graph instead, which can noticeably speed up code that runs many small operations repeatedly.
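To see the difference in practice, here is a rough sketch that runs the same function eagerly and through tf.function and times both. The function name dense_stack and the chain of 20 small matrix multiplications are illustrative choices, not part of the original article. Actual timings depend on your hardware and TensorFlow version, and for workloads dominated by a few large ops the gap may be small.

```python
import timeit

import tensorflow as tf

def dense_stack(x):
    # A chain of many small ops: the kind of workload where graph execution tends to help
    for _ in range(20):
        x = tf.nn.relu(tf.matmul(x, x) * 0.01)
    return x

# Wrap the same Python function with tf.function (no decorator needed)
graph_dense_stack = tf.function(dense_stack)

x = tf.random.uniform((10, 10))

eager_result = dense_stack(x)        # runs op by op in eager mode
graph_result = graph_dense_stack(x)  # first call traces and builds the graph

# Time repeated calls; the graph version avoids per-op Python dispatch overhead
eager_time = timeit.timeit(lambda: dense_stack(x), number=100)
graph_time = timeit.timeit(lambda: graph_dense_stack(x), number=100)
print(f"eager: {eager_time:.4f}s  tf.function: {graph_time:.4f}s")
```

Because the loop iterates over a Python range, it is unrolled into 20 copies of the ops during tracing rather than converted into a graph loop.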

Features of tf.function

Let’s understand important characteristics of tf.function():

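- tf.function() can accept Python objects such as lists, tuples, and dicts as inputs, and return them as outputs, as long as they are (nested) structures. Tensors inside these structures become inputs and outputs of the graph, while non-tensor Python values passed as arguments (such as plain ints or strings) are baked into the trace as constants, so passing a different value triggers a new trace.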

- tf.function() bridges the gap between eager execution and graph execution by separating the code into two stages: tracing and running. In the tracing stage, tf.function() creates a new tf.Graph that captures all the TensorFlow operations (like adding two tensors) and defers their execution. Python code runs normally, but Python side effects (like printing or appending to a list) happen only during tracing and are not recorded in the graph. In the running stage, the tf.Graph that contains everything deferred in the tracing stage is executed. The graph can be optimized, serialized, and deployed across different platforms and devices.

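- tf.function() works best with TensorFlow ops and tensors. NumPy and plain Python calls run only during tracing, and their results are captured as constants in the graph; this can cause performance issues or unexpected behavior (for example, a NumPy random number is drawn once at trace time and then reused on every call).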

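- tf.function() can also handle control flow such as loops and conditional statements by using AutoGraph, a module that converts Python if, while, and for statements that depend on tensors into equivalent TensorFlow graph ops (such as tf.cond and tf.while_loop).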
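The following minimal sketch illustrates several of the points above: Python side effects run only while tracing, tf.print runs on every call, a NumPy call is frozen into the graph as a constant, and AutoGraph converts a tensor-dependent while loop into graph control flow. The function names noisy_step and count_down are hypothetical examples.

```python
import numpy as np
import tensorflow as tf

trace_log = []  # Python-side record of how often the function body is traced

@tf.function
def noisy_step(x):
    trace_log.append("traced")                # Python side effect: runs only while tracing
    print("Python print: runs at trace time")
    tf.print("tf.print: runs on every call")  # graph op, executed on each call
    noise = float(np.random.uniform())        # NumPy call: evaluated once, stored as a constant
    return x + noise

a = noisy_step(tf.constant(1.0))
b = noisy_step(tf.constant(1.0))  # same signature, so the existing graph is reused
print(len(trace_log))  # 1
print(bool(a == b))    # True: the NumPy "random" value was frozen at trace time

@tf.function
def count_down(n):
    steps = tf.constant(0)
    while n > 0:  # n is a tensor, so AutoGraph converts this loop into tf.while_loop
        n -= 1
        steps += 1
    return steps

print(count_down(tf.constant(5)).numpy())  # 5
```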

How does tf.function() work?

To understand how tf.function works, we need to look at tracing, graph creation, and optimization. Let’s explore the whole process:

- tf.function() works by tracing the Python function and creating a ConcreteFunction for each set of input shapes and dtypes. A ConcreteFunction is a callable graph that executes the traced operations.

- tf.function() takes the Python function as input and returns a tf.types.experimental.PolymorphicFunction, which is a Python callable that builds TensorFlow graphs from the Python function.

- The first time we call the PolymorphicFunction with a new input signature, it traces the Python function and creates a graph that represents the computation; later calls with a matching signature reuse that graph. TensorFlow can optimize the graph to improve performance and portability. The PolymorphicFunction can handle different types and shapes of inputs by creating multiple graphs, each specialized for a specific input signature. This is called polymorphism, and it lets us use the same tf.function in different scenarios.

- We can inspect the graphs created by tf.function by using the get_concrete_function method, which returns a tf.types.experimental.ConcreteFunction. A ConcreteFunction is a single graph with a fixed input signature and output.

- We can also obtain the graph object directly by using the graph property of the ConcreteFunction. The graph object contains information about the nodes and edges of the graph, and can be used for debugging or visualization.
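A small sketch of polymorphism and graph inspection, using a hypothetical double function: a Python list records one entry per trace (not per call), get_concrete_function retrieves the graph for one specific tf.TensorSpec, and the graph property lists the operations captured during tracing. The exact operation list printed can vary between TensorFlow versions.

```python
import tensorflow as tf

traced_dtypes = []  # Python side effect: appended once per trace, not once per call

@tf.function
def double(x):
    traced_dtypes.append(x.dtype.name)
    return x * 2

double(tf.constant(1))           # int32 scalar: first trace
double(tf.constant(2))           # same dtype and shape: graph is reused
double(tf.constant([1.0, 2.0]))  # float32 vector: new signature, second trace
traces_after_calls = list(traced_dtypes)
print(traces_after_calls)  # ['int32', 'float32']

# Retrieve the ConcreteFunction (a single graph) for one input signature
concrete = double.get_concrete_function(tf.TensorSpec(shape=[2], dtype=tf.float32))
print(concrete(tf.constant([3.0, 4.0])))

# The underlying graph lists the operations captured during tracing
op_types = [op.type for op in concrete.graph.get_operations()]
print(op_types)
```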

How to use tf.function in TensorFlow?

We can use tf.function in TensorFlow as a decorator. Let’s have a look at the implementation:

1. Define Your Python Function: Start by defining a Python function that contains TensorFlow operations.

Python3

import tensorflow as tf

def my_function(x, y):
    return tf.add(x, y)

2. Decorate the Function: Apply the @tf.function decorator to your Python function.

Python3

@tf.function  # ask TensorFlow to build a graph from this function
def my_function(x, y):
    return tf.add(x, y)

3. Call the Function: Once decorated, you can call your function as you would any other Python function.

Python3

result = my_function(tf.constant(2), tf.constant(3))
print(result)

Output:

tf.Tensor(5, shape=(), dtype=int32)

How can we generate graphs using tf.function?

A graph is a data structure that represents a computation as a set of nodes and edges. Graphs can help you optimize and deploy your TensorFlow models.

To create a graph with tf.function(), you can either apply it as a decorator or call it directly on an existing Python function.

1. As a decorator:

Python3

import tensorflow as tf

@tf.function
def add(a, b):
    return a + b

2. As a direct call:

Python

def multiply(a, b):
    return a * b

# Equivalent to decorating multiply with @tf.function
multiply = tf.function(multiply)

Both methods will convert the Python functions into PolymorphicFunction objects, which can build TensorFlow graphs when called.

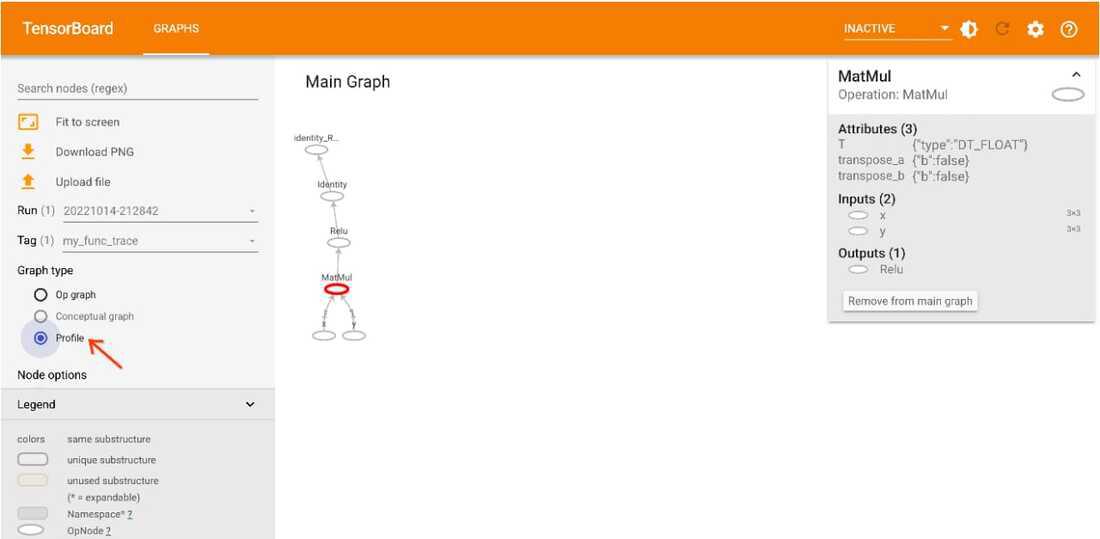

Using TensorBoard

tf.function builds these graphs; to visualize them, we can use TensorBoard. TensorBoard is a powerful tool that lets us visualize and analyze our TensorFlow models and data. With TensorBoard, we can:

- Track and compare metrics such as loss and accuracy across different training runs.

- Visualize the model graph and inspect the weights, gradients, and activations.

- Project embeddings to a lower-dimensional space and explore their relations.

- Display images, text, audio, and histograms of our data and model.

- Debug our model and optimize its performance.

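To use TensorBoard, we need to:

- Install it (it is usually installed together with the tensorflow package):

pip install tensorboard

- Add some code to our TensorFlow program to log the data we want to see.

- Launch TensorBoard and open it in a browser (by default at http://localhost:6006):

tensorboard --logdir logs/graphs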
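As a sketch of the "add some code" step, the snippet below writes a few scalar values with tf.summary so that TensorBoard has something to display under logs/graphs. The tag name "loss" and the made-up decreasing values are placeholders standing in for real training data, not output from an actual model.

```python
import os

import tensorflow as tf

logdir = "logs/graphs"  # same directory passed to `tensorboard --logdir`
writer = tf.summary.create_file_writer(logdir)

with writer.as_default():
    for step in range(5):
        fake_loss = 1.0 / (step + 1)  # placeholder value, not a real training loss
        tf.summary.scalar("loss", fake_loss, step=step)
writer.flush()  # make sure the event file is written to disk

print(os.listdir(logdir))  # one or more events.out.tfevents.* files
```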

When we use the tf.function annotation to “autograph” (i.e., transform) a Python computation function into a high-performance TensorFlow graph, we can use the TensorFlow Summary Trace API to log the autographed function for visualization in TensorBoard.

To use the Summary Trace API:

- Define and annotate a function with tf.function.

- Call tf.summary.trace_on() immediately before the function's call site. Pass profiler=True to add profiling information (memory, CPU time) to the graph.

- With a summary file writer, call tf.summary.trace_export() to save the log data.

We can then use TensorBoard to see how our function behaves.

Python

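from datetime import datetime

import tensorflow as tf

@tf.function
def my_func(x, y):
    # A simple computation to trace: matrix multiplication followed by ReLU
    return tf.nn.relu(tf.matmul(x, y))

# Give each run its own timestamped log directory
stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
logdir = 'logs/func/%s' % stamp
writer = tf.summary.create_file_writer(logdir)

x = tf.random.uniform((3, 3))
y = tf.random.uniform((3, 3))

# Start recording just before the first call, which is when my_func is traced
tf.summary.trace_on(graph=True, profiler=True)
z = my_func(x, y)
with writer.as_default():
    # Note: in newer TensorFlow releases, profiler_outdir is passed to trace_on instead
    tf.summary.trace_export(
        name="my_func_trace",
        step=0,
        profiler_outdir=logdir)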

Run the following command in a terminal:

tensorboard --logdir logs/func

Output:

[Figure: Output Computational Graph]
