How to Use Cloud TPU for High-Performance Machine Learning on GCP?

Last Updated: 19 Oct, 2023

Google’s Cloud Tensor Processing Units (TPUs) are custom accelerators built to speed up the large matrix computations at the heart of machine learning workloads, and they are available as a managed resource on the Google Cloud Platform (GCP). This article is a guide to using Cloud TPUs for high-performance machine learning on GCP.

Getting Started with Cloud TPUs

Before delving into the practical aspects, it’s crucial to set up your GCP environment. Here’s how you can start your journey with Cloud TPUs:

Step 1: Enable the Cloud TPU API

Begin by accessing the GCP Console, navigating to the “APIs & Services” section, and enabling the “Cloud TPU API.” This allows you to create and manage Cloud TPUs.

Step 2: Select a Project

Create a new GCP project or choose an existing one to host your Cloud TPU resources. Assume that you’ve created a project named “my-ml-project.”

Step 3: Choose a Region

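To ensure optimal performance, select a GCP region close to your data and users, and pick a zone within it that offers the TPU type you need, since TPU availability varies by zone. For instance, opt for the “us-central1” region.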
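The setup steps above can also be done from the command line. The following is a minimal sketch that only assembles the corresponding `gcloud` invocations so they can be reviewed before running. The zone `us-central1-b` is an assumption made for illustration; the project and region names are the ones used in this article.

```python
import shlex

PROJECT_ID = "my-ml-project"   # project name used in this article
REGION = "us-central1"         # region used in this article
ZONE = "us-central1-b"         # assumption: a zone in that region that offers TPUs


def setup_commands(project_id: str, region: str, zone: str) -> list[list[str]]:
    """Return the gcloud invocations for the setup steps, as argv lists."""
    return [
        ["gcloud", "config", "set", "project", project_id],
        ["gcloud", "services", "enable", "tpu.googleapis.com"],
        ["gcloud", "config", "set", "compute/region", region],
        ["gcloud", "config", "set", "compute/zone", zone],
    ]


if __name__ == "__main__":
    # Print the commands instead of running them, so they can be checked first.
    for argv in setup_commands(PROJECT_ID, REGION, ZONE):
        print(shlex.join(argv))
```

To execute a command rather than print it, each argv list can be passed to `subprocess.run(argv, check=True)` on a machine where the Google Cloud CLI is installed and authenticated.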

Training a Machine Learning Model on Cloud TPUs

Training machine learning models with Cloud TPUs significantly expedites the process. Here’s a step-by-step guide with practical examples:

Prepare Your Data

Suppose you have a dataset stored in Google Cloud Storage, within a bucket named “my-ml-data” and a folder labeled “training_data.”
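As a rough illustration, the sketch below builds the Cloud Storage path for that bucket and folder and feeds it to a `tf.data` input pipeline. It assumes the training files are stored as TFRecord files, which the article does not state; `gcs_file_pattern` and `make_dataset` are hypothetical helper names.

```python
def gcs_file_pattern(bucket: str, folder: str, suffix: str = "*.tfrecord") -> str:
    """Build a glob pattern for files under gs://<bucket>/<folder>/."""
    return f"gs://{bucket}/{folder.strip('/')}/{suffix}"


def make_dataset(file_pattern: str, global_batch_size: int):
    """Stream raw TFRecord examples from Cloud Storage in fixed-size batches."""
    # Imported here so the path helper above works without TensorFlow installed.
    import tensorflow as tf

    files = tf.data.Dataset.list_files(file_pattern, shuffle=True)
    dataset = tf.data.TFRecordDataset(files, num_parallel_reads=tf.data.AUTOTUNE)
    # A real pipeline would parse each record with dataset.map(...) here;
    # the record schema is not given in the article, so parsing is omitted.
    # drop_remainder=True keeps every batch the same shape, which avoids
    # shape-related recompilation on TPUs.
    dataset = dataset.batch(global_batch_size, drop_remainder=True)
    return dataset.prefetch(tf.data.AUTOTUNE)


# Bucket and folder names are the ones used in this article.
TRAIN_PATTERN = gcs_file_pattern("my-ml-data", "training_data")
print(TRAIN_PATTERN)
```

Reading directly from Cloud Storage keeps the data close to the TPU, provided the bucket is in the same region as the TPU.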

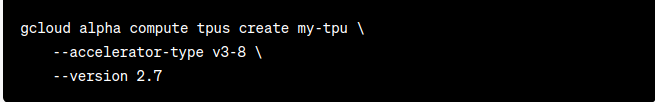

Steps To Create a TPU Node

Step 1: Create a TPU Node

Create an 8-core TPU node, such as a v2-8 or v3-8 device, using the gcloud command-line tool.
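The original command is not reproduced here, so the following is only a sketch of what such a command could look like. It assumes the TPU VM form of the CLI (`gcloud compute tpus tpu-vm create`); the TPU name, zone, accelerator type, and runtime version are all placeholder values that should be checked against what is currently available in your project.

```python
import shlex


def create_tpu_command(name: str, zone: str, accelerator_type: str,
                       runtime_version: str) -> list[str]:
    """Return the argv for creating a Cloud TPU VM with gcloud."""
    return [
        "gcloud", "compute", "tpus", "tpu-vm", "create", name,
        f"--zone={zone}",
        f"--accelerator-type={accelerator_type}",
        f"--version={runtime_version}",
    ]


# All four values below are illustrative assumptions, not taken from the article.
cmd = create_tpu_command(
    name="my-tpu",
    zone="us-central1-b",
    accelerator_type="v3-8",             # a device with 8 TPU cores
    runtime_version="tpu-vm-tf-2.13.0",  # verify against the supported versions
)
print(shlex.join(cmd))
```

Remember to delete the TPU when training finishes, since it is billed for as long as it exists.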

Step 2: Set Up TensorFlow

Ensure TensorFlow is installed, either on your local machine or within a GCP instance:
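A quick way to confirm the installation from Python is to check whether the module can be found. This is a small sketch using only the standard library; `is_installed` is a hypothetical helper name.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be located without importing it."""
    return importlib.util.find_spec(module_name) is not None


if is_installed("tensorflow"):
    print("TensorFlow is available")
else:
    print("TensorFlow is missing; install it with: pip install tensorflow")
```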

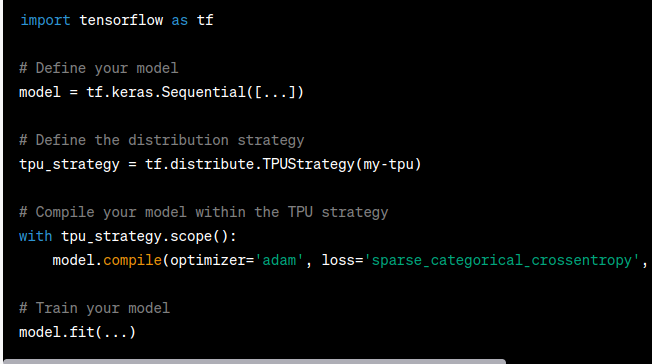

Step 3: Distribute Your Model

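Adapt your machine learning code to distribute the training workload across Cloud TPUs. In TensorFlow, this is done with tf.distribute.TPUStrategy, which replicates the model on every TPU core and splits each global batch among the replicas.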
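A minimal sketch of this pattern with `tf.distribute.TPUStrategy` follows. The model architecture, the per-replica batch size, and the `dataset_fn` callable (which should return a `tf.data.Dataset` of feature and label pairs for a given global batch size) are illustrative assumptions; on a TPU VM the TPU name is typically `"local"`.

```python
def global_batch_size(per_replica_batch: int, num_replicas: int) -> int:
    """Each training step consumes one per-replica batch on every TPU core."""
    return per_replica_batch * num_replicas


def train_on_tpu(tpu_name: str, dataset_fn, per_replica_batch: int = 128,
                 epochs: int = 1):
    """Train a small placeholder Keras model on a Cloud TPU."""
    # Imported lazily: only needed when training actually runs.
    import tensorflow as tf

    # Locate the TPU and initialize it before building any model.
    resolver = tf.distribute.cluster_resolver.TPUClusterResolver(tpu=tpu_name)
    tf.config.experimental_connect_to_cluster(resolver)
    tf.tpu.experimental.initialize_tpu_system(resolver)
    strategy = tf.distribute.TPUStrategy(resolver)

    batch = global_batch_size(per_replica_batch, strategy.num_replicas_in_sync)

    # Variables created inside the scope are replicated across all TPU cores.
    with strategy.scope():
        model = tf.keras.Sequential([
            tf.keras.layers.Dense(128, activation="relu"),
            tf.keras.layers.Dense(10),
        ])
        model.compile(
            optimizer="adam",
            loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
            metrics=["accuracy"],
        )

    model.fit(dataset_fn(batch), epochs=epochs)
    return model


# With 8 cores and 128 examples per core, each step processes 1024 examples.
print(global_batch_size(128, 8))
```

Scaling the batch size with the number of replicas keeps every core busy; the learning rate often needs retuning when the global batch size changes.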

Steps To Deploy a Machine Learning Model on Cloud TPUs

Once your model is trained, deploying it for inference is the next step. Here’s how you can do it, supported by practical examples:

Step 1: Export Your Model

Export your trained model to a deployment-friendly format such as TensorFlow’s SavedModel, which stores the model’s computation graph and trained weights together in one directory.
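One possible way to do this is sketched below with `tf.saved_model.save`. The export location is a hypothetical path, and the numbered subdirectory follows the layout that TensorFlow Serving expects; depending on your Keras version, `model.export(...)` may be the preferred call instead.

```python
def versioned_export_dir(base_dir: str, version: int) -> str:
    """Return <base_dir>/<version>, the layout TensorFlow Serving looks for."""
    return f"{base_dir.rstrip('/')}/{version}"


def export_saved_model(model, base_dir: str, version: int = 1) -> str:
    """Write `model` in SavedModel format and return the export directory."""
    # Imported lazily so the path helper works without TensorFlow installed.
    import tensorflow as tf

    export_dir = versioned_export_dir(base_dir, version)
    tf.saved_model.save(model, export_dir)
    return export_dir


# Hypothetical location inside the bucket used in this article.
print(versioned_export_dir("gs://my-ml-data/models/my_model", 1))
```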

Step 2: Set Up a Serving Infrastructure

Create a serving infrastructure using services like Google Cloud AI Platform or Kubernetes. Configure it to utilize Cloud TPUs for inference.

Step 3: Optimize for Inference

Streamline your model for inference by removing layers and operations that are only needed during training (for example, dropout or optimizer state), which reduces model size and inference latency.

Step 4: Load and Serve the Model

Load your model into the serving infrastructure and expose it as an API endpoint for predictions, for instance through Google Cloud AI Platform.
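The sketch below shows roughly what this could involve: the `gcloud ai-platform` commands that register a model and a version from a SavedModel directory, and the JSON body of an online prediction request. The model name, version name, export path, and runtime version are assumptions for illustration, and AI Platform is a legacy product, so check which serving product and flags apply to your project.

```python
import json


def deploy_commands(model_name: str, version: str, saved_model_dir: str,
                    region: str, runtime_version: str) -> list[list[str]]:
    """Return gcloud invocations that create a model and one version of it."""
    return [
        ["gcloud", "ai-platform", "models", "create", model_name,
         f"--region={region}"],
        ["gcloud", "ai-platform", "versions", "create", version,
         f"--model={model_name}",
         f"--origin={saved_model_dir}",
         f"--region={region}",
         "--framework=tensorflow",
         f"--runtime-version={runtime_version}"],
    ]


def prediction_body(instances: list) -> str:
    """JSON body of an online prediction request: {"instances": [...]}."""
    return json.dumps({"instances": instances})


# All names and versions below are hypothetical.
for argv in deploy_commands("my_model", "v1", "gs://my-ml-data/models/my_model/1",
                            "us-central1", "2.11"):
    print(" ".join(argv))
print(prediction_body([[0.1, 0.2, 0.3]]))
```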

Monitoring Training and Inference

- Monitor Training: Leverage GCP’s monitoring tools to closely track your training job’s performance. The GCP Console offers insights into critical metrics, resource utilization, and other vital statistics.

- Monitor Inference: Keep a close eye on the performance and usage of your deployed model to ensure it meets your requirements and scales appropriately.

Scaling Your Machine Learning Workload on Cloud TPUs

The flexibility of Cloud TPUs allows you to scale your machine learning workloads as needed.

- Auto Scaling: GCP offers auto-scaling options to dynamically adjust the number of TPUs based on workload demands, ensuring efficient resource utilization.

- Batch Processing: Consider batching your inference requests to optimize Cloud TPU usage. Batching processes multiple requests in a single TPU run, which raises throughput and lowers the resource cost per request, at the price of a short wait while a batch fills.

- Resource Monitoring: Continuously monitor the resource utilization of your Cloud TPUs to identify bottlenecks or over-provisioning issues that may arise during scaling.
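The batching idea from the list above can be sketched in plain Python. This example groups incoming requests into fixed-size batches and pads the last one, on the assumption that the served model expects a constant batch shape; `padded_batches` and the padding value are illustrative choices.

```python
from typing import Iterator, List, Sequence, Tuple


def padded_batches(requests: Sequence[List[float]], batch_size: int,
                   pad_value: List[float]
                   ) -> Iterator[Tuple[List[List[float]], int]]:
    """Yield (batch, real_count); every batch has exactly `batch_size` rows."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(requests), batch_size):
        chunk = list(requests[start:start + batch_size])
        real_count = len(chunk)
        # Pad the final batch so the model always sees the same input shape.
        chunk.extend([pad_value] * (batch_size - real_count))
        yield chunk, real_count


requests = [[float(i)] for i in range(5)]
for batch, real_count in padded_batches(requests, batch_size=4, pad_value=[0.0]):
    # Only the first `real_count` predictions of each batch are meaningful.
    print(real_count, batch)
```

The caller discards the predictions that correspond to padding rows, using `real_count` to know how many results to keep.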

Best Practices for Using Cloud TPUs

To maximize the potential of Cloud TPUs, adhere to these best practices:

- Optimize Your Model: Fine-tune your machine learning models to leverage Cloud TPUs efficiently. This may involve architecture adjustments, batch size optimization, or data preprocessing enhancements.

- Regularly Update Libraries: Stay up-to-date with TensorFlow and other machine learning libraries to access performance improvements and new TPU-compatible features.

- Cost Management: Monitor resource usage closely to prevent unexpected expenses, and delete TPUs that are no longer in use. GCP offers cost control tools, including budget alerts.

- Security and Compliance: Ensure your machine learning workloads on Cloud TPUs align with security and compliance standards. Implement access controls, encryption, and other security measures as necessary.

FAQs On Cloud TPU for High-Performance Machine Learning on GCP

1. Are Cloud TPUs Exclusively Compatible With TensorFlow?

No. Although TensorFlow is the most popular framework for Cloud TPUs, other frameworks can be used as well, such as JAX and PyTorch (through the PyTorch/XLA package), usually with some code modifications.

2. Can Cloud TPUs Be Used For Non-Machine Learning Tasks?

While Cloud TPUs are optimized for machine learning, they can also handle other numerical computing tasks that are amenable to parallelization.

3. How Can I Estimate The Cost Of Using Cloud TPUs?

GCP provides a pricing calculator that allows you to estimate Cloud TPU costs based on your configuration and usage patterns.
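For a quick back-of-the-envelope figure before opening the calculator, the arithmetic is simply hourly rate times hours times number of TPUs. The rate used below is a made-up placeholder, not a real GCP price; look up the current rate for your TPU type and region.

```python
def estimate_cost(hourly_rate_usd: float, hours: float, num_tpus: int = 1) -> float:
    """Return the estimated on-demand cost in USD, rounded to cents."""
    if hourly_rate_usd < 0 or hours < 0 or num_tpus < 0:
        raise ValueError("rate, hours and num_tpus must be non-negative")
    return round(hourly_rate_usd * hours * num_tpus, 2)


# Hypothetical rate of 4.00 USD per TPU-hour, 10 hours of training, 2 TPUs.
print(estimate_cost(4.00, 10, 2))
```

This ignores storage, networking, and any discounts, all of which the pricing calculator can account for.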
