How To Scale A Kubernetes Cluster?

Last Updated: 16 Feb, 2024

Scaling a Kubernetes cluster is crucial to meet the growing needs of applications and ensure optimal performance. As your workload increases, scaling becomes vital to distribute the load, improve resource usage, and maintain high availability. This article guides you through best practices and techniques for scaling your Kubernetes cluster efficiently. We will explore horizontal and vertical scaling strategies, load balancing, pod scaling, monitoring, and other key considerations.

Understanding Kubernetes Cluster Scaling

Autoscaling automatically adjusts pod resources based on utilization over time, which minimizes resource waste and promotes optimal use of cluster resources.

- This can be considered an advantage when comparing Kubernetes horizontal vs. vertical scaling.

- Explanation of horizontal and vertical scaling principles in a Kubernetes cluster.

- Discussion on using ReplicaSets and pods for scaling applications inside a cluster.

- Introduction to Kubernetes Autoscaling for automated scaling based on resource utilization.

Planning for Cluster Scaling

Planning for cluster scaling in DevOps involves designing and implementing strategies to handle the growth of your application or infrastructure. Scaling is critical to ensure that your system can handle increased load, deliver good overall performance, and remain reliable.

- Analyzing the workload and the resource needs of the current cluster.

- Defining scaling thresholds and triggers for automatic scaling.

- Considering the impact of scaling on networking, storage, and infrastructure.

Scaling Up a Kubernetes Cluster

Scaling up a Kubernetes cluster in a DevOps environment involves adding extra nodes to your cluster to handle increased workloads or to improve overall performance and availability.

- Adding extra worker nodes to the cluster for increased capacity.

- Configuring the cluster to use the added resources efficiently.

- Monitoring and optimizing resource allocation to ensure balanced scaling.
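
To make the capacity question concrete, the sketch below estimates how many worker nodes a set of identical pods might need. It is a rough back-of-the-envelope calculation, not how the Kubernetes scheduler actually places pods: the function name, the sample sizes, and the flat `headroom_pct` reserve for system components are all assumptions made for illustration.

```python
def nodes_needed(pod_count, cpu_per_pod_m, mem_per_pod_mi,
                 node_cpu_m, node_mem_mi, headroom_pct=20):
    """Estimate worker nodes for identical pods (simplified, hypothetical model).

    CPU values are in millicores and memory values in MiB. headroom_pct is an
    assumed share of each node kept free for system daemons and bursts.
    """
    # Capacity left on one node after reserving the assumed headroom.
    usable_cpu = node_cpu_m * (100 - headroom_pct) // 100
    usable_mem = node_mem_mi * (100 - headroom_pct) // 100

    # A node fits only as many pods as its scarcest resource allows.
    pods_per_node = min(usable_cpu // cpu_per_pod_m,
                        usable_mem // mem_per_pod_mi)
    if pods_per_node < 1:
        raise ValueError("a single pod does not fit on one node")

    # Integer ceiling division: round the node count up.
    return -(-pod_count // pods_per_node)


# 50 pods of 500m CPU / 1024 MiB on nodes with 4 CPUs / 16 GiB.
print(nodes_needed(50, 500, 1024, 4000, 16384))  # 9
```

In this example CPU is the limiting resource (6 pods per node), so adding memory to the nodes would not reduce the node count.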

Scaling Out a Kubernetes Cluster

Scaling out a Kubernetes cluster involves adding more worker nodes to the existing cluster to handle increased workloads and improve overall performance.

- Understanding the concept of cluster federation for scaling across multiple clusters.

- Setting up cluster federation to distribute workloads and enhance fault tolerance.

- Implementing load balancing strategies for efficient workload distribution.

Automating Cluster Scaling

Automating cluster scaling in a DevOps environment is vital for efficiently managing infrastructure and responding to changing workloads. Automation reduces manual intervention, improves consistency, and enhances the overall agility of your deployment.

- Utilizing Kubernetes Horizontal Pod Autoscaling to automatically adjust the number of pods based on resource usage.

- Exploring custom metrics and defining scaling policies for specific application requirements.

- Using Kubernetes Event-Driven Autoscaling (KEDA) to scale based on external events or triggers.
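
As an illustration of the first point, the sketch below builds a HorizontalPodAutoscaler manifest for the `autoscaling/v2` API as a Python dictionary and prints it as JSON. The helper name `build_hpa_manifest`, the Deployment name `web`, and the replica and CPU values are hypothetical placeholders; adapt them to your own workload.

```python
import json


def build_hpa_manifest(name, deployment, min_replicas, max_replicas, cpu_percent):
    """Return an autoscaling/v2 HorizontalPodAutoscaler manifest as a dict."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            # The workload whose replica count the autoscaler manages.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            # Scale on average CPU utilization across the pods.
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        "target": {
                            "type": "Utilization",
                            "averageUtilization": cpu_percent,
                        },
                    },
                }
            ],
        },
    }


# Hypothetical example: keep a "web" Deployment between 2 and 10 replicas
# while targeting 70% average CPU utilization.
manifest = build_hpa_manifest("web-hpa", "web", 2, 10, 70)
print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`, assuming the target Deployment exists and its containers declare CPU requests.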

Monitoring and Optimization

Monitoring and optimization play essential roles in a DevOps environment, ensuring the reliability, performance, and efficiency of systems.

- Implementing monitoring and logging solutions for tracking cluster performance and identifying scaling needs.

- Utilizing Kubernetes Dashboard and Prometheus for real-time monitoring and alerting.

- Analyzing performance metrics to identify bottlenecks and optimize cluster resources.

Conclusion

Scaling a Kubernetes cluster involves a combination of manual and automated strategies, supported by effective monitoring and optimization practices. By following these best practices, DevOps teams can ensure that their Kubernetes clusters remain responsive, reliable, and well-suited to handle evolving workloads.

Scale a Kubernetes cluster – FAQs

How can I optimize costs while scaling a Kubernetes cluster?

- Regularly review and adjust auto-scaling rules to match actual usage patterns.

- Leverage reserved instances or spot instances in cloud environments.

- Implement resource limits for pods to prevent overconsumption.
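
The last point can be illustrated with a small sketch that adds up the CPU limits declared by a pod's containers, which is one simple way to see how much CPU a pod is allowed to consume. The container definitions and helper names are hypothetical, and the parser handles only the plain decimal and millicore (`m`) CPU notations.

```python
def parse_cpu_millicores(quantity):
    """Convert a Kubernetes CPU quantity such as "500m" or "1.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    # Plain numbers are whole or fractional CPUs; 1 CPU = 1000 millicores.
    return round(float(quantity) * 1000)


def total_cpu_limit_millicores(containers):
    """Sum the CPU limits of all containers in a pod spec (simplified)."""
    return sum(
        parse_cpu_millicores(c["resources"]["limits"]["cpu"]) for c in containers
    )


# Hypothetical containers with requests (used for scheduling) and
# limits (the ceiling a container may consume).
containers = [
    {
        "name": "app",
        "resources": {
            "requests": {"cpu": "250m", "memory": "256Mi"},
            "limits": {"cpu": "500m", "memory": "512Mi"},
        },
    },
    {
        "name": "sidecar",
        "resources": {
            "requests": {"cpu": "100m", "memory": "64Mi"},
            "limits": {"cpu": "0.2", "memory": "128Mi"},
        },
    },
]

print(total_cpu_limit_millicores(containers))  # 700
```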

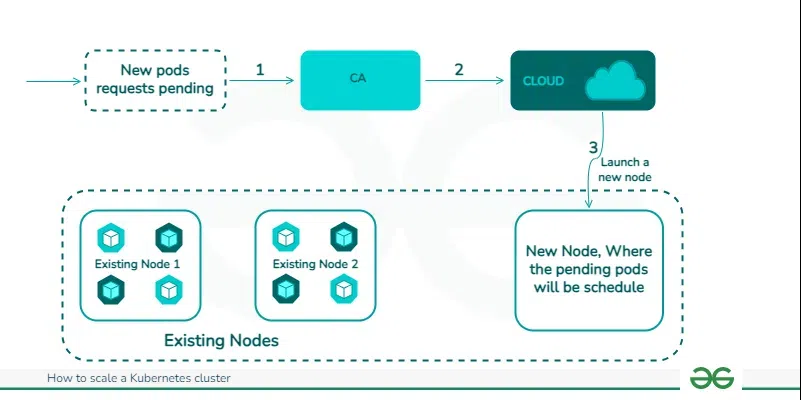

What is Cluster Autoscaling, and when should it be used in Kubernetes?

Cluster Autoscaling automatically adjusts the number of nodes in a Kubernetes cluster based on resource requirements. It is particularly useful in cloud-based deployments (e.g. AWS, GCP, Azure), where it integrates with the cloud provider's auto-scaling groups.

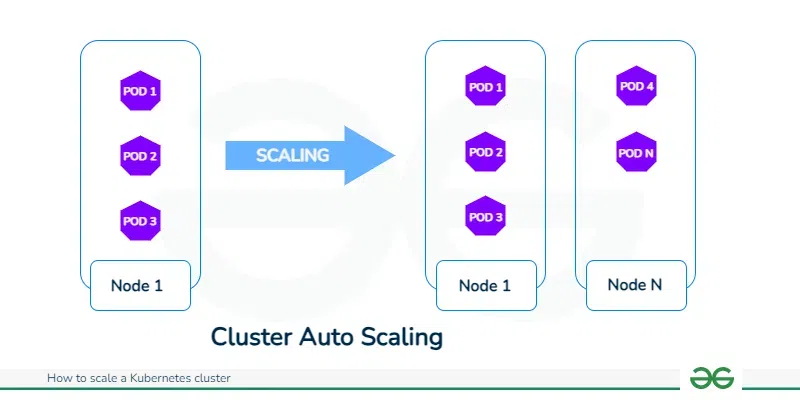

How does Horizontal Pod Autoscaling work in Kubernetes?

Horizontal Pod Autoscaling automatically adjusts the number of pod replicas based on specified metrics. When certain thresholds (e.g. CPU utilization) are exceeded, the HPA increases or decreases the number of pod replicas to maintain the desired metric levels. This ensures efficient resource usage and responsiveness to varying workloads.
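
The core of this calculation can be sketched in a few lines. The Kubernetes documentation describes the HPA target as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with scaling skipped when the ratio is close enough to 1. The function below is a simplified model of that rule only: it ignores stabilization windows, pod readiness, and missing metrics, and its default bounds and parameter names are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified model of the HPA replica calculation."""
    ratio = current_metric / target_metric

    # Within the tolerance band around 1.0, leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas

    # Scale proportionally to how far the metric is from its target.
    desired = math.ceil(current_replicas * ratio)

    # Keep the result inside the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))  # 6
```

With the same target, an average of 63% CPU falls inside the tolerance band and leaves the count at 4, while an average of 30% scales the workload down to 2 replicas.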

What is the difference between horizontal and vertical scaling in Kubernetes?

- Horizontal scaling: Involves adding or removing identical pod replicas to share the load. It is managed using tools such as Horizontal Pod Autoscaling, based on metrics such as CPU or custom application metrics.

- Vertical scaling: Changes the capacity of individual pods or nodes by adjusting CPU, memory, or other resources. For nodes, it is typically achieved by resizing the underlying virtual machines in cloud environments.

Why is scaling important for a Kubernetes cluster?

Kubernetes clusters require scaling to provide flexibility and ensure performance. Scaling allows you to handle increased demand by dynamically adjusting the number of nodes or pods, maintaining responsiveness, and distributing work effectively across the cluster.
