Extending PyTorch with Custom Activation Functions

Last Updated: 08 Jun, 2023

In the context of deep learning and neural networks, activation functions are mathematical functions that are applied to the output of a neuron or a set of neurons. The output of the activation function is then passed on as input to the next layer of neurons.

The purpose of an activation function is to introduce non-linearity into the network. Without an activation function, a neural network would simply be a linear function of its inputs, which would severely limit its ability to model complex patterns and relationships.
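To make the point about non-linearity concrete, here is a minimal sketch. The layer sizes and variable names are arbitrary choices for illustration. It stacks two `nn.Linear` layers with no activation in between and checks that they collapse into a single affine map, then shows that inserting a ReLU breaks that equivalence.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Two linear layers stacked with no activation in between.
fc1 = nn.Linear(4, 8)
fc2 = nn.Linear(8, 3)

x = torch.randn(5, 4)
stacked = fc2(fc1(x))

# The same mapping written as ONE affine layer:
# W = W2 @ W1 and b = W2 @ b1 + b2.
W = fc2.weight @ fc1.weight
b = fc2.weight @ fc1.bias + fc2.bias
single = x @ W.T + b

print(torch.allclose(stacked, single, atol=1e-5))

# Inserting a non-linearity (ReLU here) between the layers
# means the result can no longer be written as that single affine map.
with_relu = fc2(torch.relu(fc1(x)))
print(torch.allclose(with_relu, single, atol=1e-5))
```

However many purely linear layers are stacked, the same collapse applies, which is why the activation between them matters.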

Most activation functions are applied element-wise to the output of each neuron, meaning that the same function is applied independently to each element of the output vector. Common examples include sigmoid, tanh, and ReLU. Softmax is also widely used, but it is not element-wise: it normalizes across a whole vector so that the outputs sum to 1.
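The difference between an element-wise activation and softmax can be seen in a small sketch (the input values are made up for illustration). ReLU transforms each entry on its own, while softmax couples all entries along the chosen dimension.

```python
import torch

x = torch.tensor([[1.0, -2.0, 3.0],
                  [0.5, 0.5, -1.0]])

# Element-wise: each output entry depends only on the matching input entry.
relu_out = torch.relu(x)

# Softmax: each output entry depends on every entry along `dim`,
# and the entries along that dimension sum to 1.
softmax_out = torch.softmax(x, dim=1)

print(relu_out)
print(softmax_out.sum(dim=1))
```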

The choice of activation function can have a significant impact on the performance and behavior of a neural network, and it is often an area of active research and experimentation. Additionally, in some cases, it may be beneficial to define and use custom activation functions that are tailored to the specific needs and characteristics of a given task or dataset.

Here is an example of how to define a custom activation function in PyTorch:

Custom Activation Function: 1 Softplus function

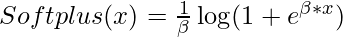

1. Mathematical Formula :

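Let’s say we want to define a custom activation function called “Softplus” that takes a tensor x as input and applies the following function element-wise:

softplus(x) = (1/beta) * log(1 + exp(beta * x))

Here beta is a positive scaling parameter, set to 1 by default in the code that follows. This is a smooth non-linear function with a shape similar to the ReLU activation function. PyTorch also ships a built-in version as nn.Softplus; it is reimplemented here to illustrate the process.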

2. PyTorch Code :

To define this custom activation function in PyTorch, we can write:

Python3

import torch
import torch.nn as nn

class Softplus(nn.Module):
    def __init__(self):
        super(Softplus, self).__init__()

    def forward(self, x, beta=1):
        # softplus(x) = (1/beta) * log(1 + exp(beta * x)), applied element-wise
        return 1/beta * torch.log(1 + torch.exp(beta * x))

Here, we define a new PyTorch module called “Softplus” that inherits from the nn.Module class. The forward method defines how the module should behave when given an input tensor x.
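As a sanity check, the hand-written module can be compared with PyTorch's built-in `torch.nn.functional.softplus`. The sketch below repeats the class so that it runs on its own; the test inputs are arbitrary. It also illustrates one caveat of the direct formula: for large inputs `torch.exp` overflows in float32, whereas the built-in switches to returning `x` above a threshold.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class Softplus(nn.Module):
    def forward(self, x, beta=1):
        return 1 / beta * torch.log(1 + torch.exp(beta * x))

custom = Softplus()

# On a moderate input range the two implementations agree.
x = torch.linspace(-5, 5, 11)
matches_builtin = torch.allclose(custom(x), F.softplus(x), atol=1e-6)
print(matches_builtin)

# For a large input, exp(100) overflows float32, so the naive formula gives inf.
big = torch.tensor([100.0])
print(custom(big))
# The built-in returns x itself once beta * x exceeds its threshold (default 20).
print(F.softplus(big))
```

For production code, the built-in is usually the safer choice; the custom class is mainly useful as a template for functions PyTorch does not provide.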

To plot the graph of this activation function, we can use the following code :

Python3

import matplotlib.pyplot as plt

x = torch.linspace(-5, 5, 100)
k = Softplus()
y = k(x)

plt.plot(x, y)
plt.grid(True)
plt.title('Softplus Function')
plt.xlabel('x')
plt.ylabel('y')
plt.show()

Output :

Figure: Softplus function

This is just one example of how to define a custom activation function in PyTorch. The process may vary depending on the specific function being defined, and it may require additional considerations such as ensuring the function is differentiable for use in backpropagation.
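On the differentiability point: when a custom activation is built only from differentiable tensor operations, autograd derives the backward pass automatically. The sketch below (input values chosen arbitrarily) checks that the gradient autograd computes for Softplus with beta = 1 matches the analytic derivative, which is the sigmoid function.

```python
import torch

x = torch.linspace(-3, 3, 7, requires_grad=True)

# Softplus with beta = 1, written with plain tensor operations.
y = torch.log(1 + torch.exp(x))

# Summing gives a scalar, so backward() fills x.grad with dy_i/dx_i.
y.sum().backward()

# Analytic derivative: d/dx log(1 + exp(x)) = exp(x) / (1 + exp(x)) = sigmoid(x).
analytic = torch.sigmoid(x.detach())
print(torch.allclose(x.grad, analytic, atol=1e-6))
```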

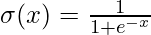

Custom Activation Function: 2 Sigmoid Function

1. Mathematical Formula :

The Sigmoid activation function is defined as:

sigmoid(x) = 1 / (1 + exp(-x))

2. PyTorch Code :

To define this custom activation function in PyTorch, we can write:

Python3

import torch
import torch.nn as nn

class sigmoid(nn.Module):
    def __init__(self):
        super(sigmoid, self).__init__()

    def forward(self, x):
        # sigmoid(x) = 1 / (1 + exp(-x)), applied element-wise
        return 1/(1 + torch.exp(-x))

To plot the graph of this activation function, we can use the following code:

Python3

import matplotlib.pyplot as plt

x = torch.linspace(-10, 10, 100)
k = sigmoid()
y = k(x)

plt.plot(x, y)
plt.grid(True)
plt.title('Sigmoid Function')
plt.xlabel('x')
plt.ylabel('y')
plt.show()

Output :

Figure: Sigmoid function
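A few properties of the sigmoid can be checked numerically. The sketch below repeats the class so that it is self-contained, and the variable names are illustrative. It compares the custom module with the built-in `torch.sigmoid`, confirms that the outputs stay strictly between 0 and 1 on this range, and checks the symmetry sigmoid(-x) = 1 - sigmoid(x).

```python
import torch
import torch.nn as nn

class sigmoid(nn.Module):
    def forward(self, x):
        return 1 / (1 + torch.exp(-x))

k = sigmoid()
x = torch.linspace(-10, 10, 101)
y = k(x)

# Agreement with the built-in implementation.
same_as_builtin = torch.allclose(y, torch.sigmoid(x), atol=1e-6)

# Outputs lie strictly inside (0, 1) on this input range.
in_unit_interval = bool(((y > 0) & (y < 1)).all())

# Symmetry: sigmoid(-x) = 1 - sigmoid(x).
symmetric = torch.allclose(k(-x), 1 - y, atol=1e-6)

print(same_as_builtin, in_unit_interval, symmetric)
```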

Custom Activation Function: 3 Swish activation function

1. Mathematical Formula :

The Swish activation function is defined as:

swish_activation(x) = x * sigmoid(x)

2. PyTorch Code :

Here’s the PyTorch code to define the Swish activation function :

Python3

import torch
import torch.nn as nn

class SwishActivation(nn.Module):
    def __init__(self):
        super(SwishActivation, self).__init__()

    def forward(self, x):
        # swish(x) = x * sigmoid(x), applied element-wise
        sigmoid = 1/(1 + torch.exp(-x))
        return x * sigmoid

Here’s the PyTorch code to plot the Swish activation function:

Python3

import matplotlib.pyplot as plt

x = torch.linspace(-10, 10, 100)
k = SwishActivation()
y = k(x)

plt.plot(x, y)
plt.grid(True)
plt.title('Swish Activation Function')
plt.xlabel('x')
plt.ylabel('y')
plt.show()

Output:

Figure: Swish activation function
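One reason to write an activation as an `nn.Module` is that it can carry its own trainable parameters. The following is a sketch of a hypothetical variant, `LearnableSwish`, which computes x * sigmoid(beta * x) with a learnable `beta`. The class name and parameter are illustrative assumptions and are not part of the code above. With beta = 1 it should match PyTorch's built-in `F.silu`, assuming a PyTorch version recent enough to include it.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class LearnableSwish(nn.Module):
    """Hypothetical variant: x * sigmoid(beta * x) with a trainable beta."""

    def __init__(self, beta=1.0):
        super().__init__()
        # Registering beta as a Parameter lets the optimizer update it.
        self.beta = nn.Parameter(torch.tensor(float(beta)))

    def forward(self, x):
        return x * torch.sigmoid(self.beta * x)

act = LearnableSwish()
x = torch.linspace(-10, 10, 101)

# With beta = 1 this is the standard Swish, which PyTorch exposes as SiLU.
matches_silu = torch.allclose(act(x), F.silu(x), atol=1e-6)
print(matches_silu)

# beta receives a gradient like any other model parameter.
act(x).sum().backward()
print(act.beta.grad is not None)
```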

Training a Neural Network with Custom Activation Functions using PyTorch :

The custom activation functions defined above can be used like any built-in layer when building and training a model.

Use the Swish activation function in PyTorch to train a simple neural network on the MNIST dataset :

Steps:

- Import the necessary libraries

- Define the custom Swish activation function

- Define the PyTorch neural network model

- Load and prepare the MNIST dataset

- Initialize the model and define the loss function and optimizer.

- Train the model and plot the loss vs iterations curve.

Python3

import torch
import torch.nn as nn
import torch.optim as optim
import torchvision.datasets as datasets
import torchvision.transforms as transforms
import matplotlib.pyplot as plt

# Custom Swish activation: x * sigmoid(x)
class SwishActivation(nn.Module):
    def __init__(self):
        super(SwishActivation, self).__init__()

    def forward(self, x):
        sigmoid = 1/(1 + torch.exp(-x))
        return x * sigmoid

# Two-layer fully connected network for 28x28 MNIST images
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.fc1 = nn.Linear(784, 128)
        self.activation = SwishActivation()
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = x.view(-1, 784)  # flatten each image to a 784-element vector
        x = self.activation(self.fc1(x))
        x = self.fc2(x)      # raw logits; CrossEntropyLoss applies log-softmax
        return x

# Load the MNIST training set
train_dataset = datasets.MNIST(root='./data',
                               train=True,
                               download=True,
                               transform=transforms.ToTensor())
train_loader = torch.utils.data.DataLoader(train_dataset,
                                           batch_size=128,
                                           shuffle=True)

# Model, loss function and optimizer
model = Net()
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01)

# Train for 10 epochs
loss_list = []
for epoch in range(10):
    running_loss = 0.0
    for i, (inputs, labels) in enumerate(train_loader, 0):
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
    # running_loss is the sum of the batch losses over the whole epoch
    loss_list.append(running_loss)
    print('Epoch %d loss: %.3f' % (epoch + 1, running_loss))

# loss_list holds one point per epoch
plt.plot(loss_list)
plt.title('Loss vs Iterations')
plt.xlabel('Iterations')
plt.ylabel('Loss')
plt.show()

Output:

Epoch 1 loss: 921.342

Epoch 2 loss: 459.694

Epoch 3 loss: 281.649

Epoch 4 loss: 227.518

Epoch 5 loss: 201.599

Epoch 6 loss: 186.339

Epoch 7 loss: 176.337

Epoch 8 loss: 169.018

Epoch 9 loss: 163.496

Epoch 10 loss: 158.999

Figure: Loss vs Iterations

The learning rate is a hyperparameter that controls the step size at each iteration of gradient descent. In this code, the learning rate is set to 0.01 for the stochastic gradient descent optimizer. The choice of learning rate can significantly affect the training process of the neural network. If the learning rate is too high, the optimizer may overshoot the optimal solution, resulting in instability or divergence. If the learning rate is too low, the optimizer may converge too slowly, leading to long training times.
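The effect of the learning rate can be illustrated on a toy problem that is unrelated to MNIST. The sketch below runs plain gradient descent on f(w) = w**2; the function, the starting point, and the three learning rates are assumptions picked for illustration. Each update multiplies w by (1 - 2 * lr), so a small rate shrinks w slowly, a moderate rate shrinks it quickly, and a rate above 1 makes |w| grow at every step.

```python
def gradient_descent(lr, steps=20, w=1.0):
    """Minimise f(w) = w**2 (gradient 2*w) with a fixed learning rate."""
    for _ in range(steps):
        w = w - lr * 2 * w
    return w

# Distance from the optimum (w = 0) after 20 steps for each learning rate.
for lr in (0.01, 0.4, 1.1):
    print(lr, abs(gradient_descent(lr)))
```

The same qualitative behaviour is what one would look for when tuning `lr` in the optimizer, although real loss surfaces are far less regular than this one.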

Overfitting occurs when a neural network model becomes too complex, and it fits the training data too well but generalizes poorly to new data. Overfitting can be observed when the training loss continues to decrease, but the validation loss starts to increase. In this code, we do not have a separate validation set to evaluate the model’s performance on unseen data. Still, overfitting can be avoided by regularization techniques such as early stopping, dropout, or weight decay.
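A validation set can be carved out of the training data with `torch.utils.data.random_split`. The sketch below is one possible way to do it. To stay self-contained it uses a synthetic stand-in dataset with MNIST-like shapes instead of downloading MNIST, and `evaluate` is a hypothetical helper name. The model uses the built-in `nn.SiLU`, which computes the same function as Swish.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset, random_split

torch.manual_seed(0)

# Synthetic stand-in with MNIST-like shapes (assumption: in the real
# script this would be the MNIST training dataset).
full_dataset = TensorDataset(torch.randn(1000, 784),
                             torch.randint(0, 10, (1000,)))

# Hold out 20% of the samples for validation.
train_set, val_set = random_split(full_dataset, [800, 200])
val_loader = DataLoader(val_set, batch_size=128)

def evaluate(model, loader, criterion):
    """Hypothetical helper: mean per-sample loss over `loader`, without gradients."""
    model.eval()
    total, count = 0.0, 0
    with torch.no_grad():
        for inputs, labels in loader:
            loss = criterion(model(inputs), labels)
            total += loss.item() * inputs.size(0)
            count += inputs.size(0)
    model.train()
    return total / count

model = nn.Sequential(nn.Linear(784, 128), nn.SiLU(), nn.Linear(128, 10))
val_loss = evaluate(model, val_loader, nn.CrossEntropyLoss())
print(len(train_set), len(val_set), val_loss)
```

Calling such a helper once per epoch and comparing its value with the training loss is a simple way to spot the divergence between the two that signals overfitting.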

Underfitting occurs when a neural network model is too simple to capture the underlying patterns in the data. Underfitting can be observed when the training loss and validation loss are both high. In this code, we train the neural network for ten epochs, which may not be enough to achieve a good fit to the data. Increasing the number of epochs or adding more layers to the neural network could potentially improve the model’s performance.
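If underfitting is suspected, one option mentioned above is to add layers. The sketch below shows a hypothetical helper, `make_mlp`, that builds a fully connected network of configurable depth. The helper name, the hidden sizes, and the use of the built-in `nn.SiLU` (equivalent to Swish) are illustrative assumptions rather than part of the original model.

```python
import torch
import torch.nn as nn

def make_mlp(hidden_sizes, in_features=784, num_classes=10):
    """Hypothetical helper: Linear + SiLU per hidden size, then an output layer."""
    layers = [nn.Flatten()]
    prev = in_features
    for size in hidden_sizes:
        layers += [nn.Linear(prev, size), nn.SiLU()]
        prev = size
    layers.append(nn.Linear(prev, num_classes))
    return nn.Sequential(*layers)

def count_params(model):
    return sum(p.numel() for p in model.parameters())

shallow = make_mlp([128])          # same layer sizes as the Net class above
deeper = make_mlp([256, 128, 64])  # more capacity

batch = torch.randn(32, 1, 28, 28)  # a dummy batch shaped like MNIST images
print(deeper(batch).shape, count_params(shallow), count_params(deeper))
```

More capacity is not automatically better: a larger network is also more prone to overfitting, so it is best evaluated against held-out data.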

Applications of Extending PyTorch with Custom Activation Functions :

- Novel activation functions: Extending PyTorch with custom activation functions allows researchers and practitioners to experiment with new activation functions and test their effectiveness in different applications.

- Customized models: Custom activation functions can be used to create customized models that better suit specific tasks or data distributions.

- Domain-specific models: Custom activation functions can be used to create models that are specifically designed for a particular domain or application, such as computer vision, speech recognition, or natural language processing.
