Epoch in Neural Network using R

Last Updated: 26 Oct, 2023

Deep Learning is a subfield of machine learning and artificial intelligence that focuses on training neural networks to perform various tasks, such as image recognition, natural language processing, and reinforcement learning. When training a deep learning model, the concept of an “epoch” is fundamental.

Epoch in Deep Learning using R

An epoch is one complete pass through the entire training dataset during the training of a neural network. In R, you can define, train, and evaluate such models with the keras package, which provides an interface to Keras and TensorFlow. Within a single epoch, the model's parameters (weights and biases) are updated to minimize a specified loss function. This happens many times per epoch, typically once per batch of training samples, rather than once per pass.

Here’s an overview of the concepts surrounding an epoch in deep learning:

- Data Iteration: In the training phase of a deep learning model, you work with a dataset of input samples (often referred to as X) and corresponding target labels (often referred to as Y). The data is usually split into a training set and, in many cases, separate validation and test sets.

- Training Loop: To train a neural network, you iterate through your training dataset multiple times. Each complete iteration through the entire dataset is called an “epoch.” During each epoch, the model sees and processes every sample in the training dataset exactly once.

- Parameter Updates: At the heart of deep learning is the process of updating the model’s parameters, which are represented by weights and biases. These parameters are adjusted to minimize a specified loss function, which measures the difference between the model’s predictions and the actual target values.

- Batch Processing: Typically, you don’t process the entire dataset in a single step, because doing so can demand too much memory and computation. Instead, you break the dataset into smaller subsets called “batches.” The model processes one batch at a time, computes predictions, and updates its parameters based on the gradients of the loss on that batch. As a result, a single epoch consists of many parameter updates, one per batch.

- Multiple Epochs: Deep learning models usually need multiple epochs to reach good performance. By iterating through the dataset multiple times, the model has the opportunity to learn and adjust its parameters to better fit the underlying patterns in the data. The number of epochs is a hyperparameter that you can adjust.

- Validation and Early Stopping: During training, it’s common to have a separate validation dataset. This dataset is not used to update the parameters; it is only used to monitor the model’s performance during training. Early stopping is a technique that stops training once the model’s performance on the validation dataset stops improving or starts to degrade, which helps prevent overfitting.

- Learning Rate: The learning rate is another important hyperparameter that determines the step size by which the model updates its parameters. It affects the rate at which the model converges to a solution. Proper tuning of the learning rate is critical for successful training.

- Convergence: The number of epochs required for a model to converge depends on several factors, including the complexity of the problem, the architecture of the neural network, the size of the dataset, and the learning rate. A model is said to have “converged” when its parameters have settled into values that provide satisfactory predictions.

- Testing: After training is complete, the model’s performance is evaluated on a separate test dataset to assess how well it generalizes to unseen data. Evaluation metrics such as accuracy, precision, recall, and F1-score are commonly used to measure the model’s performance.

- Hyperparameter Tuning: In addition to the number of epochs and the learning rate, other hyperparameters, such as the network architecture, batch size, and regularization techniques, are adjusted and fine-tuned to optimize the model’s performance.
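The training loop described above can be sketched from scratch in base R, without Keras. This is a minimal illustration under assumed settings, not how Keras works internally. It uses:

- synthetic data generated from y = 3x - 2 plus noise;
- a one-feature linear model;
- plain mini-batch gradient descent on mean squared error;
- early stopping with a hypothetical patience of 3 epochs.

Each pass of the outer loop is one epoch. Each pass of the inner loop processes one shuffled batch and makes one parameter update.

```r
# Minimal sketch: epochs, shuffled mini-batches, per-batch updates and
# early stopping for a linear model, written in base R (no Keras).
set.seed(42)

# Synthetic data (assumption for illustration): y = 3x - 2 + noise
n <- 1000
x <- runif(n, -1, 1)
y <- 3 * x - 2 + rnorm(n, sd = 0.1)

# Hold out the last 20% of samples for validation
n_train <- floor(0.8 * n)
train_idx <- seq_len(n_train)
val_idx <- (n_train + 1):n

# Mean squared error of the model w * x + b on the given sample indices
mse <- function(w, b, idx) mean((w * x[idx] + b - y[idx])^2)

w <- 0; b <- 0                 # parameters: one weight and one bias
learning_rate <- 0.1
batch_size <- 32
max_epochs <- 100
patience <- 3                  # epochs to wait for validation loss to improve
best_val <- Inf
epochs_without_improvement <- 0
epoch_log <- data.frame(epoch = integer(0), loss = numeric(0), val_loss = numeric(0))

for (epoch in seq_len(max_epochs)) {            # one iteration = one epoch
  shuffled <- sample(train_idx)                 # new sample order every epoch
  batches <- split(shuffled, ceiling(seq_along(shuffled) / batch_size))
  for (batch in batches) {                      # one parameter update per batch
    err <- w * x[batch] + b - y[batch]
    grad_w <- 2 * mean(err * x[batch])          # d(MSE)/dw on this batch
    grad_b <- 2 * mean(err)                     # d(MSE)/db on this batch
    w <- w - learning_rate * grad_w
    b <- b - learning_rate * grad_b
  }
  val_loss <- mse(w, b, val_idx)                # monitor unseen validation data
  epoch_log <- rbind(epoch_log,
                     data.frame(epoch = epoch, loss = mse(w, b, train_idx), val_loss = val_loss))
  # Early stopping: quit if validation loss has not improved for `patience` epochs
  if (val_loss < best_val - 1e-6) {
    best_val <- val_loss
    epochs_without_improvement <- 0
  } else {
    epochs_without_improvement <- epochs_without_improvement + 1
    if (epochs_without_improvement >= patience) break
  }
}

cat("Batches (updates) per epoch:", length(batches), "\n")
cat("Stopped after", nrow(epoch_log), "epochs; w =", round(w, 3), "b =", round(b, 3), "\n")
```

With 800 training samples and a batch size of 32, each epoch here performs 25 updates. Keras's `fit()` runs conceptually similar outer and inner loops, with an optimizer such as Adadelta in place of the plain gradient step. The keras package's `callback_early_stopping()` offers patience-based stopping like the logic above.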

Let’s walk through these concepts in R by training a simple classifier on the MNIST handwritten-digit dataset.

Loading and Preprocessing Data

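In any deep learning task, you begin by preparing your data. This includes loading your training data, often represented as a set of input features (X) and corresponding target labels (Y). The data might need preprocessing, normalization, and transformation to make it suitable for training. Here we use MNIST, which the keras package can download with `dataset_mnist()`. It contains 60,000 training and 10,000 test images of handwritten digits, each a 28 × 28 grid of pixel intensities from 0 to 255.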

```r
library(keras)

# Load MNIST: 28 x 28 grayscale digit images with integer labels 0-9
mnist <- dataset_mnist()
x_train <- mnist$train$x
y_train <- mnist$train$y
x_test <- mnist$test$x
y_test <- mnist$test$y
```
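The loading step above keeps the raw pixel intensities, which are integers from 0 to 255. A common normalization step is to rescale them to the range [0, 1].

The sketch below uses a hypothetical helper, `scale_pixels()`. It is demonstrated on a small random array standing in for `mnist$train$x`, so it runs without downloading any data. If you apply it to `x_train` and `x_test`, expect your loss and accuracy to differ from the training log shown later in this article.

```r
# Hypothetical helper: rescale 0-255 pixel intensities to [0, 1]
scale_pixels <- function(x) {
  storage.mode(x) <- "double"   # make sure we divide floating-point values
  x / 255                        # array dimensions are preserved
}

# Stand-in for mnist$train$x: 2 random "images" of 28 x 28 pixels
set.seed(1)
fake_images <- array(sample(0:255, 2 * 28 * 28, replace = TRUE), dim = c(2, 28, 28))
scaled <- scale_pixels(fake_images)
print(range(scaled))

# With the real data loaded above you would write:
# x_train <- scale_pixels(x_train)
# x_test  <- scale_pixels(x_test)
```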

Next, convert the integer target labels (digits 0 to 9) to one-hot encoded format:

```r
y_train <- to_categorical(y_train, num_classes = 10)
y_test <- to_categorical(y_test, num_classes = 10)
```
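To see what one-hot encoding produces, here is a base-R sketch with a hypothetical helper, `one_hot()`. It is an illustration, not the keras implementation. Each label becomes a row of zeros with a single 1 in the column for its class, which is the layout `to_categorical()` returns. Because the digits are 0-based and R columns are 1-based, digit d lands in column d + 1.

```r
# Hypothetical base-R equivalent of one-hot encoding 0-based class labels
one_hot <- function(labels, num_classes) {
  encoded <- matrix(0, nrow = length(labels), ncol = num_classes)
  # Set one cell per row: row i, column (label + 1)
  encoded[cbind(seq_along(labels), labels + 1)] <- 1
  encoded
}

encoded <- one_hot(c(5, 0, 9), num_classes = 10)
print(encoded)
```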

Defining the Neural Network Model

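The neural network model is the heart of deep learning. You define the architecture of your model, including the number of layers, the types of layers (e.g., dense, convolutional, recurrent), the number of neurons in each layer, activation functions, and more. In R, you can use Keras to build and customize your neural network.

The model below works in four stages. It flattens each 28 × 28 image into a 784-element vector, then passes it through a dense layer of 128 ReLU units. Dropout randomly zeroes half of those units during training. A final 10-unit softmax layer outputs one probability per digit class.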

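```r
model <- keras_model_sequential()

model %>%
  layer_flatten(input_shape = c(28, 28)) %>%        # 28 x 28 image -> 784-element vector
  layer_dense(units = 128, activation = 'relu') %>% # hidden layer
  layer_dropout(rate = 0.5) %>%                     # drop 50% of units during training
  layer_dense(units = 10, activation = 'softmax')   # one probability per digit class
```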
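To make "weights and biases" concrete, here is a hand count of the trainable parameters in the model above. This is plain base-R arithmetic. It assumes the standard dense-layer layout: one weight per input-unit pair plus one bias per unit. Calling `summary(model)` on the Keras model should report the same per-layer counts.

```r
# A dense layer has (inputs x units) weights plus one bias per unit
dense_params <- function(inputs, units) inputs * units + units

flatten_out <- 28 * 28                              # 784 values after layer_flatten()
hidden_params <- dense_params(flatten_out, 128)     # 784 * 128 + 128 = 100480
output_params <- dense_params(128, 10)              # 128 * 10 + 10  = 1290
# layer_flatten() and layer_dropout() have no trainable parameters
total_params <- hidden_params + output_params
cat("Trainable parameters:", total_params, "\n")
```

Every one of these 101,770 values is adjusted a little on each batch, many times per epoch.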

Compiling the Model

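After defining your model, you need to compile it. Compiling involves specifying critical components such as the optimizer, loss function, and evaluation metrics. The choice of optimizer and loss function depends on the specific problem you’re trying to solve (e.g., classification, regression). For this multi-class classification task, the code uses three settings:

- categorical cross-entropy as the loss, which matches the softmax output and one-hot labels;
- the Adadelta optimizer;
- accuracy as the metric.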

```r
model %>% compile(
  loss = 'categorical_crossentropy',
  optimizer = optimizer_adadelta(),
  metrics = c('accuracy')
)
```
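The setting `loss = 'categorical_crossentropy'` scores each prediction by the negative log of the probability the model assigns to the true class, averaged over the samples in a batch. The base-R sketch below computes it for a single sample. The three-class logits are made-up values chosen only for illustration.

```r
# Softmax turns raw scores (logits) into probabilities that sum to 1
softmax <- function(z) {
  e <- exp(z - max(z))   # subtract the max for numerical stability
  e / sum(e)
}

logits <- c(2.0, 1.0, 0.1)   # hypothetical raw outputs for 3 classes
p <- softmax(logits)         # predicted probabilities
target <- c(1, 0, 0)         # one-hot target: the true class is the first one

# Categorical cross-entropy for one sample: -sum(target * log(p))
loss <- -sum(target * log(p))
print(round(p, 3))
print(round(loss, 3))
```

Only the true class's probability contributes to the loss. A confident correct prediction gives a loss near 0, and the loss grows without bound as that probability approaches 0.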

Training the Model

The training phase feeds the training data to the model in batches. For each batch, the model computes predictions and compares them with the actual targets using the loss function. Backpropagation then computes the gradients of the loss, and the optimizer uses them to adjust the parameters. One epoch ends when every training sample has been processed once.

Below, `fit()` trains for 20 epochs with a batch size of 128. Setting `validation_split = 0.2` holds out the last 20% of the training samples for validation.

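```r
num_epochs <- 20

history <- model %>% fit(
  x_train, y_train,
  epochs = num_epochs,       # number of full passes over the training data
  batch_size = 128,          # samples per parameter update
  validation_split = 0.2     # hold out the last 20% of samples for validation
)
```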

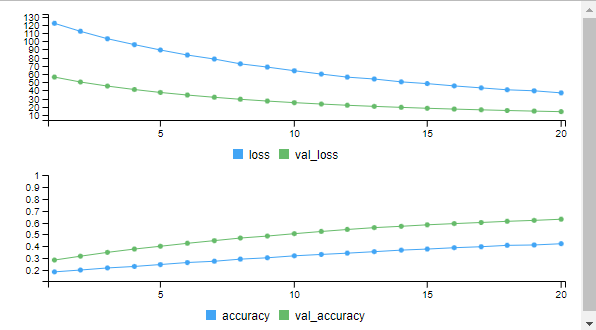

Output:

```
Epoch 1/20
375/375 [==============================] - 6s 17ms/step - loss: 121.8334 - accuracy: 0.1830 - val_loss: 56.3338 - val_accuracy: 0.2833
Epoch 2/20
375/375 [==============================] - 6s 16ms/step - loss: 112.0906 - accuracy: 0.1986 - val_loss: 50.3320 - val_accuracy: 0.3159
Epoch 3/20
375/375 [==============================] - 6s 17ms/step - loss: 103.0870 - accuracy: 0.2163 - val_loss: 45.3789 - val_accuracy: 0.3484
Epoch 4/20
375/375 [==============================] - 7s 19ms/step - loss: 95.9009 - accuracy: 0.2289 - val_loss: 41.1701 - val_accuracy: 0.3765
Epoch 5/20
375/375 [==============================] - 6s 17ms/step - loss: 89.3483 - accuracy: 0.2452 - val_loss: 37.5932 - val_accuracy: 0.4002
Epoch 6/20
375/375 [==============================] - 6s 16ms/step - loss: 83.1961 - accuracy: 0.2623 - val_loss: 34.4462 - val_accuracy: 0.4252
Epoch 7/20
375/375 [==============================] - 6s 16ms/step - loss: 78.3173 - accuracy: 0.2729 - val_loss: 31.7037 - val_accuracy: 0.4479
Epoch 8/20
375/375 [==============================] - 6s 17ms/step - loss: 72.4743 - accuracy: 0.2909 - val_loss: 29.2730 - val_accuracy: 0.4698
Epoch 9/20
375/375 [==============================] - 6s 17ms/step - loss: 68.5124 - accuracy: 0.3024 - val_loss: 27.1139 - val_accuracy: 0.4866
Epoch 10/20
375/375 [==============================] - 6s 17ms/step - loss: 64.0775 - accuracy: 0.3191 - val_loss: 25.1891 - val_accuracy: 0.5067
Epoch 11/20
375/375 [==============================] - 6s 16ms/step - loss: 59.9963 - accuracy: 0.3309 - val_loss: 23.4935 - val_accuracy: 0.5254
Epoch 12/20
375/375 [==============================] - 6s 16ms/step - loss: 56.2695 - accuracy: 0.3416 - val_loss: 21.9847 - val_accuracy: 0.5423
Epoch 13/20
375/375 [==============================] - 6s 16ms/step - loss: 53.9862 - accuracy: 0.3542 - val_loss: 20.6376 - val_accuracy: 0.5581
Epoch 14/20
375/375 [==============================] - 7s 18ms/step - loss: 50.5103 - accuracy: 0.3674 - val_loss: 19.4278 - val_accuracy: 0.5694
Epoch 15/20
375/375 [==============================] - 6s 16ms/step - loss: 48.3674 - accuracy: 0.3764 - val_loss: 18.3255 - val_accuracy: 0.5820
Epoch 16/20
375/375 [==============================] - 6s 16ms/step - loss: 45.5798 - accuracy: 0.3878 - val_loss: 17.3281 - val_accuracy: 0.5922
Epoch 17/20
375/375 [==============================] - 6s 16ms/step - loss: 43.1677 - accuracy: 0.3962 - val_loss: 16.4330 - val_accuracy: 0.6017
Epoch 18/20
375/375 [==============================] - 6s 17ms/step - loss: 40.8346 - accuracy: 0.4079 - val_loss: 15.6100 - val_accuracy: 0.6116
Epoch 19/20
375/375 [==============================] - 6s 16ms/step - loss: 39.6255 - accuracy: 0.4110 - val_loss: 14.8616 - val_accuracy: 0.6196
Epoch 20/20
375/375 [==============================] - 6s 16ms/step - loss: 37.0921 - accuracy: 0.4216 - val_loss: 14.1825 - val_accuracy: 0.6293
```
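The "375/375" on each log line is the number of batches (parameter updates) completed in that epoch. It follows from the `fit()` settings. With `validation_split = 0.2`, 12,000 of the 60,000 MNIST training images are held out, which leaves 48,000 for training. At 128 samples per batch, that gives 48,000 / 128 = 375 steps. A quick check of the arithmetic in base R:

```r
# Batches (steps) per epoch for the fit() call in this article
n_samples <- 60000            # MNIST training images
validation_split <- 0.2
batch_size <- 128
num_epochs <- 20

n_val <- n_samples * validation_split               # 12000 images held out for validation
n_train <- n_samples - n_val                        # 48000 images used for training
steps_per_epoch <- ceiling(n_train / batch_size)    # a partial last batch still counts as a step
total_updates <- steps_per_epoch * num_epochs       # parameter updates across all epochs
cat("Steps per epoch:", steps_per_epoch, "| total updates:", total_updates, "\n")
```

So 20 epochs amount to 7,500 parameter updates. The log also shows loss falling and accuracy rising every epoch. Still, a validation accuracy of about 63% after 20 epochs is low for MNIST. The unscaled 0 to 255 pixel inputs and the optimizer's learning rate are plausible places to start tuning.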


Evaluating Model Performance

Once training is complete, you can evaluate the model’s performance on the separate test dataset. This helps you assess how well your model generalizes to unseen data. `evaluate()` returns the loss followed by the metrics specified in `compile()`, which here is accuracy. Other metrics such as precision, recall, and F1-score are also commonly used to measure performance.

```r
# evaluate() returns the loss followed by the compiled metrics (here, accuracy)
eval_result <- model %>% evaluate(x_test, y_test)
cat("Test loss:", eval_result[[1]], "\n")
cat("Test accuracy:", eval_result[[2]], "\n")
```

Output:

```
313/313 [==============================] - 2s 6ms/step - loss: 14.1852 - accuracy: 0.6398
Test loss: 14.18524
Test accuracy: 0.6398
```

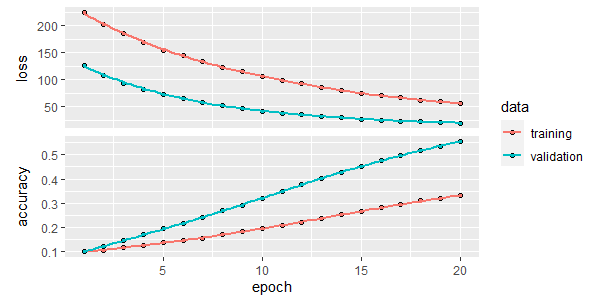
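`evaluate()` above reports only the loss and the accuracy configured in `compile()`. Precision, recall, and F1-score can be computed from the true and predicted class labels. The base-R sketch below uses a hypothetical helper, `classification_metrics()`, and small made-up label vectors so it runs on its own. The commented lines at the end show how you might apply it to the MNIST model; they are an untested assumption.

```r
# Hypothetical helper: per-class precision, recall and F1 from label vectors
classification_metrics <- function(y_true, y_pred, classes) {
  # Confusion matrix: rows = true class, columns = predicted class
  cm <- table(actual = factor(y_true, levels = classes),
              predicted = factor(y_pred, levels = classes))
  tp <- diag(cm)                   # correctly classified counts per class
  precision <- tp / colSums(cm)    # NaN for a class that is never predicted
  recall <- tp / rowSums(cm)       # NaN for a class absent from y_true
  f1 <- 2 * precision * recall / (precision + recall)
  list(confusion = cm,
       accuracy = sum(tp) / sum(cm),
       precision = precision,
       recall = recall,
       f1 = f1,
       macro_f1 = mean(f1))        # unweighted average over classes
}

# Small made-up labels so the example runs without a trained model
y_true <- c(0, 1, 2, 2, 1, 0, 2, 1)
y_pred <- c(0, 2, 2, 2, 1, 0, 1, 1)
metrics <- classification_metrics(y_true, y_pred, classes = 0:2)
print(metrics$confusion)
print(round(unlist(metrics[c("accuracy", "macro_f1")]), 3))

# With the MNIST model from this article (assumption, not run here):
# probs <- model %>% predict(x_test)                 # 10000 x 10 probabilities
# y_hat <- max.col(probs, ties.method = "first") - 1 # most likely digit per row
# classification_metrics(mnist$test$y, y_hat, classes = 0:9)
```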

Visualizing Training Progress

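The `history` object returned by `fit()` records the loss and metrics for every epoch. Calling `plot(history)` draws the training and validation curves, so you can see how the model improves from epoch to epoch.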

Output:

[Figure: training history plot (Epoch using r)]
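If you prefer not to rely on the keras plotting method, the same curves can be drawn with base R graphics. This sketch re-plots the per-epoch values printed in the training log earlier in this article, copied by hand, so it runs without re-training. For your own run, the values should be available in `history$metrics` (for example `history$metrics$val_loss`); treat that access path as an assumption to check against your keras version.

```r
# Per-epoch values copied from the training log above
epoch <- 1:20
loss <- c(121.8334, 112.0906, 103.0870, 95.9009, 89.3483, 83.1961, 78.3173,
          72.4743, 68.5124, 64.0775, 59.9963, 56.2695, 53.9862, 50.5103,
          48.3674, 45.5798, 43.1677, 40.8346, 39.6255, 37.0921)
val_loss <- c(56.3338, 50.3320, 45.3789, 41.1701, 37.5932, 34.4462, 31.7037,
              29.2730, 27.1139, 25.1891, 23.4935, 21.9847, 20.6376, 19.4278,
              18.3255, 17.3281, 16.4330, 15.6100, 14.8616, 14.1825)
accuracy <- c(0.1830, 0.1986, 0.2163, 0.2289, 0.2452, 0.2623, 0.2729, 0.2909,
              0.3024, 0.3191, 0.3309, 0.3416, 0.3542, 0.3674, 0.3764, 0.3878,
              0.3962, 0.4079, 0.4110, 0.4216)
val_accuracy <- c(0.2833, 0.3159, 0.3484, 0.3765, 0.4002, 0.4252, 0.4479, 0.4698,
                  0.4866, 0.5067, 0.5254, 0.5423, 0.5581, 0.5694, 0.5820, 0.5922,
                  0.6017, 0.6116, 0.6196, 0.6293)

op <- par(mfrow = c(2, 1), mar = c(4, 4, 1, 1))   # two stacked panels

# Panel 1: loss per epoch
plot(epoch, loss, type = "b", ylim = range(loss, val_loss), ylab = "loss")
lines(epoch, val_loss, type = "b", col = "blue")
legend("topright", legend = c("training", "validation"),
       col = c("black", "blue"), lty = 1)

# Panel 2: accuracy per epoch
plot(epoch, accuracy, type = "b", ylim = c(0, 1), ylab = "accuracy")
lines(epoch, val_accuracy, type = "b", col = "blue")
legend("topleft", legend = c("training", "validation"),
       col = c("black", "blue"), lty = 1)

par(op)                                          # restore previous settings
```

The plot makes one pattern easy to see: validation accuracy stays above training accuracy in every epoch. This is not unusual with dropout. Dropout is active while the training metrics are computed but switched off for validation.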

Conclusion

In summary, an epoch is a critical concept in deep learning, representing a complete iteration through the training dataset. It allows the model to learn from the data, adjust its parameters, and converge towards a solution. The number of epochs, along with other hyperparameters, plays a crucial role in the successful training of deep learning models using R or any other programming language.
