PyTorch provides a flexible framework for building deep neural networks (DNNs). Choosing a suitable weight initialization for your model is an important part of designing an efficient DNN: the initial weights strongly influence how well the network learns from its input and whether it converges to a good solution. In this post, we discuss why weight initialization matters and give advice for choosing an appropriate initialization for your deep neural network in PyTorch.

Understanding the Significance of Weight Initialization

Before delving into the details of weight initialization in PyTorch, it’s important to understand why it matters. Weight initialization determines your neural network’s starting point, which affects its training dynamics and final performance. Poorly initialized weights can cause slow convergence, vanishing or exploding gradients, and poor solutions. To address these issues, PyTorch includes several weight initialization strategies, each suited to different types of neural networks and activation functions.
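To make the effect concrete, here is a minimal sketch (not part of the original walkthrough) that pushes random data through a stack of tanh layers and compares a deliberately tiny initialization with Xavier initialization. The depth, the width, and the helper name `final_activation_std` are illustrative assumptions.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

def final_activation_std(init_fn, depth=20, width=256):
    """Pass random input through `depth` tanh layers; return the std of the last activations."""
    x = torch.randn(512, width)
    with torch.no_grad():
        for _ in range(depth):
            layer = nn.Linear(width, width, bias=False)
            init_fn(layer.weight)          # initialize this layer's weights in place
            x = torch.tanh(layer(x))
    return x.std().item()

# Weights that are far too small: the signal shrinks at every layer.
tiny_std = final_activation_std(lambda w: nn.init.normal_(w, mean=0.0, std=0.01))
# Xavier/Glorot initialization keeps the signal at a usable scale.
xavier_std = final_activation_std(nn.init.xavier_normal_)

print(f"tiny init   -> final activation std: {tiny_std:.3e}")
print(f"xavier init -> final activation std: {xavier_std:.3e}")
```

With the tiny initialization the activations collapse toward zero after a few layers, which is the forward-pass counterpart of vanishing gradients.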

The Role of PyTorch in Weight Initialization

PyTorch simplifies weight initialization by offering built-in functions that help set the initial values of your network’s weights. These functions are located in the torch.nn.init module and can be applied to various layers within your DNN. Some of the commonly used weight initialization methods in PyTorch include:

Xavier/Glorot Initialization:

This method is often used with tanh and sigmoid activation functions. It sets the weights according to a normal distribution with mean 0 and variance calculated based on the number of input and output units.

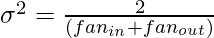

Mathematical Intuition: Xavier/Glorot normal initialization draws the weights from a normal distribution with mean 0. The variance (σ²) is calculated from the number of input and output units as:

$$\sigma^2 = \frac{2}{n_{in} + n_{out}}$$

Here, $n_{in}$ represents the number of input units, and $n_{out}$ represents the number of output units.

Syntax:

torch.nn.init.xavier_normal_(tensor) or torch.nn.init.xavier_uniform_(tensor)

Characteristics: Ideal for tanh and sigmoid activation functions.
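As a quick sanity check, the following sketch compares the standard deviation produced by `xavier_normal_` with the formula above, and checks the range used by `xavier_uniform_`. The layer sizes are arbitrary values chosen for illustration.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

fan_in, fan_out = 300, 200               # illustrative layer sizes (assumption)
weight = torch.empty(fan_out, fan_in)    # same layout as nn.Linear(fan_in, fan_out).weight

# Normal variant: std = sqrt(2 / (fan_in + fan_out))
nn.init.xavier_normal_(weight)
expected_std = math.sqrt(2.0 / (fan_in + fan_out))
empirical_std = weight.std().item()

# Uniform variant: values lie in [-bound, bound], bound = sqrt(6 / (fan_in + fan_out))
nn.init.xavier_uniform_(weight)
bound = math.sqrt(6.0 / (fan_in + fan_out))
max_abs = weight.abs().max().item()

print(f"expected std {expected_std:.4f}, empirical std {empirical_std:.4f}")
print(f"uniform bound {bound:.4f}, largest |w| {max_abs:.4f}")
```

Both variants target the same variance; they differ only in the shape of the distribution.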

He Initialization:

Designed for networks using ReLU (Rectified Linear Unit) activations, He initialization sets the weights with a normal distribution having mean 0 and a variance derived from the number of input units.

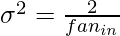

Mathematical Intuition: He normal initialization draws the weights from a normal distribution with mean 0. The variance (σ²) is calculated from the number of input units as:

$$\sigma^2 = \frac{2}{n_{in}}$$

Here, $n_{in}$ represents the number of input units.

Syntax:

torch.nn.init.kaiming_normal_(tensor) or torch.nn.init.kaiming_uniform_(tensor)

Characteristics: Designed for networks using ReLU (Rectified Linear Unit) activations.
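The sketch below checks the He formula against `kaiming_normal_` and illustrates why the factor of 2 suits ReLU: for unit-variance input, the mean squared activation after the ReLU stays close to 1. The layer sizes and the random input are assumptions made for the demonstration.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

fan_in, fan_out = 300, 200               # illustrative layer sizes (assumption)
weight = torch.empty(fan_out, fan_in)

# He/Kaiming normal: std = sqrt(2 / fan_in) for ReLU
nn.init.kaiming_normal_(weight, mode="fan_in", nonlinearity="relu")
expected_std = math.sqrt(2.0 / fan_in)
empirical_std = weight.std().item()

# ReLU zeroes roughly half of the pre-activations; the factor 2 compensates for that.
x = torch.randn(1000, fan_in)            # unit-variance input
h = torch.relu(x @ weight.t())
mean_square = h.pow(2).mean().item()

print(f"expected std {expected_std:.4f}, empirical std {empirical_std:.4f}")
print(f"mean squared activation after ReLU: {mean_square:.3f}")
```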

Uniform Initialization:

In this method, weights are initialized from a uniform distribution within a specified range, which can be useful when you want to control the spread of initial weights.

Mathematical Intuition: In Uniform Initialization, weights are initialized from a uniform distribution within a specified range. The range is defined by two parameters, a and b, where a is the minimum value, and b is the maximum value. This can be expressed as:

$$w_{i} \sim U\left[a, b\right]$$

Here, $w_{i}$ represents the weight for a neuron.

Syntax:

torch.nn.init.uniform_(tensor, a=0, b=1)

Characteristics: Useful when you want to control the spread of initial weights.
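For example, the following sketch fills a small linear layer with values drawn from U[-0.1, 0.1]. The layer shape and the range are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

demo_layer = nn.Linear(4, 3)                        # illustrative layer (assumption)
nn.init.uniform_(demo_layer.weight, a=-0.1, b=0.1)  # in-place: weights ~ U[-0.1, 0.1]

w_min = demo_layer.weight.min().item()
w_max = demo_layer.weight.max().item()
print(f"weights lie between {w_min:.4f} and {w_max:.4f}")
```

For a uniform distribution on [a, b] the variance is (b - a)² / 12, so narrowing the range directly reduces the spread of the initial weights.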

You can also define your own initialization schemes tailored to your specific problem and architecture.
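One common way to do this is to write a function that initializes a single module and pass it to `Module.apply`, which calls it on every submodule. The scheme below (normal weights with std 0.02, zero biases) and the name `init_weights` are hypothetical choices for illustration, not a recommendation.

```python
import torch
import torch.nn as nn

def init_weights(module):
    """Hypothetical custom scheme: small normal weights and zero biases for Linear layers."""
    if isinstance(module, nn.Linear):
        nn.init.normal_(module.weight, mean=0.0, std=0.02)  # 0.02 is an arbitrary value
        nn.init.zeros_(module.bias)

torch.manual_seed(0)
demo_model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 1))
demo_model.apply(init_weights)   # apply() visits every submodule recursively

print(demo_model[0].bias)        # all zeros after the custom initialization
```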

Now, let’s delve into a few guidelines on how to choose the right weight initialization method for your deep neural network in PyTorch.

Guidelines for Selecting the Right Weight Initialization

- Know Your Activation Functions: The weight initialization technique you choose should be compatible with the activation functions in your network. For example, use Xavier/Glorot initialization for tanh and sigmoid activations, and He initialization for ReLU activations. Matching the initialization approach to the activation function helps to avoid problems such as vanishing or exploding gradients.

- Network Depth Matters: The depth of your neural network affects how sensitive it is to weight initialization. Deeper networks are more vulnerable to vanishing or exploding gradients, so selecting a good initialization approach is critical. He initialization is frequently a reliable choice for deep networks with ReLU activations.

- Batch Normalization: If you use batch normalization layers in your network, a standard initialization like Xavier/Glorot is usually sufficient, as batch normalization reduces the network's sensitivity to weight initialization to some extent.

- Experiment and Fine-Tune: Weight initialization is not a one-size-fits-all procedure. Experiment with several methods and fine-tune them to get the best results for your task. Validation metrics can be used to identify which initialization works best for your model.

- Consider Transfer Learning: When you use a pre-trained model for transfer learning, the weights have already been learned on a large dataset. In such cases, you may not need to worry about weight initialization, because the pre-trained weights are usually a good starting point.
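The first guideline can be sketched as a small helper that picks an initializer from the activation that follows a layer. The function name `init_for_activation` and the set of supported activations are assumptions for illustration; `nn.init.calculate_gain` is PyTorch's built-in lookup for the recommended gain of a nonlinearity.

```python
import torch
import torch.nn as nn

def init_for_activation(layer, activation):
    """Hypothetical helper: choose an initializer based on the activation after `layer`."""
    if activation in ("relu", "leaky_relu"):
        # He initialization for ReLU-family activations
        nn.init.kaiming_normal_(layer.weight, nonlinearity=activation)
    elif activation in ("tanh", "sigmoid"):
        # Xavier initialization, scaled by the recommended gain for the activation
        gain = nn.init.calculate_gain(activation)
        nn.init.xavier_normal_(layer.weight, gain=gain)
    else:
        raise ValueError(f"no rule defined for activation {activation!r}")
    if layer.bias is not None:
        nn.init.zeros_(layer.bias)
    return layer

torch.manual_seed(0)
hidden = init_for_activation(nn.Linear(128, 64), "relu")
output_layer = init_for_activation(nn.Linear(64, 1), "sigmoid")
print(f"hidden weight std: {hidden.weight.std().item():.4f}")
print(f"output weight std: {output_layer.weight.std().item():.4f}")
```

Treat a helper like this as a starting point; as the later guidelines note, the best choice still has to be confirmed experimentally.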

Implementation in PyTorch

Let’s illustrate how to apply weight initialization methods in PyTorch. We’ll use a simple example with a fully connected neural network that has a single linear layer, fc1, and initialize its weights with Xavier/Glorot initialization.

Now, let’s explain each section of the code:

Importing Libraries: We start by importing the necessary libraries. These include torch for PyTorch itself, nn for neural network modules, init for weight initialization methods, optim for optimizers, and numpy (as np), which is used later to generate the dataset.

    import torch
    import torch.nn as nn
    import torch.nn.init as init
    import torch.optim as optim
    import numpy as np

Defining a Simple Neural Network Class:

- We define a simple neural network class called SimpleNN that inherits from nn.Module.

- In the constructor (__init__), we define a single fully connected (linear) layer (self.fc1) with 2 input units and 2 output units. This network takes input of size 2 and produces output of size 2.

Forward Method:

- In the forward method, we specify the forward pass of the network. In this case, we simply pass the input through the fc1 layer.

    class SimpleNN(nn.Module):
        def __init__(self):
            super().__init__()
            # Single fully connected layer: 2 inputs -> 2 outputs
            self.fc1 = nn.Linear(2, 2)

        def forward(self, x):
            # Forward pass: apply the linear layer
            x = self.fc1(x)
            return x

Creating an Instance of the Neural Network:

- We create an instance of the SimpleNN neural network called net.

Weight Initialization using Xavier/Glorot:

- We initialize the weights of the fc1 layer using Xavier/Glorot Initialization with the line init.xavier_normal_(net.fc1.weight). This sets the initial weights of the layer based on the specified initialization method.

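    net = SimpleNN()  # create the network instance

    # Re-initialize fc1's weights in place with Xavier/Glorot (normal) initialization
    init.xavier_normal_(net.fc1.weight)
    print(net.fc1.weight)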

Output:

    Parameter containing:
    tensor([[ 0.0779, 0.9274],
            [-0.4728, 0.1678]], requires_grad=True)

Defining a Dummy Input Tensor:

- We define a dummy input tensor input_data with shape (1, 2). This represents a single input sample with 2 features.

    input_data = torch.randn(1, 2)  # one sample with 2 features

Forward Pass:

We perform a forward pass of the input data through the neural network using output = net(input_data).

Printing Initialized Weights and Output:

- We print the initialized weights of the fc1 layer to see how they’ve been initialized using Xavier/Glorot Initialization.

- We also print the output produced by the network.

    output = net(input_data)  # forward pass through fc1

    print("Initialized Weights of fc1 Layer:")
    print(net.fc1.weight)
    print("Output:")
    print(output)

Output:

    Initialized Weights of fc1 Layer:
    Parameter containing:
    tensor([[ 0.0779, 0.9274],
            [-0.4728, 0.1678]], requires_grad=True)
    Output:
    tensor([[0.8556, 0.8997]], grad_fn=<AddmmBackward0>)

This code shows how to build a basic neural network, use Xavier/Glorot Initialization to establish its weights, and run a forward pass using dummy input data. The essential takeaway is that the weights are initialized using the init.xavier_normal_ function.
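A detail worth checking: functions in torch.nn.init whose names end in an underscore modify the tensor in place, replacing the default values that nn.Linear assigns at construction. The sketch below verifies this on a stand-alone layer; the variable names are illustrative.

```python
import torch
import torch.nn as nn
import torch.nn.init as init

torch.manual_seed(0)

demo_layer = nn.Linear(2, 2)
default_weight = demo_layer.weight.detach().clone()  # snapshot of the default initialization
default_bias = demo_layer.bias.detach().clone()

init.xavier_normal_(demo_layer.weight)               # overwrites the weight values in place

weights_changed = not torch.equal(default_weight, demo_layer.weight.detach())
bias_unchanged = torch.equal(default_bias, demo_layer.bias.detach())

print("weights changed:", weights_changed)
print("bias unchanged:", bias_unchanged)
print("still trainable:", demo_layer.weight.requires_grad)
```

Only the tensor passed to the function is re-initialized; the bias keeps its default values unless you initialize it separately.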

Train the model

In this section, we train a small neural network on a custom dataset, using He initialization for its weights. We generate a synthetic dataset for binary classification, define a simple neural network, and train it with stochastic gradient descent (SGD) as the optimizer. We use the same imports as before.

Here’s a step-by-step implementation using PyTorch:

Generating Dataset

We define a generate_dataset function that generates random 2D features and assigns binary labels to them: a sample is labeled 1 if the sum of its two features exceeds 1, and 0 otherwise. The function returns the features and labels as PyTorch tensors.

    def generate_dataset(num_samples=100):
        np.random.seed(0)  # fixed seed: every call produces the same random sequence
        features = np.random.rand(num_samples, 2)
        # Label is 1 when the two features sum to more than 1, else 0
        labels = (features[:, 0] + features[:, 1] > 1).astype(int)
        return torch.tensor(features, dtype=torch.float32), torch.tensor(labels, dtype=torch.float32)

Defining the Neural network with He Initialization

Here, we define a class SimpleClassifier with two fully connected layers and apply He initialization to the weights of both layers.

    class SimpleClassifier(nn.Module):
        def __init__(self):
            super().__init__()
            self.fc1 = nn.Linear(2, 64)
            self.fc2 = nn.Linear(64, 1)
            # He (Kaiming) initialization for both weight matrices
            nn.init.kaiming_normal_(self.fc1.weight)
            nn.init.kaiming_normal_(self.fc2.weight)

        def forward(self, x):
            x = torch.relu(self.fc1(x))
            x = torch.sigmoid(self.fc2(x))  # probability of the positive class
            return x
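Following the earlier guideline of matching the initializer to the activation, one possible variation is to keep He initialization for the layer feeding the ReLU and use Xavier initialization for the layer feeding the sigmoid. This is a sketch of an alternative, not the model used in the rest of this walkthrough; the class name `SimpleClassifierAlt` and the zero-initialized biases are assumptions.

```python
import torch
import torch.nn as nn

class SimpleClassifierAlt(nn.Module):
    """Hypothetical variant: He init before ReLU, Xavier init before sigmoid."""

    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(2, 64)
        self.fc2 = nn.Linear(64, 1)
        nn.init.kaiming_normal_(self.fc1.weight, nonlinearity="relu")  # feeds a ReLU
        nn.init.xavier_normal_(self.fc2.weight)                        # feeds a sigmoid
        nn.init.zeros_(self.fc1.bias)
        nn.init.zeros_(self.fc2.bias)

    def forward(self, x):
        x = torch.relu(self.fc1(x))
        return torch.sigmoid(self.fc2(x))

torch.manual_seed(0)
model_alt = SimpleClassifierAlt()
probs = model_alt(torch.rand(5, 2))   # five random samples with 2 features each
print(probs.shape)                    # torch.Size([5, 1])
```

Whether this variant trains better than the original is an empirical question for your own data.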

Setting Hyperparameters

We set the hyperparameters for training: the learning rate, the number of epochs, and the batch size. Choosing these values is an important step in the ML workflow, and adjusting them lets you fine-tune the model.

    learning_rate = 0.01
    epochs = 1000
    batch_size = 16

To explore deeper and tailor it to your individual needs, feel free to change the hyperparameters, dataset size, or model architecture.

Creating Dataset and Dataloader

Here, batch_size determines the number of samples processed in each iteration. We wrap the features and labels in a TensorDataset and create a DataLoader, which lets us iterate through the dataset in shuffled batches.

    features, labels = generate_dataset()
    dataset = torch.utils.data.TensorDataset(features, labels)
    dataloader = torch.utils.data.DataLoader(
        dataset, batch_size=batch_size, shuffle=True)
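To see how the batching works out, the sketch below builds a stand-in dataset of the same size (100 samples, batch size 16) and lists the size of each batch. The variable names are illustrative and kept separate from the ones used in the walkthrough.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

demo_features = torch.rand(100, 2)
demo_labels = (demo_features.sum(dim=1) > 1).float()
demo_loader = DataLoader(TensorDataset(demo_features, demo_labels),
                         batch_size=16, shuffle=True)

batch_sizes = [inputs.shape[0] for inputs, _ in demo_loader]
print(len(demo_loader), batch_sizes)
# 7 batches per epoch: six batches of 16 samples and a final batch of 4
```

Because 100 is not a multiple of 16, the last batch is smaller; DataLoader keeps it unless drop_last=True is passed.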

Initializing model and optimizer

Here, we initialize the classifier model, an optimizer, and the binary cross-entropy loss function. The optimizer is stochastic gradient descent (SGD); the learning rate determines the size of each weight update.

Because this is a binary classification model, the loss function is binary cross-entropy, which measures the dissimilarity between the predicted probabilities and the actual binary labels.

    model = SimpleClassifier()
    optimizer = optim.SGD(model.parameters(), lr=learning_rate)
    criterion = nn.BCELoss()
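As a small worked example of what nn.BCELoss computes, the sketch below evaluates it on two hand-picked predictions and compares the result with the formula -[y·log(p) + (1 - y)·log(1 - p)], averaged over the samples. The numbers are arbitrary illustrations.

```python
import math
import torch
import torch.nn as nn

bce = nn.BCELoss()                        # averages over all elements by default

predicted = torch.tensor([[0.9], [0.2]])  # predicted probabilities
target = torch.tensor([[1.0], [0.0]])     # true labels

loss_value = bce(predicted, target).item()

# Same quantity by hand: sample 1 contributes -log(0.9), sample 2 contributes -log(1 - 0.2)
manual_value = -(math.log(0.9) + math.log(1 - 0.2)) / 2

print(f"nn.BCELoss: {loss_value:.4f}, manual: {manual_value:.4f}")
```

Confident, correct predictions give a loss near 0, while confident, wrong predictions are penalized heavily.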

After setting up these components, let’s proceed to train your model.

Training Loop

In this code snippet, we train the model for the specified number of epochs, using the DataLoader to iterate through the dataset in batches.

- optimizer.zero_grad() : resets the gradients of the model’s parameters at the start of each batch

- model(inputs) : generates predictions for the input batch

- criterion(outputs, targets.view(-1, 1)) : calculates the binary cross-entropy loss; view(-1, 1) reshapes the targets to match the shape of the outputs

- loss.backward() : computes the gradients of the loss with respect to the parameters using backpropagation

- optimizer.step() : updates the weights

- total_loss += loss.item() : keeps track of the cumulative loss for the entire epoch

    for epoch in range(epochs):
        total_loss = 0
        for inputs, targets in dataloader:
            optimizer.zero_grad()
            outputs = model(inputs)
            loss = criterion(outputs, targets.view(-1, 1))
            loss.backward()
            optimizer.step()
            total_loss += loss.item()
        # Report the average batch loss every 100 epochs
        if (epoch + 1) % 100 == 0:
            average_loss = total_loss / len(dataloader)
            print(f"Epoch [{epoch + 1}/{epochs}] - Loss: {average_loss:.4f}")

Output:

    Epoch [100/1000] - Loss: 0.4184
    Epoch [200/1000] - Loss: 0.2807
    Epoch [300/1000] - Loss: 0.2209
    Epoch [400/1000] - Loss: 0.1875
    Epoch [500/1000] - Loss: 0.1531
    Epoch [600/1000] - Loss: 0.1704
    Epoch [700/1000] - Loss: 0.1382
    Epoch [800/1000] - Loss: 0.1160
    Epoch [900/1000] - Loss: 0.1246
    Epoch [1000/1000] - Loss: 0.1028
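To see what each call in the training loop does, here is a single optimization step on one batch, using a tiny stand-alone logistic-regression model. The names prefixed with `demo_` are illustrative and separate from the walkthrough's variables.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)

demo_model = nn.Linear(2, 1)
demo_optimizer = optim.SGD(demo_model.parameters(), lr=0.1)
demo_criterion = nn.BCELoss()

demo_inputs = torch.rand(16, 2)
demo_targets = (demo_inputs.sum(dim=1) > 1).float()

weight_before = demo_model.weight.detach().clone()

demo_optimizer.zero_grad()                          # clear any stale gradients
demo_outputs = torch.sigmoid(demo_model(demo_inputs))
demo_loss = demo_criterion(demo_outputs, demo_targets.view(-1, 1))
grad_before_backward = demo_model.weight.grad       # None: nothing has been computed yet
demo_loss.backward()                                # fills .grad for every parameter
grad_after_backward = demo_model.weight.grad.clone()
demo_optimizer.step()                               # plain SGD: w <- w - lr * grad
weight_after = demo_model.weight.detach().clone()

print("gradient before backward():", grad_before_backward)
print("gradient after backward(): ", grad_after_backward)
print("weight change:", weight_after - weight_before)
```

The weight change equals the negative learning rate times the gradient, which is exactly the plain SGD update rule.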

Evaluation:

Last, we evaluate the model on a test set of 20 samples and print the accuracy. Note that generate_dataset reseeds NumPy with 0 on every call, so these 20 samples are identical to the first 20 training samples; the reported accuracy is therefore optimistic rather than a measure of performance on unseen data.

- model.eval() : sets the model to evaluation mode

- with torch.no_grad() : temporarily disables gradient computation

- predictions = model(test_samples).round().squeeze().numpy() : passes the test samples through the trained model to get predictions

  - model(test_samples) : runs a forward pass on the test samples

  - .round() : rounds the predicted probabilities to 0 or 1

  - .squeeze() : removes the unnecessary singleton dimension

  - .numpy() : converts the predictions from a PyTorch tensor to a NumPy array

We then compare the predictions with the labels and print the accuracy.

    model.eval()
    with torch.no_grad():
        test_samples, test_labels = generate_dataset(num_samples=20)
        predictions = model(test_samples).round().squeeze().numpy()
        accuracy = (predictions == test_labels.numpy()).mean()
        print(f"Test Accuracy: {accuracy * 100:.2f}%")

Output:

Test Accuracy: 100.00%
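The sketch below checks the overlap between the evaluation samples and the training set directly, and shows one way to draw a separate evaluation set. The `seed` parameter and the name `generate_dataset_seeded` are assumptions introduced for illustration; they are not part of the original code.

```python
import numpy as np
import torch

def generate_dataset_seeded(num_samples=100, seed=0):
    """Variant of generate_dataset with a hypothetical `seed` argument."""
    rng = np.random.RandomState(seed)   # seed=0 reproduces np.random.seed(0)
    features = rng.rand(num_samples, 2)
    labels = (features[:, 0] + features[:, 1] > 1).astype(int)
    return (torch.tensor(features, dtype=torch.float32),
            torch.tensor(labels, dtype=torch.float32))

train_x, _ = generate_dataset_seeded(100, seed=0)
same_seed_x, _ = generate_dataset_seeded(20, seed=0)
held_out_x, held_out_y = generate_dataset_seeded(20, seed=1)

# With the same seed, the 20 samples are the first 20 training samples.
overlap_same_seed = torch.equal(train_x[:20], same_seed_x)
# With a different seed, the samples are new to the model.
overlap_new_seed = torch.equal(train_x[:20], held_out_x)

print("same seed overlaps training data:", overlap_same_seed)
print("new seed overlaps training data: ", overlap_new_seed)
```

Evaluating on a set like `held_out_x` and `held_out_y` would give a more realistic picture of how the model generalizes.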

Selecting weight initialization depends on the activation function, network architecture and nature of the problem. A recommended approach is to try out various weight initialization techniques and closely observe the training process, including metrics such as training loss and convergence speed. This way, you can identify the most suitable initialization method for your particular problem.
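A comparison of this kind can be sketched as follows: train the same small network with several initializers under identical settings and record the final training loss. The helper names `make_data` and `train_with_init`, the full-batch training, and the hyperparameters are all assumptions chosen to keep the example short.

```python
import math
import torch
import torch.nn as nn

def make_data(n=200, seed=0):
    """Synthetic binary task: label is 1 when the two features sum to more than 1."""
    g = torch.Generator().manual_seed(seed)
    x = torch.rand(n, 2, generator=g)
    y = (x.sum(dim=1) > 1).float().unsqueeze(1)
    return x, y

def train_with_init(init_fn, epochs=200, lr=0.1, seed=0):
    """Train a small classifier whose Linear weights are set by `init_fn`; return the final loss."""
    torch.manual_seed(seed)
    model = nn.Sequential(nn.Linear(2, 64), nn.ReLU(), nn.Linear(64, 1), nn.Sigmoid())
    for module in model:
        if isinstance(module, nn.Linear):
            init_fn(module.weight)
            nn.init.zeros_(module.bias)
    x, y = make_data()
    optimizer = torch.optim.SGD(model.parameters(), lr=lr)
    criterion = nn.BCELoss()
    for _ in range(epochs):
        optimizer.zero_grad()
        loss = criterion(model(x), y)   # full-batch gradient descent for simplicity
        loss.backward()
        optimizer.step()
    return loss.item()

initializers = {
    "he_normal": nn.init.kaiming_normal_,
    "xavier_normal": nn.init.xavier_normal_,
    "tiny_normal": lambda w: nn.init.normal_(w, mean=0.0, std=1e-4),
}
results = {name: train_with_init(fn) for name, fn in initializers.items()}
for name, final_loss in results.items():
    print(f"{name:>14}: final training loss {final_loss:.4f}")
```

For a real project you would also track validation metrics and repeat each run with several seeds before drawing conclusions.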

Choosing an appropriate weight initialization technique is an important step in building successful deep neural networks with PyTorch. It can have a considerable influence on your model’s convergence speed and overall performance. You can make an informed choice by taking into account factors such as the activation functions, the network depth, and the presence of batch normalization. Remember that experimentation and fine-tuning are essential for finding the best weight initialization for your particular scenario. With these tools, you can build DNNs in PyTorch that learn effectively.
