AWS Lambda – Copy Object Among S3 Based on Events

Last Updated: 24 Jan, 2021

In this article, we will create an AWS Lambda function that copies files from one S3 bucket to another. The function is triggered whenever a file arrives in the source bucket. To do this, we use Amazon S3 Events: each upload to the source bucket generates an event, and that event invokes a Lambda function that copies the file to the destination bucket.

The steps to configure the Lambda function are given below:

- Select the Author from scratch template. With this option, we write the code ourselves.

- Provide the function name.

- Select the Runtime. AWS provides several runtimes, such as Java, Python, Node.js, Ruby, etc.

- Select the execution role. The execution role defines the permissions granted to the Lambda function.

Note: Lambda must have access to the S3 source and destination buckets. Therefore, create an IAM role that has the AmazonS3FullAccess policy attached. In this case, the role s3tos3 has full access to S3 buckets.
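AmazonS3FullAccess is the simplest choice for a demo, but the role could also be scoped to just the two buckets. The following is a minimal sketch of such a policy document, built as a Python dictionary. The helper name `build_copy_policy` is hypothetical, and the action list is an assumption: depending on object tags, encryption, or versioning, additional actions may be required.

```python
import json


def build_copy_policy(source_bucket, destination_bucket):
    """Return an IAM policy document (as a dict) limited to the two buckets.

    Assumption: reading from the source needs s3:GetObject and writing to
    the destination needs s3:PutObject. Other setups may need more actions.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{source_bucket}/*",
            },
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{destination_bucket}/*",
            },
        ],
    }


policy = build_copy_policy("gfg-source-bucket", "gfg-destination-bucket")
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM policy editor as a starting point and attached to the execution role in place of the managed policy.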

Once the function is created, we need to add a trigger that will invoke the Lambda function. The steps to add the trigger are given below.

- In Select Trigger, select S3. Numerous AWS services can act as a trigger. Since this article is focused on copying objects from one bucket to another, we choose S3.

- In Bucket, select the source bucket. Events on this bucket will act as the trigger. We will specify the event type associated with this bucket, which in turn invokes our Lambda function.

- Select Event type as All object create events. This includes PUT, COPY, POST, and multipart upload, and any one of these actions will invoke our Lambda function. In our case, when we upload a file into the source bucket, the event type is PUT.

- Prefix and Suffix are optional. They restrict the trigger to objects whose key names begin with a given prefix or end with a given suffix.
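The same trigger settings can also be expressed as a notification configuration, which is the structure boto3 accepts in `put_bucket_notification_configuration`. The sketch below only builds that dictionary and does not call AWS. The helper name `build_s3_trigger_config` is hypothetical, and the function ARN (including its account ID) is a placeholder.

```python
def build_s3_trigger_config(lambda_arn, prefix="", suffix=""):
    """Build an S3 notification configuration for all object-create events."""
    rules = []
    if prefix:
        rules.append({"Name": "prefix", "Value": prefix})
    if suffix:
        rules.append({"Name": "suffix", "Value": suffix})

    config = {
        "LambdaFunctionArn": lambda_arn,
        # Equivalent of "All object create events" in the console
        "Events": ["s3:ObjectCreated:*"],
    }
    # The filter is optional, just like Prefix and Suffix in the console
    if rules:
        config["Filter"] = {"Key": {"FilterRules": rules}}

    return {"LambdaFunctionConfigurations": [config]}


# Placeholder ARN: the account ID below is made up for illustration
example_arn = "arn:aws:lambda:ap-south-1:123456789012:function:s3Tos3-demo"
trigger_config = build_s3_trigger_config(example_arn, suffix=".txt")
print(trigger_config)

# To apply it (requires AWS credentials and an existing invoke permission):
# boto3.client("s3").put_bucket_notification_configuration(
#     Bucket="gfg-source-bucket",
#     NotificationConfiguration=trigger_config,
# )
```

When the trigger is added through the console, the permission that lets S3 invoke the function is set up for us. When applying the configuration through the API, that permission has to be granted separately.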

Once the S3 trigger is set, every file uploaded to the source bucket invokes our function. The function receives an event object that carries all the information it needs.

The sample event object is shown below. This object is passed to our Lambda function.

    {
      "Records": [
        {
          "eventVersion": "2.0",
          "eventSource": "aws:s3",
          "awsRegion": "ap-south-1",
          "eventTime": "1970-01-01T00:00:00.000Z",
          "eventName": "ObjectCreated:Put",
          "userIdentity": {
            "principalId": "GeeksforGeeks"
          },
          "requestParameters": {
            "sourceIPAddress": "XXX.X.X.X"
          },
          "responseElements": {
            "x-amz-request-id": "EXAMPLE123456789",
            "x-amz-id-2": "EXAMPLE123/5678abcdefghijklambdaisawesome/mnopqrstuvwxyzABCDEFGH"
          },
          "s3": {
            "s3SchemaVersion": "1.0",
            "configurationId": "testConfigRule",
            "bucket": {
              "name": "gfg-source-bucket",
              "ownerIdentity": {
                "principalId": "GeeksforGeeks"
              },
              "arn": "arn:aws:s3:::gfg-source-bucket"
            },
            "object": {
              "key": "geeksforgeeks.txt",
              "size": 1024,
              "eTag": "0123456789abcdef0123456789abcdef",
              "sequencer": "0A1B2C3D4E5F678901"
            }
          }
        }
      ]
    }
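To make the structure of the event concrete, here is a small sketch that reads the fields relevant to copying out of a single record. The helper name `summarize_record` is hypothetical. Object keys in S3 events are URL-encoded (a space arrives as `+`), so the key is decoded with `unquote_plus` before use.

```python
from urllib.parse import unquote_plus


def summarize_record(record):
    """Pick the fields needed for a copy out of one S3 event record."""
    s3_info = record["s3"]
    return {
        "event_name": record["eventName"],
        "bucket": s3_info["bucket"]["name"],
        # Keys are URL-encoded in the event, so decode them first
        "key": unquote_plus(s3_info["object"]["key"]),
        "size": s3_info["object"].get("size"),
    }


# Trimmed-down version of the sample event record
sample_record = {
    "eventName": "ObjectCreated:Put",
    "s3": {
        "bucket": {"name": "gfg-source-bucket"},
        "object": {"key": "geeksforgeeks.txt", "size": 1024},
    },
}

print(summarize_record(sample_record))
```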

Our Lambda function uses this event dictionary to identify where the file was uploaded. The Lambda code is given below:

    import json
    import urllib.parse

    import boto3

    s3_client = boto3.client('s3')


    # Lambda function to copy a file from one S3 bucket to another
    def lambda_handler(event, context):
        # Source bucket, taken from the event
        source_bucket_name = event['Records'][0]['s3']['bucket']['name']

        # Key of the uploaded object (keys in S3 events are URL-encoded)
        file_name = urllib.parse.unquote_plus(event['Records'][0]['s3']['object']['key'])

        # Destination bucket
        destination_bucket_name = 'gfg-destination-bucket'

        # Specify where the file is copied from
        copy_object = {'Bucket': source_bucket_name, 'Key': file_name}

        # Copy the object into the destination bucket under the same key
        s3_client.copy_object(CopySource=copy_object, Bucket=destination_bucket_name, Key=file_name)

        return {
            'statusCode': 200,
            'body': json.dumps('File has been Successfully Copied')
        }
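The handler above reads only the first entry in `Records`. As a possible variation, the sketch below loops over every record and takes the S3 client as a parameter, so the logic can be tried locally without AWS credentials. `copy_all_records` and `FakeS3Client` are hypothetical names introduced for illustration. Inside Lambda, the real `boto3.client('s3')` would be passed instead of the fake.

```python
from urllib.parse import unquote_plus

DESTINATION_BUCKET = "gfg-destination-bucket"


def copy_all_records(event, s3_client, destination_bucket=DESTINATION_BUCKET):
    """Copy every object named in the event and return the copied keys."""
    copied = []
    for record in event["Records"]:
        source_bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])
        s3_client.copy_object(
            CopySource={"Bucket": source_bucket, "Key": key},
            Bucket=destination_bucket,
            Key=key,
        )
        copied.append(key)
    return copied


class FakeS3Client:
    """Stand-in for the boto3 client that only records copy_object calls."""

    def __init__(self):
        self.calls = []

    def copy_object(self, **kwargs):
        self.calls.append(kwargs)


def make_record(bucket, key):
    """Build the minimal part of an S3 event record used by the copy logic."""
    return {"s3": {"bucket": {"name": bucket}, "object": {"key": key}}}


fake_client = FakeS3Client()
test_event = {
    "Records": [
        make_record("gfg-source-bucket", "geeksforgeeks.txt"),
        make_record("gfg-source-bucket", "notes+2021.txt"),
    ]
}
copied_keys = copy_all_records(test_event, fake_client)
print(copied_keys)
```

Injecting the client keeps the copy logic separate from the AWS connection, which makes it easy to exercise with a hand-built event before deploying.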

Note: After writing the code, don’t forget to click Deploy.

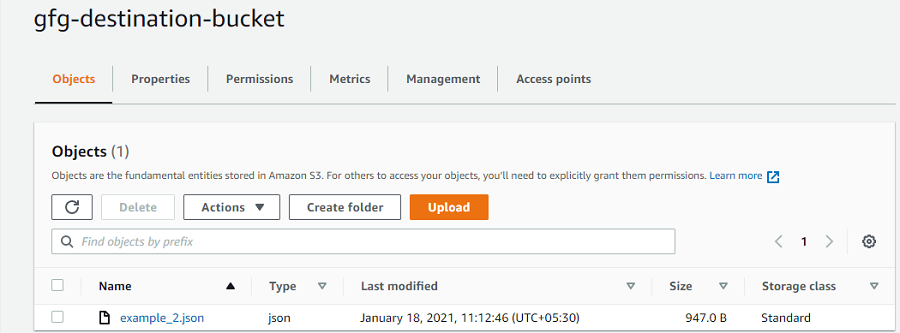

Now, when we upload a file to the source bucket ‘gfg-source-bucket’, it triggers the ‘s3Tos3-demo’ Lambda function, which copies the uploaded file into the destination bucket ‘gfg-destination-bucket’. The results are shown below:

File uploaded in Source Bucket

File Copied to Destination Bucket

Destination Bucket

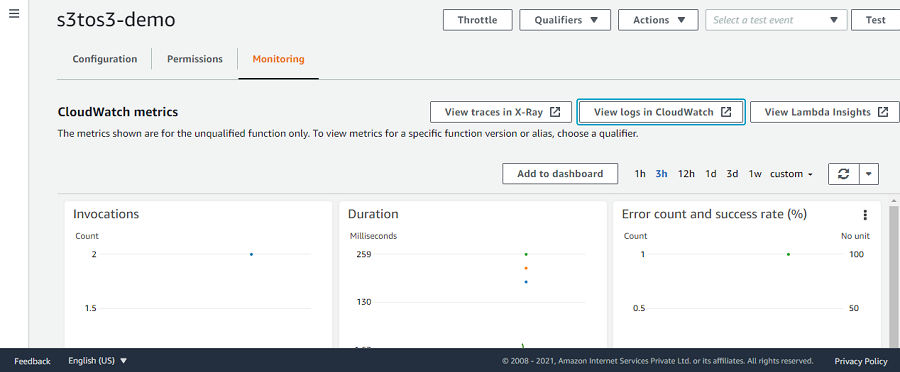

The result can also be verified by clicking on the Monitoring tab of the Lambda function and then clicking View logs in CloudWatch.

CloudWatch Logs
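Anything the function logs or prints ends up in these CloudWatch logs, so a short log line per copy makes the result easier to verify. The following is a minimal sketch using the standard `logging` module. The logger name `s3-copy` and the helper `log_copy` are hypothetical and not part of the original function.

```python
import logging

# In Lambda the root logger already has a handler, so basicConfig has no
# effect there. It is included so the message also shows up when run locally.
logging.basicConfig(level=logging.INFO)

logger = logging.getLogger("s3-copy")
logger.setLevel(logging.INFO)


def log_copy(source_bucket, key, destination_bucket):
    """Log one line describing a completed copy and return the message."""
    message = (
        f"Copied s3://{source_bucket}/{key} "
        f"to s3://{destination_bucket}/{key}"
    )
    logger.info(message)
    return message


log_copy("gfg-source-bucket", "geeksforgeeks.txt", "gfg-destination-bucket")
```

Calling `log_copy` right after `copy_object` in the handler would leave one entry per copied file in the function's log stream.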
