Using the SavedModel format in TensorFlow

Last Updated: 21 Mar, 2023

TensorFlow is a popular deep-learning framework that provides a variety of tools to help users build, train, and deploy machine-learning models. One of the most important aspects of deploying a machine learning model is saving and exporting it to a format that can be easily used by other programs and systems.

The SavedModel format is TensorFlow's standard format for storing and sharing trained machine learning models. In this article, we discuss how to use it: how to save and export a model, and how to load and use a saved model in a new program.

To save a TensorFlow model in the SavedModel format, use the tf.saved_model.save() function. It takes two required arguments: the object to be saved, and the path to the directory where the SavedModel should be written. The directory is created if it does not already exist.

To load a SavedModel in a new program, use the tf.saved_model.load() function. Its only required argument is the path to the directory containing the SavedModel.

Syntax of tf.saved_model.save():

tf.saved_model.save( obj, export_dir, signatures=None, options=None)

Parameters:

obj : A trackable object (e.g. tf.Module or tf.train.Checkpoint) to export.

export_dir : A directory in which to write the SavedModel.

signatures : Optional. One of the following three types:
* a tf.function instance with an input signature specified, which will use the default serving signature key;
* the result of calling f.get_concrete_function on a @tf.function-decorated function f, in which case f is used to generate a signature for the SavedModel under the default serving signature key; or
* a dictionary that maps signature keys to either tf.function instances with input signatures or concrete functions. The keys of such a dictionary may be arbitrary strings, but they are typically taken from the tf.saved_model.signature_constants module.

options : tf.saved_model.SaveOptions object for configuring save options.

Syntax of tf.saved_model.load():

tf.saved_model.load( export_dir, tags=None, options=None)

Parameters:

export_dir : The SavedModel directory to load from.

tags: A tag or sequence of tags identifying the MetaGraph to load. Optional if the SavedModel contains a single MetaGraph, as for those exported from tf.saved_model.save.

options: tf.saved_model.LoadOptions object that specifies options for loading.
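To make the two call signatures above more concrete, here is a minimal round-trip sketch. The Scaler module, its scale method, and the temporary export directory are hypothetical names chosen for illustration and are not part of this article's example. The sketch passes a dictionary as the signatures argument and returns a dictionary from the function so that the output key is explicit.

```python
import os
import tempfile

import tensorflow as tf


class Scaler(tf.Module):
    """Hypothetical module that multiplies its input by a stored variable."""

    def __init__(self):
        super().__init__()
        self.factor = tf.Variable(3.0)

    # An input signature is needed so the function can be exported as a signature.
    @tf.function(input_signature=[tf.TensorSpec(shape=[None], dtype=tf.float32, name="x")])
    def scale(self, x):
        # Returning a dict lets us choose the output key ourselves.
        return {"scaled": x * self.factor}


module = Scaler()
export_dir = os.path.join(tempfile.mkdtemp(), "scaler")

# obj, export_dir, and an explicit signatures dictionary
tf.saved_model.save(module, export_dir, signatures={"serving_default": module.scale})

# export_dir is the only required argument when loading
reloaded = tf.saved_model.load(export_dir)
serving_fn = reloaded.signatures["serving_default"]

# Signature functions are called with keyword arguments
result = serving_fn(x=tf.constant([1.0, 2.0]))
scaled = result["scaled"].numpy().tolist()
print(scaled)  # [3.0, 6.0]
```

The tags and options arguments are left at their defaults here, which is usually enough for a SavedModel written by tf.saved_model.save().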

Let’s see an example to understand how it works.

Example:

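First, we define a simple Keras model with two dense layers: a hidden layer with 4 ReLU units and a single-unit output layer. The model takes an input tensor of shape (2,) and produces an output tensor of shape (1,). The code in this example does not train the model, so it is saved with its randomly initialized weights; in practice you would call compile() and fit() before saving.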

Python3

import tensorflow as tf


def build_model():
    # Two dense layers: 2 input features -> 4 hidden units -> 1 output
    inputs = tf.keras.Input(shape=(2,), name='inputs')
    x = tf.keras.layers.Dense(4, activation='relu', name='dense1')(inputs)
    outputs = tf.keras.layers.Dense(1, name='outputs')(x)
    model = tf.keras.Model(inputs=inputs, outputs=outputs)
    return model

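Once the model is built (and, in a real project, trained), we save it in the SavedModel format. The code below calls the Keras method model.save() with a directory path; in the TensorFlow 2.x releases current when this article was written, that writes a SavedModel into the given folder. Calling tf.saved_model.save(model, 'saved_model') also produces a SavedModel. Newer releases that bundle Keras 3 may reject a path without a .keras or .h5 extension, in which case tf.saved_model.save() or model.export() can be used instead.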

Python3

model = build_model()
model.save('saved_model')

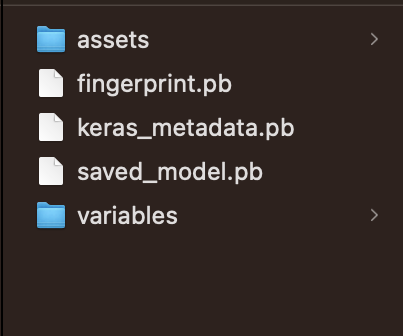

A directory named saved_model gets created and the contents are as below:

saved_model directory
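The layout of a SavedModel directory can also be inspected from code. The sketch below is an illustration under assumptions: it saves a hypothetical Counter module (not the Keras model above) to a temporary directory and lists what was written. A SavedModel typically contains a saved_model.pb file with the serialized graph and signatures and a variables/ folder with the checkpointed weights; other entries, such as assets/, fingerprint.pb, or the keras_metadata.pb file that Keras may add, depend on the TensorFlow version and on how the model was saved.

```python
import os
import tempfile

import tensorflow as tf


class Counter(tf.Module):
    """Hypothetical module with one variable, used only to produce a SavedModel."""

    def __init__(self):
        super().__init__()
        self.count = tf.Variable(0.0)


export_dir = os.path.join(tempfile.mkdtemp(), "saved_model")
tf.saved_model.save(Counter(), export_dir)

# Top-level entries of the SavedModel directory
entries = sorted(os.listdir(export_dir))
print(entries)

# The variables/ folder holds the checkpoint index and data shards
variable_files = sorted(os.listdir(os.path.join(export_dir, "variables")))
print(variable_files)
```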

Next, we load the SavedModel using the tf.saved_model.load() function. It takes the path to the folder containing the SavedModel and returns a trackable object that holds the restored variables and functions, including a signatures attribute.

Python3

loaded_model = tf.saved_model.load('saved_model')


To perform inference with the loaded model, we use its signatures attribute, a dictionary-like mapping from signature keys to callable functions. The 'serving_default' key retrieves the default prediction function. We create a test input tensor and pass it to that function as the keyword argument inputs, which matches the name of the model's input layer. The function returns a dictionary; here the output tensor is stored under the key 'outputs', the name of the model's output layer.

Python3

test_input = tf.constant([[0.5, 0.5], [0.2, 0.2]])
result = loaded_model.signatures['serving_default'](inputs=test_input)
print(result)

Output:

{'outputs': <tf.Tensor: shape=(2, 1), dtype=float32, numpy=
array([[-0.41576898],
       [-0.1663076 ]], dtype=float32)>}
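If you are unsure which keyword argument a signature expects or which keys it returns, the loaded signature can be asked directly. The following is a minimal sketch that uses a hypothetical Doubler module as a stand-in for the Keras model; its predict method, argument name, and output key are assumptions chosen to mirror the names used in this article.

```python
import os
import tempfile

import tensorflow as tf


class Doubler(tf.Module):
    """Hypothetical stand-in model: doubles every input value."""

    @tf.function(input_signature=[tf.TensorSpec([None, 2], tf.float32)])
    def predict(self, inputs):
        return {"outputs": inputs * 2.0}


module = Doubler()
export_dir = os.path.join(tempfile.mkdtemp(), "doubler")
tf.saved_model.save(module, export_dir, signatures={"serving_default": module.predict})

loaded = tf.saved_model.load(export_dir)
signature_keys = list(loaded.signatures.keys())
fn = loaded.signatures["serving_default"]

# structured_input_signature is a (positional args, keyword args) pair
_, input_specs = fn.structured_input_signature
input_names = sorted(input_specs.keys())

# structured_outputs maps each output key to a TensorSpec
output_names = sorted(fn.structured_outputs.keys())

print(signature_keys, input_names, output_names)
```

The input names are the keyword arguments to use when calling the signature, and the output names are the keys of the dictionary it returns.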

Full code for SavedModel format in TensorFlow

Python3

import tensorflow as tf


def build_model():
    # Two dense layers: 2 input features -> 4 hidden units -> 1 output
    inputs = tf.keras.Input(shape=(2,), name='inputs')
    x = tf.keras.layers.Dense(4, activation='relu', name='dense1')(inputs)
    outputs = tf.keras.layers.Dense(1, name='outputs')(x)
    model = tf.keras.Model(inputs=inputs, outputs=outputs)
    return model


# Build the (untrained) model and save it as a SavedModel directory
model = build_model()
model.save('saved_model')

# Load the SavedModel back as a trackable object
loaded_model = tf.saved_model.load('saved_model')

# Run inference through the default serving signature
test_input = tf.constant([[0.5, 0.5], [0.2, 0.2]])
result = loaded_model.signatures['serving_default'](inputs=test_input)
print(result)

Output:

{'outputs': <tf.Tensor: shape=(2, 1), dtype=float32, numpy=
array([[-0.3197009 ],
       [-0.12788038]], dtype=float32)>}
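The numbers in the two outputs shown in this article differ because the example model is not trained: its weights are initialized randomly on every run, so each run saves a different model. Saving and loading should not change the weights themselves. The sketch below illustrates that check with a hypothetical RandomLinear module, an assumption used in place of the Keras model, by comparing the prediction made before saving with the one made after loading.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


class RandomLinear(tf.Module):
    """Hypothetical linear layer with randomly initialized weights."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.random.normal([2, 1]))
        self.b = tf.Variable(tf.zeros([1]))

    @tf.function(input_signature=[tf.TensorSpec([None, 2], tf.float32)])
    def predict(self, inputs):
        return {"outputs": tf.matmul(inputs, self.w) + self.b}


module = RandomLinear()
test_input = tf.constant([[0.5, 0.5], [0.2, 0.2]])

# Prediction from the in-memory module, before saving
before = module.predict(test_input)["outputs"].numpy()

export_dir = os.path.join(tempfile.mkdtemp(), "random_linear")
tf.saved_model.save(module, export_dir, signatures={"serving_default": module.predict})

# Prediction from the reloaded SavedModel
loaded = tf.saved_model.load(export_dir)
after = loaded.signatures["serving_default"](inputs=test_input)["outputs"].numpy()

# The values vary from run to run, but the two predictions should agree
outputs_match = np.allclose(before, after)
print(outputs_match)
```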
