Understanding Typecasting as a compiler

Last Updated: 18 Apr, 2023

Let's start our discussion with a few lines of C:

```c
#include <stdio.h>

int main(void) {
    int x = 97;
    char ch = x;   /* implicit conversion from int to char */

    printf("The value of %d in character form is '%c'\n", x, ch);
    return 0;
}
```

Output :

The value of 97 in character form is 'a'

Let us understand how the compiler handles each statement in this program.

Compiler :

1. Enters the main function.

2. Allocates 4 bytes of memory (an int generally takes 4 bytes), names it x, and stores the value 97 in it.

3. Allocates 1 byte of memory (a char takes 1 byte), names it ch, and stores in it the value of x converted to char.

4. Prints the values of x and ch.

5. Exits the main function.

We will look at points 2 and 3 in detail, as the others are straightforward.

Description of the second point :

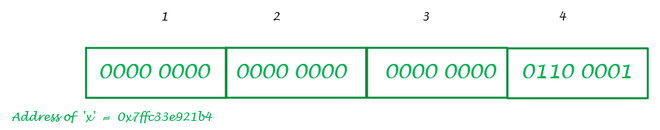

The compiler converts 97 (decimal) to binary, since memory stores data in binary form. In a 4-byte (32-bit) representation, 97 is 00000000 00000000 00000000 01100001. So the content of the variable x looks like this:

Big Endian System

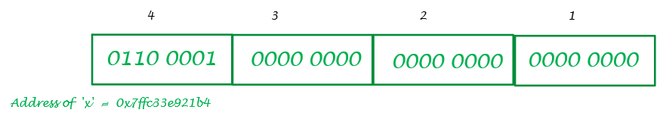

This way of storing data, with the most significant byte at the lowest memory address, is known as Big Endian. Most computer systems, however, follow the Little Endian system, which stores the least significant byte first. So on a Little Endian system, the content of x (lowest address first) is 01100001 00000000 00000000 00000000:

Little Endian system

So on most computers, multi-byte values such as an int are stored in Little Endian form.

Description of the third point :

As said earlier, a character takes up 1 byte (8 bits) of memory, so the compiler reserves that much space. When it assigns the value of x to ch, it keeps only the least significant byte of x: for an unsigned char this is exactly the value modulo 256, and for a signed char the C standard leaves out-of-range results implementation-defined, although common compilers such as GCC apply the same truncation. On a Little Endian system the least significant byte is also the first byte of x in memory, which is why the picture above matches; the conversion is defined on the value, though, so a Big Endian machine gives the same result. Here that byte is 0110 0001, which is 97 in decimal. Every character has an ASCII code associated with it, and the ASCII code for the character a is 97. That is why ch holds 'a', and the program then simply prints the content of ch.

Some more examples for better understanding :

```c
#include <stdio.h>

int main(void) {
    int x = 321;
    char ch = x;   /* only the least significant byte of 321 is kept */

    printf("The character form of %d is '%c'\n", x, ch);
    return 0;
}
```

Output :

The character form of 321 is 'A'

Explanation :

The binary representation of 321 is 00000000 00000000 00000001 01000001, and the content of x on a Little Endian system is 01000001 00000001 00000000 00000000: the byte order is simply reversed (bytes 1 2 3 4 become 4 3 2 1). Only the least significant byte (the first byte in Little Endian order), 01000001, is kept; its decimal value is 65, which is the ASCII code for the character 'A'.
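The same result can be read off from the value alone by writing 321 in base 256; the coefficient of $256^0$ is the least significant byte:

$$
321 = 0 \cdot 256^{3} + 0 \cdot 256^{2} + 1 \cdot 256^{1} + 65 \cdot 256^{0}
\quad\Longrightarrow\quad
321 \bmod 256 = 65 = \text{ASCII code of } \texttt{A}
$$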

```c
#include <stdio.h>

int main(void) {
    int x = 57;
    char ch = x;   /* 57 fits in one byte, so the value is unchanged */

    printf("The character form of %d is '%c'\n", x, ch);
    return 0;
}
```

Output :

The character form of 57 is '9'

Explanation :

The binary representation of 57 is 00000000 00000000 00000000 00111001, and the content of x on a Little Endian system is 00111001 00000000 00000000 00000000. Only the least significant byte, 00111001, is kept; its decimal value is 57, which is the ASCII code for the character '9'.

Nice To Have :

- ASCII codes: A-Z are 65-90, a-z are 97-122, and the digits 0-9 are 48-57.

- To find the character form of a non-negative int, take the value modulo 256 and look up the remainder in the ASCII table (remainders from 128 to 255 fall outside ASCII). That character is your answer.


In compiler design, typecasting refers to the process of converting a value of one data type to another data type. Typecasting is necessary when the data type of an expression needs to be changed to match the data type expected by an operator or function.

Typecasting can be explicit or implicit. Explicit typecasting is when the programmer specifies the conversion explicitly using a typecast operator, such as (int) or (float). Implicit typecasting, also known as coercion, is when the compiler automatically converts one data type to another.

Typecasting can have advantages and disadvantages in compiler design. Some of the advantages of typecasting include:

- Flexibility: Typecasting allows the programmer to use data of different types in the same expression, which can increase the flexibility and expressiveness of the code.

- Efficiency: Typecasting to a smaller type, for example storing small values in a char (1 byte) instead of an int (typically 4 bytes), can reduce memory usage.

- Interoperability: Typecasting can help improve interoperability between different programming languages and systems by allowing data to be converted between different formats.

Some of the disadvantages of typecasting include:

- Safety: Typecasting can lead to type errors and other bugs if not used correctly. For example, it can cause loss of precision or overflow when the value being converted does not fit in the target type, as when 321 becomes 'A' in the example above.

- Code complexity: Typecasting can make the code more complex and harder to read and maintain, especially if there are multiple levels of typecasting involved.

- Performance overhead: Some conversions, such as between integer and floating-point types, require extra instructions at run time, which can add up if they are performed frequently in a program.

Overall, typecasting is an important tool in compiler design, but it should be used judiciously to avoid introducing bugs and other issues in the code.
