Imagine you’re looking for a coin you dropped in a big room. At first, you take big steps, covering a lot of ground quickly. But as you get closer to the coin, you take tinier steps to look more precisely. This is similar to how learning rate decay works in machine learning.

In training a machine learning model, the “learning rate” decides how much we adjust the model in response to the error it made. Start with a high learning rate, and the model might learn quickly, but it can overshoot and miss the best solution. Start too low, and it might be too slow or get stuck. So, instead of keeping the learning rate constant, we gradually reduce it. This method is called “learning rate decay.” We start off taking big steps (high learning rate) when we’re far from the best solution. But as we get closer, we reduce the learning rate, taking smaller steps, and ensuring we don’t miss the optimal solution. This approach helps the model train faster and more accurately.

There are various ways to reduce the learning rate: some reduce it gradually over time, while others drop it sharply after a set number of training rounds. The key is to find a balance that lets the model learn efficiently without missing the best possible solution.

Learning Rate Decay

Learning rate decay, also called learning rate scheduling or learning rate annealing, is a technique used when training machine learning models, especially deep neural networks. It gradually lowers the learning rate over the course of training so that the optimization algorithm converges more quickly to a better solution. This addresses problems that are frequently linked to a fixed learning rate, such as oscillations and sluggish convergence.

Learning rate decay can be accomplished by a variety of techniques, such as step decay, exponential decay, and 1/t decay. The choice of decay strategy depends on the particular problem and model architecture. When training deep learning models, the decay schedule is an important hyperparameter that, when chosen well, can result in faster training, better convergence, and increased model performance.

How Learning Rate Decay works

Learning rate decay is like driving a car towards a parking spot. At first, you drive fast to reach the spot quickly. As you get closer, you slow down to park accurately. In machine learning, the learning rate determines how much the model changes based on the mistakes it makes. If it’s too high, the model might miss the best fit; too low, and it’s too slow. Learning rate decay starts with a higher learning rate, letting the model learn fast. As training progresses, the rate gradually decreases, making the model adjustments more precise. This ensures the model finds a good solution efficiently. Different methods reduce the rate in various ways, either stepwise or smoothly, to optimize the training process.
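To make the parking analogy concrete, here is a minimal sketch that is not part of the original example: plain gradient descent on the toy function f(x) = x², whose gradient is 2x. The function name `gradient_descent`, the starting point, and the three step-size choices are illustrative assumptions.

```python
def gradient_descent(lr_at, x0=5.0, steps=50):
    """Minimise f(x) = x**2 (gradient 2x), using step size lr_at(t) at step t."""
    x = x0
    for t in range(steps):
        x -= lr_at(t) * 2 * x
    return x


# Constant and too high: every update flips the sign of x, so it never settles.
constant_high = gradient_descent(lambda t: 1.0)
# Constant and too low: stable, but x has barely moved after 50 steps.
constant_low = gradient_descent(lambda t: 0.001)
# Decayed: start at 1.0 and shrink the step size by 10% at every step.
decayed = gradient_descent(lambda t: 1.0 * 0.9 ** t)

print(f"high: {abs(constant_high):.4f}  "
      f"low: {abs(constant_low):.4f}  "
      f"decayed: {abs(decayed):.6f}")
```

Under these assumptions, the constant high rate ends as far from the minimum as it started, the constant low rate is still most of the way from it, and the decayed run finishes very close to the minimum at 0.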

Mathematical representation of Learning rate decay

A basic learning rate decay plan can be mathematically represented as follows:

Assume that the starting learning rate is  and that the learning rate at epoch t is

and that the learning rate at epoch t is  .

.

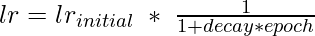

A typical decay schedule for learning rates is based on a constant decay rate  , where

, where  , applied at regular intervals (e.g., every n epochs):

, applied at regular intervals (e.g., every n epochs):

Where,

is the learning rate at epoch t.

is the learning rate at epoch t. is the initial learning rate at the start of training.

is the initial learning rate at the start of training. is the fixed decay rate, typically a small positive value, such as 0.1 or 0.01.

is the fixed decay rate, typically a small positive value, such as 0.1 or 0.01.- t is the current epoch during training.

- The learning rate

decreases as t increases, leading to smaller step size as training progresses.

decreases as t increases, leading to smaller step size as training progresses.

The learning rate is decreased by a percentage of its previous value at each epoch in this formula, which depicts a basic learning rate decay schedule. A timetable like this facilitates the optimization process by enabling the model to converge more quickly at first, then fine-tuning in smaller increments as it gets closer to a local minimum.
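As a quick worked example, assume the common inverse-time form $\eta_t = \eta_0 / (1 + \alpha \cdot t)$, where $\eta_0$ is the initial learning rate and $\alpha$ the decay rate. The values $\eta_0 = 0.1$ and $\alpha = 0.1$ are chosen here only for the arithmetic:

$$\eta_1 = \frac{0.1}{1.1} \approx 0.0909, \qquad \eta_{10} = \frac{0.1}{2} = 0.05, \qquad \eta_{100} = \frac{0.1}{11} \approx 0.0091$$

With these values the rate halves within the first 10 epochs but takes 100 epochs to fall by a factor of eleven, which is the "fast at first, slower later" behaviour described above.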

Basic decay schedules

In order to enhance the convergence of machine learning models, learning rate decay schedules are utilized to gradually lower the learning rate during training. Here are a few simple schedules for learning rate decay:

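- Step Decay: In step decay, the learning rate is multiplied by a fixed factor $\gamma$ (with $0 < \gamma < 1$) after every $s$ training epochs. The mathematical formula for step decay is:

$$\eta_t = \eta_0 \cdot \gamma^{\lfloor t / s \rfloor}$$

- Exponential Decay: The learning rate is progressively decreased over time by exponential decay. At each epoch, the learning rate is scaled by a constant factor set by the decay rate $\alpha$. The mathematical formula for exponential decay is:

$$\eta_t = \eta_0 \cdot e^{-\alpha t}$$

- Inverse Time Decay: The learning rate is divided by a term that grows linearly with the number of epochs, so it is inversely related to training time. The mathematical formula for inverse time decay is:

$$\eta_t = \frac{\eta_0}{1 + \alpha t}$$

- Polynomial Decay: The learning rate follows a polynomial function of the epoch number, falling from its initial value to zero over $T$ epochs with power $p$. The mathematical formula for polynomial decay is:

$$\eta_t = \eta_0 \left(1 - \frac{t}{T}\right)^{p}$$

In all four formulas, $\eta_0$ is the initial learning rate, $\eta_t$ is the learning rate at epoch $t$, and $\alpha$ is the decay rate.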

In simple words, these schedules adjust the learning rate during training. They help in starting with big steps and taking smaller steps as we get closer to the best solution, ensuring efficiency and precision.
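The four schedules can be sketched as plain Python functions of the epoch number. This is a minimal illustration using the standard textbook forms of each schedule; the function names and all default values (`drop`, `epochs_per_drop`, `total_epochs`, `power`, and so on) are assumptions chosen for the example, not values from the article.

```python
import math


def step_decay(epoch, initial_lr=0.1, drop=0.5, epochs_per_drop=10):
    """Multiply the rate by `drop` once every `epochs_per_drop` epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)


def exponential_decay(epoch, initial_lr=0.1, decay_rate=0.1):
    """Shrink the rate by a factor of e^(-decay_rate) per epoch."""
    return initial_lr * math.exp(-decay_rate * epoch)


def inverse_time_decay(epoch, initial_lr=0.1, decay_rate=0.1):
    """Divide the initial rate by (1 + decay_rate * epoch)."""
    return initial_lr / (1 + decay_rate * epoch)


def polynomial_decay(epoch, initial_lr=0.1, total_epochs=50, power=2.0):
    """Decay to zero at `total_epochs` along a polynomial curve."""
    progress = min(epoch, total_epochs) / total_epochs
    return initial_lr * (1 - progress) ** power


for epoch in (0, 10, 25, 50):
    print(
        f"epoch {epoch:2d}: "
        f"step={step_decay(epoch):.4f}  "
        f"exp={exponential_decay(epoch):.4f}  "
        f"inv={inverse_time_decay(epoch):.4f}  "
        f"poly={polynomial_decay(epoch):.4f}"
    )
```

Printing the four columns side by side shows the difference in shape: step decay stays flat between drops, while the other three change at every epoch.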

Steps Needed to implement Learning Rate Decay

- Set Initial Learning Rate: Start by establishing a base learning rate. It should be neither so high that it causes drastic updates nor so low that it stalls the learning process.

- Choose a Decay Method: Common methods include exponential decay, step decay, or inverse time decay. The choice depends on your specific machine learning problem.

- Implement the Decay: Apply the chosen decay method after a set number of epochs, or based on the performance of the model.

- Monitor and Adjust: Keep an eye on the model’s performance. If it’s not improving, you might need to adjust the decay rate or the method.
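The steps above mention decaying "based on the performance of the model". One common way to do that is to lower the rate when the validation loss stops improving, which Keras offers as the `ReduceLROnPlateau` callback. The sketch below is a simplified pure-Python imitation of that idea, not the Keras implementation; the function name, the `factor` and `patience` defaults, and the list of validation losses are all hypothetical.

```python
def reduce_on_plateau(val_losses, initial_lr=0.1, factor=0.5, patience=2):
    """Return the learning rate used in each epoch.

    The rate is multiplied by `factor` whenever the validation loss has
    failed to improve for `patience` consecutive epochs.
    """
    lr = initial_lr
    best_loss = float("inf")
    epochs_without_improvement = 0
    lr_per_epoch = []
    for loss in val_losses:
        lr_per_epoch.append(lr)  # rate in effect during this epoch
        if loss < best_loss:
            best_loss = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                lr *= factor  # plateau detected: take smaller steps from now on
                epochs_without_improvement = 0
    return lr_per_epoch


# Hypothetical validation losses, one per epoch.
val_losses = [1.0, 0.8, 0.8, 0.81, 0.7, 0.7, 0.7]
lr_history = reduce_on_plateau(val_losses)
print(lr_history)
```

Unlike a fixed schedule, this approach only reduces the rate when monitoring shows that progress has stalled, which covers the "Monitor and Adjust" step automatically.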

Implementing Learning Rate Decay

Let’s see a simple example of implementing learning rate decay using TensorFlow. In this script, we’ll use a basic neural network model for a classification task on MNIST, a dataset of handwritten digits.

Importing Libraries

```python
import tensorflow as tf
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras.optimizers import SGD
from tensorflow.keras.callbacks import LearningRateScheduler
import numpy as np
```

We’re importing necessary modules from TensorFlow. We’ll use the Keras API within TensorFlow to load the dataset, build, compile, and train our model.

Loading Data

```python
# Load MNIST and scale pixel values from [0, 255] to [0, 1].
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0
```

This snippet of code loads the MNIST dataset, which includes handwritten digit pictures, and divides the pixel values by 255.0 to normalize them to a range between 0 and 1.

Building the Model

```python
model = Sequential([
    Flatten(input_shape=(28, 28)),    # 28x28 image -> 784-element vector
    Dense(128, activation='relu'),    # hidden layer
    Dense(10, activation='softmax')   # one output per digit class
])
```

This code uses Keras to define a sequential neural network model. It consists of an input layer that flattens the 28×28 pixel image, a hidden layer with 128 units using ReLU activation, and an output layer with 10 units using a softmax activation for digit classification.

Setting up Learning Rate Decay

```python
initial_learning_rate = 0.1
lr_schedule = tf.keras.optimizers.schedules.ExponentialDecay(
    initial_learning_rate=initial_learning_rate,
    decay_steps=1000,
    decay_rate=0.96,
    staircase=True)
```

This code sets the starting learning rate to 0.1 and defines a learning rate schedule using TensorFlow’s ExponentialDecay class. Here, initial_learning_rate is the starting value, decay_rate is the factor the learning rate is multiplied by at each decay, and decay_steps is the number of optimizer steps (batches, not epochs) between decays. With staircase=True, the decay is applied at discrete intervals, so the learning rate stays constant for 1000 steps and is then multiplied by 0.96. With the default batch size of 32, one MNIST epoch is 1875 steps, so the rate drops once or twice per epoch.
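To see what this schedule produces, the sketch below re-implements the documented ExponentialDecay formula, `initial_learning_rate * decay_rate ** (step / decay_steps)`, in plain Python, with the exponent rounded down when staircase is enabled. The function is a stand-in written for illustration, not TensorFlow code. The figure of 1875 steps per epoch assumes the 60,000 MNIST training images and the Keras default batch size of 32.

```python
import math


def exponential_decay(step, initial_lr=0.1, decay_steps=1000,
                      decay_rate=0.96, staircase=True):
    """Plain-Python version of the exponential decay formula."""
    exponent = step / decay_steps
    if staircase:
        exponent = math.floor(exponent)  # decay only at whole multiples of decay_steps
    return initial_lr * decay_rate ** exponent


steps_per_epoch = 1875  # assumed: 60,000 training images / batch size 32

for epoch in (1, 2, 3, 15):
    step = epoch * steps_per_epoch
    print(f"after epoch {epoch:2d} (step {step}): lr = {exponential_decay(step):.4f}")
```

Under these assumptions the values after epochs 1, 2, 3 and 15 come out as 0.0960, 0.0885, 0.0815 and 0.0319, which is how a per-step schedule shows up when it is read once per epoch.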

Compiling the Model

```python
model.compile(optimizer=SGD(learning_rate=lr_schedule),
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
```

This code compiles the neural network model. It uses the stochastic gradient descent (SGD) optimizer, whose learning rate is set by the previously defined lr_schedule. The model is configured to minimize the sparse categorical cross-entropy loss, which suits classification with integer labels, and to report the accuracy metric while training. The optimizer evaluates the schedule at every training step, so the learning rate follows the decay schedule automatically.

Learning Rate Scheduler Callback

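```python
def scheduler(epoch, lr):
    # Keep the learning rate unchanged for the first 10 epochs (epochs 0-9).
    if epoch < 10:
        return lr
    # Afterwards, shrink it by a factor of e^-0.1 (about 0.905) per epoch.
    # Return a plain Python float, which the callback accepts.
    return float(lr * np.exp(-0.1))


callback = LearningRateScheduler(scheduler)
```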

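This code defines a custom learning rate scheduler. The scheduler function accepts two parameters, epoch and lr (the current learning rate). It leaves the learning rate unchanged for the first ten epochs. From the eleventh epoch onward, it multiplies the learning rate by e^-0.1 (about 0.905) at each epoch, which is an exponential decay. Note that the optimizer above already receives lr_schedule, and a LearningRateScheduler callback is an alternative way to control the learning rate rather than an addition to it. In the training log shown in the next section, the lr values follow the ExponentialDecay schedule, and some Keras versions raise an error when a callback tries to set the learning rate of an optimizer that was created with a schedule object.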
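To see what this scheduler would do when used on its own, the following sketch simulates the call pattern in plain Python: Keras calls the function at the start of every epoch with the zero-based epoch index and the current learning rate. The starting value of 0.1 and the loop itself are assumptions made for this illustration, and `math.exp` replaces the TensorFlow call so the snippet runs without TensorFlow.

```python
import math


def scheduler(epoch, lr):
    """Hold the rate for epochs 0-9, then decay it by e^-0.1 per epoch."""
    if epoch < 10:
        return lr
    return lr * math.exp(-0.1)


lr = 0.1  # assumed constant starting learning rate
history = []
for epoch in range(15):
    lr = scheduler(epoch, lr)  # the callback would set this on the optimizer
    history.append(lr)

for epoch, value in enumerate(history):
    print(f"epoch {epoch + 1:2d}: lr = {value:.4f}")
```

In this simulation the rate stays at 0.1 through the tenth epoch and then falls to about 0.0607 by the fifteenth, a much gentler decay than one that starts from the first step.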

Training the model

```python
model.fit(x_train, y_train, epochs=15,
          callbacks=[callback],
          validation_data=(x_test, y_test))
```

Output:

Epoch 1/15

1875/1875 [==============================] - 3s 1ms/step - loss: 0.3002 - accuracy: 0.9140 - val_loss: 0.1772 - val_accuracy: 0.9470 - lr: 0.0960

Epoch 2/15

1875/1875 [==============================] - 2s 1ms/step - loss: 0.1472 - accuracy: 0.9572 - val_loss: 0.1361 - val_accuracy: 0.9574 - lr: 0.0885

Epoch 3/15

1875/1875 [==============================] - 2s 1ms/step - loss: 0.1079 - accuracy: 0.9688 - val_loss: 0.1016 - val_accuracy: 0.9697 - lr: 0.0815

Epoch 4/15

1875/1875 [==============================] - 2s 1ms/step - loss: 0.0862 - accuracy: 0.9750 - val_loss: 0.0908 - val_accuracy: 0.9727 - lr: 0.0751

Epoch 5/15

1875/1875 [==============================] - 2s 1ms/step - loss: 0.0719 - accuracy: 0.9795 - val_loss: 0.0816 - val_accuracy: 0.9744 - lr: 0.0693

Epoch 6/15

1875/1875 [==============================] - 2s 1ms/step - loss: 0.0620 - accuracy: 0.9821 - val_loss: 0.0836 - val_accuracy: 0.9727 - lr: 0.0638

Epoch 7/15

1875/1875 [==============================] - 2s 1ms/step - loss: 0.0545 - accuracy: 0.9850 - val_loss: 0.0749 - val_accuracy: 0.9758 - lr: 0.0588

Epoch 8/15

1875/1875 [==============================] - 3s 1ms/step - loss: 0.0486 - accuracy: 0.9864 - val_loss: 0.0728 - val_accuracy: 0.9763 - lr: 0.0565

Epoch 9/15

1875/1875 [==============================] - 2s 1ms/step - loss: 0.0433 - accuracy: 0.9882 - val_loss: 0.0722 - val_accuracy: 0.9780 - lr: 0.0520

Epoch 10/15

1875/1875 [==============================] - 2s 1ms/step - loss: 0.0396 - accuracy: 0.9895 - val_loss: 0.0713 - val_accuracy: 0.9785 - lr: 0.0480

Epoch 11/15

1875/1875 [==============================] - 2s 1ms/step - loss: 0.0360 - accuracy: 0.9910 - val_loss: 0.0686 - val_accuracy: 0.9790 - lr: 0.0442

Epoch 12/15

1875/1875 [==============================] - 2s 1ms/step - loss: 0.0332 - accuracy: 0.9919 - val_loss: 0.0696 - val_accuracy: 0.9782 - lr: 0.0407

Epoch 13/15

1875/1875 [==============================] - 3s 1ms/step - loss: 0.0310 - accuracy: 0.9925 - val_loss: 0.0683 - val_accuracy: 0.9793 - lr: 0.0375

Epoch 14/15

1875/1875 [==============================] - 2s 1ms/step - loss: 0.0288 - accuracy: 0.9930 - val_loss: 0.0669 - val_accuracy: 0.9784 - lr: 0.0346

Epoch 15/15

1875/1875 [==============================] - 2s 1ms/step - loss: 0.0271 - accuracy: 0.9939 - val_loss: 0.0684 - val_accuracy: 0.9789 - lr: 0.0319

With the training sets x_train and y_train, this code trains the neural network model for a period of 15 epochs. In order to dynamically modify the learning rate during training, it makes use of the learning rate scheduler callback, callback. Additionally, it assesses how effectively the model generalizes to new data by validating its performance on test data (x_test, y_test).

Evaluation

```python
test_loss, test_accuracy = model.evaluate(x_test, y_test, verbose=2)
print('\nTest accuracy:', test_accuracy)
```

Output:

Test accuracy: 0.9789000153541565

This code evaluates the trained model on the test data (x_test and y_test) and returns the test loss and accuracy. With verbose=2, evaluate prints a single summary line instead of a progress bar. The test accuracy is then printed to indicate how well the model has classified the test data.

Check Current Learning Rate

```python
# Evaluate the schedule at the optimizer's current step count.
current_lr = lr_schedule(model.optimizer.iterations)
print(f"Current learning rate: {current_lr.numpy()}")
```

Output:

Current learning rate: 0.031885575503110886

We retrieve and print the current learning rate after training by evaluating the schedule at the optimizer’s step counter, which shows how much the rate decayed during training. The points below summarize the output of training the neural network model for 15 epochs on the MNIST dataset.

- Epochs: The training process occurred in 15 cycles, or “epochs.” Each epoch represents one complete forward and backward pass of all training examples.

- Loss & Accuracy: For each epoch, the ‘loss’ and ‘accuracy’ values show how well the model is doing during training. As epochs progress, the loss decreases, and accuracy increases, indicating the model is improving.

- Validation Loss & Accuracy: ‘val_loss’ and ‘val_accuracy’ represent how well the model performs on a separate set of data it hasn’t seen before. A lower validation loss and higher accuracy indicate better generalization.

- Training Time: Each epoch’s duration is noted (e.g., “2s 1ms/step”), showing the total time for the epoch and the average time taken to process each batch of data.

- Test Accuracy: After training for all epochs, the model is evaluated on a test dataset, and it achieved an accuracy of approximately 97.9%.

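- Learning Rate: The final line shows the learning rate at the end of training, about 0.0319. This equals 0.1 × 0.96^28, because 15 epochs of 1875 steps give 28,125 steps and the staircase schedule decays once per 1000 steps. The model started with a higher learning rate and reduced it over time, as per the learning rate decay strategy.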

Advantages of Learning Rate Decay

Deep learning and machine learning models are frequently trained using the learning rate decay technique. It provides a number of benefits that support more effective and efficient training, including:

- Improved Convergence: Lowering the learning rate as training goes on helps the model converge to a better solution, because smaller steps make it less likely to overshoot the minimum of the loss function.

- Enhanced Generalization: Smaller learning rates in the later stages of training can improve a model’s ability to generalize to new data and help reduce overfitting.

- Stability: By avoiding significant weight changes that could lead to the model oscillating or diverging, learning rate decay stabilizes training.

Disadvantages of Learning Rate Decay

While there are many benefits to learning rate decay, it’s important to be aware of any potential drawbacks and difficulties while using it. Considerations and disadvantages are as follows:

- Complexity: Choosing and implementing an appropriate learning rate decay schedule adds complexity to the training process, particularly for big and complex neural networks.

- Hyperparameter Sensitivity: The initial learning rate and the decay schedule are themselves hyperparameters that need tuning. A poorly chosen schedule or setting can work against training instead of in favor of it.

- Delayed Convergence: Aggressive learning rate decay can sometimes make the model converge very slowly, which could require more training time.
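The delayed-convergence risk can be illustrated with a small sketch on the toy function f(x) = x² (gradient 2x). Everything here, including the function name `final_distance` and both schedules, is an assumption chosen for illustration. If the learning rate is halved at every step, the total distance the optimizer can travel is bounded, so it stalls far from the minimum.

```python
def final_distance(lr_at, x0=5.0, steps=100):
    """Run gradient descent on f(x) = x**2 and return |x| after `steps` updates."""
    x = x0
    for t in range(steps):
        x -= lr_at(t) * 2 * x
    return abs(x)


# Aggressive: halve the learning rate after every single step.
aggressive = final_distance(lambda t: 0.1 * 0.5 ** t)
# Gentle: inverse-time decay with a small decay rate.
gentle = final_distance(lambda t: 0.1 / (1 + 0.01 * t))

print(f"aggressive decay: |x| = {aggressive:.4f}")
print(f"gentle decay:     |x| = {gentle:.6f}")
```

With these settings the aggressive schedule leaves x at roughly two thirds of its starting value after 100 steps, because the learning rate becomes negligible almost immediately, while the gentle schedule ends very close to the minimum at 0.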
