How to Fix the “Too Many Open Files” Error in Linux

Last Updated: 18 Mar, 2024

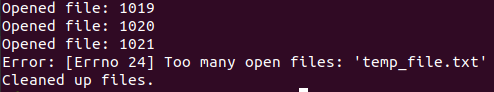

Encountering the “Too Many Open Files” error in Linux can be frustrating, especially when it disrupts your workflow or server operations. This error typically occurs when a process or the system reaches its limit for open file descriptors, which are references to open files or other resources such as sockets, pipes, or device files. Resolving it involves understanding the underlying cause and applying the appropriate fix. In this article, we will explore the common causes of this error and walk through practical solutions with examples.

“Too Many Open Files” error in Linux

Understanding the Error

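Before delving into solutions, it’s essential to understand why this error occurs. Linux limits the number of file descriptors that a process can have open simultaneously. When a process reaches this limit, system calls such as open() fail with the EMFILE error, which is reported as “Too many open files”. Each process has a soft limit, which is the one actually enforced, and a hard limit, which is the ceiling up to which an unprivileged process may raise its own soft limit. The ulimit shell built-in displays and changes these limits for the current shell and the processes started from it, and default values can be configured globally or per user. A separate system-wide cap on open files, fs.file-max, is managed with sysctl.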
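As a minimal sketch of how a program can inspect these limits for itself, the snippet below uses Python's standard resource module. The helper name nofile_limits is only illustrative and is not part of any library.

```python
import resource


def nofile_limits():
    """Return the (soft, hard) open-file limits of the current process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


soft, hard = nofile_limits()
# resource.RLIM_INFINITY is reported when a limit is unlimited.
print(f"soft limit: {soft}, hard limit: {hard}")
```

The soft value should match what ulimit -n prints in the shell that launched the script, since child processes inherit the limits of their parent.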

Identifying the Cause

Several factors can contribute to the “Too Many Open Files” error:

- Inefficient Resource Management: Poorly written applications or scripts may fail to release file descriptors after use, leading to a gradual accumulation of open files.

- System Limits: The system may have conservative limits set for file descriptors, which can be exceeded under heavy load or when running multiple processes concurrently.

- File Descriptor Leakage: Some applications may have bugs that cause file descriptors to leak, consuming system resources over time.
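To make the leak scenario concrete, the following sketch deliberately reproduces the error in a controlled way. It lowers the soft limit of the current process, opens /dev/null repeatedly without closing it, and records the error that eventually occurs. The function name open_until_failure and the limit of 64 are assumptions chosen for illustration.

```python
import errno
import os
import resource


def open_until_failure(limit=64):
    """Leak descriptors under a lowered soft limit.

    Returns (descriptors_opened, errno_of_the_failure).
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    if hard != resource.RLIM_INFINITY:
        limit = min(limit, hard)  # the soft limit may not exceed the hard limit
    resource.setrlimit(resource.RLIMIT_NOFILE, (limit, hard))

    leaked = []
    failure = None
    try:
        while True:
            # Simulated bug: descriptors are opened but never closed.
            leaked.append(os.open(os.devnull, os.O_RDONLY))
    except OSError as exc:
        failure = exc.errno
    finally:
        # Clean up and restore the original soft limit.
        for fd in leaked:
            os.close(fd)
        resource.setrlimit(resource.RLIMIT_NOFILE, (soft, hard))
    return len(leaked), failure


count, err = open_until_failure(64)
print(f"opened {count} descriptors before failing with {errno.errorcode[err]}")
```

The count is lower than the limit because descriptors that are already open, such as standard input, output, and error, are included in the limit.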

Solutions

1. Adjusting System Limits

To address the issue of system limits, you can increase the maximum number of file descriptors allowed per process. Follow these steps:

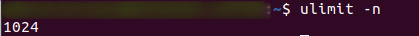

Step 1: Check the current limits using the ulimit command:

ulimit -n

Current limit on open files

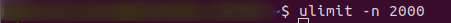

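Step 2: To temporarily increase the limit for the current shell session, use the ulimit command with the -n flag followed by the desired value. The new value cannot exceed the hard limit (shown by ulimit -Hn) unless you are root, and it is lost when the shell exits: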

ulimit -n 2000

Changing the limit temporarily
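A process can make the same temporary change for itself at startup. The sketch below, which assumes a hypothetical helper called raise_soft_limit, raises the soft limit toward a target value without going past the hard limit, so it does not need root privileges.

```python
import resource


def raise_soft_limit(target):
    """Raise the soft open-file limit to `target`, capped at the hard limit.

    Returns the soft limit in effect afterwards.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    if hard != resource.RLIM_INFINITY:
        target = min(target, hard)  # an unprivileged process cannot exceed this
    # Only ever raise the limit; leave it alone if it is already high enough.
    if soft != resource.RLIM_INFINITY and target > soft:
        resource.setrlimit(resource.RLIMIT_NOFILE, (target, hard))
    return resource.getrlimit(resource.RLIMIT_NOFILE)[0]


print("soft limit now:", raise_soft_limit(2000))
```

Like ulimit -n in a shell, this affects only the calling process and the children it starts afterwards.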

Step 3: To make the change permanent, edit the limits.conf file located in the /etc/security/ directory. Add or modify the following lines:

* soft nofile 65536

* hard nofile 65536

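Replace 65536 with the desired maximum number of file descriptors. These limits are applied by PAM at login, so log out and back in for them to take effect. Services started by systemd do not read this file; their limit is set with the LimitNOFILE= directive in the unit file.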
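After logging back in, it can be useful to confirm which limits a process actually received. One way, sketched below, is to read the “Max open files” row of /proc/<pid>/limits. The helper name max_open_files is an assumption, and the parsing relies on the usual layout of that file on Linux.

```python
from pathlib import Path


def max_open_files(pid="self"):
    """Return the (soft, hard) 'Max open files' values for a process.

    Values are returned as strings because either one may be 'unlimited'.
    """
    label = "Max open files"
    for line in Path(f"/proc/{pid}/limits").read_text().splitlines():
        if line.startswith(label):
            # The rest of the row holds: soft limit, hard limit, units.
            soft, hard, _units = line[len(label):].split()
            return soft, hard
    raise LookupError(f"'{label}' row not found for pid {pid}")


soft, hard = max_open_files()
print(f"soft={soft} hard={hard}")
```

Passing the PID of a running server instead of the default "self" shows the limits that server is really running with, which may differ from those of your login shell. Reading another user's process may require root.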

2. Closing Unused File Descriptors

Ensure that your application or script properly closes file descriptors after use. Failure to do so can result in resource leakage. Here’s a Python example demonstrating proper file descriptor management:

with open('example.txt', 'r') as file:
    data = file.read()  # Do operations with the file
# The file descriptor is closed automatically when the with block exits, even if an error occurs
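One way to check that descriptors really are released is to count the entries in /proc/self/fd before and after the work is done. The sketch below does this around a loop that reads several files using context managers. The helper names open_fd_count and read_all are illustrative, and the temporary files exist only for the demonstration.

```python
import os
import tempfile


def open_fd_count():
    """Count the descriptors currently open in this process (Linux only)."""
    return len(os.listdir("/proc/self/fd"))


def read_all(paths):
    """Read each file in turn, closing every descriptor before the next open."""
    contents = []
    for path in paths:
        with open(path, "r") as handle:
            contents.append(handle.read())
    return contents


with tempfile.TemporaryDirectory() as tmp:
    paths = []
    for i in range(3):
        path = os.path.join(tmp, f"sample{i}.txt")
        with open(path, "w") as handle:
            handle.write(f"line {i}\n")
        paths.append(path)

    before = open_fd_count()
    read_all(paths)
    after = open_fd_count()
    print("descriptors leaked:", after - before)
```

If the count grows steadily across repeated calls in a long-running program, some code path is probably opening descriptors without closing them.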

3. Debugging and Monitoring

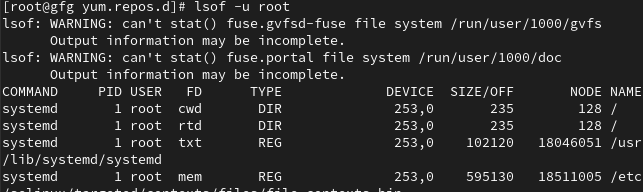

Use tools like lsof (list open files) and strace (trace system calls) to identify processes with a high number of open file descriptors and trace their behavior. For example:

lsof -u <username>

Viewing the list of open files for a particular user

Replace <username> with the username of the affected user.
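When lsof output is too long to scan by eye, a per-process summary can help narrow things down. The following sketch counts the entries under /proc/<pid>/fd for every process the current user is allowed to inspect and returns the largest consumers. It is a rough stand-in for this kind of triage, not a replacement for lsof, and fd_counts is a hypothetical helper name.

```python
import os


def fd_counts(top=5):
    """Return up to `top` (open_descriptor_count, pid) pairs, largest first."""
    counts = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue  # skip non-process entries in /proc
        try:
            n = len(os.listdir(f"/proc/{entry}/fd"))
        except OSError:
            # The process exited, or it belongs to another user.
            continue
        counts.append((n, int(entry)))
    counts.sort(reverse=True)
    return counts[:top]


for n, pid in fd_counts():
    print(f"pid {pid}: {n} open descriptors")
```

Run as a regular user, this only covers your own processes; run as root to see every process on the system.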

How to Fix the “Too Many Open Files” Error in Linux – FAQs

What does the “Too Many Open Files” error mean in Linux?

This error indicates that a process (or, less commonly, the system as a whole) has reached its limit for the number of file descriptors that can be open at the same time. File descriptors are used by programs to access files, sockets, pipes, and other I/O resources. Once the limit is reached, the process cannot open additional files or connections until it closes some, which leads to the error.

What causes the “Too Many Open Files” error?

The error typically occurs when a process or application tries to open more files than its limit allows. This can happen due to programming errors such as descriptor leaks, misconfigured applications, or limits that are too low for a server environment. It can also appear under heavy load, when a server legitimately needs more descriptors (for example, one per client connection) than its limit permits.

How can I check the current limit for open files in my Linux system?

You can check the current limit for open files using the `ulimit` command with the `-n` option. Simply type `ulimit -n` in the terminal to display the current (soft) limit on open file descriptors for your shell session.

How do I fix the “Too Many Open Files” error in Linux?

There are several approaches to fixing this error. One common solution is to increase the maximum number of open files allowed by adjusting system-wide or per-user limits using tools like `ulimit`, `sysctl`, or by editing configuration files such as `/etc/security/limits.conf`. Another approach is to optimize your applications to use file descriptors more efficiently, closing files promptly after use and implementing proper error handling to prevent leaks.

Are there any tools or commands to help diagnose and troubleshoot the “Too Many Open Files” error?

Yes, several tools and commands can help diagnose and troubleshoot this error. `lsof` (List Open Files) is a powerful command-line utility that lists all open files and the processes that opened them, which can help identify which processes are consuming too many file descriptors. `strace` can be used to trace system calls made by a process, providing insights into file operations and potential issues. Additionally, monitoring tools like `top` and `htop` can help identify processes consuming excessive resources.

Conclusion

The “Too Many Open Files” error in Linux can be mitigated by adjusting system limits, closing unused file descriptors, and debugging resource-intensive processes. By understanding the causes and applying appropriate solutions, you can ensure smoother operation of your Linux system and applications. Remember to monitor system resources regularly to detect and address potential issues proactively.
