Cache Eviction Policies | System Design

Last Updated: 23 Jan, 2024

Cache eviction refers to the process of removing data from a cache to make room for new or more relevant information. Caches store frequently accessed data for quicker retrieval, improving overall system performance. However, caches have limited capacity, and when the cache is full, the system must decide which data to remove. The eviction policy determines the criteria for selecting the data to be replaced. This post takes a detailed look at cache eviction and its most common policies.



What are Cache Eviction Policies?

Cache eviction policies are algorithms or strategies that determine which data to remove from a cache when it reaches its capacity limit. These policies aim to maximize the cache’s efficiency by retaining the most relevant and frequently accessed information. Efficient cache eviction policies are crucial for maintaining optimal performance in systems with limited cache space, ensuring that valuable data is retained for quick retrieval.

Cache Eviction Policies

Some of the most important and common cache eviction strategies are:

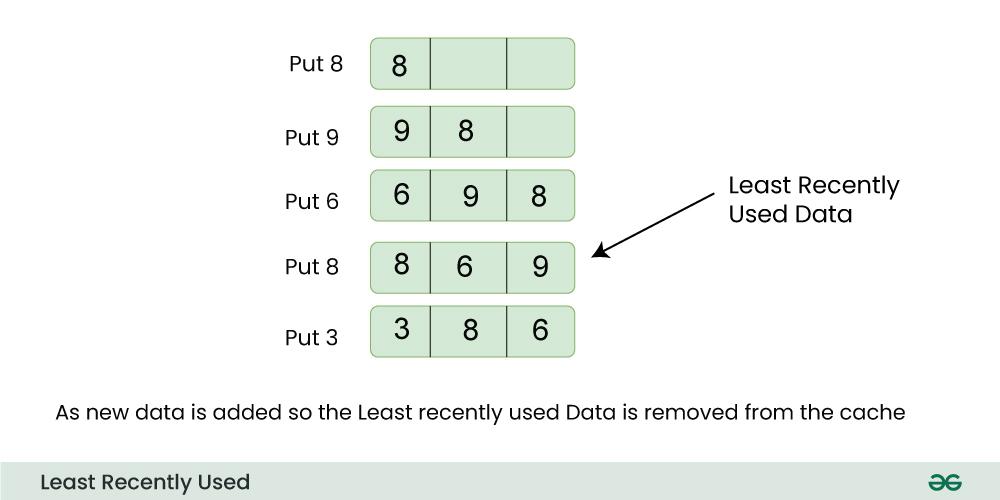

1. Least Recently Used (LRU)

In the Least Recently Used (LRU) cache eviction policy, the idea is to remove the least recently accessed item when the cache reaches its capacity limit. The assumption is that items that haven’t been accessed for a longer time are less likely to be used in the near future. LRU maintains a record of the order in which items are accessed, and when the cache is full, it evicts the item that hasn’t been accessed for the longest period.

For Example:

Consider a cache with a maximum capacity of 3 that already holds items A, B, and C, accessed in that order (so A is the least recently used).

- If a new item, D, is accessed, the cache is already full, so the LRU policy evicts the least recently used item, A. The cache now holds items B, C, and D.

- If item B is accessed next, it becomes the most recently used item, and the order (from least to most recently used) becomes C, D, B.

- If another new item, E, is accessed, the cache must evict again. C is now the least recently used item, so it is evicted, leaving items D, B, and E, in that order from least to most recently used.

LRU ensures that the most recently accessed items are retained in the cache, optimizing for scenarios where recent access patterns are indicative of future accesses.
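The walkthrough above can be reproduced with a minimal Python sketch. It assumes an `OrderedDict` whose key order tracks recency (least recently used first); the class name `LRUCache` and its `get`/`put`/`order` methods are illustrative, not the API of any particular library.

```python
from collections import OrderedDict


class LRUCache:
    """Minimal LRU sketch: keys are ordered from least to most recently used."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.data: OrderedDict = OrderedDict()

    def get(self, key):
        if key not in self.data:
            return None  # cache miss
        self.data.move_to_end(key)  # mark as most recently used
        return self.data[key]

    def put(self, key, value) -> None:
        if key in self.data:
            self.data.move_to_end(key)  # an update also counts as a use
        elif len(self.data) >= self.capacity:
            self.data.popitem(last=False)  # evict the least recently used key
        self.data[key] = value

    def order(self):
        """Keys from least to most recently used."""
        return list(self.data)


cache = LRUCache(3)
for k in "ABC":
    cache.put(k, k.lower())
cache.put("D", "d")    # cache is full, so A is evicted
cache.get("B")         # B becomes the most recently used item
cache.put("E", "e")    # C is now least recently used, so it is evicted
print(cache.order())   # ['D', 'B', 'E']
```

An `OrderedDict` is used here only because `move_to_end` and `popitem(last=False)` express "touch" and "evict oldest" directly; a hash map combined with a doubly linked list is the usual hand-rolled equivalent.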

Advantages of Least Recently Used (LRU)

- Simple Implementation: LRU is relatively easy to implement and understand, making it a straightforward choice for many caching scenarios.

- Efficient Use of Cache: LRU is effective in scenarios where recent accesses are good predictors of future accesses. Because frequently accessed items are also accessed recently, they tend to stay in the cache.

- Adaptability: LRU is adaptable to various types of applications, including databases, web caching, and file systems.

Disadvantages of Least Recently Used (LRU)

- Strict Ordering: LRU assumes that the order of access accurately reflects the future usefulness of an item. In certain cases, this assumption may not hold true, leading to suboptimal cache decisions.

- Cold Start Issues: When a cache is initially populated, LRU might not perform optimally as it requires sufficient historical data to make informed eviction decisions.

- Memory Overhead: Implementing LRU often requires additional memory to store timestamps or maintain access order, which can impact the overall memory consumption of the system.

Use Cases of Least Recently Used (LRU)

- Web Caching: In web caching scenarios, LRU is commonly employed to store frequently accessed web pages, images, or resources. This reduces latency by keeping the most recently used content readily available, improving overall website performance.

- Database Management: LRU is often used in database systems to cache query results or frequently accessed data pages. This accelerates query response times by keeping recently used data in memory, reducing the need to fetch data from slower disk storage.

- File Systems: File systems can benefit from LRU when caching file metadata or directory information. Frequently accessed files and directories are kept in the cache, improving file access speed and reducing the load on the underlying storage.

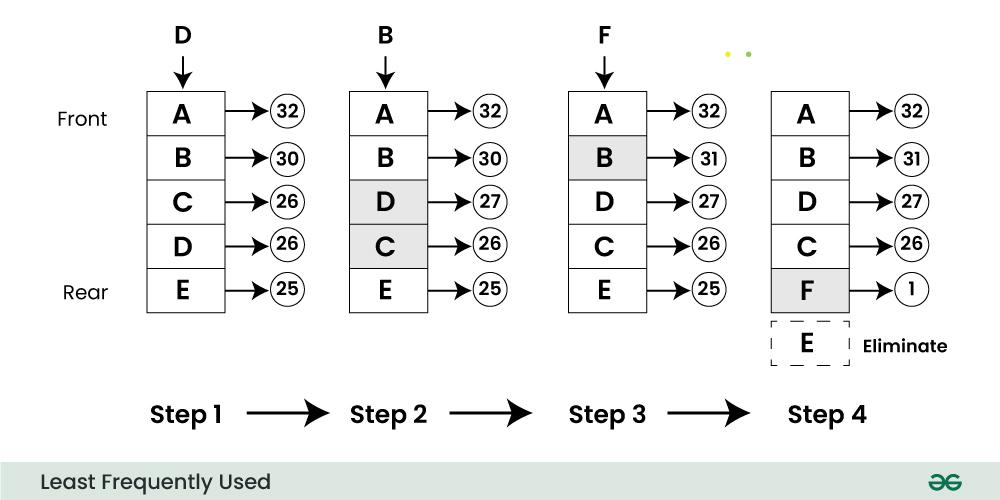

2. Least Frequently Used (LFU)

LFU is a cache eviction policy that removes the least frequently accessed items first. It operates on the principle that items with the fewest accesses are less likely to be needed in the future. LFU maintains a count of how often each item is accessed and, when the cache is full, evicts the item with the lowest access frequency.

For Example:

Consider a full cache holding items X, Y, and Z, where item Z has been accessed fewer times than items X and Y. When a new item must be added, the LFU policy evicts Z and retains X and Y.

In summary, LRU focuses on the recency of accesses, while LFU considers the frequency of accesses when deciding which items to retain in the cache.
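The X, Y, Z example can be sketched in Python with a per-key access counter. This is a minimal illustration under stated assumptions: the class name `LFUCache` is hypothetical, ties between equal counts are broken by insertion order (real LFU variants often break ties by recency instead), and eviction scans every counter, so it costs O(n) per eviction. Production implementations typically use more elaborate structures to avoid that scan.

```python
class LFUCache:
    """Minimal LFU sketch: evicts the key with the lowest access count.

    Assumption: ties are broken by insertion order (first key found by min()).
    """

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.values = {}
        self.counts = {}  # key -> number of accesses

    def get(self, key):
        if key not in self.values:
            return None  # cache miss
        self.counts[key] += 1
        return self.values[key]

    def put(self, key, value) -> None:
        if key in self.values:
            self.values[key] = value
            self.counts[key] += 1
            return
        if len(self.values) >= self.capacity:
            # Linear scan for the least frequently used key.
            victim = min(self.counts, key=self.counts.get)
            del self.values[victim]
            del self.counts[victim]
        self.values[key] = value
        self.counts[key] = 1


cache = LFUCache(3)
for k in "XYZ":
    cache.put(k, k.lower())
for _ in range(3):
    cache.get("X")
    cache.get("Y")
cache.get("Z")
cache.put("W", "w")          # Z has the lowest count (2), so it is evicted
print(sorted(cache.values))  # ['W', 'X', 'Y']
```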

Advantages of Least Frequently Used (LFU)

- Adaptability to Varied Access Patterns: LFU does not strictly favor recently accessed items. An item with a high overall access count stays in the cache even if it has not been used for a while, which suits workloads where essential items are accessed in bursts or at long intervals.

- Optimized for Long-Term Trends: LFU can be beneficial when the relevance of an item is better captured by its overall frequency of access over time than by recent accesses. It is well suited to scenarios where items with higher historical access frequencies are likely to be more relevant.

- Low Memory Overhead: LFU stores a counter per item rather than a timestamp, so it may have lower memory overhead than timestamp-based LRU implementations. This can be advantageous in memory-constrained environments.

Disadvantages of Least Frequently Used (LFU)

- Sensitivity to Initial Access: LFU may not perform optimally during the initial stages, when access frequencies are still being established. It relies on historical access patterns, and a new or less frequently accessed item might not be retained in the cache until its long-term frequency is established.

- Difficulty in Handling Changing Access Patterns: LFU can struggle in scenarios where access patterns change frequently. Items that were once heavily accessed but are no longer relevant might continue to be retained in the cache.

- Complexity of Frequency Counters: Implementing accurate frequency counting for items can add complexity to LFU implementations. Maintaining and updating frequency counters for every item in the cache can be resource-intensive.

Use Cases of Least Frequently Used (LFU)

- Database Query Caching: In database management systems, LFU can be applied to cache query results or frequently accessed data. Results that are requested often over the long term stay in the cache even if they have not been used very recently.

- Network Routing Tables: LFU is useful in caching routing information for networking applications. Frequently used routes stay in the cache while rarely used routes are evicted first, allowing efficient routing decisions based on historical usage.

- Content Recommendations: In content recommendation systems, LFU can be employed to cache information about user preferences or content suggestions. Preferences and suggestions that are requested consistently over time remain cached, rather than only whatever was requested most recently.

3. First-In-First-Out (FIFO)

First-In-First-Out (FIFO) is a cache eviction policy that removes the oldest item from the cache when it becomes full. In this strategy, data is stored in the cache in the order it arrives, and the item that has been present in the cache for the longest time is the first to be evicted when the cache reaches its capacity.

For Example:

Imagine a cache with a capacity of three items:

- A is added to the cache.

- B is added to the cache.

- C is added to the cache.

At this point, the cache is full (capacity = 3).

If a new item, D, needs to be added, the FIFO policy dictates that the oldest item, A, is evicted. The cache then looks like this:

- D is added to the cache (A is evicted).

- The order of items in the cache is now B, C, and D, reflecting the chronological order of their arrival.

This gives a fair and straightforward approach based on the order in which items were inserted, making it suitable for scenarios where maintaining a temporal order is important.
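A minimal Python sketch of the same A, B, C, D sequence follows. The class name `FIFOCache` is hypothetical. The key difference from the LRU sketch is that reads never change the order, so reading A just before D arrives does not save it from eviction.

```python
from collections import OrderedDict


class FIFOCache:
    """Minimal FIFO sketch: evicts the key that was inserted earliest."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.data: OrderedDict = OrderedDict()  # keys in insertion order

    def get(self, key):
        return self.data.get(key)  # reads do not reorder anything

    def put(self, key, value) -> None:
        if key not in self.data and len(self.data) >= self.capacity:
            self.data.popitem(last=False)  # evict the oldest insertion
        # Assigning to an existing key keeps its original position.
        self.data[key] = value


cache = FIFOCache(3)
for k in "ABC":
    cache.put(k, k.lower())
cache.get("A")           # reading A does not protect it
cache.put("D", "d")      # A is the oldest entry, so it is evicted
print(list(cache.data))  # ['B', 'C', 'D']
```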

Advantages of First-In-First-Out (FIFO)

- Simple Implementation: FIFO is straightforward to implement, making it an easy choice for scenarios where simplicity is a priority.

- Predictable Behavior: The eviction process in FIFO is predictable and follows a strict order based on the time of entry into the cache. This predictability can be advantageous in certain applications.

- Memory Efficiency: FIFO has relatively low memory overhead compared to some other eviction policies since it doesn’t require additional tracking of access frequencies or timestamps.

Disadvantages of First-In-First-Out (FIFO)

- Lack of Adaptability: FIFO may not adapt well to varying access patterns. It strictly adheres to the order of entry, which might not reflect the actual importance or relevance of items.

- Inefficiency in Handling Variable Importance: FIFO might lead to inefficiencies when newer items are more relevant or frequently accessed than older ones. This can result in suboptimal cache performance.

- Cold Start Issues: When a cache is initially populated or after a cache flush, FIFO may not perform optimally, as it tends to keep items in the cache based solely on their entry time, without considering their actual usage.
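To make the adaptability and relevance points concrete, here is a minimal Python sketch that replays one access trace through FIFO and, for comparison, LRU. The function `hit_ratio` and the trace are hypothetical: key "A" stands for a hot item revisited between one-off keys. The only difference between the two policies in this sketch is whether a hit moves the key to the most recently used end.

```python
from collections import OrderedDict


def hit_ratio(trace, capacity, policy):
    """Replay a key trace and return the fraction of accesses that hit.

    policy is "lru" or "fifo"; both use an OrderedDict and differ only in
    whether a hit moves the key to the most recently used end.
    """
    cache = OrderedDict()
    hits = 0
    for key in trace:
        if key in cache:
            hits += 1
            if policy == "lru":
                cache.move_to_end(key)  # refresh recency on a hit
            continue
        if len(cache) >= capacity:
            cache.popitem(last=False)  # evict from the "old" end
        cache[key] = True
    return hits / len(trace)


# Hypothetical trace: "A" is a hot key revisited between one-off keys.
trace = ["A", "B", "A", "C", "A", "D", "A", "E", "A", "F"]
print(hit_ratio(trace, 3, "lru"))   # 0.4
print(hit_ratio(trace, 3, "fifo"))  # 0.3
```

FIFO evicts A once it becomes the oldest entry, even though it is the most heavily used key, and loses a hit that LRU keeps. A different trace could change the ranking, so this is an illustration rather than a benchmark.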

Use Cases of First-In-First-Out (FIFO)

- Task Scheduling in Operating Systems: In task scheduling, FIFO can be employed to determine the order in which processes or tasks are executed. The first task that arrives in the queue is the first one to be processed.

- Message Queues: In message queuing systems, FIFO ensures that messages are processed in the order they are received. This is crucial for maintaining the sequence of operations in applications relying on message-based communication.

- Cache for Streaming Applications: FIFO can be suitable for certain streaming applications where maintaining the order of data is essential. For example, in a video streaming cache, FIFO ensures that frames are presented in the correct sequence.

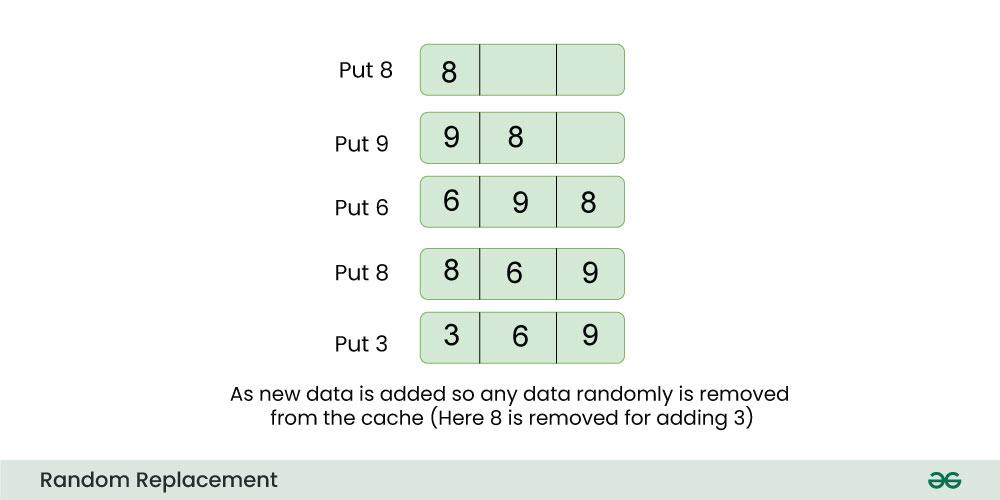

4. Random Replacement

Random Replacement is a cache eviction policy where, when the cache is full and a new item needs to be stored, a randomly chosen existing item is evicted to make room. Unlike some deterministic policies like LRU (Least Recently Used) or FIFO (First-In-First-Out), which have specific criteria for selecting items to be evicted, Random Replacement simply selects an item at random.

For Example:

Consider a cache with three slots and the following data:

- Item A

- Item B

- Item C

Now, if the cache is full and a new item, Item D, needs to be stored, Random Replacement might choose to evict Item B, resulting in:

- Item A

- Item D

- Item C

The selection of Item B for eviction is entirely random in this policy, making it a straightforward but less predictable strategy compared to others. While simple, Random Replacement doesn’t consider the frequency or recency of item access and may not always result in the most optimal cache performance.
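A minimal Python sketch of random replacement, replaying the Item A, B, C, D example, is shown below. The class name `RandomCache` and the optional `seed` parameter are illustrative assumptions; the seed only makes runs reproducible, and the victim is chosen uniformly from the current keys.

```python
import random


class RandomCache:
    """Minimal random-replacement sketch: evicts a uniformly random existing key."""

    def __init__(self, capacity: int, seed=None) -> None:
        self.capacity = capacity
        self.data = {}
        self.rng = random.Random(seed)  # seedable so runs can be reproduced

    def get(self, key):
        return self.data.get(key)  # no bookkeeping on reads

    def put(self, key, value) -> None:
        if key not in self.data and len(self.data) >= self.capacity:
            # Copying keys to a list is O(n); acceptable for a sketch.
            victim = self.rng.choice(list(self.data))
            del self.data[victim]
        self.data[key] = value


cache = RandomCache(3)
for k in "ABC":
    cache.put(k, k.lower())
cache.put("D", "d")        # one of A, B, C is evicted at random
print(sorted(cache.data))  # D plus two of A, B, C
```

Note that `get` does no tracking at all, which is where the low overhead described below comes from.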

Advantages of Random Replacement

- Simplicity: Random replacement is a straightforward and easy-to-implement strategy. It does not require complex tracking or analysis of access patterns.

- Avoids Biases: Since random replacement doesn’t rely on historical usage patterns, it avoids potential biases that may arise in more deterministic policies.

- Low Overhead: The algorithm involves minimal computational overhead, making it efficient in terms of processing requirements.

Disadvantages of Random Replacement

- Suboptimal Performance: Random replacement may lead to suboptimal cache performance compared to more sophisticated policies. It doesn’t consider the actual usage patterns or the likelihood of future accesses.

- No Adaptability: It lacks adaptability to changing access patterns. Other eviction policies, like LRU or LFU, consider the historical behavior of items and adapt to evolving patterns, potentially providing better cache performance over time.

- Possibility of Poor Hit Rates: The random nature of eviction may result in poor hit rates, where frequently accessed items are unintentionally evicted, leading to more cache misses.

Use Cases of Random Replacement

- Non-Critical Caching Environments: In scenarios where the impact of cache misses is minimal or where caching is employed for non-critical purposes, such as temporary storage of non-essential data, random replacement can be sufficient.

- Simulation and Testing: Random replacement is useful in simulation environments and testing scenarios where simplicity and ease of implementation take precedence over sophisticated eviction policies. It allows for a quick and straightforward approach without the need for complex tracking mechanisms.

- Resource-Constrained Systems: In resource-constrained environments, where computational resources are limited, the low overhead of random replacement may be advantageous. The algorithm requires minimal processing power compared to more complex eviction policies.

Conclusion

In conclusion, cache eviction policies play a crucial role in system design, impacting the efficiency and performance of caching mechanisms. The choice of an eviction policy depends on the specific characteristics and requirements of the system. While simpler policies like Random Replacement offer ease of implementation and low overhead, more sophisticated strategies such as Least Recently Used (LRU) or Least Frequently Used (LFU) take historical access patterns into account, which usually leads to better hit rates when past accesses are a good predictor of future ones.
