Data Ingestion is the process of, transferring data, from varied sources to an approach, where it can be analyzed, archived, or utilized by an establishment. The usual steps, involved in this process, are drawing out data, from its current place, converting the data, and, finally loading it, in a location, for efficient research. Python provides many such tools, and, frameworks for data ingestion. These include Bonobo, Beautiful Soup4, Airflow, Pandas, etc. In this article, we will learn about Data Ingestion with Pandas library.

Data Ingestion with Pandas:

Data Ingestion with Pandas, is the process, of shifting data, from a variety of sources, into the Pandas DataFrame structure. The source of data can be varying file formats such as Comma Separated Data, JSON, HTML webpage table, Excel. In this article, we will learn about, transferring data, from such formats, into the destination, which is a Pandas dataframe object.

Approach:

The basic approach, for transferring any such data, into a dataframe object, is as follows –

- Prepare your source data.

- Data can be present, on any remote server, or, on a local machine. We need to know, the URL of the file if it’s on a remote server. The path of the file, on local machine, is required, if data is present locally.

- Use Pandas ‘read_x’ method

- Pandas provide ‘read_x’ methods, for loading and converting the data, into a Dataframe object.

- Depending on the data format, use the ‘read’ method.

- Print data from DataFrame object.

- Print the dataframe object, to verify, that the conversion was smooth.

File Formats for Ingestion:

In this article, we will be converting, data present in the following files, to dataframe structures –

- Read data from CSV file

- Read data from Excel file

- Read data from JSON file

- Read data from Clipboard

- Read data from HTML table from web page

- Read data from SQLite table

Read data from CSV file

To load, data present in Comma-separated file(CSV), we will follow steps as below:

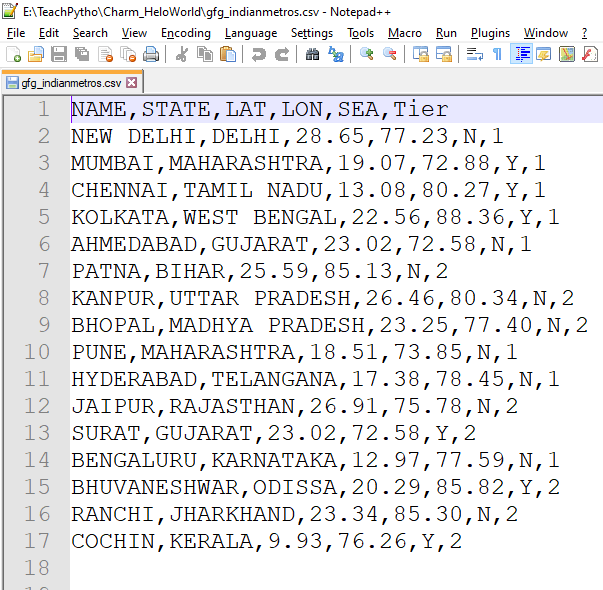

- Prepare your sample dataset. Here, we have a CSV file, containing information, about Indian Metro cities. It describes if the city is a Tier1 or Tier2 city, their geographical location, state they belong to, and if it is a coastal city.

- Use Pandas method ‘read_csv’

- Method used – read_csv(file_path)

- Parameter – String format, containing the path of the file and its name, or, URL when present on the remote server. It reads, the file data, and, converts it, into a valid two-dimensional dataframe object. This method can be used to read data, present in “.csv” as well as “.txt” file formats.

The file contents are as follows:

The contents of “gfg_indianmetros.csv” file

The code to get the data in a Pandas Data Frame is:

Python

import pandas

dfIndianMetros = pandas.read_csv("gfg_indianmetros.csv")

print(dfIndianMetros)

|

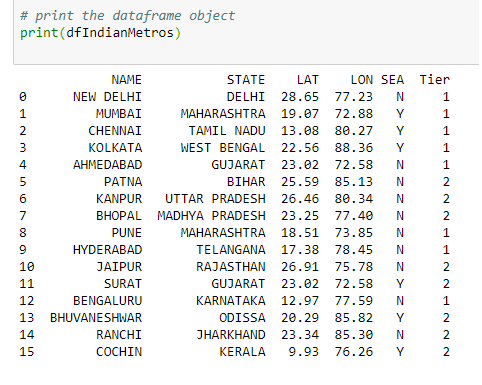

Output:

The CSV data, in dataframe object

Read data from an Excel file

To load data present in an Excel file(.xlsx, .xls) we will follow steps as below-

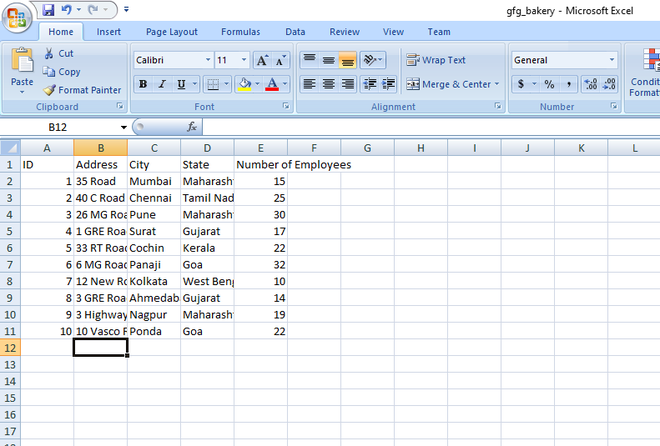

- Prepare your sample dataset. Here, we have an Excel file, containing information about Bakery and its branches. It describes the number of employees, address of branches of the bakery.

- Use Pandas method ‘read_excel’ .

- Method used – read_excel(file_path)

- Parameter – The method accepts, the path of the file and its name, in string format as a parameter. The file can be on a remote server, or, on a machine locally. It reads the file data, and, converts it, into a valid two-dimensional data frame object. This method, can be used, to read data present in “.xlsx” as well as “.xls” file formats.

The file contents are as follows:

The contents of “gfg_bakery.xlsx” file

The code to get the data in a Pandas DataFrame is:

Python

import pandas

dfBakery = pandas.read_excel("gfg_bakery.xlsx")

print(dfBakery)

|

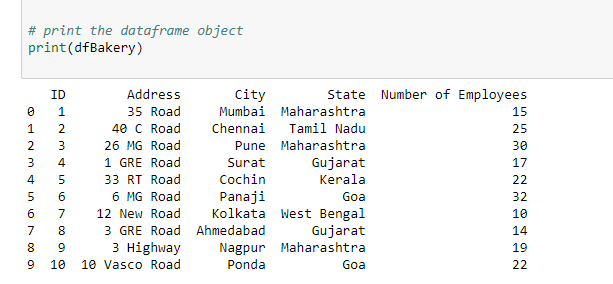

Output:

The Excel data, in dataframe object

Read data from a JSON file

To load data present in a JavaScript Object Notation file(.json) we will follow steps as below:

- Prepare your sample dataset. Here, we have a JSON file, containing information about Countries and their dial code.

- Use Pandas method ‘read_json’ .

- Method used – read_json(file_path)

- Parameter – This method, accepts the path of the file and its name, in string format, as a parameter. It reads the file data, and, converts it, into a valid two-dimensional data frame object.

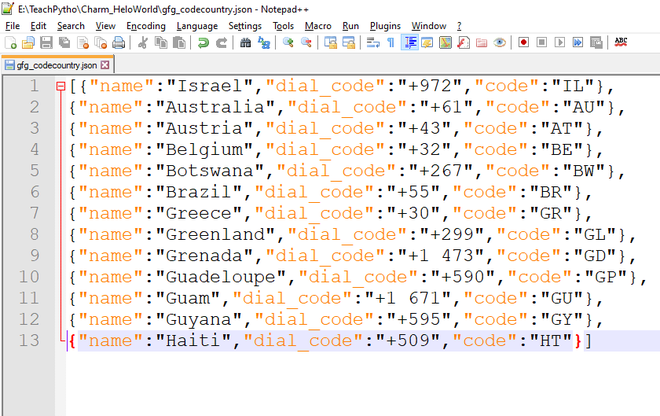

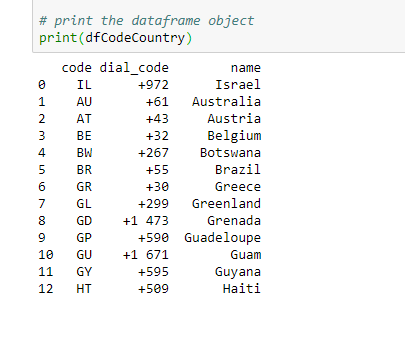

The file contents are as follows:

The contents of “gfg_codecountry.json” file

The code to get the data in a Pandas DataFrame is:

Python

import pandas

dfCodeCountry = pandas.read_json("gfg_codecountry.json")

print(dfCodeCountry)

|

Output:

The JSON data, in dataframe objects

Read data from Clipboard

We can also transfer data present in Clipboard to a dataframe object. A clipboard is a part of Random Access Memory(RAM), where copied data is present. Whenever we copy any file, text, image, or any type of data, using the ‘Copy’ command, it gets stored in the Clipboard. To convert, data present here, follow the steps as mentioned below –

- Select all the contents of the file. The file should be a CSV file. It can be a ‘.txt’ file as well, containing comma-separated values, as shown in the example. Please note, if the file contents are not in a favorable format, then, one can get a Parser Error at runtime.

- Right, Click and say Copy. Now, this data is transferred, to the computer Clipboard.

- Use Pandas method ‘read_clipboard’ .

- Method used – read_clipboard

- Parameter – The method, does not accept any parameter. It reads the latest copied data as present in the clipboard, and, converts it, into a valid two-dimensional dataframe object.

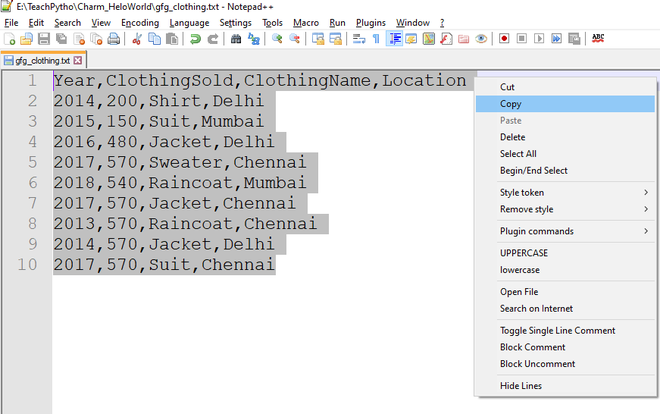

The file contents selected are as follows:

The contents of “gfg_clothing.txt” file

The code to get the data in a Pandas DataFrame is:

Python

import pandas

pdCopiedData = pd.read_clipboard()

print(pdCopiedData)

|

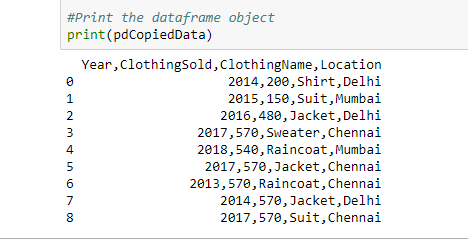

Output:

The clipboard data, in dataframe object

Read data from HTML file

A webpage is usually made of HTML elements. There are different HTML tags such as <head>, <title> , <table>, <div> based on the purpose of data display, on browser. We can transfer, the content between <table> element, present in an HTML webpage, to a Pandas data frame object. Follow the steps as mentioned below –

- Select all the elements present in the <table>, between start and end tags. Assign it, to a Python variable.

- Use Pandas method ‘read_html’ .

- Method used – read_html(string within <table> tag)

- Parameter – The method, accepts string variable, containing the elements present between <table> tag. It reads the elements, traversing through the table, <tr> and <td> tags, and, converts it, into a list object. The first element of the list object is the desired dataframe object.

The HTML webpage used is as follows:

HTML

<!DOCTYPE html>

<html>

<head>

<title>Data Ingestion with Pandas Example</title>

</head>

<body>

<h2>Welcome To GFG</h2>

<table>

<thead>

<tr>

<th>Date</th>

<th>Empname</th>

<th>Year</th>

<th>Rating</th>

<th>Region</th>

</tr>

</thead>

<tbody>

<tr>

<td>2020-01-01</td>

<td>Savio</td>

<td>2004</td>

<td>0.5</td>

<td>South</td>

</tr>

<tr>

<td>2020-01-02</td>

<td>Rahul</td>

<td>1998</td>

<td>1.34</td>

<td>East</td>

</tr>

<tr>

<td>2020-01-03</td>

<td>Tina</td>

<td>1988</td>

<td>1.00023</td>

<td>West</td>

</tr>

<tr>

<td>2021-01-03</td>

<td>Sonia</td>

<td>2001</td>

<td>2.23</td>

<td>North</td>

</tr>

</tbody>

</table>

</body>

</html>

|

Write the following code to convert the HTML table content in the Pandas Dataframe object:

Python

import pandas

html_string =

df = pandas.read_html(html_string)

dfHtml = df[0]

print(dfHtml)

|

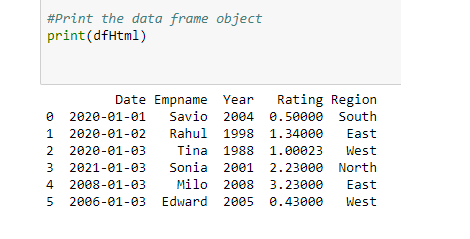

Output:

The HTML <table> data, in dataframe object,

Read data from SQL table

We can convert, data present in database tables, to valid dataframe objects as well. Python allows easy interface, with a variety of databases, such as SQLite, MySQL, MongoDB, etc. SQLite is a lightweight database, which can be embedded in any program. The SQLite database holds all the related SQL tables. We can load, SQLite table data, to a Pandas dataframe object. Follow the steps, as mentioned below –

- Prepare a sample SQLite table using ‘DB Browser for SQLite tool’ or any such tool. These tools allow the effortless creation, edition of database files compatible with SQLite. The database file, has a ‘.db’ file extension. In this example, we have ‘Novels.db’ file, containing a table called “novels”. This table has information about Novels, such as Novel Name, Price, Genre, etc.

- Here, to connect to the database, we will import the ‘sqlite3’ module, in our code. The sqlite3 module, is an interface, to connect to the SQLite databases. The sqlite3 library is included in Python, since Python version 2.5. Hence, no separate installation is required. To connect to the database, we will use the SQLite method ‘connect’, which returns a connection object. The connect method accepts the following parameters:

- database_name – The name of the database in which the table is present. This is a .db extension file. If the file is present, an open connection object is returned. If the file is not present, it is created first and then a connection object is returned.

- Use Pandas method ‘read_sql_query’.

- Method used – read_sql_query

- Parameter – This method accepts the following parameters

- SQL query – Select query, to fetch the required rows from the table.

- Connection object – The connection object returned by the ‘connect’ method. The read_sql_query method, converts, the resultant rows of the query, to a dataframe object.

- Print the dataframe object using the print method.

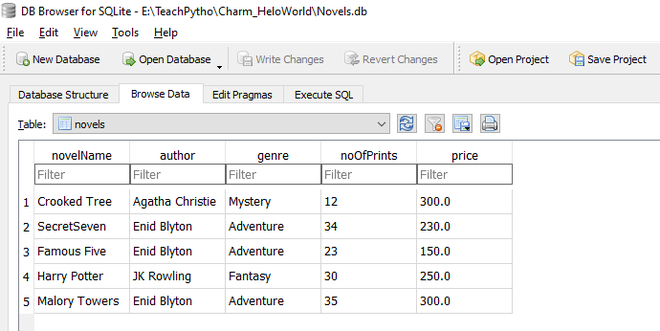

The Novels.db database file looks as follows –

The novels table, as seen, using DB Browser for SQLite tool

Write the following code to convert the Novels table, in Pandas Data frame object:

Python

import sqlite3

import pandas

conn = sqlite3.connect("Novels.db")

pdSql = pd.read_sql_query("SELECT * FROM novels", conn)

print(pdSql)

conn.close()

|

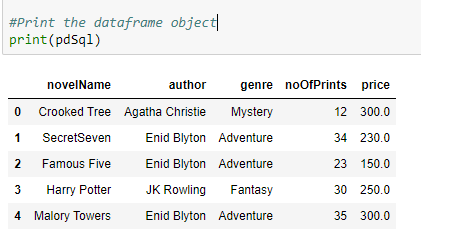

Output:

The Novels table data in dataframe object

Share your thoughts in the comments

Please Login to comment...