Program to Convert ASCII to Unicode

Last Updated: 08 Feb, 2024

In this article, we will learn about two character encoding standards, ASCII (American Standard Code for Information Interchange) and Unicode, and how to convert ASCII to Unicode.

What are ASCII Characters?

ASCII characters are a standardized set of 128 symbols, including letters, digits, punctuation, and control characters, each assigned a unique numerical code from 0 to 127. This character encoding system is widely used to represent text in computers, providing a common framework for data interchange and communication.
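A minimal Python sketch can confirm this breakdown of the 128 codes. It assumes that Python's `string.ascii_letters`, `string.digits` and `string.punctuation` constants match the usual letter, digit and punctuation groups, and it treats every character that `str.isprintable()` rejects as a control character, which holds for the ASCII range (codes 0 to 31 and 127).

```python
import string

# All 128 ASCII characters, codes 0 to 127.
ascii_chars = [chr(code) for code in range(128)]

letters = [c for c in ascii_chars if c in string.ascii_letters]
digits = [c for c in ascii_chars if c in string.digits]
punctuation = [c for c in ascii_chars if c in string.punctuation]
space = [c for c in ascii_chars if c == " "]
# In the ASCII range, the non-printable characters are the control codes 0-31 and 127.
control = [c for c in ascii_chars if not c.isprintable()]

print("letters:", len(letters))          # 52
print("digits:", len(digits))            # 10
print("punctuation:", len(punctuation))  # 32
print("space:", len(space))              # 1
print("control:", len(control))          # 33
```

The five groups add up to exactly 128, so every ASCII code falls into one of them.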

What is an ASCII Table?

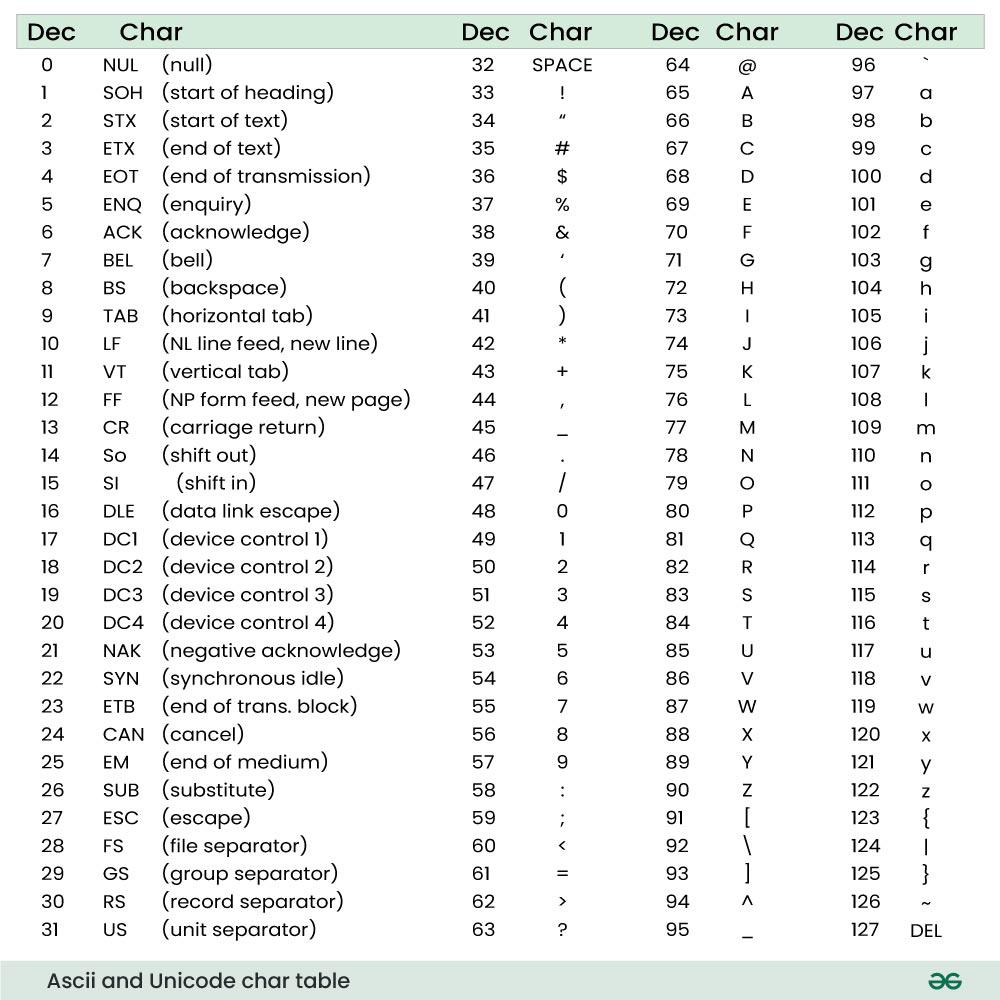

An ASCII table is a chart that displays the American Standard Code for Information Interchange (ASCII) characters along with their corresponding decimal, hexadecimal, and binary values. ASCII is a character encoding standard that assigns numerical values to letters, digits, and other characters commonly used in computing and telecommunications.

The image above shows the ASCII table values and the characters they represent.

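Every column of the table can be computed from the character code itself. The sketch below is one way to print a few rows in Python; the helper name `ascii_table_row` and its column layout (character, decimal, hexadecimal, 7-bit binary) are illustrative choices, not part of any standard.

```python
def ascii_table_row(code: int) -> str:
    """Format one ASCII table row: character, decimal, hexadecimal, 7-bit binary."""
    char = chr(code)
    # Control characters are shown by their escaped repr so the row stays on one line.
    shown = char if char.isprintable() else repr(char)
    return f"{shown:>6}  {code:>3}  0x{code:02X}  {code:07b}"


for code in range(65, 70):  # rows for 'A' to 'E'
    print(ascii_table_row(code))
```

For example, the row for code 65 reads `A  65  0x41  1000001`; changing the `range` prints any other part of the table.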

What is Unicode?

Unicode is a character encoding standard widely used in communication systems and computers. Unlike ASCII, which is a 7-bit code covering 128 characters (0 to 127), Unicode assigns a unique code point to characters from a vast range of scripts and languages. These code points are stored using encoding forms such as UTF-8 and UTF-16, which are variable-length, or UTF-32, which is fixed-length. The Unicode codespace contains 1,114,112 code points (U+0000 to U+10FFFF), making it a comprehensive character encoding standard.
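To make the size of the codespace and the difference between encoding forms concrete, the sketch below uses Python's `sys.maxunicode` together with the built-in `utf-8` and `utf-32-le` codecs. The sample characters are arbitrary picks, one from each UTF-8 byte-length range.

```python
import sys

# The Unicode codespace runs from U+0000 to U+10FFFF.
print(hex(sys.maxunicode))  # 0x10ffff
print(sys.maxunicode + 1)   # 1114112

# UTF-8 is variable-length (1 to 4 bytes); UTF-32 is fixed-length (4 bytes).
samples = ["A", "\u00e9", "\u20ac", "\U0001F600"]  # A, e-acute, euro sign, grinning-face emoji
for ch in samples:
    utf8_len = len(ch.encode("utf-8"))
    utf32_len = len(ch.encode("utf-32-le"))  # "-le" avoids adding a byte-order mark
    print(f"U+{ord(ch):04X}: UTF-8 {utf8_len} byte(s), UTF-32 {utf32_len} bytes")
```

UTF-8 needs 1 byte for U+0041, 2 for U+00E9, 3 for U+20AC and 4 for U+1F600, while UTF-32 always uses 4.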

Interestingly, ASCII is a subset of Unicode: the 128 ASCII characters occupy the first 128 Unicode code points (U+0000 to U+007F) with exactly the same numeric values, and UTF-8 encodes each of them as the same single byte. Therefore no real conversion is needed; the ASCII code of a character is already its Unicode code point.
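One way to check the subset claim is to decode all 128 byte values with both the ASCII and UTF-8 codecs. This Python sketch relies only on the standard `ascii` and `utf-8` codecs; every comparison it prints should be `True`.

```python
# ASCII occupies the first 128 Unicode code points, U+0000 to U+007F.
all_ascii = bytes(range(128))

as_ascii = all_ascii.decode("ascii")
as_utf8 = all_ascii.decode("utf-8")

# The same text results whichever codec is used...
print(as_ascii == as_utf8)  # True
# ...each character's code point equals its ASCII code...
print(all(ord(ch) == code for code, ch in enumerate(as_ascii)))  # True
# ...and UTF-8 stores each of them as the same single byte.
print(as_utf8.encode("utf-8") == all_ascii)  # True
```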

Need for ASCII to Unicode Conversion

ASCII, with its 128 characters, cannot represent accented letters, non-Latin scripts, or most symbols. Unicode, on the other hand, provides a far larger character set that supports many languages and symbols. Moving text from ASCII to Unicode is therefore important for internationalization, ensuring proper representation and compatibility across different systems. Because every ASCII string is already valid UTF-8, existing ASCII data carries over unchanged.
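The limitation shows up as soon as text contains a single non-ASCII character. In this Python sketch, the word "café" (an arbitrary example) cannot be encoded as ASCII, while UTF-8 handles it and keeps the ASCII letters as single bytes.

```python
text = "caf\u00e9"  # "cafe" with e-acute; U+00E9 is outside the ASCII range

try:
    text.encode("ascii")
except UnicodeEncodeError as err:
    print("ASCII cannot encode it:", err.reason)  # ordinal not in range(128)

utf8_bytes = text.encode("utf-8")
# 'c', 'a', 'f' keep their ASCII codes; U+00E9 becomes two bytes.
print(list(utf8_bytes))  # [99, 97, 102, 195, 169]
print(utf8_bytes.decode("utf-8") == text)  # True
```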

Algorithm for ASCII to Unicode Conversion

The algorithm for converting ASCII to Unicode involves mapping each ASCII character to its corresponding Unicode code point.

Let’s break down the steps of the algorithm:

- Step 1: Accept an ASCII string (inputString) as input.

- Step 2: Prepare an empty string (outputString) to store the Unicode output.

- Step 3: Iterate through each character in the input string.

- Step 4: Obtain the ASCII code of the current character (asciiCode).

- Step 5: Convert the ASCII code to the corresponding Unicode code point (unicodeCodePoint). Because ASCII codes 0 to 127 map to U+0000 to U+007F, the numeric value is unchanged.

- Step 6: Append the Unicode code point to the output string.

- Step 7: Output the resulting Unicode string (outputString).
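The steps above can be sketched in Python as a function over a whole string. The function name `ascii_to_unicode`, the choice to reject non-ASCII input with `ValueError`, and the space-separated `U+XXXX` output format are all assumptions made for illustration, not a fixed convention.

```python
def ascii_to_unicode(input_string: str) -> str:
    """Map each ASCII character to its Unicode code point, written as U+XXXX.

    Hypothetical helper: the output format and error handling are illustrative.
    """
    output_parts = []  # collects the Unicode output
    for ch in input_string:
        ascii_code = ord(ch)  # ASCII code of the current character
        if ascii_code > 127:
            raise ValueError(f"{ch!r} is not an ASCII character")
        unicode_code_point = ascii_code  # same number: ASCII is U+0000..U+007F
        output_parts.append(f"U+{unicode_code_point:04X}")
    return " ".join(output_parts)


print(ascii_to_unicode("Hi!"))  # U+0048 U+0069 U+0021
```

The loop visits each character once, so converting a string of n characters takes O(n) time and O(n) space for the output.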


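Below is the implementation for a single character. Because the ASCII code and the Unicode code point are the same number, each program simply reads the character's numeric value: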

C++

#include <iostream>
using namespace std;

int main() {
    char asciiCharacter = 'A';
    // An ASCII code (0-127) is already the Unicode code point U+0000..U+007F
    int unicodeValue = static_cast<int>(asciiCharacter);
    cout << "Unicode value for character 'A': " << unicodeValue << endl;
    return 0;
}


C

#include <stdio.h>

int main(void) {
    char asciiCharacter = 'A';
    /* An ASCII code (0-127) is already the Unicode code point U+0000..U+007F */
    int unicodeValue = (int)asciiCharacter;
    printf("Unicode value for character 'A': %d\n", unicodeValue);
    return 0;
}


Java

public class Main {
    public static void main(String[] args) {
        char asciiCharacter = 'A';
        // A Java char is a UTF-16 code unit; for ASCII it equals the code point
        int unicodeValue = (int) asciiCharacter;
        System.out.println("Unicode value for character 'A': " + unicodeValue);
    }
}


Python

ascii_character = 'A'
# ord() returns the Unicode code point; for ASCII it equals the ASCII code
unicode_value = ord(ascii_character)
print(f"Unicode value for character 'A': {unicode_value}")


C#

using System;

class Program
{
    static void Main()
    {
        char asciiCharacter = 'A';
        // A C# char is a UTF-16 code unit; for ASCII it equals the code point
        int unicodeValue = (int)asciiCharacter;
        Console.WriteLine($"Unicode value for character 'A': {unicodeValue}");
    }
}


JavaScript

const asciiCharacter = 'A';
// codePointAt returns the Unicode code point; for ASCII it equals the ASCII code
const unicodeValue = asciiCharacter.codePointAt(0);
console.log(`Unicode value for character 'A': ${unicodeValue}`);


Output

Unicode value for character 'A': 65

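Time Complexity: O(1) for a single character (O(n) for a string of n characters)

Auxiliary Space: O(1) for a single character (O(n) to store the output for a string of n characters)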
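The programs above print the code point in decimal. Unicode code points are conventionally written as `U+` followed by at least four hexadecimal digits, so 65 is U+0041. A small Python sketch showing both forms, with the built-in `chr()` mapping the number back to the character:

```python
ascii_character = "A"
code_point = ord(ascii_character)

# Decimal form, as printed by the programs above, and the conventional U+ notation.
print(code_point)             # 65
print(f"U+{code_point:04X}")  # U+0041

# chr() reverses the mapping: code point back to character.
print(chr(code_point))        # A
print("\u0041" == ascii_character)  # True
```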
