Vertex AI is Google Cloud's machine learning platform. It gives users a single environment in which to train machine learning models, interact with them, and discover existing machine learning models and AI applications. It also lets users customize and tune Large Language Models (LLMs) for their AI applications. Vertex AI brings data science, data engineering, and machine learning workflows under one umbrella, so teams can collaborate on a project and find all the tools they need in one place instead of looking for them elsewhere. Teams can also use Google Cloud's infrastructure to scale and maintain their applications in the cloud.

Tools Provided for Training and Deployment

Vertex AI provides various tools for training and deployment purposes; some of them are listed below:

- AutoML – AutoML (Automated Machine Learning) lets users train models on image, text, tabular, and video data without writing any training code.

- Custom Training – Gives users full control over the training process: they write the training code, choose the framework, and set the hyperparameters as required.

- Generative AI – Gives developers access to Google Cloud's generative AI models of various types, including text, image, speech, and code models.

- Model Deployment – Vertex AI lets developers deploy machine learning models to endpoints that serve predictions through a REST API, which makes it easy to integrate those models into applications.

- Integration with MLOps – Vertex AI works with Google Cloud's MLOps capabilities for continuous integration and continuous deployment (CI/CD) and for versioning machine learning models.
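As a rough illustration of the Model Deployment point above, the sketch below builds the URL and JSON body of an online prediction request for a deployed endpoint, using only the Python standard library. It assumes the public Vertex AI REST URL format (https://REGION-aiplatform.googleapis.com/v1/projects/PROJECT/locations/REGION/endpoints/ENDPOINT_ID:predict) and a model that accepts a list of instances; the project, endpoint ID, and instance fields are hypothetical placeholders. Actually sending the request would also need an OAuth access token, which this sketch does not handle.

```python
import json


def build_predict_request(project: str, region: str, endpoint_id: str,
                          instances: list) -> tuple[str, str]:
    """Return (url, json_body) for a Vertex AI online prediction call.

    Sketch only: no request is sent and authentication is not handled.
    """
    url = (
        f"https://{region}-aiplatform.googleapis.com/v1/"
        f"projects/{project}/locations/{region}/"
        f"endpoints/{endpoint_id}:predict"
    )
    # The predict method takes a JSON object with an "instances" list;
    # the shape of each instance depends on the deployed model.
    body = json.dumps({"instances": instances})
    return url, body


if __name__ == "__main__":
    # Hypothetical project, endpoint ID, and instance fields.
    url, body = build_predict_request(
        "my-project", "us-central1", "1234567890",
        [{"feature_a": 1.0, "feature_b": "red"}],
    )
    print(url)
    print(body)
```

Keeping URL and body construction separate from sending lets the same helper work with whatever HTTP client and credential flow an application already uses.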

Interfaces for Vertex AI

Vertex AI offers several interfaces through which users can interact with it and use its services. The catch is that some services can be accessed only through certain interfaces, whereas others are available through all of them.

Vertex AI Console

The Vertex AI section of the Google Cloud console is the graphical interface (GUI) for using Vertex AI and its services. Users can work with models, datasets, endpoints, and jobs. The console is organized by type of work; for example, the Model Development sub-section offers options to train a model, run experiments with it, and collect its metadata.

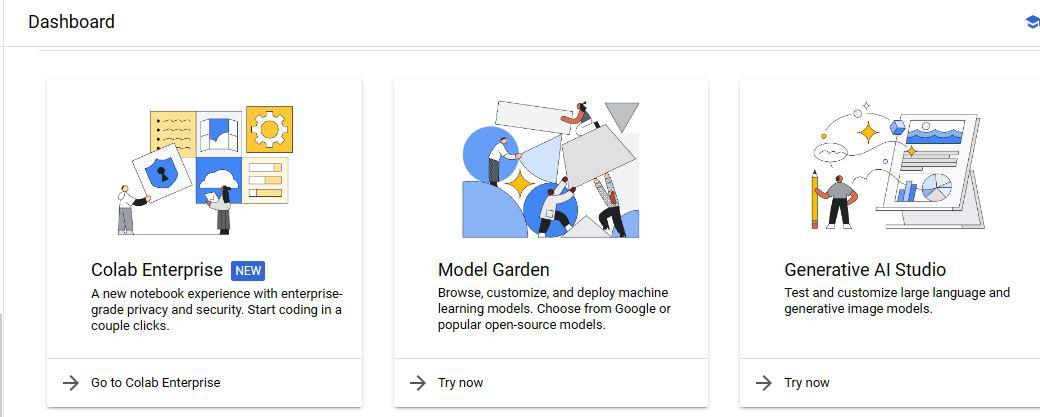

Below are images of the Vertex AI console, divided into its main parts for easier understanding.

Welcome Part

This section encourages the user to enable all the recommended APIs needed to use Vertex AI properly. It also lets the user view a tutorial on how to use Vertex AI.

Most-Used Services Section

Just below the welcome part, the services developers use most often are listed in rows. There are usually two rows showing six of the most-used Vertex AI services; clicking any one of them opens that service, where the user can work with it as needed.

The next row likewise contains three of the most-used Vertex AI services.

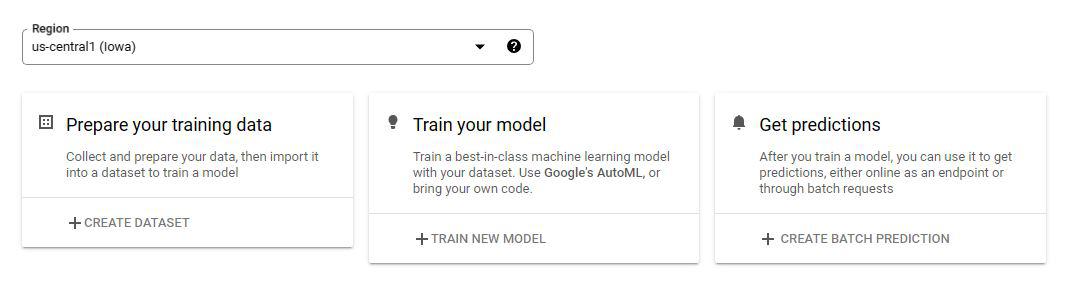

Last Part

This is the bottom part of the Vertex AI console, where the user can select a region and prepare a dataset, train a model, or get batch predictions. All of these services require the Vertex AI API to be enabled first; without it, none of them will work.

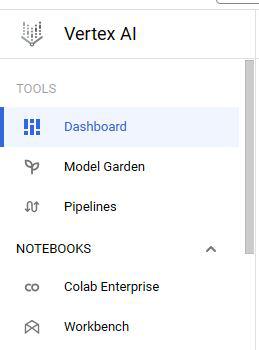

Left Sub-Menu with a Detailed List of Vertex AI Services

The Vertex AI console also provides a left-hand sub-menu with a detailed list of the services Vertex AI offers.

Google Cloud Command Line Interface (CLI)

The Google Cloud CLI (the gcloud command-line tool, which also comes preinstalled in Cloud Shell) can be used to perform Vertex AI tasks entirely through commands. This approach requires good knowledge of the specific commands; without it, working with Vertex AI this way is difficult.

Below are some of the commands that can be used in the CLI to work with Vertex AI services. Note that commands in the gcloud ai-platform group belong to the legacy AI Platform that preceded Vertex AI, while the gcloud ai group manages Vertex AI resources directly.

```bash
gcloud ai-platform jobs list
```

This command lists all the jobs in the developer's project.

```bash
gcloud ai-platform jobs submit training JOB [optional flags] [-- USER_ARGS ...]
```

This command submits a training job; the user must provide the exact specification of the job. The [optional flags] can be any of the following –

```text
--async | --config | --enable-web-access | --help | --job-dir |
--kms-key | --kms-keyring | --kms-location | --kms-project | --labels |
--master-accelerator | --master-image-uri | --master-machine-type |
--module-name | --package-path | --packages |
--parameter-server-accelerator | --parameter-server-count |
--parameter-server-image-uri | --parameter-server-machine-type |
--python-version | --region | --runtime-version | --scale-tier |
--service-account | --staging-bucket | --stream-logs |
--use-chief-in-tf-config | --worker-accelerator | --worker-count |
--worker-image-uri | --worker-machine-type
```

```bash
gcloud ai-platform jobs submit prediction JOB --data-format=DATA_FORMAT --input-paths=INPUT_PATH,[INPUT_PATH,...] --output-path=OUTPUT_PATH --region=REGION (--model=MODEL | --model-dir=MODEL_DIR) [optional flags]
```

This command starts a batch prediction job; here too the user must provide the job's details. The [optional flags] can be any of the following, depending on the user's requirements –

```text
--batch-size | --help | --labels | --max-worker-count | --model |
--model-dir | --runtime-version | --signature-name | --version
```
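With --data-format=text, the batch prediction input is a text file with one instance per line, and for JSON inputs each line is one JSON instance. The sketch below writes such newline-delimited JSON using only the standard library. The instance fields are hypothetical, and the shape each instance must have depends on the model being served.

```python
import io
import json
from typing import Iterable, TextIO


def write_jsonl(instances: Iterable[dict], out: TextIO) -> int:
    """Write one compact JSON object per line; return the count written."""
    count = 0
    for instance in instances:
        # separators=(",", ":") keeps each instance compact on a single line.
        out.write(json.dumps(instance, separators=(",", ":")) + "\n")
        count += 1
    return count


if __name__ == "__main__":
    # Hypothetical instances for a model with two numeric input features.
    buf = io.StringIO()
    n = write_jsonl([{"x1": 0.5, "x2": 1.2}, {"x1": 3.1, "x2": 0.0}], buf)
    print(n)
    print(buf.getvalue(), end="")
```

To produce a real input file, pass an open file instead of the in-memory buffer, for example with open("instances.jsonl", "w", encoding="utf-8") as f: write_jsonl(rows, f). The file would then be copied to Cloud Storage and passed to the command through --input-paths.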

```bash
gcloud ai endpoints create --display-name=DISPLAY_NAME [optional flags]
```

This command creates an endpoint. The --display-name flag is required; without it, the command fails with an error.

```bash
gcloud ai models list
```

This command lists the models created in Vertex AI. If no default region is configured, the CLI prompts the user for a region and then lists the models available in that region.
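To use this listing from a script instead of interactively, the region can be passed with --region (which skips the prompt) and gcloud's global --format=json flag makes the output machine-readable. The sketch below builds that command and extracts model display names from the JSON; it assumes each listed model object carries a displayName field, as the Vertex AI Model resource does. The sample JSON in the demo is hand-written and hypothetical, not real output.

```python
import json


def list_models_argv(region: str) -> list[str]:
    """Build argv for listing Vertex AI models in one region as JSON."""
    # --region avoids the interactive prompt; --format=json is a global
    # gcloud flag that switches the output to JSON.
    return ["gcloud", "ai", "models", "list",
            f"--region={region}", "--format=json"]


def model_display_names(gcloud_json: str) -> list[str]:
    """Extract each model's displayName from the JSON list gcloud prints."""
    return [model.get("displayName", "") for model in json.loads(gcloud_json)]


if __name__ == "__main__":
    print(" ".join(list_models_argv("us-central1")))
    # Hypothetical sample of the output shape, for illustration only.
    sample = ('[{"name": "projects/123/locations/us-central1/models/456",'
              ' "displayName": "my-model"}]')
    print(model_display_names(sample))
```

In a real script, the JSON text would come from subprocess.run(list_models_argv(region), capture_output=True, text=True, check=True).stdout, which requires an installed and authenticated gcloud CLI.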

```bash
gcloud ai-platform operations list
```

This command lists the long-running operations in the project, which helps in monitoring the progress of model training and deployment.

```bash
gcloud ai custom-jobs create --display-name=DISPLAY_NAME (--config=CONFIG --worker-pool-spec=[WORKER_POOL_SPEC,...]) [optional flags]
```

This command creates a custom Vertex AI job with a specific configuration and display name.
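The --worker-pool-spec flag packs several settings into one comma-separated value, which is easy to get wrong by hand. The sketch below assembles the argument list for gcloud ai custom-jobs create in Python so it can be inspected or later passed to subprocess.run. It assumes the machine-type, replica-count, and container-image-uri keys of --worker-pool-spec plus the --region flag; the job name, region, machine type, and image URI are hypothetical placeholders, and the command is only printed, not executed.

```python
import shlex


def custom_job_argv(display_name: str, region: str, machine_type: str,
                    image_uri: str, replica_count: int = 1) -> list[str]:
    """Build argv for `gcloud ai custom-jobs create` with one worker pool."""
    # Settings inside --worker-pool-spec are key=value pairs joined by commas.
    spec = ",".join([
        f"machine-type={machine_type}",
        f"replica-count={replica_count}",
        f"container-image-uri={image_uri}",
    ])
    return [
        "gcloud", "ai", "custom-jobs", "create",
        f"--region={region}",
        f"--display-name={display_name}",
        f"--worker-pool-spec={spec}",
    ]


if __name__ == "__main__":
    # Hypothetical job name, region, and training container image.
    argv = custom_job_argv(
        "my-training-job", "us-central1", "n1-standard-4",
        "us-docker.pkg.dev/my-project/my-repo/trainer:latest",
    )
    # Print a copy-pasteable shell command; nothing is executed here.
    print(shlex.join(argv))
```

Building a list rather than one long string avoids shell-quoting problems when the command is eventually run with subprocess.run(argv, check=True).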

```bash
gcloud ai custom-jobs describe (CUSTOM_JOB : --region=REGION) [optional flags]
```

This command describes a specific custom Vertex AI job and returns its full details.

Terraform

Terraform is an infrastructure-as-code tool that helps you set up and manage Google Cloud services such as Vertex AI. You describe the resources you want to create, and the permissions they should have, in configuration files written in HashiCorp Configuration Language (HCL).

Let’s say you want to set up multiple resources in Vertex AI with the same settings. Instead of doing it manually, you can use Terraform to make the process easier. Here’s how it works:

- First, you write a configuration file that describes the Vertex AI resources you want. The file is declarative: you describe the end state you want rather than the steps to get there.

- Next, Terraform checks your file and creates a plan (terraform plan). The plan shows which changes Terraform will make to your Vertex AI resources based on the file.

- Once you're happy with the plan, you tell Terraform to apply it (terraform apply). Terraform then calls the Vertex AI API to make the changes for you.

- If a resource doesn’t exist yet, Terraform will create it.

- If a resource already exists but has different settings, Terraform will update it to match what you described in your file.

- If a resource already matches what you described, Terraform will leave it as it is.

So Terraform helps you set up and manage Vertex AI resources through a simple, repeatable process. It saves time and keeps your resources consistent with what your configuration file describes.

Below are some of the Terraform resources that currently support Vertex AI –
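The three cases above (create, update, or leave unchanged) can be modeled with a small Python sketch. This is a toy illustration of the idea behind terraform plan, not how Terraform is implemented: desired and existing resources are plain dictionaries keyed by hypothetical resource names, and the function only reports the action each desired resource would get. Real Terraform also plans to destroy managed resources that were removed from the configuration and distinguishes in-place updates from changes that force a replacement; both are left out here to mirror the three cases described.

```python
def plan(desired: dict[str, dict], existing: dict[str, dict]) -> dict[str, str]:
    """Map each desired resource name to 'create', 'update', or 'no-op'."""
    actions = {}
    for name, settings in desired.items():
        if name not in existing:
            actions[name] = "create"   # resource does not exist yet
        elif existing[name] != settings:
            actions[name] = "update"   # exists, but its settings differ
        else:
            actions[name] = "no-op"    # already matches the configuration
    return actions


if __name__ == "__main__":
    # Hypothetical Vertex AI resources and settings.
    desired = {
        "dataset-a": {"region": "us-central1"},
        "dataset-b": {"region": "us-central1"},
        "endpoint-a": {"region": "us-central1"},
    }
    existing = {
        "dataset-b": {"region": "us-east1"},
        "endpoint-a": {"region": "us-central1"},
    }
    for name, action in sorted(plan(desired, existing).items()):
        print(f"{name}: {action}")
```

Comparing whole settings dictionaries keeps the sketch short; a real plan compares attribute by attribute so it can show exactly which fields will change.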

For Vertex AI Workbench –

- terraform google_notebooks_environment

This resource defines the configuration of a Google Cloud Notebooks environment for the project. A generic configuration file is given below –

```hcl
resource "google_notebooks_environment" "environment" {
  name     = "name-of-notebooks-environment"
  location = "us-central1-a"

  container_image {
    repository = "gcr.io/deeplearning-platform-release/repo-name"
  }
}
```

- terraform google_notebooks_instance

This resource creates a Google Cloud Notebooks instance from a given configuration. The file specifies every detail the instance should have, such as network, subnetwork, metadata, and GPU settings (if any). A basic configuration file is given below –

```hcl
resource "google_notebooks_instance" "instance" {
  name         = "notebooks-instance"
  location     = "us-central1-a"
  machine_type = "e2-medium"

  vm_image {
    project      = "deeplearning-platform-release"
    image_family = "tf-latest-cpu"
  }

  instance_owners    = ["my@service-account.com"]
  service_account    = "my@service-account.com"
  install_gpu_driver = true
  boot_disk_type     = "PD_SSD"
  boot_disk_size_gb  = 110
  no_public_ip       = true
  no_proxy_access    = true

  # These must match the names of the data sources declared below.
  network = data.google_compute_network.my_network.id
  subnet  = data.google_compute_subnetwork.my_subnetwork.id

  labels = {
    k = "val"
  }

  metadata = {
    terraform = "true"
  }
}

data "google_compute_network" "my_network" {
  name = "default"
}

# The subnetwork must be in the same region as the instance's zone (us-central1-a).
data "google_compute_subnetwork" "my_subnetwork" {
  name   = "my-sub"
  region = "us-central1"
}
```

- terraform google_notebooks_runtime

This resource defines the configuration of a managed notebook runtime.

A basic runtime with minimal configuration –

```hcl
resource "google_notebooks_runtime" "runtime" {
  name     = "notebooks-runtime"
  location = "us-central1"

  access_config {
    access_type   = "SINGLE_USER"
    runtime_owner = "admin@hashicorptest.com"
  }

  virtual_machine {
    virtual_machine_config {
      machine_type = "n1-standard-4"

      data_disk {
        initialize_params {
          disk_size_gb = "100"
          disk_type    = "PD_STANDARD"
        }
      }
    }
  }
}
```

Runtime with a basic GPU configuration –

```hcl
resource "google_notebooks_runtime" "runtime_gpu" {
  name     = "notebooks-runtime-gpu"
  location = "us-central1"

  access_config {
    access_type   = "SINGLE_USER"
    runtime_owner = "admin@hashicorptest.com"
  }

  software_config {
    install_gpu_driver = true
  }

  virtual_machine {
    virtual_machine_config {
      machine_type = "n1-standard-4"

      data_disk {
        initialize_params {
          disk_size_gb = "100"
          disk_type    = "PD_STANDARD"
        }
      }

      accelerator_config {
        core_count = "1"
        type       = "NVIDIA_TESLA_V100"
      }
    }
  }
}
```

Runtime with basic containers –

```hcl
resource "google_notebooks_runtime" "runtime_container" {
  name     = "notebooks-runtime-container"
  location = "us-central1"

  access_config {
    access_type   = "SINGLE_USER"
    runtime_owner = "admin@hashicorptest.com"
  }

  virtual_machine {
    virtual_machine_config {
      machine_type = "n1-standard-4"

      data_disk {
        initialize_params {
          disk_size_gb = "100"
          disk_type    = "PD_STANDARD"
        }
      }

      container_images {
        repository = "gcr.io/deeplearning-platform-release/base-cpu"
        tag        = "latest"
      }

      container_images {
        repository = "gcr.io/deeplearning-platform-release/beam-notebooks"
        tag        = "latest"
      }
    }
  }
}
```

- terraform google_vertex_ai_dataset

Defines a Vertex AI dataset, including its metadata schema source and region. A basic configuration file is given below –

```hcl
resource "google_vertex_ai_dataset" "tf-dataset" {
  display_name        = "terraform-dataset"
  metadata_schema_uri = "gs://google-cloud-aiplatform/schema/dataset/metadata/filename.yaml"
  region              = "us-east1"

  labels = {
    env = "test-env"
  }
}
```
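The metadata_schema_uri above uses a placeholder file name. Vertex AI publishes one metadata schema per dataset type, and the sketch below maps a data type to a schema URI. It assumes the versioned file names image_1.0.0.yaml, text_1.0.0.yaml, and video_1.0.0.yaml under gs://google-cloud-aiplatform/schema/dataset/metadata/; other dataset types exist but are deliberately left out, and these names should be checked against the current Vertex AI documentation before use.

```python
SCHEMA_BASE = "gs://google-cloud-aiplatform/schema/dataset/metadata/"

# Assumed versioned schema file names; verify against current documentation.
SCHEMA_FILES = {
    "image": "image_1.0.0.yaml",
    "text": "text_1.0.0.yaml",
    "video": "video_1.0.0.yaml",
}


def metadata_schema_uri(data_type: str) -> str:
    """Return the metadata schema URI for a supported dataset type."""
    try:
        return SCHEMA_BASE + SCHEMA_FILES[data_type]
    except KeyError:
        raise ValueError(f"unsupported data type: {data_type!r}") from None


if __name__ == "__main__":
    print(metadata_schema_uri("image"))
```

Failing loudly on unknown types is safer than silently producing a URI that Vertex AI would reject at apply time.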

- terraform google_vertex_ai_endpoint

Defines the deployment endpoint of the Vertex AI project. A generic configuration file for the endpoint is given below; it also declares the VPC network, peering range, private service connection, and KMS key permission that the endpoint depends on –

```hcl
resource "google_vertex_ai_endpoint" "endpoint" {
  name         = "endpoint-name"
  display_name = "sample-endpoint"
  description  = "A sample vertex endpoint"
  location     = "us-central1"
  region       = "us-central1"

  labels = {
    label-one = "value-one"
  }

  network = "projects/${data.google_project.project.number}/global/networks/${google_compute_network.vertex_network.name}"

  encryption_spec {
    kms_key_name = "kms-name"
  }

  depends_on = [
    google_service_networking_connection.vertex_vpc_connection
  ]
}

resource "google_service_networking_connection" "vertex_vpc_connection" {
  network                 = google_compute_network.vertex_network.id
  service                 = "servicenetworking.googleapis.com"
  reserved_peering_ranges = [google_compute_global_address.vertex_range.name]
}

resource "google_compute_global_address" "vertex_range" {
  name          = "address-name"
  purpose       = "VPC_PEERING"
  address_type  = "INTERNAL"
  prefix_length = 24
  network       = google_compute_network.vertex_network.id
}

resource "google_compute_network" "vertex_network" {
  name = "network-name"
}

# Lets the Vertex AI service agent use the customer-managed encryption key.
resource "google_kms_crypto_key_iam_member" "crypto_key" {
  crypto_key_id = "kms-name"
  role          = "roles/cloudkms.cryptoKeyEncrypterDecrypter"
  member        = "serviceAccount:service-${data.google_project.project.number}@gcp-sa-aiplatform.iam.gserviceaccount.com"
}

data "google_project" "project" {}
```

Vertex AI SDK for Python

The Vertex AI SDK for Python lets you use Python code to access the Vertex AI API and automate data ingestion, model training, and prediction on Vertex AI. Its main aim is to accomplish programmatically most of the tasks that would otherwise be done manually in the Google Cloud console.

The Vertex AI SDK for Python is recommended for experienced Machine Learning or Artificial Intelligence engineers who are well versed in Python and want to automate Vertex AI processes programmatically.

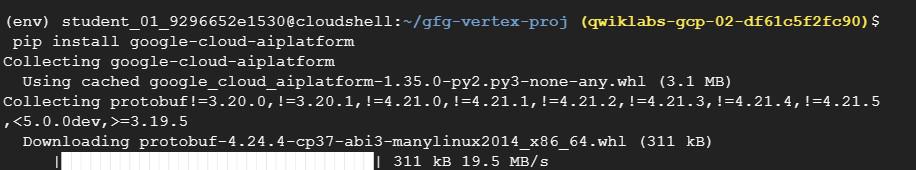

Installing Vertex AI SDK for Python –

Setting up the Vertex AI SDK for Python involves installing the package and then initializing it in your code. First, install the package with pip:

```bash
pip install google-cloud-aiplatform
```

Then, every Python file used to automate Vertex AI processes should contain the imports and configuration below, which provide Vertex AI with details such as the project ID and region –

```python
from typing import Optional

from google.auth import credentials as auth_credentials
from google.cloud import aiplatform


def init_sample(
    project: Optional[str] = None,
    location: Optional[str] = None,
    experiment: Optional[str] = None,
    staging_bucket: Optional[str] = None,
    credentials: Optional[auth_credentials.Credentials] = None,
    encryption_spec_key_name: Optional[str] = None,
    service_account: Optional[str] = None,
):
    # Store these values as defaults for subsequent Vertex AI SDK calls.
    aiplatform.init(
        project=project,
        location=location,
        experiment=experiment,
        staging_bucket=staging_bucket,
        credentials=credentials,
        encryption_spec_key_name=encryption_spec_key_name,
        service_account=service_account,
    )
```

The above is a general template. Users supply only the values their project needs; it is not necessary to provide all of them every time.

Conclusion

Vertex AI is a comprehensive solution for fostering collaboration among teams and using the capabilities of Google Cloud to build scalable, maintainable machine learning applications. Whether through the graphical console, command-line tools, Terraform, or the Python SDK, users have a range of options for harnessing the power of Vertex AI in their projects.
