How to List S3 Buckets with Boto3

Last Updated: 16 Feb, 2024

Amazon S3 and AWS Lambda are two widely used AWS services. Amazon S3 stores and manages data, while AWS Lambda is a serverless compute service that runs code without requiring you to manage servers. In this guide, I will first briefly discuss Amazon S3 and AWS Lambda. Then I will guide you through the steps to list all the S3 buckets in an account using the boto3 module in a Lambda function.

Amazon S3

Amazon S3 is an object storage service provided by AWS. It is commonly used for backups and for storing large amounts of data, as well as images, videos, build artifacts, static websites, and more. You can keep multiple versions of an object by enabling versioning on the S3 bucket. As per AWS, the S3 Standard storage class is designed for 99.999999999% (11 nines) durability and 99.99% availability of objects over a given year. Amazon S3 also offers cross-region replication, which copies objects to a bucket in another region and helps with disaster recovery. You can define S3 lifecycle policies to automatically delete objects or move them to different storage classes, which helps optimize cost and manage the data lifecycle. Overall, Amazon S3 is a highly durable and reliable service for storing and managing data on AWS, suitable for workloads ranging from simple file storage to data analytics.

AWS Lambda

AWS Lambda is a serverless compute service that runs code without requiring you to provision or manage servers. Lambda supports a variety of programming languages for writing functions, such as Python, Node.js, Go, Ruby, and more. It integrates with many AWS services and resources, and the function code determines which actions are performed. Lambda automatically scales up and down with traffic. In short, it runs the code (the Lambda function) in response to events. Because you do not keep a server running and the code executes only when an event triggers it, you pay only for the compute time used, which often lowers cost. Overall, AWS Lambda simplifies the development of serverless applications and provides a flexible, scalable, and cost-effective platform for running code without any server management.

Pre-requisites

Before moving to the next section, go through the GeeksforGeeks articles on creating an IAM role and an S3 bucket on AWS. If you already know how to create these resources, you can follow the steps in the next section to list S3 buckets.

Steps To List S3 Buckets Using boto3

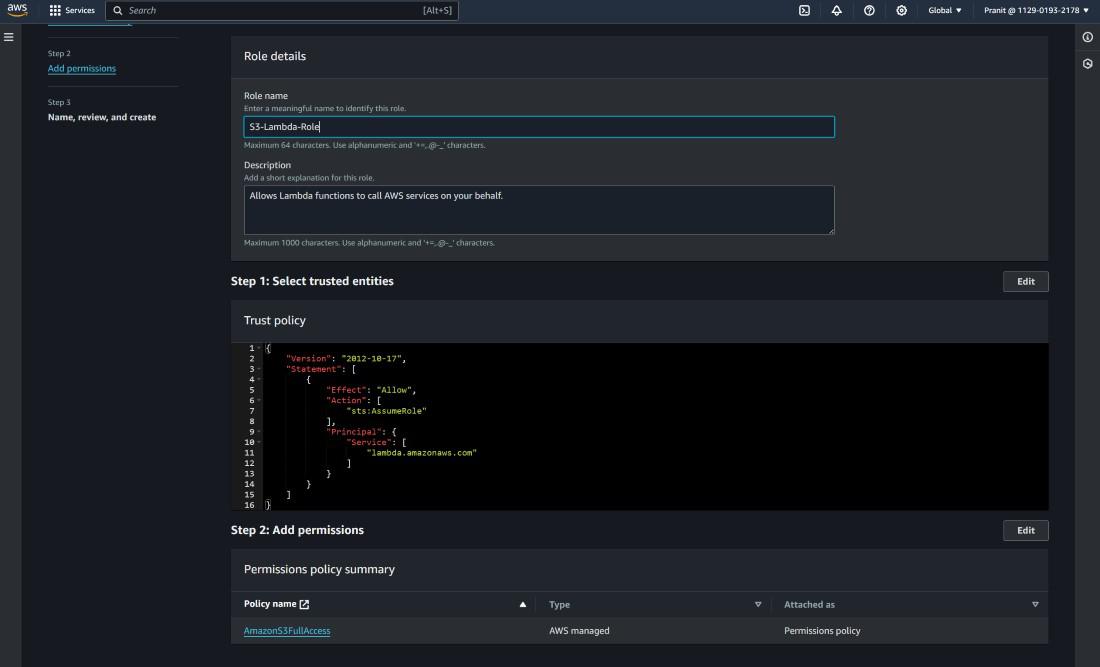

Step 1: First, create an IAM role for the Lambda service with the AmazonS3FullAccess policy attached. (The read-only AmazonS3ReadOnlyAccess policy is also sufficient for listing buckets.)

Step 2: Then go to the Lambda dashboard and select Create function. Create a Lambda function using Python, and attach the role created in Step 1 to it.

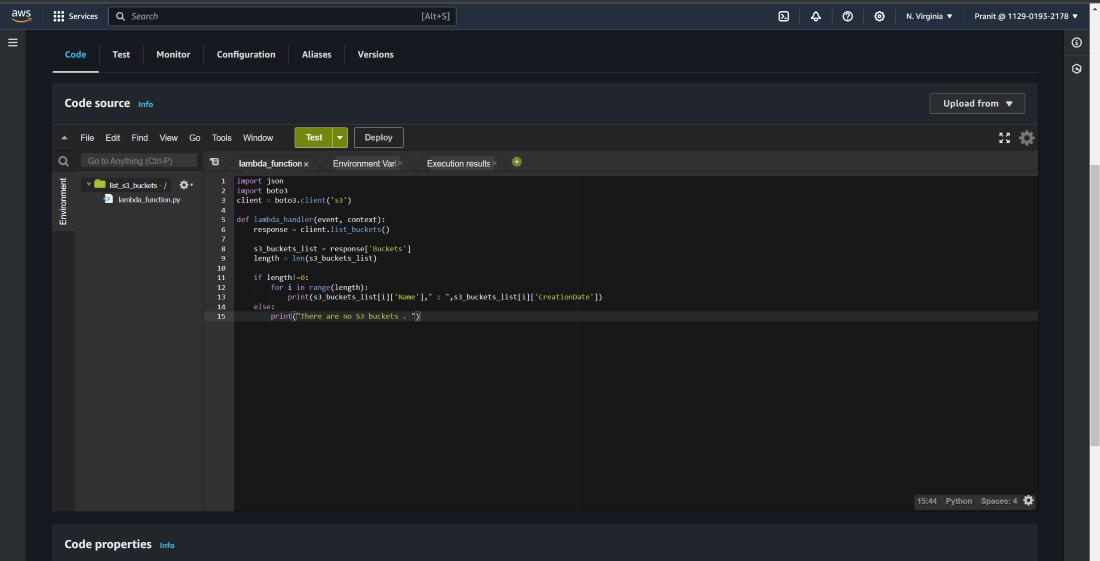

Step 3: Here I have used the boto3 module to get the S3 bucket names and creation dates. After writing this code, select Deploy.

```python
import boto3

# Create the S3 client once, outside the handler, so warm invocations reuse it.
client = boto3.client('s3')


def lambda_handler(event, context):
    # list_buckets returns the buckets owned by the caller.
    response = client.list_buckets()
    s3_buckets_list = response['Buckets']

    if s3_buckets_list:
        # Print each bucket name with its creation date.
        for bucket in s3_buckets_list:
            print(bucket['Name'], " : ", bucket['CreationDate'])
    else:
        print("There are no S3 buckets.")
```

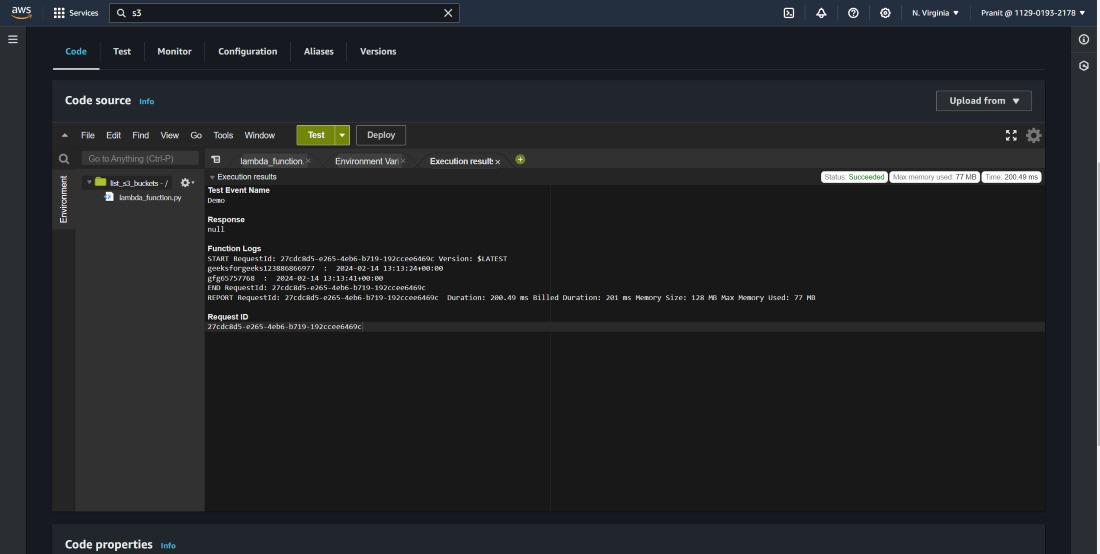

Step 4: Configure a demo test event.

Step 5: Now test the Lambda function. If your account does not have any S3 buckets, you will see the output “There are no S3 buckets.”

Step 6: Create some S3 buckets to test the Lambda function.

Step 7: Now test the Lambda function again. This time it lists all the S3 buckets with their creation times.
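The handler in Step 3 only prints to the log. If the caller needs the result, a variant could return the bucket list instead. The following is a minimal sketch, assuming the same `list_buckets` response shape used above. The helper name `summarize_buckets`, the optional `client` argument, and the `statusCode`/`body` response layout are illustrative choices, not something the steps require. `CreationDate` is a `datetime`, so it is converted to an ISO 8601 string before JSON encoding.

```python
import json


def summarize_buckets(response):
    """Turn a list_buckets response into JSON-friendly dictionaries.

    Hypothetical helper for illustration; not part of boto3.
    """
    return [
        {
            "name": bucket["Name"],
            # CreationDate is a datetime object, which json.dumps cannot encode.
            "created": bucket["CreationDate"].isoformat(),
        }
        for bucket in response.get("Buckets", [])
    ]


def lambda_handler(event, context, client=None):
    # The optional client argument is an assumption added to ease testing;
    # Lambda itself only passes event and context.
    if client is None:
        import boto3  # imported lazily so the helper can be tried without AWS

        client = boto3.client("s3")
    buckets = summarize_buckets(client.list_buckets())
    return {"statusCode": 200, "body": json.dumps(buckets)}
```

Returning the data rather than printing it makes the result visible in the test console's response pane and usable by whatever invokes the function.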

Conclusion

We started this guide by learning what Amazon S3 is and then covered the basics of AWS Lambda. Finally, we followed the steps to list S3 buckets using boto3: we wrote Python code that uses the boto3 module to list all the S3 buckets in an AWS account.

S3 Buckets and Lambda - FAQs

How to handle errors while listing S3 buckets using boto3?

boto3 raises exceptions when an API call fails, for example botocore.exceptions.ClientError when AWS rejects the request. These errors can be handled using ‘try-except’ blocks.

Is it possible to list a different AWS account’s S3 buckets using boto3?

Yes, it is possible. Configure cross-account access using an IAM role in the other account, assume that role (for example through AWS STS), and provide the resulting temporary credentials when creating the boto3 client.
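One way this could look in code is sketched below, assuming the other account has a role that trusts your account. The helper name `assume_role_credentials` and the default session name are hypothetical; `assume_role` is the STS API call, and the returned keys match the credential keyword arguments accepted by `boto3.client`.

```python
def assume_role_credentials(sts_client, role_arn, session_name="list-buckets"):
    """Return keyword arguments for boto3.client() from an assumed role.

    Hypothetical helper for illustration. `sts_client` is an STS client such
    as boto3.client("sts"); `role_arn` is the role in the other account.
    """
    response = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName=session_name,
    )
    creds = response["Credentials"]
    # Temporary credentials: access key, secret key, and session token.
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }
```

With these in hand, a client for the other account could be created as `boto3.client("s3", **assume_role_credentials(boto3.client("sts"), role_arn))`, where `role_arn` is a placeholder for the role you configured.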

Which programming languages are supported by Lambda?

AWS Lambda supports Python, Java, Go, Node.js, .NET, and several other programming languages for writing Lambda functions.

What is the maximum execution time for a Lambda function?

The maximum execution time for a Lambda function is 15 minutes (900 seconds).

What is the basic idea behind integrating any AWS service with a Lambda function?

You create an IAM role for the Lambda service and attach the permissions for the AWS service you want the function to act on.
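For the bucket-listing function in this guide, the only S3 action the code calls is `list_buckets`, which maps to the `s3:ListAllMyBuckets` permission. The sketch below builds a policy document limited to that action; the helper name `build_list_buckets_policy` is hypothetical, and the policy would still need to be attached to the Lambda role yourself, for example through the IAM console.

```python
import json


def build_list_buckets_policy():
    """Return an IAM policy document that only allows listing buckets.

    Hypothetical helper for illustration; not part of boto3.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # list_buckets() maps to the s3:ListAllMyBuckets action.
                "Action": "s3:ListAllMyBuckets",
                "Resource": "*",
            }
        ],
    }


# Print the policy as JSON so it can be pasted into the IAM policy editor.
print(json.dumps(build_list_buckets_policy(), indent=2))
```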
