How Is TinyML Used to Embed Machine Learning in Smaller Systems?

Last Updated: 06 Feb, 2024

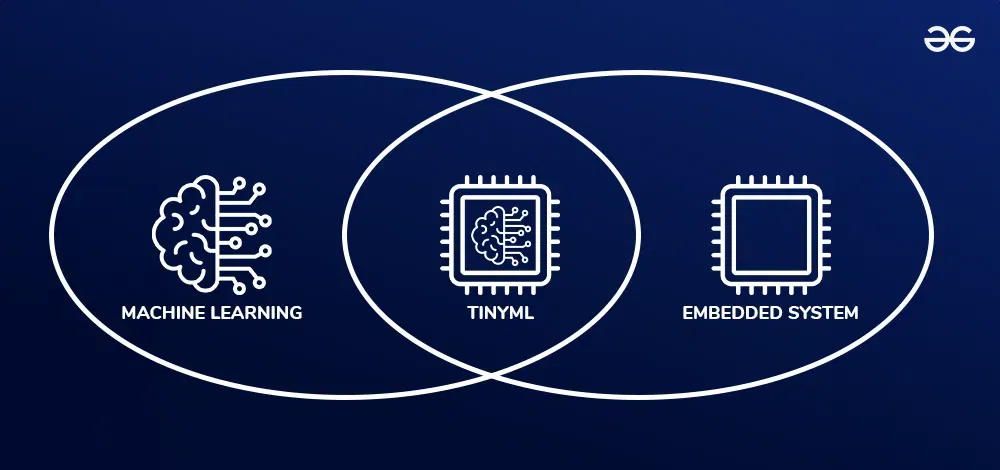

As technology evolves, demand is growing for more compact and efficient solutions. TinyML, which brings machine learning capabilities to devices with limited resources, is one such solution. This article explores how TinyML is used to embed machine learning in smaller systems and how it is changing our understanding of micro-scale computing.

What is TinyML?

Tiny Machine Learning (TinyML) is the field of deploying machine learning models on microcontrollers and other resource-constrained devices. Its aim is to bring machine learning capabilities to microcontrollers, sensors, and other embedded systems. TinyML offers advantages such as low power consumption, reduced latency, and the ability to process data locally without relying on cloud services.

TinyML is used in the Internet of Things, wearable devices, health monitoring, industrial automation, and similar areas.

Need for TinyML

- Edge Computing: With the rise of edge computing, there’s a growing demand for running machine learning models directly on edge devices. TinyML enables this by bringing intelligence to the edge, reducing the reliance on centralized cloud servers for processing.

- Low-Power Devices: Many applications, such as wearables, IoT sensors, and battery-operated devices, have stringent power constraints. TinyML allows machine learning models to operate efficiently on these low-power devices, extending their battery life.

- Real-time Processing: Applications that require real-time processing, like gesture recognition, voice commands, and sensor data analysis, benefit from TinyML. It allows for immediate decision-making without the need for round-trip communication to a cloud server.

- Cost Reduction: The deployment of TinyML models on edge devices can lead to cost savings. It reduces the need for extensive cloud infrastructure, minimizing data transfer costs and eliminating the need for continuous connectivity.

- Ubiquitous Computing: TinyML enables the embedding of machine learning in everyday devices, fostering the vision of ubiquitous computing. Devices such as thermostats, cameras, and household appliances can become smarter and more adaptive with on-device machine learning.

- Edge AI for Industry 4.0: In Industry 4.0, where smart factories and industrial IoT play a crucial role, TinyML enables intelligent processing at the edge. This is vital for applications like predictive maintenance, quality control, and process optimization.

The Need for Embedding Machine Learning on Smaller Systems

As technology advances, the demand for embedding machine learning (ML) capabilities on smaller systems has become increasingly evident. This shift is driven by various factors, each highlighting the importance of bringing ML to the edge. Here are key reasons for the growing need to embed machine learning on smaller systems:

- Real-Time Decision Making: Smaller systems, such as IoT devices and edge computing platforms, often operate in real-time environments where rapid decision-making is crucial. Embedding ML on these systems allows for on-the-spot analysis and immediate responses without relying on centralized processing.

- Reduced Latency: By performing ML computations locally on smaller systems, latency is significantly reduced compared to sending data to a centralized server. This is critical for applications where low latency is imperative, such as autonomous vehicles, robotics, and industrial automation.

- Bandwidth Efficiency: Transmitting large volumes of raw data from edge devices to centralized servers can strain network bandwidth. Embedding ML on smaller systems enables preprocessing and filtering of data locally, sending only relevant information, leading to more efficient bandwidth utilization.

- Privacy and Security: Many applications involve sensitive data that requires privacy and security measures. Localized ML processing ensures that sensitive information stays on the device, reducing the risk of data breaches during data transmission.

- Offline Functionality: Smaller systems may operate in environments with intermittent or no internet connectivity. Embedding ML allows these systems to function autonomously, making decisions even when disconnected from the central network.

- Energy Efficiency: Transmitting data over long distances consumes energy. Local ML processing on smaller systems reduces the need for continuous data transmission, resulting in energy-efficient operations—particularly important for battery-powered devices.

- Scalability: Distributing ML capabilities across smaller systems enables scalable deployment. Instead of relying on a single powerful server, multiple edge devices can collectively contribute to the overall ML workload, enhancing system scalability.

- Customization for Specific Use Cases: Smaller systems are often designed for specific applications and use cases. Embedding ML allows for tailoring models to the unique requirements of these scenarios, optimizing performance and accuracy.

- Adaptability to Edge Conditions: Smaller systems frequently operate in diverse and challenging environments. Embedding ML enables models to adapt to these conditions without relying on constant updates from a central server.

- Cost Efficiency: Localized ML on smaller systems can reduce the need for extensive cloud resources, resulting in cost savings for organizations. It minimizes the dependence on high-performance servers and expensive network infrastructure.
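The bandwidth point in the list above can be made concrete with some rough arithmetic. The sketch below compares streaming raw audio to a server with sending only detection events from the device. All figures (16 kHz, 16-bit mono audio, 100 events per day, 32 bytes per event) are illustrative assumptions, not measurements from any particular product.

```python
SECONDS_PER_DAY = 86_400


def bytes_per_day_raw(sample_rate_hz, bytes_per_sample, channels=1):
    """Bytes sent per day when the raw sensor stream is uploaded continuously."""
    return sample_rate_hz * bytes_per_sample * channels * SECONDS_PER_DAY


def bytes_per_day_events(events_per_day, bytes_per_event):
    """Bytes sent per day when only on-device detections are uploaded."""
    return events_per_day * bytes_per_event


# Assumed figures, chosen only for illustration.
raw = bytes_per_day_raw(sample_rate_hz=16_000, bytes_per_sample=2)
events = bytes_per_day_events(events_per_day=100, bytes_per_event=32)

print(f"raw stream:  {raw:,} bytes/day")
print(f"events only: {events:,} bytes/day")
print(f"reduction:   {raw // events:,}x")
```

Under these assumptions the device transmits several orders of magnitude less data, which is also where much of the energy saving for battery-powered devices would come from.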

How is TinyML used to embed machine learning in smaller systems?

Embedding TinyML models into smaller systems is achieved through a process known as model deployment. This process entails converting the trained machine learning model into a format compatible with the target device’s hardware, so that the device can interpret and execute it. The main steps and considerations are:

Model Optimization

- TinyML requires lightweight models due to hardware limitations.

- Techniques such as quantization, pruning, and model compression are employed to reduce model size while keeping the loss in accuracy small.
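To illustrate two of the techniques named above, here is a minimal sketch of 8-bit affine quantization and magnitude-based pruning applied to a plain list of weights. It is a simplified illustration, not how any specific framework implements these steps; the function names `quantize_int8`, `dequantize`, and `prune_by_magnitude` are hypothetical.

```python
def quantize_int8(weights):
    """Map float weights to int8 using an affine (scale + zero-point) scheme."""
    lo = min(min(weights), 0.0)  # the range must contain 0.0 so that
    hi = max(max(weights), 0.0)  # zero is represented exactly
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 if all weights are 0
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float weights from their int8 codes."""
    return [(v - zero_point) * scale for v in q]


def prune_by_magnitude(weights, fraction):
    """Set the given fraction of smallest-magnitude weights to zero."""
    k = int(len(weights) * fraction)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


weights = [-0.8, -0.1, 0.0, 0.3, 1.2]
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max round-trip error = {max_error:.4f}")
print(prune_by_magnitude([0.5, -0.05, 0.9, 0.01], fraction=0.5))
```

Each weight now needs one byte instead of four, at the cost of a rounding error bounded by the quantization step. Real toolchains typically quantize activations as well and may fine-tune the model after pruning.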

Hardware Compatibility

- Choose microcontrollers and hardware platforms compatible with TinyML frameworks.

- Optimize hardware for specific tasks to ensure efficient execution of machine learning models.

Training for Edge Devices

- Train models with edge-specific datasets to improve performance in real-world, resource-constrained scenarios.

- Utilize transfer learning to leverage pre-trained models and adapt them to specific tasks.

TinyML Frameworks

- Explore popular TinyML frameworks such as TensorFlow Lite for Microcontrollers and Edge Impulse.

- Leverage pre-built models or build custom models based on project requirements.

Integration with Embedded Systems

- Integrate TinyML models seamlessly into embedded systems.

- Develop communication protocols between the microcontroller and other components for effective collaboration.
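One common way to integrate a converted model into firmware is to compile it in as a constant byte array, similar to what `xxd -i` produces. The sketch below generates such a C source fragment from raw model bytes. The helper name `bytes_to_c_array` and the array name `g_model` are assumptions for illustration, and the three input bytes stand in for a real model file.

```python
def bytes_to_c_array(data: bytes, name: str = "g_model", per_line: int = 12) -> str:
    """Render raw bytes as a C array definition plus a length constant."""
    lines = []
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"const unsigned char {name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {name}_len = {len(data)};\n"
    )


# Placeholder bytes; in practice these would be read from the converted model file.
print(bytes_to_c_array(b"\x00\x01\xff", name="g_model"))
```

The generated file can be added to the firmware build so the model lives in flash alongside the application code. Some runtimes also expect the array to be aligned in memory, which this sketch does not handle.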

Challenges of Embedding ML on Small Systems

While embedding machine learning (ML) on small systems offers numerous benefits, it also comes with a set of challenges that must be addressed to ensure successful deployment and optimal performance. Here are some key challenges associated with embedding ML on small systems:

- Limited Resources: Small systems often have constraints in terms of processing power, memory, and storage. Developing ML models that fit within these limitations while maintaining acceptable performance is a significant challenge.

- Energy Efficiency: Energy consumption is a critical concern for small systems, especially those running on batteries. ML algorithms need to be optimized to minimize energy consumption and extend the operational life of the device.

- Model Complexity: Complex ML models may struggle to fit into the limited resources of small systems. Balancing model complexity with the available resources is essential to ensure both accuracy and feasibility.

- Training Data Availability: Small systems may have limited access to diverse and extensive training datasets. Training ML models with constrained data can result in models that lack robustness and generalization.

- Real-time Processing: Achieving real-time processing on small systems can be challenging due to their limited computational capabilities. Ensuring timely execution of ML algorithms while meeting latency requirements is a delicate balance.
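A quick back-of-the-envelope check shows how the resource limits above interact with model size. The sketch estimates weight storage for a model at different precisions and compares it with a flash budget. The parameter count (250,000) and the 512 KiB budget are hypothetical numbers chosen for illustration, and the estimate covers weights only, not activations or runtime overhead.

```python
def model_size_bytes(num_params, bits_per_weight):
    """Storage needed for the weights alone."""
    return num_params * bits_per_weight // 8


def fits_in_flash(num_params, bits_per_weight, flash_budget_bytes):
    """True if the weights fit within the given flash budget."""
    return model_size_bytes(num_params, bits_per_weight) <= flash_budget_bytes


NUM_PARAMS = 250_000        # hypothetical model
FLASH_BUDGET = 512 * 1024   # hypothetical 512 KiB reserved for the model

for label, bits in [("float32", 32), ("int8", 8)]:
    size = model_size_bytes(NUM_PARAMS, bits)
    verdict = "fits" if fits_in_flash(NUM_PARAMS, bits, FLASH_BUDGET) else "does not fit"
    print(f"{label}: {size:,} bytes, {verdict}")
```

With these assumed numbers the float32 model exceeds the budget while the int8 version fits, which is why quantization is often the first optimization applied.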

Applications of TinyML

IoT (Internet of Things)

- Application: Smart home devices, environmental monitoring sensors, asset tracking.

- Benefits: Enables on-device processing for efficient data analysis, reducing the need for continuous cloud connectivity.

Healthcare

- Application: Wearable health monitors, remote patient monitoring, personalized medicine.

- Benefits: Allows for real-time health monitoring and personalized interventions on wearable devices without continuous data transmission.

Industrial IoT

- Application: Predictive maintenance, quality control, process optimization.

- Benefits: Brings intelligence to industrial equipment, enabling proactive maintenance and optimization of manufacturing processes.

Smart Agriculture

- Application: Crop monitoring, soil health assessment, precision farming.

- Benefits: Facilitates data-driven decision-making in agriculture by processing data directly on sensors and edge devices.

Smart Cities

- Application: Traffic management, waste management, environmental monitoring.

- Benefits: Enables efficient resource allocation and real-time decision-making for urban planning and management.

Wearable Devices

- Application: Fitness trackers, smartwatches, augmented reality glasses.

- Benefits: Enhances the capabilities of wearable devices by running ML models locally, providing personalized insights to users.

Consumer Electronics

- Application: Smart cameras, voice-activated devices, smart appliances.

- Benefits: Enables devices to understand and respond to user behavior locally, enhancing user experience and privacy.

Autonomous Vehicles

- Application: Edge processing for object detection, traffic prediction, and navigation.

- Benefits: Supports real-time decision-making in autonomous vehicles, reducing dependence on centralized servers.

Energy Management

- Application: Smart grids, energy consumption prediction, energy-efficient devices.

- Benefits: Optimizes energy consumption by processing data locally and making real-time decisions.

Security and Surveillance

- Application: Intrusion detection, facial recognition, anomaly detection.

- Benefits: Enhances security systems by enabling real-time analysis and decision-making on edge devices, minimizing latency.

Examples of TinyML in Action

Keyword Spotting for Voice Assistants:

- Scenario: A tiny, battery-powered voice-controlled device is designed to recognize a specific wake word such as “Hey, device.”

- Implementation: A TinyML model trained for keyword spotting can run on the microcontroller, activating the device only when the wake word is detected. This minimizes the need for constant internet connectivity and reduces the device’s dependency on cloud services.
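The activation logic around such a model can be sketched as follows. This assumes the keyword-spotting model emits one wake-word probability per audio frame; the class name `WakeWordTrigger`, the window size, the threshold, and the scores are all hypothetical. Averaging over a short window is one simple way to avoid waking the device on a single noisy frame.

```python
from collections import deque


class WakeWordTrigger:
    """Fire only when the average score over the last `window` frames is high."""

    def __init__(self, window=5, threshold=0.8):
        self.scores = deque(maxlen=window)
        self.threshold = threshold

    def update(self, score):
        """Add one per-frame score; return True if the device should wake."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        return window_full and sum(self.scores) / len(self.scores) >= self.threshold


trigger = WakeWordTrigger(window=3, threshold=0.8)
frame_scores = [0.1, 0.95, 0.2, 0.9, 0.9, 0.9]  # made-up model outputs
decisions = [trigger.update(s) for s in frame_scores]
print(decisions)
```

The single high score in the second frame does not wake the device; only the sustained run of high scores at the end does.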

Anomaly Detection in Industrial IoT:

- Scenario: In an industrial setting, monitoring equipment for anomalies is crucial for preventing malfunctions.

- Implementation: TinyML models can be deployed on edge devices connected to sensors. These models analyze sensor data in real-time, flagging anomalies and triggering immediate responses, all while operating within the constraints of the industrial IoT system.
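As a minimal sketch of on-device anomaly flagging, the example below keeps a running mean and variance with Welford's algorithm and flags readings that deviate strongly from the baseline. This is a simple statistical stand-in for a trained TinyML model rather than the method used in any specific product; the class name, thresholds, and sensor values are assumptions.

```python
import math


class StreamingAnomalyDetector:
    """Flag readings whose z-score against a running baseline is too large."""

    def __init__(self, z_threshold=3.0, warmup=10):
        self.z_threshold = z_threshold
        self.warmup = warmup  # readings to observe before flagging anything
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0         # running sum of squared deviations from the mean

    def update(self, x):
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.z_threshold:
                is_anomaly = True
        if not is_anomaly:
            # Welford update; anomalies are excluded so they do not shift the baseline.
            self.n += 1
            delta = x - self.mean
            self.mean += delta / self.n
            self.m2 += delta * (x - self.mean)
        return is_anomaly


# Made-up temperature readings with one spike.
readings = [20.0, 20.1, 19.9, 20.2, 19.8, 20.0, 20.1, 19.9, 20.0, 20.1, 35.0, 20.0]
detector = StreamingAnomalyDetector(z_threshold=3.0, warmup=10)
flags = [detector.update(r) for r in readings]
print(flags)
```

The detector stores only three numbers regardless of how many readings it has seen, which suits the memory limits of small devices.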

Edge-based Image Classification in Smart Cameras:

- Scenario: Smart cameras are deployed for surveillance or wildlife monitoring, where quick image classification is crucial.

- Implementation: TinyML models are optimized for edge devices to classify images locally. This allows smart cameras to identify objects or animals in real-time without relying on continuous internet connectivity, making them suitable for remote and resource-limited locations.

Conclusion

In the realm of embedded systems, TinyML emerges as a transformative force, enabling the infusion of machine learning into smaller devices. Its lightweight models and local processing capabilities not only optimize efficiency but also open doors to a myriad of applications, from wearables to industrial IoT. The future unfolds with TinyML as a catalyst, propelling us towards a world where intelligence seamlessly resides in the tiniest corners of our technological landscape.

TinyML – Frequently Asked Questions (FAQs)

Can TinyML be used in applications other than IoT devices?

Yes. As the applications above show, TinyML is used well beyond typical IoT sensors, in areas such as healthcare wearables, industrial equipment, smart agriculture, and consumer electronics.

How does TinyML impact power consumption in embedded systems?

TinyML is designed to be power-efficient, allowing models to run on low-power microcontrollers, significantly reducing energy consumption compared to traditional cloud-based solutions.
