Web crawling is the process of indexing data on web pages using a program or automated script, known as crawlers or spiders, or spider bots. This crawler gathers a lot of information which is often helpful in the Web Application Penetration Testing and Bug Bounty. GoSpider is also the Fastest Web Crawler which is designed in the Golang language. GoSpider tool is open-source and free to use.GoSpider also supports multiple target domain scan simultaneously and allows to save the results in the local storage.

Note: As GoSpider is a Golang language-based tool, so you need to have a Golang environment on your system. So check this link to Install Golang in your system. – Installation of Go Lang in Linux

Features of GoSpider Tool

- GoSpider tool is the fastest web crawling tool.

- GoSpider tool supports parsing of robots.txt files.

- GoSpider tool has the feature to generate and verify links from JavaScript files.

- GoSpider tool supports Burp Suite Input for Scan.

- GoSpider tool helps multiple target domain scan simultaneously.

- GoSpider tool can detect subdomains from the response source.

Installation of GoSpider Tool on Kali Linux OS

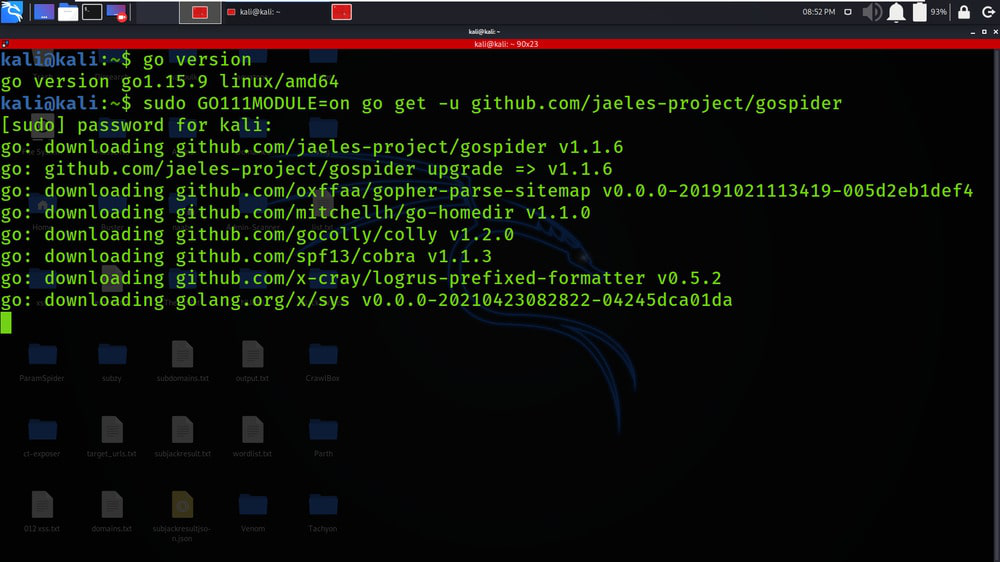

Step 1: If you have downloaded Golang in your system, verify the installation by checking the version of Golang, use the following command.

go version

Step 2: Get the GoSpider repository or clone the GoSpider tool from Github, use the following command.

sudo GO111MODULE=on go get -u github.com/jaeles-project/gospider

Step 3: Copy the path of the tool in /usr/bin directory for accessing the tool from anywhere, use the following command

sudo cp /go/bin/gospider /usr/local/go/bin/

Step 4: Check the version of the GoSpider tool using the following command.

gospider --version

Step 5: Check the help menu page to get a better understanding of the GoSpider tool, use the following command.

gospider -h

Working with GoSpider Tool on Kali Linux

Example 1: Run with single site

gospider -s “https://geeksforgeeks.org” -o geeksforgeeks_output.txt -c 10 -d 1

1. In this example, We will be crawling links from a single target domain (geeksforgeeks.org). -s tag is used to specify the target domain.

2. In the below Screenshot, the results of the crawling process are stored in the text file which can be used for further scanning,

Example 2: Run with site list

gospider -s list.txt -o output -c 10 -d 1

1. In this example, We will be performing crawling on multiple target domains. In the below Screenshot, a text file is displayed which consists of multiple target domains.

2. In the below Screenshot, You can see that the results of the crawling process on the multiple targets are displayed on the terminal.

3. In the below Screenshot, You can see that a separate text file is created for domains specified in the 1st Screenshot.

4. In the below Screenshot, You can see that the results of Crawling are saved in the files associated with its domain. (geeksforgeeks.org results are saved in geeksforgeeks.org_results, txt).

Example 3: Run with 10 sites at the same time with 5 bots each site

gospider -S targets.txt -o output1 -c 10 -d 1 -t 20

1. In this example, We will be crawling the 10 different websites or target domains simultaneously. In the below Screenshot, the target domains are saved in the targets.txt file.

2. In the below Screenshot, We have provided the command and the crawling process is started

3. In the below Screenshot, You can see that GoSpider is crawling the various target domains simultaneously. In the below Screenshot, tesla.com, geeksforgeeks.org, yahoo.com, etc domains are crawled simultaneously.

4. Although the crawling is done simultaneously but the results of each target domain are saved in separate text files associated with its name. In the below Screenshot, 10 Target domains result are saved in 10 different text files along with their names.

5. In the below Screenshot, We have opened the google.com_results.txt file which contains all the crawled data.

Example 4: Also get URLs from 3rd party (Archive.org, CommonCrawl.org, VirusTotal.com, AlienVault.com)

gospider -s "https://geeksforgeeks.org/" -o output -c 10 -d 1 --other-source

1. In this Example, We are trying to get links from external 3rd Parties.

2. In the below Screenshot, Results are saved in the text file associated with the target domain name.

Example 5: Also get URLs from 3rd party (Archive.org, CommonCrawl.org, VirusTotal.com, AlienVault.com) and include subdomains

gospider -s “https://google.com/” -o output2 -c 10 -d 1 –other-source –include-subs

1. In this example, We will be crawling the data from the subdomains which are associated with the provided main domain. In the below Screenshot, we are crawling for google.com as it has huge scope.

2. In the below Screenshot, You can see that subdomains are also included in the crawling process.

Example 6: Use custom header/cookies

gospider -s “https://geeksforgeeks.org/” -o output3 -c 10 -d 1 –other-source -H “Accept: */*” -H “Test: test” –cookie “testA=a; testB=b”

1. In this Example, We are providing additional information in the form of cookies. We have used –cookie tag to provide the values of the cookie.

Example 7: Blacklist URL/file extension.

gospider -s “https://geeksforgeeks.org” -o output -c 10 -d 1 –blacklist “.(woff|pdf)”

1. In this Example, We are filtering or adding some extensions and URLs to the blacklist. All this blacklisted data will be excluded or ignored while retrieving the results.

Share your thoughts in the comments

Please Login to comment...